GPT-5 Pro is OpenAI’s top-tier GPT-5 variant designed for extended reasoning, code-quality improvements, and higher-stakes workflows. It sits at the apex of the GPT-5 family (which also includes standard and “Thinking” modes) and is positioned for developers and organizations that need the strongest single-query reasoning and task performance available in the GPT-5 lineup.

Key features (high-level)

- Extended reasoning: designed to produce longer chains of thought and more robust step-by-step solutions for complex tasks.

- Improved instruction following: better at obeying user constraints and producing consistent outputs across prompts.

- Tool & agent integration: built to call tools reliably (browser, code execution, file access, etc.) and to integrate with function calling, Python tools, and agent frameworks for multi-step workflows.

- Multimodal inputs: supports richer input types used across the GPT-5 family (text + images and improved cross-modal reasoning).

- Enterprise readiness: targeted at production customers who need lower error rates and predictable tool-calling behavior.
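To make the tool-calling feature concrete, here is a minimal sketch of one round of function calling on the client side. The tool name `get_weather`, its schema, and the stub result are hypothetical. The item shapes (`function_call`, `function_call_output`, `call_id`) follow the OpenAI Responses API convention and are assumed, not confirmed, to carry over to other providers.

```python
import json

# Hypothetical tool definition: name, description, and schema are illustrative only.
WEATHER_TOOL = {
    "type": "function",
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city: str) -> dict:
    # Stub implementation; a real tool would query a weather service.
    return {"city": city, "temperature_c": 21}


# Maps tool names the model may request to local Python callables.
LOCAL_TOOLS = {"get_weather": get_weather}


def run_tool_call(call: dict) -> dict:
    """Execute one function-call item and build the item that returns its result."""
    handler = LOCAL_TOOLS[call["name"]]
    arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
    result = handler(**arguments)
    return {
        "type": "function_call_output",
        "call_id": call["call_id"],
        "output": json.dumps(result),
    }


# Example of a function-call item as the model might emit it (assumed shape).
example_call = {
    "type": "function_call",
    "name": "get_weather",
    "arguments": '{"city": "Paris"}',
    "call_id": "call_123",
}
print(run_tool_call(example_call))
```

In a real workflow, `WEATHER_TOOL` would be sent in the request's `tools` list and the returned item appended to the next request's input so the model can use the result.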

Technical details (architecture & training)

- Chain-of-thought training and RL alignment: GPT-5’s training regimen places explicit emphasis on chain-of-thought style reasoning and reinforcement-learning-from-human-feedback (RLHF/RL) to improve stepwise problem solving and alignment.

- Inference strategies: The Pro tier appears to leverage more sophisticated inference-time processes (e.g., longer internal deliberation, ensemble or sampling strategies) to improve answer quality for high-value queries.

- Efficiency tradeoffs: available descriptions point to a mixture-of-experts-style approach and inference-mode switching (lighter compute for trivial queries, heavier compute for “thinking” queries).

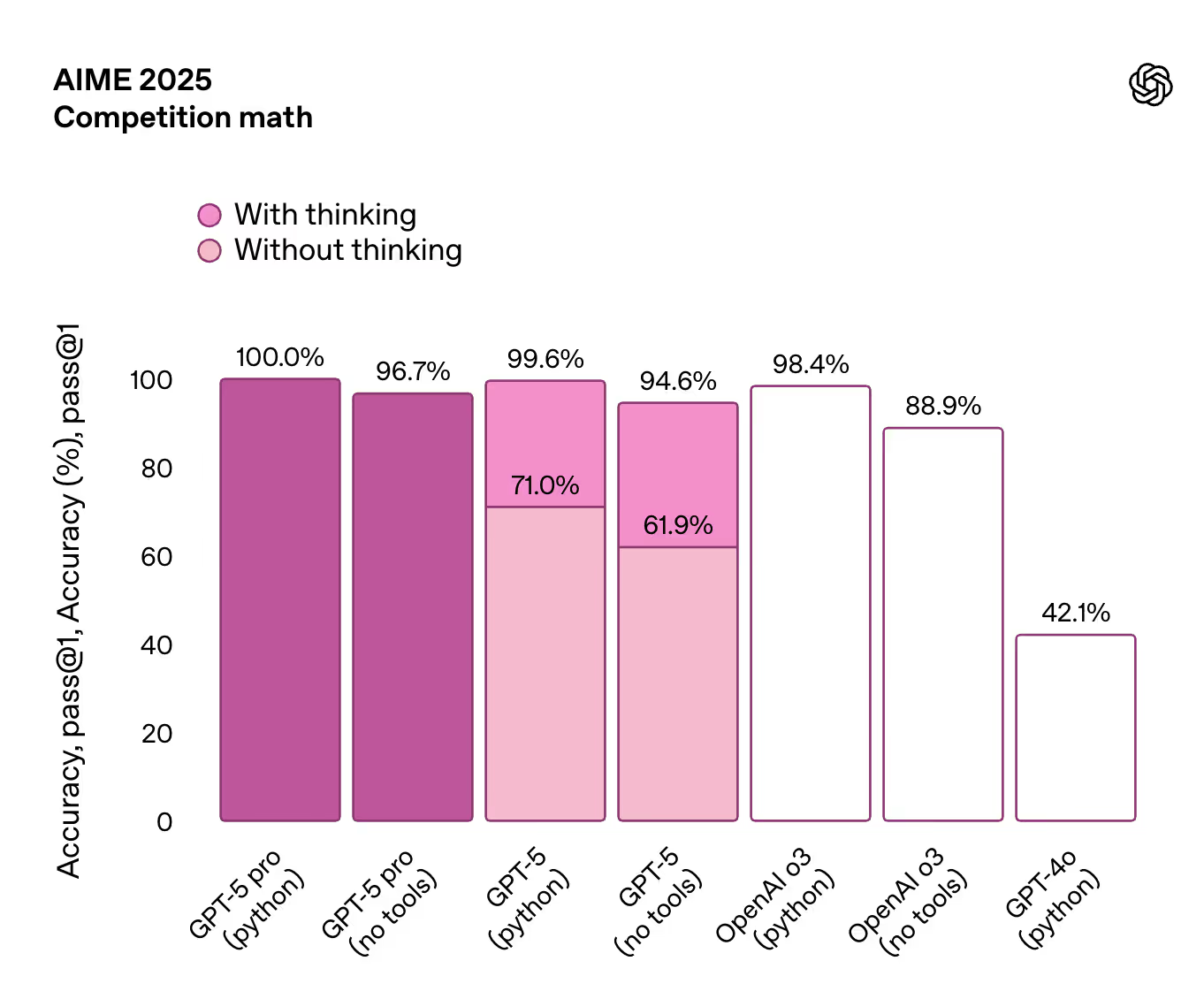

Benchmark performance

To gauge reasoning capability, a common reference point is the GPQA Diamond benchmark:

GPT-5 Pro (with Python tools) scores highest, at 89.4% on PhD-level science questions, slightly ahead of its no-tools variant.

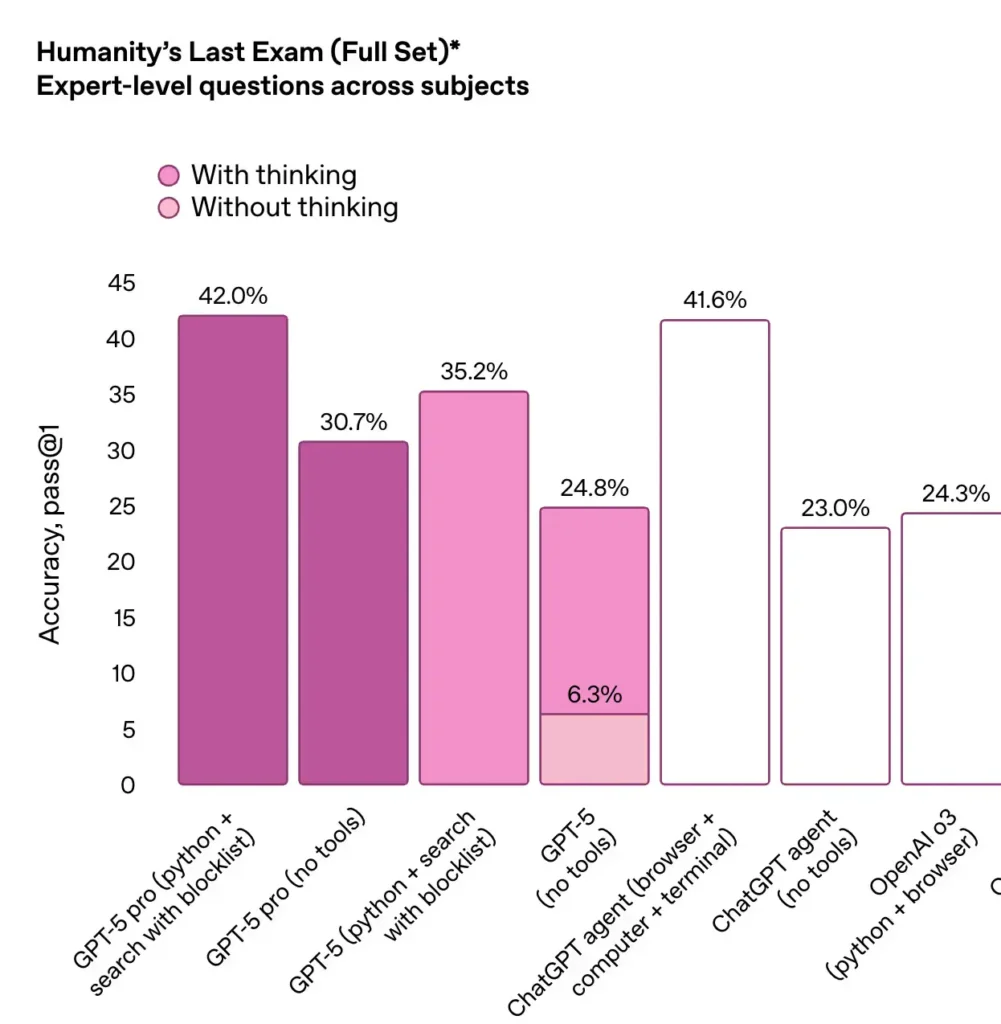

Humanity’s Last Exam:

Independent benchmark reports show GPT-5 Pro (with Python/tools) achieving top marks on certain high-difficulty science and reasoning suites (example: ~89.4% on a PhD-level science question set in one analysis), outperforming GPT-4o and previous variants on advanced reasoning tests. These results vary by benchmark and by whether tool use is allowed.

Factuality & safety: OpenAI states that GPT-5’s responses show materially lower factual error rates; its public material reports that they are roughly 45% less likely to contain factual errors than GPT-4o’s.

Limitations & known failure modes

- Benchmark variance & task sensitivity: Pro leads on many engineering and reasoning benchmarks, but scores can vary widely by configuration, which has prompted concern about over-tuning to benchmarks.

- Variable conversational fluency: some reviewers reported brittleness in multi-turn chat or lower conversational continuity compared with other chat-centric variants.

- Higher cost & latency: Pro’s parallel test-time compute increases per-call cost and may add latency compared with lighter GPT-5 profiles.

High-value use cases

Keywords: complex reasoning, code generation, research assistance, high-value automation, expert Q&A.

GPT-5 Pro is best suited to applications that demand strong single-turn reasoning and high-quality code output, for example:

- Software engineering: bug fixing, multi-file code edits, and complex refactors.

- Research & analytics: rapid synthesis of technical material and hypothesis generation where precision matters.

- Enterprise automation: critical decision support (legal/legal-adjacent summarization, technical due diligence) where improved reasoning reduces downstream manual work.

- High-value content generation: structured reports, polished technical documentation, and multi-stage pipelines that benefit from Pro’s higher fidelity.

For lower-cost or conversational tasks, standard GPT-5 or Thinking modes may be preferable.
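The guidance above can be sketched as a small routing helper. This is one possible reading of it, not an official selection rule: the `Task` fields are hypothetical, and `"gpt-5"` as the identifier for the standard tier is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Task:
    # Hypothetical task descriptors, chosen only for illustration.
    needs_deep_reasoning: bool
    multi_turn_chat: bool
    latency_sensitive: bool


def choose_model(task: Task) -> str:
    """Pick Pro only for hard, single-turn work where cost and latency are acceptable."""
    if (
        task.needs_deep_reasoning
        and not task.multi_turn_chat
        and not task.latency_sensitive
    ):
        return "gpt-5-pro"
    return "gpt-5"  # assumed identifier for the standard tier


refactor = Task(needs_deep_reasoning=True, multi_turn_chat=False, latency_sensitive=False)
support_chat = Task(needs_deep_reasoning=False, multi_turn_chat=True, latency_sensitive=True)
print(choose_model(refactor), choose_model(support_chat))
```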

Comparing GPT-5 Pro to other models

- GPT-5 Pro vs GPT-5 (standard / Thinking): Pro prioritizes maximum reasoning quality and typically outperforms standard/Thinking on select coding and reasoning benchmarks at the expense of compute/cost.

- GPT-5 Pro vs o3 / 4o: On engineering benchmarks like SWE-bench Verified and select coding suites, GPT-5 (Pro/High) is reported to exceed o3 and GPT-4o in many cases; however, gains are task-dependent and sometimes incremental, not uniformly transformational.

- GPT-5 Pro vs agent/tool setups: Some agentized systems that orchestrate multiple smaller models can match or beat larger single-model responses for specific multi-step workflows, but GPT-5 Pro’s integrated compute for parallel reasoning often simplifies single-call ergonomics and reduces orchestration overhead.

How to call GPT-5 Pro API from CometAPI

Model version: gpt-5-pro / gpt-5-pro-2025-10-06

gpt-5-pro API pricing in CometAPI, 20% off the official price:

| Token type | Price |
|---|---|
| Input Tokens | $12.00 |
| Output Tokens | $96.00 |
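For budgeting, a rough per-request cost estimate can be derived from these two prices. The table does not state the billing unit; the sketch below assumes the prices are per 1 million tokens, so adjust `TOKENS_PER_PRICE_UNIT` if the actual unit differs. The token counts in the example are made up.

```python
INPUT_PRICE_USD = 12.00   # from the pricing table above
OUTPUT_PRICE_USD = 96.00  # from the pricing table above
TOKENS_PER_PRICE_UNIT = 1_000_000  # assumption: prices are quoted per 1M tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost of one request in US dollars."""
    total = input_tokens * INPUT_PRICE_USD + output_tokens * OUTPUT_PRICE_USD
    return total / TOKENS_PER_PRICE_UNIT


# Example: a 2,000-token prompt that produces a 5,000-token answer.
print(f"${estimate_cost_usd(2_000, 5_000):.3f}")
```

Because output tokens cost eight times as much as input tokens here, long generated answers dominate the bill.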

Required Steps

- Log in to cometapi.com. If you are not yet a user, register first.

- Get an API key to use as the access credential: click “Add Token” under API token in the personal center, obtain the token key (sk-xxxxx), and submit.

- Get the URL of this site: https://api.cometapi.com/

Use Method

- Select the “gpt-5-pro / gpt-5-pro-2025-10-06” endpoint to send the API request and set the request body. The request method and request body are described in the API doc on our website, which also provides an Apifox test for your convenience.

- Replace <YOUR_API_KEY> with your actual CometAPI key from your account.

- Insert your question or request into the content field; this is what the model will respond to.

- Process the API response to get the generated answer.
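The steps above can be sketched in Python using only the standard library. This is a minimal sketch rather than an official client: the helper name `build_request` is hypothetical, and the `input` field name follows the OpenAI Responses API convention, which is assumed here to apply to the CometAPI endpoint as well. The network call is left commented out so the snippet runs without credentials.

```python
import json
import urllib.request

API_URL = "https://api.cometapi.com/v1/responses"


def build_request(api_key: str, question: str, model: str = "gpt-5-pro") -> urllib.request.Request:
    """Assemble the POST request: endpoint, auth header, and JSON body."""
    body = {
        "model": model,
        # Assumed Responses-style body: a list of role/content messages under "input".
        "input": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("<YOUR_API_KEY>", "Explain the tradeoffs of test-time compute.")
print(request.get_method(), request.full_url)

# To actually send the request, replace the placeholder key and uncomment:
# with urllib.request.urlopen(request, timeout=600) as response:
#     print(json.load(response))
```

A generous timeout is used in the commented call because the article notes that Pro may add latency compared with lighter profiles.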

CometAPI provides a fully compatible REST API for seamless migration. Key details:

- Base URL: https://api.cometapi.com/v1/responses

- Model names: gpt-5-pro / gpt-5-pro-2025-10-06

- Authentication: Bearer YOUR_CometAPI_API_KEY header

- Content-Type: application/json

    curl --location --request POST 'https://api.cometapi.com/v1/responses' \
      --header 'Authorization: Bearer <YOUR_API_KEY>' \
      --header 'Content-Type: application/json' \
      --header 'Accept: */*' \
      --header 'Host: api.cometapi.com' \
      --header 'Connection: keep-alive' \
      --data-raw '{
        "model": "gpt-5-pro",
        "stream": true,
        "input": [
          {
            "role": "user",
            "content": "Generate a cute kitten sitting on a cloud, cartoon style"
          }
        ]
      }'
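Because the request sets "stream": true, the answer arrives as a sequence of server-sent events rather than one JSON document. The sketch below shows one way to reassemble the text from such a stream. The event type `response.output_text.delta` and its `delta` field follow the OpenAI Responses streaming format and are an assumption for this endpoint; the sample lines are invented for illustration.

```python
import json
from typing import Iterable


def collect_streamed_text(lines: Iterable[str]) -> str:
    """Concatenate text deltas from server-sent-event lines."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip "event:" lines, comments, and blank separators
        payload = line[len("data:"):].strip()
        if not payload:
            continue
        if payload == "[DONE]":  # some compatible servers send this sentinel
            break
        event = json.loads(payload)
        if event.get("type") == "response.output_text.delta":
            parts.append(event.get("delta", ""))
    return "".join(parts)


# Invented sample stream in the assumed event format.
sample_stream = [
    "event: response.output_text.delta",
    'data: {"type": "response.output_text.delta", "delta": "Hello"}',
    "",
    'data: {"type": "response.output_text.delta", "delta": ", world"}',
    "",
    'data: {"type": "response.completed"}',
]
print(collect_streamed_text(sample_stream))
```

When reading from a live HTTP response, the same function can be fed decoded lines as they arrive, for example by iterating over the response object and decoding each line as UTF-8.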