OpenAI’s GPT-5.1 Instant is the low-latency variant of the newly released GPT-5.1 family (announced November 12, 2025). It’s designed to deliver the “most-used” ChatGPT experience with faster turn-taking, warmer conversational tone defaults, improved instruction following, and a built-in adaptive-reasoning capability that decides when to reply immediately and when to spend extra compute to “think” through harder queries.

Basic information & features

- Warmer, more conversational default tone and expanded tone/personalization presets to match user preferences (examples: Professional, Friendly, Candid, Quirky, Efficient, Nerdy, Cynical).

- Adaptive reasoning: the model decides when to take extra reasoning steps before answering; Instant aims to be fast on most everyday prompts while still using extra effort when appropriate.

- Improved instruction-following (fewer misunderstandings on multi-step prompts) and generally reduced jargon for better user comprehension (especially in the Thinking variant).

- Designed for real-time UX: streaming responses and low token round-trip latency, useful for voice assistants, live transcription, and highly interactive conversational apps.

Technical details (developer-facing)

- API model identifiers: OpenAI will expose Instant in the API under the chat-style identifier `gpt-5.1-chat-latest` (Instant) and `gpt-5.1` for Thinking (per OpenAI’s release notes). Use the Responses API endpoint for best efficiency.

- Responses API & parameters: The GPT-5 family (including 5.1) is best used via the newer Responses API. Typical options you’ll pass include the model name, input/messages, and optional control parameters such as `verbosity` and `reasoning` (effort), which tune how much internal reasoning the model attempts before responding (assuming the platform follows the same parameter conventions introduced with GPT-5). For highly interactive apps, enable streaming replies.

- Adaptive reasoning behaviour: Instant is tuned to favor quick replies but has light adaptive reasoning: it will allocate slightly more compute on tougher prompts (math, coding, multi-step reasoning) to reduce errors while keeping average latency low. GPT-5.1 Thinking will spend more compute on harder problems and less on trivial ones.
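As a minimal sketch of the parameters described above, the following Python builds a Responses API request body without sending it. The field names `reasoning.effort` and `text.verbosity` follow the conventions introduced with GPT-5 and are assumed, not confirmed, to carry over to GPT-5.1; the helper name `build_responses_payload` is hypothetical.

```python
import json

# Model identifiers as given in the text above.
INSTANT_MODEL = "gpt-5.1-chat-latest"
THINKING_MODEL = "gpt-5.1"


def build_responses_payload(prompt, model=INSTANT_MODEL, effort=None,
                            verbosity=None, stream=False):
    """Build a JSON-serializable body for a Responses API request.

    Assumption: `reasoning.effort` and `text.verbosity` are accepted for
    GPT-5.1 as they were for GPT-5. Optional fields are omitted when unset
    so that the platform defaults apply.
    """
    payload = {"model": model, "input": prompt}
    if effort is not None:
        payload["reasoning"] = {"effort": effort}
    if verbosity is not None:
        payload["text"] = {"verbosity": verbosity}
    if stream:
        payload["stream"] = True
    return payload


if __name__ == "__main__":
    body = build_responses_payload(
        "Summarize this ticket in two sentences.",
        verbosity="low",
        stream=True,
    )
    print(json.dumps(body, indent=2))
```

Leaving `effort` and `verbosity` unset keeps the request valid even if a given model rejects one of these controls, which is worth checking against the current API reference before relying on them.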

Benchmark & safety performance

GPT-5.1 Instant is tuned to keep responses fast while improving on math and coding evals; OpenAI specifically noted improvements on AIME 2025 and Codeforces.

OpenAI published a GPT-5.1 System Card addendum with production benchmark metrics and targeted safety evaluations. Key figures (Production Benchmarks, higher = better, not_unsafe metric):

- Illicit / non-violent (not_unsafe) — gpt-5.1-instant: 0.853.

- Personal data — gpt-5.1-instant: 1.000 (perfect on this benchmark).

- Harassment — gpt-5.1-instant: 0.836.

- Mental health (new eval) — gpt-5.1-instant: 0.883.

- StrongReject (jailbreak robustness, not_unsafe) — gpt-5.1-instant: 0.976 (shows strong robustness to adversarial jailbreaks compared with older instant checkpoints).

Typical and recommended use cases for GPT-5.1 Instant

- Chatbots & conversational UIs — customer support chat, sales assistants, and product guides where low latency preserves conversation flow.

- Voice assistants / streaming replies — streaming partial outputs to a UI or TTS engine for sub-second interactions.

- Summarization, rephrasing, message drafting — quick transformations that benefit from a warmer, user-friendly tone.

- Light coding help and inline debugging — for quick code snippets and suggestions; use Thinking for deeper bug hunts. (Test on your codebase.)

- Agent front-ends and retrieval-augmented workflows — where you want fast responses combined with occasional deeper reasoning/tool calls. Use the adaptive-reasoning behavior to balance cost vs. depth.
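One way to act on the split suggested above is a small router that sends everyday requests to Instant and escalates deeper work to Thinking. The task labels and the escalation set below are illustrative assumptions, not an OpenAI recommendation; only the two model identifiers come from this article.

```python
INSTANT_MODEL = "gpt-5.1-chat-latest"  # low-latency variant
THINKING_MODEL = "gpt-5.1"             # deeper-reasoning variant

# Hypothetical task labels that an application might assign upstream.
DEEP_TASKS = {"deep_debugging", "multi_step_analysis"}


def pick_model(task):
    """Return the model identifier to use for a given task label.

    Tasks listed in DEEP_TASKS go to Thinking; everything else
    (chat, summarization, drafting, quick code snippets) stays on Instant.
    """
    return THINKING_MODEL if task in DEEP_TASKS else INSTANT_MODEL


if __name__ == "__main__":
    for label in ("support_chat", "summarize", "deep_debugging"):
        print(label, "->", pick_model(label))
```

Because Instant already applies light adaptive reasoning, a router like this is only worth adding where measurements on your own workload show a quality gap.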

Comparison with other models

- GPT-5.1 vs GPT-5: GPT-5.1 is a tuned upgrade — warmer default tone, improved instruction following, and adaptive reasoning. OpenAI positions 5.1 as strictly better in the areas they targeted, but retains GPT-5 in a legacy menu for transition/compatibility.

- GPT-5.1 vs GPT-4.1 / GPT-4.5 / GPT-4o: The GPT-5 family still targets higher reasoning and coding performance than the GPT-4.x series; GPT-4.1 remains relevant for very long contexts or cost-sensitive deployments. Reporters emphasize that GPT-5/5.1 lead on hard math/coding benchmarks, but exact per-task advantages depend on the benchmark.

- GPT-5.1 vs Claude / Gemini / other rivals: Early commentary frames GPT-5.1 as a response to user feedback (personality + capability). Competitors (Anthropic’s Claude Sonnet series, Google’s Gemini 3 Pro, Baidu’s ERNIE variants) emphasize different tradeoffs (safety-first, multimodality, massive contexts). For technical customers, evaluate across cost, latency, and safety behavior on your own workloads (prompts + tool calls + domain data).

How to call GPT-5.1 Instant API from CometAPI

GPT-5.1 Instant API pricing in CometAPI: 20% off the official price.

Required Steps

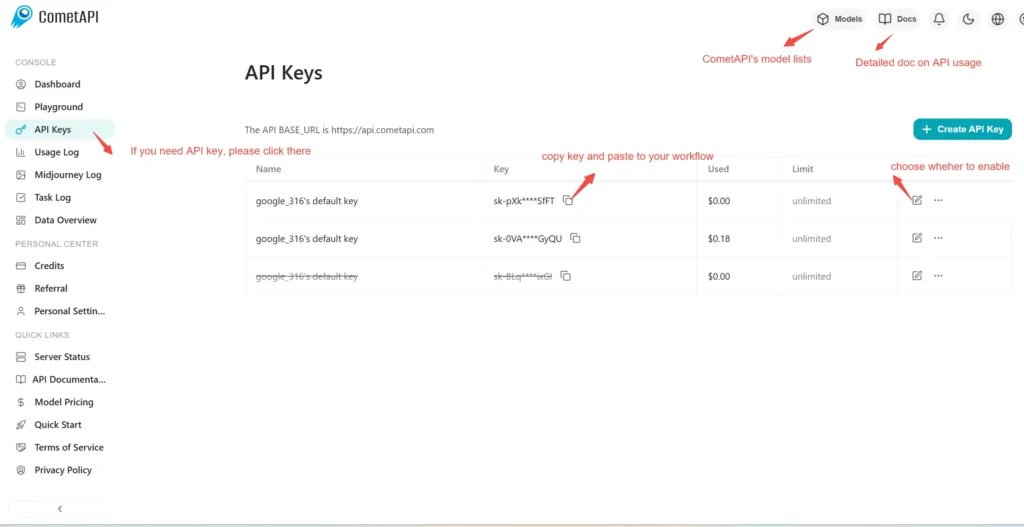

- Log in to cometapi.com. If you do not have an account yet, register first.

- Sign in to your CometAPI console.

- Get the API key used to access the interface: in the personal center, click “Add Token” under API token, copy the token key (sk-xxxxx), and submit.

Use Method

- Select the “`gpt-5.1-chat-latest`” endpoint to send the API request and set the request body. The request method and request body are described in our website API doc. Our website also provides an Apifox test for your convenience.

- Replace `<YOUR_API_KEY>` with your actual CometAPI key from your account.

- Insert your question or request into the content field; this is what the model will respond to.

- Process the API response to get the generated answer.
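The steps above can be sketched in Python using only the standard library. The URL and model name are the ones listed in this article; the request and response shapes are assumed to match the OpenAI chat-completions format, and the helper names (`build_chat_request`, `extract_answer`, `ask`) are hypothetical. Check the CometAPI doc for the authoritative request body.

```python
import json
import urllib.request

# Endpoint and model name as listed in this article.
COMET_CHAT_URL = "https://api.cometapi.com/v1/chat/completions"
MODEL = "gpt-5.1-chat-latest"


def build_chat_request(api_key, question):
    """Prepare (but do not send) a POST request for the chat endpoint."""
    body = {
        "model": MODEL,
        # The user's question goes into the `content` field.
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        COMET_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_answer(response_json):
    """Pull the generated text out of a chat-completions style response.

    Assumption: the response has the OpenAI-compatible shape
    {"choices": [{"message": {"content": ...}}]}.
    """
    return response_json["choices"][0]["message"]["content"]


def ask(api_key, question, timeout=30):
    """Send the request and return the answer text (performs network I/O)."""
    request = build_chat_request(api_key, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_answer(json.load(response))
```

Calling `ask("sk-xxxxx", "Hello")` with a real key would perform the full round trip; keeping request building and response parsing in separate functions lets each be checked without network access.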

CometAPI provides a fully compatible REST API for seamless migration. Key details for Chat:

- Base URL: https://api.cometapi.com/v1/chat/completions

- Model Names: `gpt-5.1-chat-latest`

- Authentication: `Bearer YOUR_CometAPI_API_KEY` header

- Content-Type: `application/json`.
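For interactive apps that enable streaming on the chat endpoint, the reply typically arrives as server-sent events rather than a single JSON body. The sketch below parses such a stream, assuming the OpenAI-style format (`data: {json}` lines carrying `choices[0].delta.content`, terminated by `data: [DONE]`); whether CometAPI emits exactly this format is an assumption, and `iter_stream_text` is a hypothetical helper name.

```python
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI-style server-sent event lines.

    `lines` is any iterable of str or bytes lines, for example an open
    HTTP response object iterated line by line.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        choices = chunk.get("choices") or []
        if not choices:
            continue
        text = choices[0].get("delta", {}).get("content")
        if text:
            yield text


if __name__ == "__main__":
    sample = [
        b'data: {"choices":[{"delta":{"content":"Hel"}}]}\n',
        b'data: {"choices":[{"delta":{"content":"lo"}}]}\n',
        b"data: [DONE]\n",
    ]
    print("".join(iter_stream_text(sample)))
```

Each yielded fragment can be forwarded to the UI or a TTS engine as soon as it arrives, which is what keeps perceived latency low.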
