The release of GLM-5, unveiled this week by China’s Zhipu AI (branded publicly as Z.ai, or zai-org in many developer channels), marks another step in the accelerating cadence of large-model releases. The new model is positioned as Zhipu’s flagship: larger in scale, tuned for long-horizon agentic tasks, and built with engineering choices intended to reduce inference cost while preserving long context. Early industry reporting and hands-on developer notes suggest meaningful gains in coding, multi-step reasoning, and agent orchestration compared with previous GLM iterations, and in some tests it even challenges Anthropic’s Claude Opus 4.5.

What is GLM-5 and who built it?

GLM-5 is the latest major release in the GLM family: a large, open-source foundation model developed and published by Z.ai (the team behind the GLM series). Announced in February 2026, GLM-5 is presented as a next-generation model specifically tuned for "agentic" tasks, i.e., multi-step, long-horizon workflows where a model must plan, call tools, execute, and maintain context across extended conversations or automated agents. The release is notable not only for its model design but also for how and where it was trained: Z.ai used a mix of domestic Chinese hardware and toolchains as part of a push for self-sufficiency.

Reported architecture and training figures include:

- Parameter scaling: GLM-5 scales up to roughly 744B total parameters (with a much smaller "active" parameter count per token cited in technical notes, about 40B), versus GLM-4.5’s roughly 355B total / 32B active.

- Pretraining data: Training corpus size reportedly increased from ~23 trillion tokens (GLM-4 generation) to ~28.5 trillion tokens for GLM-5.

- Sparse attention / DeepSeek Sparse Attention (DSA): a sparse attention scheme that preserves long context while reducing compute cost during inference.

- Design emphasis: engineering choices focused on agent orchestration, long-context reasoning, and cost-effective inference.
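The "total vs. active" split comes from Mixture-of-Experts routing: a small router scores every expert for each token, only the top-k experts actually run, and their outputs are mixed using renormalized gate weights. With the reported figures, roughly 40B / 744B ≈ 5.4% of the weights take part in any single token’s forward pass. The pure-Python toy below illustrates generic top-k gating only; the expert count, k, and the scalar "experts" are hypothetical and are not GLM-5’s actual router configuration.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


def moe_forward(x: float,
                router_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]],
                k: int) -> float:
    """Run only the selected experts; unselected experts are never evaluated."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Hypothetical toy setup: 8 scalar "experts" (expert i multiplies by i + 1), 2 active per token.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
routes = top_k_route(logits, k=2)
print(routes)                                  # experts 4 and 1 carry all the weight
print(moe_forward(1.0, logits, experts, k=2))
print(f"active fraction (reported GLM-5 figures): {40 / 744:.1%}")
```

Compute per token scales with the experts that run, not with the total, which is why a 744B-parameter MoE can be served far more cheaply than a dense model of the same size.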

Origins and positioning

GLM-5 builds on a line that included GLM-4.5 (released in mid-2025) and iterative updates such as GLM-4.7. Z.ai positions GLM-5 as a jump from "vibe coding" (quick single-step code outputs) toward "agentic engineering": sustained reasoning, multi-tool orchestration, and system synthesis over long context windows. The public materials emphasize that GLM-5 was designed to handle complex systems engineering tasks, building, coordinating, and maintaining multi-step agent behaviors rather than only answering isolated queries.

What are the new features in GLM-5?

Major architectural changes

- Massive sparse scaling (MoE): GLM-5 moves to a much larger sparse Mixture-of-Experts architecture. Public figures from the developer pages and independent write-ups list the model as roughly 744B total parameters with ~40B active per token — a significant step up from GLM-4.5’s ~355B / 32B active configuration. This sparse scaling lets the model present very large total capacity while keeping per-token computation tractable.

- DeepSeek Sparse Attention (DSA): To preserve long-context capability without paying the quadratic cost of dense attention, GLM-5 integrates DeepSeek Sparse Attention, a sparse attention mechanism originally introduced by DeepSeek. It keeps important long-range dependencies while trimming the cost of attention over very long contexts.
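The core idea of DSA-style sparse attention is that each query attends to a selected subset of k earlier tokens instead of all L of them, cutting attention cost from O(L²) to O(L·k). The pure-Python sketch below is a simplified stand-in, not DeepSeek’s implementation: it picks the top-k keys using the full dot-product scores, whereas DeepSeek’s published design uses a separate lightweight "lightning indexer" to make that selection cheaply. Because this toy scores every key, it shows the select-then-attend structure but saves no compute. Vectors and dimensions are illustrative.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sparse_attention(q: Vector, keys: List[Vector], values: List[Vector], k: int) -> Vector:
    """Attend from one query to only its top-k keys (scaled dot-product attention)."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, key) * scale for key in keys]
    # Selection step. A real DSA indexer chooses these cheaply; this toy reuses the
    # full scores, so it illustrates the structure without saving compute.
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the kept positions only; all other positions get zero weight.
    m = max(scores[i] for i in keep)
    weights = {i: math.exp(scores[i] - m) for i in keep}
    total = sum(weights.values())
    out = [0.0] * len(values[0])
    for i, w in weights.items():
        for d, v in enumerate(values[i]):
            out[d] += (w / total) * v
    return out


def dense_attention(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Ordinary (dense) attention is the special case k == len(keys)."""
    return sparse_attention(q, keys, values, k=len(keys))


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, 2.0]]
q = [1.0, 0.2]
print("top-2:", sparse_attention(q, keys, values, k=2))  # mixes values of keys 2 and 0 only
print("dense:", dense_attention(q, keys, values))
```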

Agentic engineering as a foundational design goal

One of GLM-5’s headline features is that it’s explicitly designed for agentic engineering — meaning the model is intended to be used not just for single-turn chat or summary tasks but as the “brain” of multi-step agents that can plan, issue tool calls, manage state, and reason across long contexts. Z.ai positions GLM-5 to serve in orchestration loops: breaking down complex problems, calling external tools/APIs, and tracking long tasks over many turns.

Why agentic design matters

Agentic workflows are central to real-world automation: automated research assistants, autonomous software engineers, operations orchestration, and simulation control. A model built for this world needs strong planning, stable tool-calling behavior, and robustness over thousands of tokens of context.

Improved coding, reasoning and “long-horizon” behavior

GLM-5 emphasizes improved code generation and reasoning. Z.ai claims targeted improvements in the model’s ability to write, refactor, and debug code, plus more consistent multi-step reasoning across long interactions. Independent early-access reports and partner evaluations found the model notably stronger at developer-oriented tasks than prior GLM generations.

Practical developer features

- Larger context windows to hold documentation, codebases, and conversation state.

- Tooling primitives for safe tool invocation and result handling.

- Better few-shot and chain-of-thought performance to decompose and execute complex tasks.

- Agentic features and tool-calling: GLM-5 emphasizes native support for agents: function/tool calling, stateful sessions, and better management of long dialogues and tool-use sequences. This makes it easier to build agents that integrate web search, databases, or task automation.
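The loop such agents run is simple to state: send the conversation, execute any tool calls the model requests, append the results, and repeat until the model answers in plain text. Below is a provider-agnostic sketch that assumes OpenAI-style message and `tool_calls` shapes, which OpenAI-compatible endpoints generally use. `call_model` and the `get_weather` tool are hypothetical placeholders, and a scripted fake model stands in for GLM-5 so the loop runs offline.

```python
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]

# Hypothetical tool registry: tool name -> Python function taking keyword arguments.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_weather": lambda city: {"city": city, "forecast": "sunny"},
}


def run_agent(call_model: Callable[[List[Message]], Message],
              user_prompt: str, max_steps: int = 5) -> str:
    """Minimal plan -> tool call -> observe loop with a hard step cap."""
    messages: List[Message] = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = call_model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]              # final answer: no more tools requested
        for call in tool_calls:
            fn = call["function"]
            args = json.loads(fn["arguments"])   # arguments arrive as a JSON string
            result = TOOLS[fn["name"]](**args)
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(result),
            })
    raise RuntimeError("agent did not finish within max_steps")


def fake_model(messages: List[Message]) -> Message:
    """Scripted stand-in for the real model: requests one tool call, then answers."""
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "content": None, "tool_calls": [{
            "id": "call_1", "type": "function",
            "function": {"name": "get_weather",
                         "arguments": json.dumps({"city": "Beijing"})},
        }]}
    observation = json.loads(messages[-1]["content"])
    return {"role": "assistant",
            "content": f"It looks {observation['forecast']} in {observation['city']}."}


print(run_agent(fake_model, "What's the weather in Beijing?"))  # It looks sunny in Beijing.
```

The `max_steps` cap is a deliberate design choice: long-horizon agents need a hard stop so a model that keeps requesting tools cannot loop (and bill) indefinitely.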

How does GLM-5 perform on benchmarks?

Specific benchmark highlights

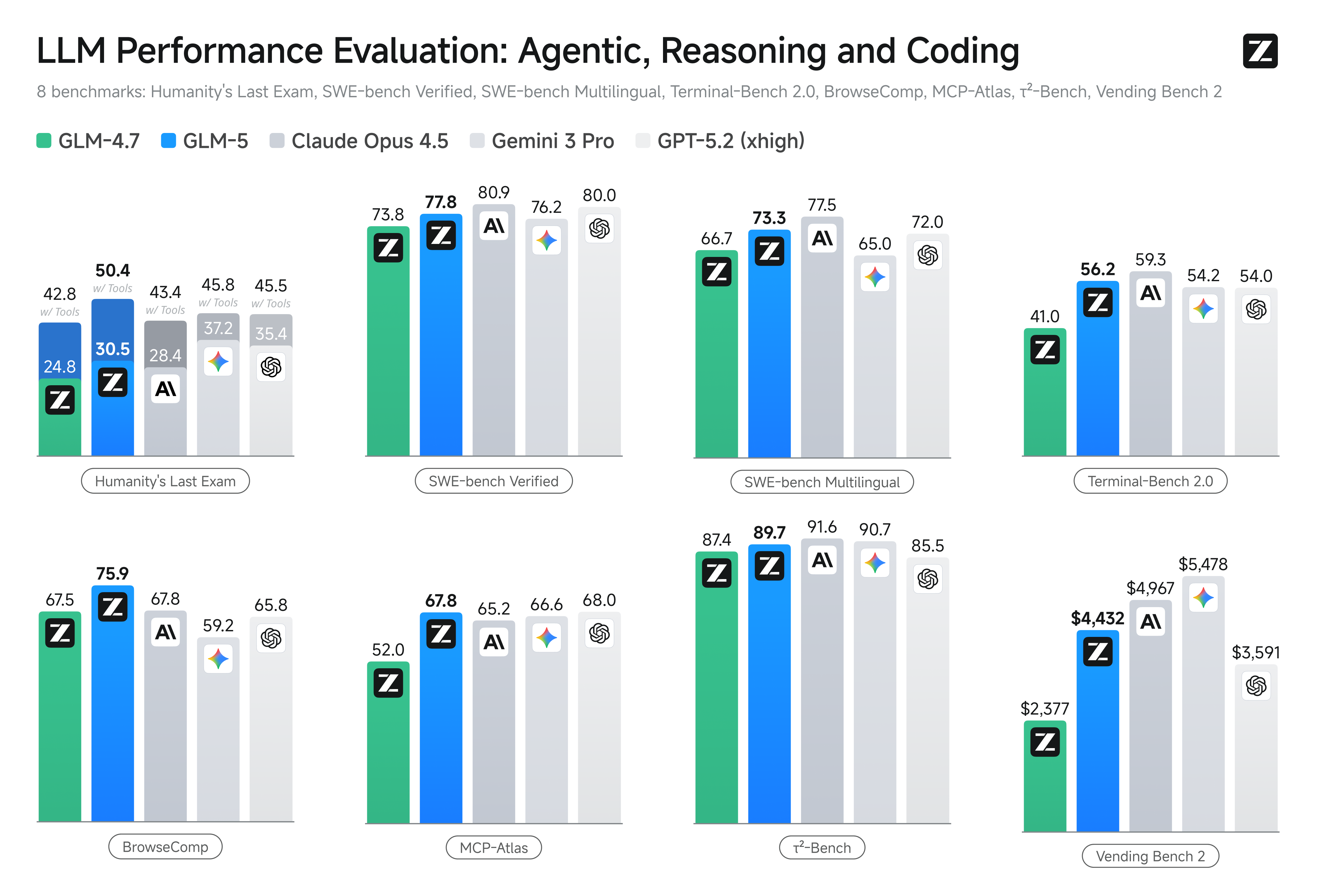

- Coding benchmarks: GLM-5 is reported to approach (and in some cases match) the coding performance of highly optimized proprietary models such as Anthropic’s Claude Opus 4.5 on specific coding tasks. These results are task-dependent (unit tests, algorithmic coding, API usage), but they mark a clear improvement over GLM-4.5.

- Reasoning and agentic tests: On multi-step reasoning and agentic evaluation suites (e.g., multi-turn planning and task-decomposition benchmarks), GLM-5 reportedly achieved best-in-class results among open-source models and, on some targeted tasks, surpassed competing closed models.

How do I access and try GLM-5?

GLM-5 is the fifth-generation large language model from Zhipu AI (Z.ai), built on a Mixture-of-Experts architecture (roughly 744B total parameters, ~40B active) and aimed at strong reasoning, coding, and agentic workflows. It officially launched around Feb 12, 2026.

As of now there are three main ways people are accessing it:

A) Official API Access (Z.ai or Aggregators)

Zhipu AI itself offers APIs for its models, and you can call GLM-5 through those APIs.

Typical steps:

- Sign up for a Z.ai/Open BigModel API account.

- Get an API key from the dashboard.

- Use the OpenAI-compatible REST endpoint with the model name (e.g., glm-5), similar to how you’d call GPT models from OpenAI.

- Set prompts and send HTTP requests.
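Those steps map to one authenticated HTTP POST. The standard-library sketch below assumes Z.ai’s OpenAI-compatible chat-completions endpoint at `https://api.z.ai/api/paas/v4/chat/completions` with Bearer-token auth; confirm the exact URL (the mainland Open BigModel platform uses a different host) and model ID in the API documentation for your account. The `ZAI_API_KEY` environment variable name is an arbitrary choice for this example.

```python
import json
import os
import urllib.request

# Assumed endpoint: verify against the Z.ai API docs for your account and region.
ZAI_CHAT_URL = "https://api.z.ai/api/paas/v4/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "glm-5",
                       url: str = ZAI_CHAT_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first choice's text (needs network access and a key)."""
    req = build_chat_request(prompt, api_key=os.environ["ZAI_API_KEY"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__" and "ZAI_API_KEY" in os.environ:
    print(chat("Summarize what a Mixture-of-Experts model is in one sentence."))
```

Building the request separately from sending it keeps the payload easy to inspect and unit-test without network access.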

👉 Z.ai’s pricing page lists official GLM-5 token prices of roughly:

- ~$1.0 per million input tokens

- ~$3.2 per million output tokens
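With per-million-token pricing, a job’s cost is input_tokens / 10⁶ × input rate + output_tokens / 10⁶ × output rate. A small helper using the approximate rates listed above; they are hard-coded here, so re-check the pricing page before relying on them.

```python
# Approximate official GLM-5 rates quoted above, in USD per 1M tokens (re-check before use).
GLM5_RATES = {"input": 1.0, "output": 3.2}


def request_cost(input_tokens: int, output_tokens: int, rates: dict = GLM5_RATES) -> float:
    """Estimated USD cost for a given number of input and output tokens."""
    return ((input_tokens / 1_000_000) * rates["input"]
            + (output_tokens / 1_000_000) * rates["output"])


# Example: an agent run that reads 2M tokens of context and writes 500k tokens.
print(f"${request_cost(2_000_000, 500_000):.2f}")  # $3.60
```

Output tokens cost more than three times as much as input tokens here, so verbose agent transcripts can dominate the bill even when the context is large.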

B) Third-Party API Wrappers: CometAPI

APIs like CometAPI or WaveSpeed wrap multiple AI models (OpenAI, Anthropic’s Claude, Z.ai, etc.) behind a unified interface.

- With services like CometAPI, you can call GLM models by switching the model ID (CometAPI currently supports GLM-5 and GLM-4.7).

- CometAPI's glm-5 is priced 20% below the official price:

| Token type | CometAPI price |
|---|---|
| Input tokens | ~$0.8 per 1M tokens |
| Output tokens | ~$2.56 per 1M tokens |

Why this matters: You keep your existing OpenAI-compatible client code and just change the base URL/model ID.
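In code, the switch is a configuration change: one request-building function, with only the base URL and model ID varying per provider. The base URLs below are assumptions based on each provider’s OpenAI-compatible convention (`https://api.z.ai/api/paas/v4` for Z.ai, `https://api.cometapi.com/v1` for CometAPI) and should be checked against their current docs; the model ID follows the name used in this article.

```python
import json
from typing import Dict, Tuple

# Assumed OpenAI-compatible base URLs: verify against each provider's documentation.
PROVIDERS: Dict[str, Dict[str, str]] = {
    "zai":      {"base_url": "https://api.z.ai/api/paas/v4", "model": "glm-5"},
    "cometapi": {"base_url": "https://api.cometapi.com/v1",  "model": "glm-5"},
}


def chat_endpoint(provider: str, prompt: str) -> Tuple[str, bytes]:
    """Return (URL, JSON body) for a chat-completions call; only the config differs per provider."""
    cfg = PROVIDERS[provider]
    url = cfg["base_url"].rstrip("/") + "/chat/completions"
    body = {"model": cfg["model"], "messages": [{"role": "user", "content": prompt}]}
    return url, json.dumps(body).encode("utf-8")


for name in PROVIDERS:
    url, _ = chat_endpoint(name, "Hello")
    print(name, "->", url)
```

Moving between GLM-4.7 and GLM-5 is the same kind of edit: change the `model` value in the provider entry.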

C) Self-Hosting via Hugging Face / Weights

GLM-5 weight repositories (e.g., versions named glm-5 / glm-5-fp8) are visible in Hugging Face model listings.

With those you can:

- Download the model weights.

- Use tools like vLLM, SGLang, xLLM, or Transformers to serve locally or on your cloud GPU fleet.

Pros: maximal control, no ongoing API cost.

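Cons: huge hardware requirements. At roughly 744B parameters, the weights alone take about 744 GB in FP8 and about 1.5 TB in BF16, before KV cache and activations, so serving realistically means a multi-GPU node (or several) and makes it impractical on small systems.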
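The weight-memory arithmetic is total parameters × bytes per parameter. Every expert must stay resident even though only ~40B parameters are active per token, so the full ~744B count applies. The helper below covers weights only; KV cache, activations, and framework overhead come on top, so treat its output as a lower bound. The 80 GB GPU size and the 90% usable-memory factor are illustrative assumptions.

```python
import math

BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0}


def weight_memory_gb(total_params: float, dtype: str) -> float:
    """Weights-only footprint in GB (10^9 bytes)."""
    return total_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(total_params: float, dtype: str, gpu_mem_gb: float, usable: float = 0.9) -> int:
    """Lower bound on GPU count with evenly sharded weights; `usable` (assumed 90%) leaves headroom."""
    return math.ceil(weight_memory_gb(total_params, dtype) / (gpu_mem_gb * usable))


params = 744e9  # reported GLM-5 total parameter count
for dtype in ("bf16", "fp8"):
    print(dtype, f"{weight_memory_gb(params, dtype):,.0f} GB",
          "| 80 GB GPUs needed (weights only):", min_gpus(params, dtype, 80))
```

Under these assumptions the FP8 weights alone need at least 11 such GPUs and BF16 at least 21, which is why the FP8 variant is the more practical self-hosting target.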

So — is GLM-5 worth it, and should you keep GLM-4.7?

Short answer (executive summary)

- If your work needs robust, multi-step agentic behavior, production-grade code generation, or system-level automation: GLM-5 is worth evaluating immediately. Its architecture, scale, and tuning prioritize exactly these outcomes.

- If you need cost-efficient, high-throughput microservices (short chat, classification, lightweight prompts): GLM-4.7 likely remains the most economical choice. GLM-4.7 preserves a strong capability set at significantly lower per-token cost in many provider listings and is already battle-tested.

Longer answer (practical recommendation)

Adopt a tiered model strategy: use GLM-4.7 for everyday, high-volume interactions and reserve GLM-5 for high-value engineering problems and agentic orchestration. Pilot GLM-5 on a small product slice that exercises long context, tooling integration and code correctness; measure both engineering time saved and incremental model cost. Over time, you’ll know whether GLM-5’s capability uplift justifies broader migration.

With CometAPI, you can switch between GLM-4.7 and GLM-5 at any time.
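A tiered strategy can start as a simple routing rule in front of your API client. The sketch below is hypothetical: the task fields, the 32,000-token threshold, and the `glm-4.7` / `glm-5` model IDs are placeholders to adapt to your workload and your provider’s naming.

```python
from dataclasses import dataclass

# Placeholder model IDs: check your provider's exact names.
CHEAP_MODEL = "glm-4.7"
STRONG_MODEL = "glm-5"


@dataclass
class Task:
    prompt_tokens: int     # estimated size of the prompt plus context
    needs_tools: bool      # will the model orchestrate tool calls?
    is_code_change: bool   # refactors, multi-file edits, debugging
    pilot: bool = False    # part of the GLM-5 evaluation slice


def pick_model(task: Task, long_context_threshold: int = 32_000) -> str:
    """Route agentic, code-heavy, long-context, or pilot work to GLM-5; everything else to GLM-4.7."""
    if task.pilot or task.needs_tools or task.is_code_change:
        return STRONG_MODEL
    if task.prompt_tokens > long_context_threshold:
        return STRONG_MODEL
    return CHEAP_MODEL


print(pick_model(Task(prompt_tokens=300, needs_tools=False, is_code_change=False)))      # glm-4.7
print(pick_model(Task(prompt_tokens=120_000, needs_tools=False, is_code_change=False)))  # glm-5
```

Keeping the rule in one function makes the pilot easy to widen or roll back: logging which model served each task alongside its token counts gives you the cost side of the comparison.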

Real-world use cases where GLM-5 shines

1. Complex agent orchestration

GLM-5’s design focus on multi-step planning and tool calling makes it well suited to systems that must coordinate search, API calls, and program execution (for example: automated research assistants, iterative code generators, or multi-step customer service agents that must consult databases and external APIs).

2. Longform engineering & code base reasoning

When you need the model to analyze, refactor, or synthesize across many files or a large codebase, GLM-5’s extended context and sparse attention are direct advantages — fewer failure modes caused by truncated context and better long-span consistency.
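Even with a large window, something has to decide which files go into the prompt. A minimal greedy packing sketch under a token budget, assuming a rough 4-characters-per-token estimate (a common rule of thumb, not GLM-5’s actual tokenizer) and a caller-chosen budget, since exact context limits depend on the provider. The `### FILE:` header format is an arbitrary choice.

```python
from typing import Dict, List, Tuple


def estimate_tokens(text: str) -> int:
    """Crude estimate (~4 characters per token); swap in the real tokenizer for accurate counts."""
    return max(1, len(text) // 4)


def pack_files(files: Dict[str, str], budget_tokens: int,
               priority: List[str]) -> Tuple[str, List[str]]:
    """Greedily add files in priority order while they fit in the token budget.

    Returns the packed prompt text and the list of files that were skipped.
    """
    parts: List[str] = []
    skipped: List[str] = []
    used = 0
    for path in priority:
        chunk = f"### FILE: {path}\n{files[path]}\n"
        cost = estimate_tokens(chunk)
        if used + cost > budget_tokens:
            skipped.append(path)   # too large for the remaining budget; later files may still fit
            continue
        parts.append(chunk)
        used += cost
    return "".join(parts), skipped


repo = {"app.py": "print('hi')\n" * 50, "util.py": "x = 1\n" * 10, "big.py": "y = 2\n" * 5000}
prompt, skipped = pack_files(repo, budget_tokens=1_000, priority=["app.py", "util.py", "big.py"])
print(skipped)  # ['big.py']
```

A larger context window raises the budget, but reporting what was skipped still matters: the model cannot reason about files it never saw.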

3. Knowledge-intensive synthesis

Analysts and product teams that generate complex reports — multi-section research briefs, legal summaries, or regulatory filings — can benefit from the model’s improvements in steady multi-step reasoning and reduced hallucinations in vendor-reported tests.

4. Agentic automation for workflows

Teams building automation that must orchestrate multiple systems (e.g., planning + ticketing + deployment pipelines) can use GLM-5 as the central planner and executor, backed by tool-calling frameworks and safety wrappers.

Conclusion

GLM-5 is an important release in the rapidly evolving frontier-model landscape. Its emphasis on agentic engineering, improved coding and reasoning, and open-weights availability make it attractive for teams building long-horizon, tool-enabled AI systems. Early results show real gains on selected tasks and encouraging cost/performance tradeoffs, but buyers should evaluate GLM-5 against their specific tasks and run their own controlled benchmarks before committing to production.

Developers can access GLM-5 via CometAPI now. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a price lower than the official price to help you integrate.

Ready to go? Sign up for GLM-5 today!

For more AI tips, guides, and news, follow us on VK, X, and Discord!