gpt-5.1-codex is a specialized member of OpenAI’s GPT-5.1 family, optimized for agentic, long-running software-engineering workflows (code generation, patching, large refactors, structured code review and multi-step agentic tasks).

Features

- Agentic tooling first: built to emit structured patch operations and shell calls. The model can produce `apply_patch_call` and `shell_call` items, which your integration executes and returns outputs for. This enables reliable create/update/delete operations across files.

- Responses API only: Codex variants in the 5.1 line are available only via the Responses API and are tuned for tool-driven workflows rather than conversational chat flows.

- Adaptive reasoning and latency modes: the GPT-5.1 family introduces `reasoning_effort` (including a `none` mode for latency-sensitive interactions) and extended prompt caching (up to 24h) to improve interactive coding sessions. Codex models emphasize efficient iterative work.

- Steerability and code personality: tuned to be more “deliberate” for fewer wasted actions in long sessions and to produce clearer update messages for PRs and patch diffs.

- Codex-specific UX: IDE/CLI default model setting, session resume, context compaction, image/screenshot inputs for frontend tasks in Codex Web.

Technical details & operational considerations

- API surface: `gpt-5.1-codex` is served via the Responses API (not Chat Completions). The Responses API supports tool calling, structured outputs, streaming, and the `apply_patch` and `shell` tools that Codex leverages.

- Tool calling semantics: include tools in the request (`tools:`). The model may emit `apply_patch_call` or `shell_call` items; your code executes the patch/command and returns outputs back to the model in the follow-up request. The Responses API is agentic by default, so it can orchestrate multi-step plans.

- Reasoning tuning: use `reasoning={"effort":"none"}` (Responses API) for minimal thinking/low latency, or `{"effort":"medium"}`/`high` for thorough code reasoning and validation. Note that `none` improves parallel tool-calling and latency-sensitive code edits.

- Session persistence / context: Codex and the Responses API support session resume and context compaction to summarize older context as you approach the context limit, enabling extended interactive sessions without manual context trimming.
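The request and tool-call loop described above can be sketched roughly as follows. This is a minimal offline sketch, not a definitive integration: the tool type strings (`apply_patch`, `shell`), the item fields (`call_id`, `operation`, `action`) and the simplified `*_output` item shape are assumptions to check against the current Responses API reference, and `build_request` and `handle_items` are hypothetical helper names.

```python
from typing import Any, Callable, Dict, List

Item = Dict[str, Any]


def build_request(prompt: str, effort: str = "medium") -> Dict[str, Any]:
    """Assemble a Responses API request body (field names are assumptions)."""
    # Effort values are the ones named in the text above.
    if effort not in {"none", "medium", "high"}:
        raise ValueError(f"unsupported reasoning effort: {effort}")
    return {
        "model": "gpt-5.1-codex",
        "input": prompt,
        "reasoning": {"effort": effort},
        # Assumed tool declarations; verify the exact type strings in the API docs.
        "tools": [{"type": "apply_patch"}, {"type": "shell"}],
    }


def handle_items(
    items: List[Item],
    apply_patch: Callable[[Item], str],
    run_shell: Callable[[Item], str],
) -> List[Item]:
    """Execute tool calls emitted by the model and collect outputs to send back."""
    outputs: List[Item] = []
    for item in items:
        kind = item.get("type")
        if kind == "apply_patch_call":
            result = apply_patch(item["operation"])
        elif kind == "shell_call":
            result = run_shell(item["action"])
        else:
            continue  # messages and other items need no tool output
        # Simplified output item; the real schema may carry more fields.
        outputs.append(
            {"type": f"{kind}_output", "call_id": item["call_id"], "output": result}
        )
    return outputs


if __name__ == "__main__":
    # Hand-written items standing in for a model response.
    fake_items = [
        {"type": "shell_call", "call_id": "c1", "action": {"commands": ["ls"]}},
    ]
    print(handle_items(fake_items, lambda op: "patched", lambda act: "ran"))
```

The handlers are passed in as callables so that the patch and shell execution policy (sandboxing, allow-lists, dry runs) stays in your integration rather than in the dispatch loop.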

Benchmark performance

Coding accuracy: On a diff-editing benchmark (SWE-bench Verified), early partners reported a ~7% improvement in patch/edit accuracy for GPT-5.1 over GPT-5. Partners also reported faster agent execution in certain tool-heavy tasks (for example, “agents run 50% faster on GPT-5.1 while exceeding GPT-5 accuracy”).

SWE-bench Verified (500 problems): GPT-5.1 (high) — 76.3% vs GPT-5 (high) — 72.8% (OpenAI reported). This shows measurable uplift on real-repo patch generation tasks.

Speed / token efficiency: GPT-5.1 runs 2–3× faster than GPT-5 on many tasks (faster response times on easier tasks by using fewer reasoning tokens). Example given: a small npm command answer that took ~10s on GPT-5 takes ~2s on GPT-5.1 with substantially fewer tokens.
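The figures above mix absolute and relative measures, so a short calculation may help keep them apart. The sketch uses only numbers quoted in this section; the helper names are illustrative.

```python
def point_gain(new_pct: float, old_pct: float) -> float:
    """Absolute gain in percentage points."""
    return round(new_pct - old_pct, 2)


def relative_gain(new_pct: float, old_pct: float) -> float:
    """Relative gain as a percentage of the old score."""
    return round((new_pct - old_pct) / old_pct * 100, 2)


def speedup(old_seconds: float, new_seconds: float) -> float:
    """How many times faster the new latency is."""
    return round(old_seconds / new_seconds, 2)


print(point_gain(76.3, 72.8))     # 3.5 percentage points on SWE-bench Verified
print(relative_gain(76.3, 72.8))  # 4.81 percent relative to the GPT-5 score
print(speedup(10, 2))             # 5.0x for the single npm example
```

The npm example works out to about 5×, above the 2–3× quoted for many tasks, so it is best read as one favorable case rather than a typical figure.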

Limitations, safety, and operational considerations

- Hallucinations and factual errors: OpenAI continues to reduce hallucinations but explicitly warns that hallucinations are not eliminated — models can still fabricate facts or assert incorrect behavior for edge-case programming assumptions; critical systems should not rely on unconstrained model output without independent verification.

- Over-fast replies / shallow reasoning: The faster default behavior can sometimes produce responses that are “fast but superficial” (quick code snippets rather than deeper repository-aware edits). Use `reasoning: high` for deeper edits and verification steps.

- Prompting discipline required: Codex variants expect tool context and structured prompting; existing GPT-5 prompts often must be adapted. The model’s reliability depends heavily on how your integration applies patches and verifies outputs (tests, CI).
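Since reliability depends on how outputs are verified, one possible pattern is to gate every applied patch behind a test command. The sketch below is an assumption about how such a gate might look, not part of any Codex tooling; `verify_patch` and the stand-in test command are hypothetical.

```python
import subprocess
import sys
from typing import List, Tuple


def verify_patch(test_cmd: List[str], timeout_s: float = 300.0) -> Tuple[bool, str]:
    """Run the project's test command; return (passed, combined output)."""
    try:
        proc = subprocess.run(
            test_cmd, capture_output=True, text=True, timeout=timeout_s
        )
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout_s}s"
    # A zero exit code is treated as "tests passed".
    return proc.returncode == 0, proc.stdout + proc.stderr


if __name__ == "__main__":
    # Stand-in for a real test runner such as `pytest -q`.
    ok, log = verify_patch([sys.executable, "-c", "print('1 passed')"])
    print(ok, log.strip())
```

When the gate fails, the captured log can be returned to the model as context for the next edit instead of accepting the patch.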

How it compares (brief) to other popular models

- vs GPT-5 (baseline): GPT-5.1 emphasizes faster responses on routine tasks and better steerability for coding; reported improvements on editing/coding benchmarks (SWE-bench diff editing +7% in partner reports) and lower token usage on tool-heavy chains. For deep, deliberative reasoning, choose the `Thinking/high` reasoning settings.

- vs GPT-5-Codex (prior): gpt-5.1-codex is the next generation, with the same Codex focus but trained/tuned for improved prompt caching, `apply_patch` tooling, and adaptive reasoning that balances latency and depth.

Primary use cases (recommended)

- Interactive IDE workflows: intelligent code completion, PR drafting, inline patching and multi-turn code edits.

- Agentic automation: long-running agent tasks that require applying a sequence of patches, running shell steps, and validating via tests.

- Code review & refactoring: higher-quality diffs and structured review comments (SWE-bench improvements reported by partners).

- Test generation & validation: generate unit/integration tests, run them via a controlled shell tool, iterate on failures.
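The “controlled shell tool” mentioned in the use cases could be approximated with an allow-list wrapper like the one below. This is a sketch under assumptions: `run_controlled` is a hypothetical helper, and an allow-list is only one of several possible controls (containers or sandboxes being others).

```python
import shlex
import subprocess
import sys
from typing import Dict, Optional, Set, Union

Result = Dict[str, Union[Optional[int], str]]


def run_controlled(command: str, allowed: Set[str], timeout_s: float = 60.0) -> Result:
    """Run one command without a shell, only if its program is allow-listed."""
    argv = shlex.split(command)
    if not argv or argv[0] not in allowed:
        return {"exit_code": None, "stdout": "", "stderr": f"blocked: {command!r}"}
    try:
        proc = subprocess.run(argv, capture_output=True, text=True, timeout=timeout_s)
    except (OSError, subprocess.TimeoutExpired) as exc:
        return {"exit_code": None, "stdout": "", "stderr": f"failed: {exc}"}
    return {"exit_code": proc.returncode, "stdout": proc.stdout, "stderr": proc.stderr}


if __name__ == "__main__":
    python = shlex.quote(sys.executable)
    print(run_controlled(f"{python} -c 'print(42)'", allowed={sys.executable}))
    print(run_controlled("curl http://example.com", allowed={sys.executable}))
```

Passing an argument list to `subprocess.run` rather than a shell string avoids shell interpolation of model-generated text.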

How to call gpt-5.1-codex API from CometAPI

gpt-5.1-codex API pricing in CometAPI, 20% off the official price:

| Token type | Price |
| --- | --- |
| Input Tokens | $1.00 |
| Output Tokens | $8.00 |
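As a rough budgeting aid, the listed prices can be turned into a per-request estimate. The sketch assumes the prices are in USD per one million tokens, which the table does not state explicitly; `estimate_cost` is a hypothetical helper.

```python
INPUT_PRICE = 1.00   # from the table; assumed USD per 1M input tokens
OUTPUT_PRICE = 8.00  # from the table; assumed USD per 1M output tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated request cost in USD under the per-1M-token assumption."""
    return (input_tokens * INPUT_PRICE + output_tokens * OUTPUT_PRICE) / 1_000_000


print(estimate_cost(20_000, 3_000))  # 0.044
```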

Required Steps

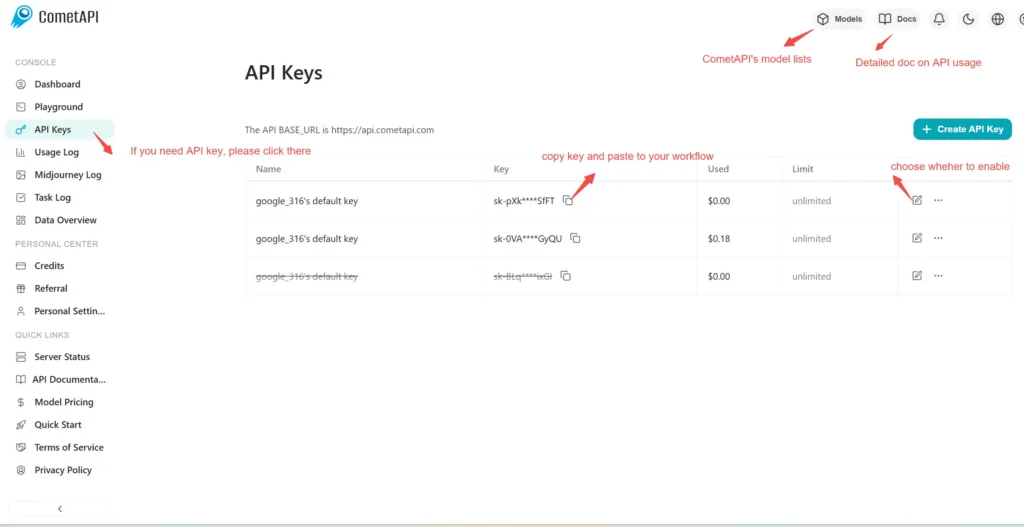

- Log in to cometapi.com. If you do not have an account yet, register first.

- Sign in to your CometAPI console.

- Get the API key that serves as the access credential. In the personal center, click “Add Token” under API token, submit, and copy the token key (sk-xxxxx).

Use Method

- Select the “`gpt-5.1-codex`” endpoint to send the API request and set the request body. The request method and request body are documented in our website API doc. Our website also provides an Apifox test for your convenience.

- Replace <YOUR_API_KEY> with your actual CometAPI key from your account.

- Insert your question or request into the content field; this is what the model will respond to.

- Process the API response to get the generated answer.
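The steps above might look roughly like this in code. It is a sketch only: the endpoint URL is an assumption (take the real base URL and path from the CometAPI API doc), the response shape follows the Responses API message/`output_text` layout as an assumption, and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumed endpoint; confirm the base URL and path in the CometAPI API doc.
API_URL = "https://api.cometapi.com/v1/responses"


def build_http_request(api_key: str, content: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for gpt-5.1-codex."""
    body = {
        "model": "gpt-5.1-codex",
        "input": [{"role": "user", "content": content}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(response: Dict[str, Any]) -> str:
    """Concatenate output_text parts from message items (assumed shape)."""
    parts = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue
        for part in item.get("content", []):
            if part.get("type") == "output_text":
                parts.append(part.get("text", ""))
    return "".join(parts)


def ask(api_key: str, content: str) -> str:
    """Send the request and return the generated answer (network call)."""
    request = build_http_request(api_key, content)
    with urllib.request.urlopen(request, timeout=60) as resp:
        return extract_text(json.load(resp))
```

Calling `ask("<YOUR_API_KEY>", "Explain this stack trace")` would perform the actual request; building and parsing are kept separate so they can be checked without network access.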

CometAPI provides a fully compatible REST API for seamless migration. Key details to Responses

See also GPT-5.1 API and GPT-5.1-Chat-latest API