The GPT-5.1 API gives access to GPT-5.1 Thinking, the advanced reasoning variant of OpenAI’s GPT-5.1 family. It prioritizes adaptive, higher-quality reasoning while giving developers explicit control over the latency/compute trade-off.

Basic features

- Adaptive reasoning: the model dynamically adjusts thinking depth per request. It answers faster on routine tasks, which reduces latency and token use for common queries, and it explicitly allocates more reasoning time to complex prompts and is more persistent on multi-step problems. Hard tasks can be slower but yield deeper answers.

- Reasoning modes: `none`/`low`/`medium`/`high` (GPT-5.1 defaults to `none` for low-latency cases; choose higher levels for more demanding tasks). The Responses API exposes a `reasoning` parameter to control this.

- Default tone & style: written to be clearer on complex topics (less jargon), more explanatory and “patient.”

- Context window: GPT-5.1 Thinking has a much larger long-context capacity, with a 400K-token context window on paid tiers.

Key technical details

- Adaptive compute allocation: training and inference design causes the model to expend fewer reasoning tokens on trivial tasks and proportionally more on difficult tasks. This is not a separate “think engine” but a dynamic allocation within the reasoning pipeline.

- Reasoning parameter in the Responses API: clients pass a `reasoning` object (for example `reasoning: { "effort": "high" }`) to request deeper internal reasoning; setting `reasoning: { "effort": "none" }` effectively disables the extended internal reasoning pass for lower latency. The Responses API also returns reasoning/token metadata (helpful for cost and debugging).

- Tools & parallel tool calls: GPT-5.1 improves parallel tool calling and includes named tools (like `apply_patch`) that reduce failure modes for programmatic edits; parallelization increases end-to-end throughput for tool-heavy workflows.

- Prompt cache and persistence: `prompt_cache_retention='24h'` is supported on Responses and Chat Completions endpoints to retain context across multi-turn sessions (reduces repeated token encoding).
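The `reasoning` object and the cache-retention setting described above can be assembled into a request body roughly as follows. This is a minimal sketch rather than an official client: the helper name `build_responses_payload` is hypothetical, the `input` key is an assumption about the Responses request shape that this article does not state, and the allowed effort values are simply the four modes listed earlier.

```python
import json

# Effort levels named in this article; treated here as the full allowed set (assumption).
ALLOWED_EFFORTS = ("none", "low", "medium", "high")


def build_responses_payload(prompt, effort="none", cache_retention=None):
    """Build a JSON-serializable body for a Responses-style request.

    Hypothetical helper: the "input" key is an assumption about the request
    shape and is not taken from this article.
    """
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    payload = {
        "model": "gpt-5.1",
        "input": prompt,
        "reasoning": {"effort": effort},
    }
    if cache_retention is not None:
        # For example "24h", the retention value mentioned above.
        payload["prompt_cache_retention"] = cache_retention
    return payload


# Low-latency default versus an explicit request for deeper reasoning.
fast = build_responses_payload("List the files in this repo.")
deep = build_responses_payload(
    "Prove the lemma step by step.", effort="high", cache_retention="24h"
)
print(json.dumps(deep, indent=2))
```

Validating the effort value on the client side keeps typos such as `"max"` from reaching the API, and leaving `prompt_cache_retention` out unless requested keeps the default request minimal.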

Benchmark performance

Latency / token efficiency examples (vendor-provided): on routine queries, OpenAI reports dramatic reductions in tokens/time (example: an npm listing command that took ~10s / ~250 tokens on GPT-5 now takes ~2s / ~50 tokens on GPT-5.1 in their representative test). Third-party early testers (e.g., asset managers, coding firms) reported 2–3× speedups on many tasks and token-efficiency gains in tool-heavy flows.

OpenAI and early partners published representative benchmark claims and measured improvements:

| Evaluation | GPT-5.1 (high) | GPT-5 (high) |
|---|---|---|
| SWE-bench Verified (all 500 problems) | 76.3% | 72.8% |
| GPQA Diamond (no tools) | 88.1% | 85.7% |
| AIME 2025 (no tools) | 94.0% | 94.6% |
| FrontierMath (with Python tool) | 26.7% | 26.3% |
| MMMU | 85.4% | 84.2% |
| Tau2-bench Airline | 67.0% | 62.6% |
| Tau2-bench Telecom* | 95.6% | 96.7% |
| Tau2-bench Retail | 77.9% | 81.1% |
| BrowseComp Long Context 128k | 90.0% | 90.0% |
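The reported scores are easier to compare as per-benchmark differences. The sketch below copies the numbers from the benchmark table and computes GPT-5.1 minus GPT-5 in percentage points; the function name `score_deltas` and the shortened benchmark labels are illustrative choices, not part of any published tooling.

```python
# Scores copied from the benchmark table: (GPT-5.1 high, GPT-5 high), in percent.
SCORES = {
    "SWE-bench Verified": (76.3, 72.8),
    "GPQA Diamond": (88.1, 85.7),
    "AIME 2025": (94.0, 94.6),
    "FrontierMath": (26.7, 26.3),
    "MMMU": (85.4, 84.2),
    "Tau2-bench Airline": (67.0, 62.6),
    "Tau2-bench Telecom": (95.6, 96.7),
    "Tau2-bench Retail": (77.9, 81.1),
    "BrowseComp Long Context 128k": (90.0, 90.0),
}


def score_deltas(scores):
    """Return GPT-5.1 minus GPT-5 in percentage points, rounded to one decimal."""
    return {name: round(new - old, 1) for name, (new, old) in scores.items()}


deltas = score_deltas(SCORES)

# Print the largest gains first.
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:32s} {delta:+.1f} pp")

# Benchmarks where the reported GPT-5.1 score is lower than GPT-5.
regressions = [name for name, delta in deltas.items() if delta < 0]
```

On these figures the gains are not uniform: AIME 2025, Tau2-bench Telecom, and Tau2-bench Retail come out slightly lower for GPT-5.1, while Tau2-bench Airline and SWE-bench Verified show the largest improvements.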

Limitations & safety considerations

- Hallucination risk persists. Adaptive reasoning helps on complex problems but does not eliminate hallucinations; higher `reasoning_effort` improves checks but does not guarantee correctness. Always validate high-stakes outputs.

- Resource and cost tradeoffs: while GPT-5.1 can be far more token-efficient on simple flows, enabling high reasoning effort or long agentic tool-use can increase token consumption and latency. Use prompt caching to mitigate repeated costs where appropriate.

- Tool safety: `apply_patch` and `shell` tools increase automation power (and risk). Production deployments should gate tool execution (review diffs / commands before execution), use least privilege, and ensure robust CI/CD and operational guardrails.
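One way to gate tool execution is to put a review function between the model's proposed command and anything that actually runs it. The sketch below is an assumption-laden illustration: `review_command`, the `SAFE_EXECUTABLES` allowlist, and the `approve` callback are all hypothetical names, and the function only decides whether a command may run; it never executes anything.

```python
import shlex

# Hypothetical allowlist of executables the agent may run (least privilege).
SAFE_EXECUTABLES = {"ls", "cat", "git", "npm"}

# Characters that enable chaining, redirection, or substitution in a shell.
SHELL_META = set(";|&><`$")


def review_command(command, approve, allowlist=SAFE_EXECUTABLES):
    """Decide whether a model-proposed shell command may run.

    Returns (allowed, reason). Nothing is executed here; the caller runs the
    command only when `allowed` is True.
    """
    if any(ch in SHELL_META for ch in command):
        return False, "shell metacharacters are not allowed"
    try:
        tokens = shlex.split(command)
    except ValueError:
        return False, "unparseable command"
    if not tokens:
        return False, "empty command"
    if tokens[0] not in allowlist:
        return False, f"executable {tokens[0]!r} is not on the allowlist"
    # Human or policy review step: `approve` sees the exact command text.
    if not approve(command):
        return False, "rejected by reviewer"
    return True, "approved"


# Example: an auto-approving reviewer still cannot bypass the static checks.
print(review_command("git status", approve=lambda cmd: True))
print(review_command("rm -rf /", approve=lambda cmd: True))
print(review_command("ls && rm -rf /", approve=lambda cmd: True))
```

The static checks run before the reviewer is consulted, so a careless approval cannot let through a command that chains an allowed executable with a disallowed one.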

Comparison with other models

- vs GPT-5: GPT-5.1 improves adaptive reasoning and instruction adherence; OpenAI reports faster response times on easy tasks and better persistence on hard tasks. GPT-5.1 also adds the `none` reasoning option and extended prompt caching.

- vs GPT-4.x / 4.1: GPT-5.1 is designed for more agentic, tool-heavy, and coding tasks; OpenAI and partners report gains on coding benchmarks and multi-step reasoning. For many standard conversational tasks, GPT-5.1 Instant may be comparable to earlier GPT-4.x chat models but with improved steerability and personality presets.

- vs Anthropic / Claude / other LLMs: ChatGPT 5.1’s MoA architecture gives it a distinct edge in tasks requiring complex, multi-step reasoning. It scored an unprecedented 98.20 on the HELM benchmark for complex reasoning, compared to Claude 4’s 95.60 and Gemini 2.0 Ultra’s 94.80.

Typical use cases

- Agentic coding assistants / PR reviews / code generation: improved `apply_patch` reliability and better code steerability.

- Complex multi-step reasoning: technical explanations, math proofs, legal summarization drafts where the model must chain steps and check work.

- Automated agents with tool usage: knowledge retrieval + tool calling workflows (database / search / shell), where parallel tool calls and more persistent reasoning increase throughput and robustness.

- Customer support automation for complex tickets: where stepwise diagnosis and multi-turn evidence collection are needed, and the model can balance speed and effort.
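When a single model turn requests several independent tool calls, the client can run them concurrently instead of one after another, which is where the throughput gain in tool-heavy workflows comes from. The following is a minimal client-side sketch under stated assumptions: the tools `search_docs` and `lookup_order`, the `TOOLS` registry, and the `{"name": ..., "arguments": {...}}` call shape are all hypothetical and are not the API's wire format.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical local tools; a real agent would query a database, search API, etc.
def search_docs(query):
    return f"results for {query!r}"


def lookup_order(order_id):
    return {"order_id": order_id, "status": "shipped"}


TOOLS = {"search_docs": search_docs, "lookup_order": lookup_order}


def run_tool_calls(tool_calls, max_workers=4):
    """Run independent tool calls concurrently and return results in request order."""

    def run_one(call):
        tool = TOOLS[call["name"]]
        return tool(**call["arguments"])

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map preserves input order, so results line up with tool_calls.
        return list(pool.map(run_one, tool_calls))


calls = [
    {"name": "search_docs", "arguments": {"query": "refund policy"}},
    {"name": "lookup_order", "arguments": {"order_id": "A123"}},
]
results = run_tool_calls(calls)
print(results)
```

Threads suit this pattern because tool calls are usually I/O-bound (network or disk); calls that depend on each other's output still have to run sequentially.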

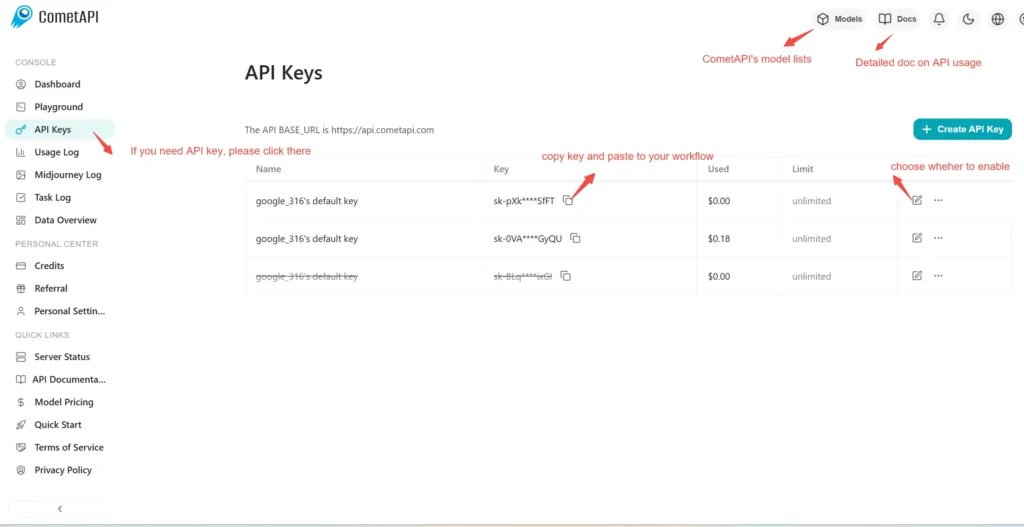

How to call GPT-5.1 API from CometAPI

gpt-5.1 API pricing in CometAPI, 20% off the official price:

| Token type | Price |
|---|---|
| Input Tokens | $1.00 |
| Output Tokens | $8.00 |

Required Steps

- Log in to cometapi.com. If you are not a user yet, please register first.

- Sign in to your CometAPI console.

- Get the API key that serves as the access credential for the interface: click “Add Token” under API token in the personal center, obtain the token key (sk-xxxxx), and submit.

Use Method

- Select the “gpt-5.1” endpoint to send the API request and set the request body. The request method and request body are documented in our website API doc. Our website also provides an Apifox test for your convenience.

- Replace <YOUR_API_KEY> with your actual CometAPI key from your account.

- Insert your question or request into the content field; this is what the model will respond to.

- Process the API response to get the generated answer.

CometAPI provides a fully compatible REST API for seamless migration. Key details for Chat and Responses:

- Base URL: https://api.cometapi.com/v1/chat/completions / https://api.cometapi.com/v1/responses

- Model Names: `gpt-5.1`

- Authentication: `Bearer YOUR_CometAPI_API_KEY` header

- Content-Type: `application/json`.
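Putting the steps and connection details above together, a request to the Chat endpoint might look like the sketch below. It uses only the Python standard library. The helper names (`build_chat_request`, `send`, `extract_answer`) are hypothetical, and both the `messages` request shape and the `choices[0].message.content` response layout are assumptions based on OpenAI-style Chat Completions compatibility; check the CometAPI API doc for the authoritative request body.

```python
import json
import urllib.request

CHAT_URL = "https://api.cometapi.com/v1/chat/completions"


def build_chat_request(api_key, question, model="gpt-5.1"):
    """Build a POST request with the bearer token and a single user message."""
    body = {"model": model, "messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the decoded JSON response (performs network I/O)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def extract_answer(response_json):
    """Return the reply text, assuming an OpenAI-style choices[0].message.content layout."""
    return response_json["choices"][0]["message"]["content"]


# Replace <YOUR_API_KEY> with a real CometAPI key before sending.
request = build_chat_request("<YOUR_API_KEY>", "Summarize adaptive reasoning in one sentence.")
# answer = extract_answer(send(request))  # uncomment once a real key is in place
```

Keeping request construction separate from the network call makes the payload easy to inspect or log before any tokens are spent.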