Grok 4.1 Fast is xAI’s production-focused large model, optimized for agentic tool-calling, long-context workflows, and low-latency inference. It’s a multimodal, two-variant family designed to run autonomous agents that search, execute code, call services, and reason over extremely large contexts (up to 2 million tokens).

Key features

- Two variants: `grok-4-1-fast-reasoning` (thinking / agentic) and `grok-4-1-fast-non-reasoning` (instant “Fast” responses).

- Massive context window: 2,000,000 tokens, designed for multi-hour transcripts, large document collections, and long multi-turn planning.

- First-party Agent Tools API: built-in web/X browsing, server-side code execution, file search, and “MCP” connectors so the model can act as an autonomous agent without external glue.

- Modalities: Multimodal (text + images and upgraded visual capabilities including chart analysis and OCR-level extraction).
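
To make the 2,000,000-token figure concrete, here is a rough sketch of a pre-flight check that a batch of documents plausibly fits in the window. The 4-characters-per-token ratio is a common rule of thumb for English text and is an assumption, not Grok's actual tokenizer. `estimate_tokens`, `fits_in_context`, and the reserved output budget are hypothetical names and values chosen for illustration.

```python
CONTEXT_WINDOW_TOKENS = 2_000_000  # context size quoted in the feature list
CHARS_PER_TOKEN = 4                # ASSUMPTION: rough heuristic, not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(documents: list[str], reserved_for_output: int = 8_000) -> bool:
    """Return True if the estimated prompt size still leaves room for the reply."""
    prompt_tokens = sum(estimate_tokens(doc) for doc in documents)
    return prompt_tokens + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

A real integration would swap the heuristic for the provider's reported token usage, since the true count depends on the tokenizer and on any images in the prompt.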

How does Grok 4.1 Fast work?

- Architecture & modes: Grok 4.1 Fast is presented as a single model family that can be configured for “reasoning” (internal chains of thought and higher deliberation) or non-reasoning “fast” operation for lower latency. The reasoning mode can be turned on or off with API parameters (e.g., `reasoning.enabled`) on provider layers such as CometAPI.

- Training signal: xAI reports reinforcement learning in simulated agentic environments (tool-heavy training) to improve performance on long-horizon, multi-turn tool-calling tasks (they reference training on τ²-bench Telecom and long-context RL).

- Tool orchestration: Tools run on xAI infrastructure; Grok can invoke multiple tools in parallel and decide agentic plans across turns (web search, X search, code execution, file retrieval, MCP servers).

- Throughput & rate limits: example published limits include 480 requests/minute and 4,000,000 tokens/minute for the `grok-4-1-fast-reasoning` cluster.
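
One way to stay under limits like these is to throttle on the client side. The sketch below is a minimal sliding-window budget using the two example figures quoted above as defaults. `MinuteBudget` is a hypothetical helper, not part of any xAI or CometAPI SDK, and the server's own accounting may differ from this approximation.

```python
import time
from collections import deque


class MinuteBudget:
    """Client-side sliding 60-second window over request and token counts."""

    def __init__(self, max_requests=480, max_tokens=4_000_000, clock=time.monotonic):
        self.max_requests = max_requests
        self.max_tokens = max_tokens
        self.clock = clock            # injectable so the window can be tested
        self.events = deque()         # (timestamp, tokens) per admitted request
        self.tokens_in_window = 0

    def _expire(self, now):
        # Drop requests that are 60 seconds old or older.
        while self.events and now - self.events[0][0] >= 60.0:
            _, tokens = self.events.popleft()
            self.tokens_in_window -= tokens

    def try_acquire(self, tokens):
        """Admit the request and return True if both budgets allow it."""
        now = self.clock()
        self._expire(now)
        if len(self.events) >= self.max_requests:
            return False
        if self.tokens_in_window + tokens > self.max_tokens:
            return False
        self.events.append((now, tokens))
        self.tokens_in_window += tokens
        return True
```

A caller would check `try_acquire(estimated_tokens)` before each request and sleep or queue the work when it returns `False`.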

Grok 4.1 Fast model versions & naming

- `grok-4-1-fast-reasoning`: “thinking” agentic mode with internal reasoning tokens and tool orchestration; best for complex multi-step workflows.

- `grok-4-1-fast-non-reasoning`: instant “Fast” mode with minimal internal thinking tokens; lower latency for chat, brainstorming, and short-form writing.
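
As a small illustration of how an application might route work between the two variants, the sketch below maps a task category to a model name. The task categories and the `pick_model` helper are hypothetical; only the two model identifiers come from the text above.

```python
REASONING_MODEL = "grok-4-1-fast-reasoning"
FAST_MODEL = "grok-4-1-fast-non-reasoning"

# HYPOTHETICAL task labels, grouped by the use cases described for each variant.
REASONING_TASKS = {"multi_step_workflow", "tool_orchestration"}
FAST_TASKS = {"chat", "brainstorming", "short_form_writing"}


def pick_model(task: str) -> str:
    """Return the model identifier suited to the given task label."""
    if task in REASONING_TASKS:
        return REASONING_MODEL
    if task in FAST_TASKS:
        return FAST_MODEL
    raise ValueError(f"unknown task label: {task!r}")
```

Raising on unknown labels, rather than silently defaulting, keeps the latency and cost trade-off an explicit decision.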

Grok 4.1 Fast benchmark performance

xAI highlights several benchmark wins and measured improvements versus prior Grok releases and some competing models. Key published numbers:

- τ²-bench (telecom agentic tool benchmark): reported 100% score with a total cost of $105.

- Berkeley Function Calling v4: reported 72% overall accuracy (xAI published figure) with total reported cost ~$400 in that benchmark context.

- Research & agentic search (Research-Eval / Reka / X Browse): xAI reports higher scores and lower cost than several competitors on internal and industry agentic-search benchmarks. For example, xAI’s published tables show Grok 4.1 Fast scoring substantially higher than GPT-5 and Claude Sonnet 4.5 on Research-Eval and X Browse.

- Factuality / hallucination: xAI reports that Grok 4.1 Fast halves the hallucination rate compared to Grok 4 Fast on FActScore and related internal metrics.

Grok 4.1 Fast limitations & risks

- Hallucinations are reduced, not eliminated. Published reductions are meaningful (xAI reports cutting hallucination rates substantially vs previous Grok 4 Fast) but factual errors still occur in edge cases and rapid-response workflows—validate mission-critical outputs independently.

- Tool trust surface: server-side tools increase convenience but also expand the attack surface (tool misuse, incorrect external results, or stale sources). Use provenance checks and guardrails; treat automated tool outputs as evidence to be verified.

- Not all-purpose SOTA: reviews indicate the Grok series excels at STEM, reasoning, and long-context agentic tasks, but may lag in some multimodal visual comprehension and creative generation tasks compared to the very latest multimodal offerings from other vendors.

How Grok 4.1 Fast compares to other leading models

- Versus Grok 4 / Grok 4.1 (non-Fast): Fast trades some internal compute/“thinking” overhead for latency and token economy while aiming to keep reasoning quality near Grok 4 levels; it’s optimized for production agentic use rather than raw peak reasoning on heavy offline benchmarks.

- Versus Google Gemini family / OpenAI GPT family / Anthropic Claude: independent reviews and tech press note Grok’s strengths in logical reasoning, tool calling and long context handling, while other vendors sometimes lead in multimodal vision, creative generation, or different price/performance tradeoffs.

How to call the Grok 4.1 Fast API from CometAPI

Grok 4.1 Fast pricing in CometAPI, 20% off the official price:

| Token type | Price (USD per 1M tokens) |
| --- | --- |
| Input tokens | $0.16 |
| Output tokens | $0.40 |
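
For budgeting, the two prices can be turned into a quick per-request estimate. The sketch below assumes the prices are quoted per 1,000,000 tokens, which the table does not state outright, and uses `Decimal` to avoid floating-point rounding in currency math. `estimate_cost_usd` is a hypothetical helper.

```python
from decimal import Decimal

INPUT_PRICE = Decimal("0.16")          # USD, from the pricing table
OUTPUT_PRICE = Decimal("0.40")         # USD, from the pricing table
TOKENS_PER_UNIT = Decimal(1_000_000)   # ASSUMPTION: prices are per 1M tokens


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost of one request from its input and output token counts."""
    total = Decimal(input_tokens) * INPUT_PRICE + Decimal(output_tokens) * OUTPUT_PRICE
    return total / TOKENS_PER_UNIT
```

Under that assumption, a request with 500,000 input tokens and 20,000 output tokens would cost about $0.088.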

Required Steps

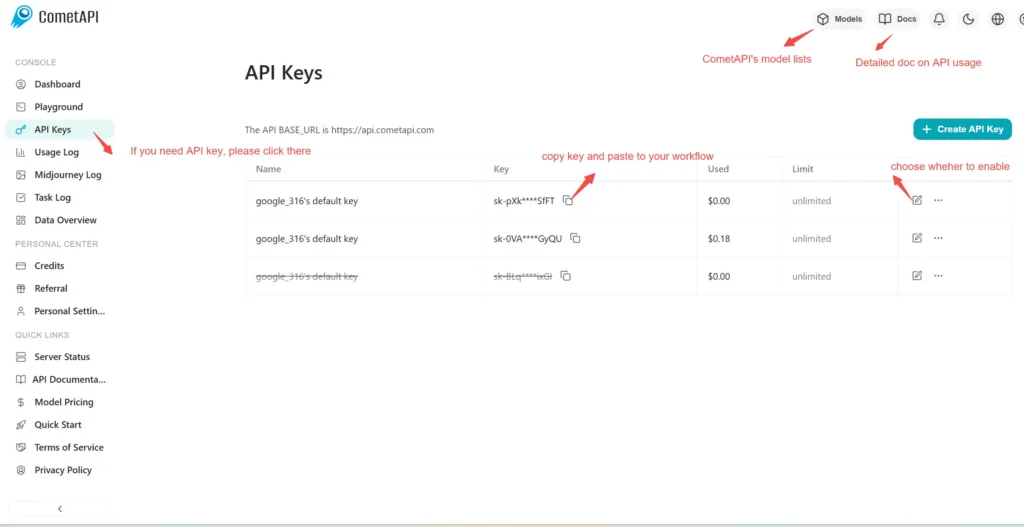

- Log in to cometapi.com. If you are not our user yet, please register first.

- Sign into your CometAPI console.

- Get an API key to use as the access credential for the interface: in the personal center, click “Add Token” under API token, submit, and copy the generated token key (sk-xxxxx).

Use Method

- Select the `grok-4-1-fast-reasoning` or `grok-4-1-fast-non-reasoning` endpoint to send the API request and set the request body. The request method and request body are documented in the API doc on our website, which also provides an Apifox test for your convenience.

- Replace <YOUR_API_KEY> with your actual CometAPI key from your account.

- Insert your question or request into the content field—this is what the model will respond to.

- Process the API response to get the generated answer.
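
The last step, processing the response, might be sketched as follows. This assumes the response body follows the widely used chat-completions shape, with the answer at `choices[0].message.content`; that shape is not spelled out in this article, so check it against the API doc. `extract_answer` is a hypothetical helper.

```python
import json


def extract_answer(response_body: str) -> str:
    """Pull the generated text out of a chat-completions style JSON response."""
    data = json.loads(response_body)
    choices = data.get("choices") or []
    if not choices:
        # ASSUMPTION: failed calls carry an "error" field instead of choices.
        raise ValueError(f"no choices in response: {data.get('error', data)}")
    return choices[0]["message"]["content"]
```

Raising on an empty `choices` list surfaces authentication or quota errors early instead of returning an empty answer.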

CometAPI provides a fully compatible REST API for seamless migration. Key details for Chat:

- Base URL: https://api.cometapi.com/v1/chat/completions

- Model Names: `grok-4-1-fast-reasoning` / `grok-4-1-fast-non-reasoning`

- Authentication: `Bearer YOUR_CometAPI_API_KEY` header

- Content-Type: `application/json`.
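
Putting these details together, a request could be assembled roughly as in the sketch below, which uses only the Python standard library. The URL, model names, and headers come from the list above. The `messages` body shape is an assumption based on the chat-completions convention, and `build_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

API_URL = "https://api.cometapi.com/v1/chat/completions"


def build_request(api_key: str, prompt: str,
                  model: str = "grok-4-1-fast-non-reasoning") -> urllib.request.Request:
    """Assemble a POST request carrying the prompt and the auth headers."""
    body = {
        "model": model,
        # ASSUMPTION: chat-completions style message list.
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Perform the HTTP call and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from the network call makes it possible to inspect or log the payload before anything is sent. Calling `send(build_request(api_key, "Hello"))` performs the actual request.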
