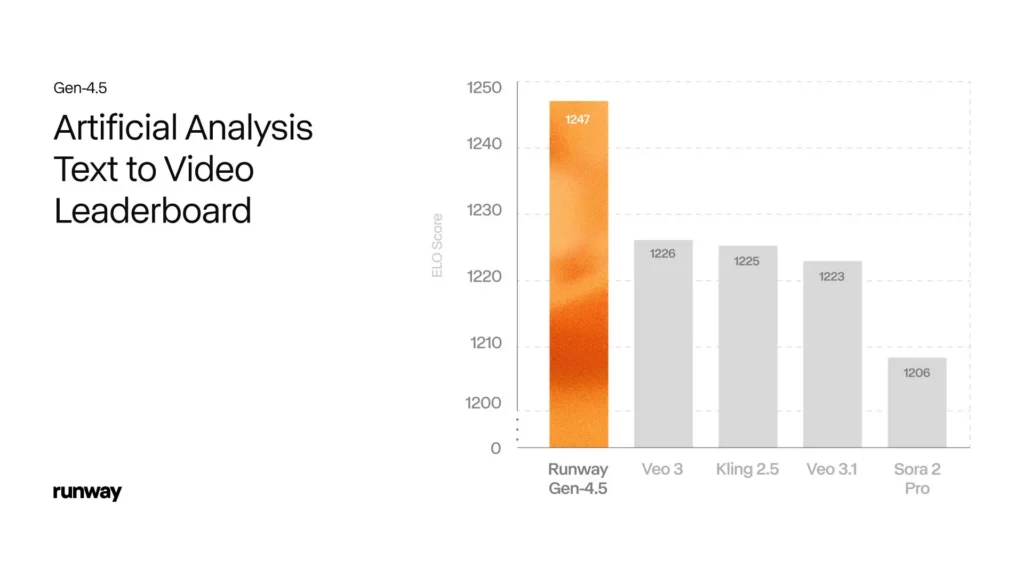

Runway Gen-4.5 is the company’s latest flagship text-to-video model, announced December 1, 2025. It is positioned as an incremental but meaningful evolution of the Gen-4 family, with focused improvements in motion quality, prompt adherence, and temporal/physical realism. These are exactly the areas that have historically separated “good” AI video from “believable” AI video. At announcement, Gen-4.5 led the Artificial Analysis text-to-video leaderboard with 1,247 Elo points. It is tuned for cinematic, controllable outputs, while still carrying typical generative-AI limitations such as small-detail artifacts and occasional causal errors.

Below is a deep, practical, and (where possible) evidence-backed look at what Gen-4.5 is, what’s new compared with Gen-4, how it stacks up against competitors like Google’s Veo 3.1 and OpenAI’s Sora 2, what real-world performance signals and benchmark claims show, and a frank discussion of limitations, risks, and best practices.

What is Runway Gen-4.5?

Runway Gen-4.5 is the latest text-to-video generation model from Runway, released as an iterative but substantial upgrade on the company’s Gen-4 line. Runway positions Gen-4.5 as a “new frontier” for video generation, emphasizing three primary improvements over earlier releases: markedly improved physical accuracy (objects carrying realistic weight and momentum), stronger prompt adherence (what you ask for is more reliably what you get), and higher visual fidelity across motion and time (details like hair, fabric weave and surface specularity remain coherent across frames). Gen-4.5 currently sits at the top of independent human-judged leaderboards used for text-to-video benchmarking.

Where did Runway Gen-4.5 come from and why does it matter?

Runway’s video models have evolved quickly from Gen-1 through Gen-3 Alpha to Gen-4. Gen-4.5 is presented as a consolidation and optimization of architectural upgrades, pretraining data strategies, and post-training techniques intended to maximize dynamics, temporal consistency, and controllability. For creators and production teams, these improvements aim to make AI-generated clips functionally useful in previsualization, advertising/marketing content, and short-form narrative production by reducing the “rough draft” feel that earlier text-to-video models often exhibited.

4 headline features of Runway Gen-4.5

1) Improved physical realism and motion dynamics

Runway Gen-4.5 emphasizes smoother, more physically plausible motion, focusing on realistic object behavior: weight, inertia, liquids, cloth, and plausible collisions. The result is sequences where interactions look grounded rather than “floaty.” In Runway’s demos and in my own tests, the model shows improved object trajectories, more realistic camera motion, and fewer of the floating artifacts that plagued earlier video models. This is one of the headline upgrades over Gen-4.

2) Visual fidelity and style controls

Runway Gen-4.5 extends Runway’s control modes (text-to-video, image-to-video, video-to-video, keyframes) and improves photorealistic rendering, stylization, and cinematic composition. Runway claims Gen-4.5 can generate photoreal clips that are difficult to distinguish from real footage in short sequences, especially when combined with a good reference image or keyframes.

3) Better prompt adherence and compositional awareness

The model shows improved fidelity when prompts include multiple actors, camera directions, or cross-scene continuity constraints, and it follows instructions more reliably than prior generations. Higher accuracy in following descriptive prompts means fewer hallucinated or irrelevant elements across a clip.

4) Higher visual detail and temporal stability

Surface texture, hair/filament continuity, and lighting consistency across frames are noticeably improved, and characters and objects are less likely to change appearance mid-clip. Runway claims these gains were made while preserving Gen-4’s latency profile.

One of the more production-oriented advances is the model’s improved handling of character facial expressions and implied emotion across shots. Gen-4.5 is not a substitute for trained actors, but it better preserves emotional continuity (a character’s expression persists through a camera move, for instance) and can generate plausible performance cues from compact directives like “anxious smile, glancing away, breathes sharply.”

How does Runway Gen-4.5 perform in benchmarks and real tests?

Runway reports an Elo score of 1,247 on the Artificial Analysis text-to-video leaderboard (as of the announcement), placing Gen-4.5 at the top of that benchmark at the time of reporting. Benchmarks like these aggregate pairwise human or automated preference judgments across many model outputs, so a high score reflects an overall preference trend rather than superiority on every prompt.
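To make the Elo figure more concrete, here is a minimal Python sketch of the standard Elo model that many preference arenas are built on. The K-factor of 32 and the example ratings are illustrative assumptions, and the Artificial Analysis leaderboard may compute its scores differently (for example, by fitting ratings over all votes at once rather than updating them one comparison at a time).

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo: expected probability that model A is preferred over model B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, a_preferred: bool,
               k: float = 32.0) -> tuple[float, float]:
    """Apply one pairwise preference vote; k=32 is an illustrative K-factor, not the leaderboard's."""
    expected_a = expected_score(rating_a, rating_b)
    actual_a = 1.0 if a_preferred else 0.0
    delta = k * (actual_a - expected_a)
    # Zero-sum update: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Hypothetical ratings with a 20-point gap.
    print(f"{expected_score(1220, 1200):.3f}")  # → 0.529
    # Two equally rated models; A wins one vote.
    print(elo_update(1200, 1200, a_preferred=True))  # → (1216.0, 1184.0)
```

Under this model, a 20-point gap corresponds to only about a 53% expected preference rate, which is why narrow Elo leads are best read as modest, content-dependent advantages.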

Practical performance (what users can expect)

- Clip lengths & resolution: Gen-4.5 is currently optimized for short cinematic clips (single-shot outputs commonly 4–20s at HD/1080p). Runway emphasized delivering higher fidelity without adding latency vs Gen-4.

- Render times & cost: Runway’s messaging is that costs/latency are comparable to Gen-4 across subscription tiers; real-world times will vary with chosen resolution, quality setting and queue load.

How does Runway Gen-4.5 differ from Gen-4?

Gen-4 established Runway’s production focus: consistent characters, a set of control modes (image→video, keyframing, video→video), and an emphasis on user workflows. Gen-4.5 keeps that foundation but pushes world modeling (physics, motion) and prompt adherence further without sacrificing throughput. In practice, Gen-4 may still be excellent for fast, style-driven tasks and lighter budgets; Gen-4.5 is the upgrade path when you need more believable dynamics and fine-grained control.

What changed technically (high level)

Runway Gen-4.5 is portrayed as an evolution rather than a complete architectural rewrite. Runway’s materials say the model benefits from improved pre-training data efficiency and post-training techniques (e.g., targeted fine-tuning and temporal regularization). Practically, that translates to better weight/motion modeling, more coherent multi-element scenes, and improved retention of high-frequency details (hair, cloth weave) across frames.

Practical differences creators will notice

- Better physical behavior: objects move as if they have real mass, and liquids behave more plausibly.

- Fewer identity breaks: characters and objects are less likely to change appearance mid-clip.

- Same speed, higher quality: Runway states performance (latency) is comparable to Gen-4 while quality rises. That makes Gen-4.5 attractive to production teams who can’t accept large rendering delays.

When to pick Gen-4 vs Gen-4.5

- Use Gen-4 when you need a cheaper, fast proof-of-concept or when existing pipelines/controls are already tuned to that engine.

- Use Gen-4.5 when you need improved realism, complex multi-object interactions, or production-grade output where motion physics and prompt accuracy matter (e.g., product visualizations, VFX previsualization, character-driven shorts).

Compatibility with Gen-4 controls. All the editor modes Runway supports (image→video, keyframes, video→video, actor references) are being rolled into Gen-4.5 so creators can reuse familiar controls with better results.

How does Gen-4.5 compare to Veo 3.1 and Sora 2?

How does it compare to Google’s Veo 3.1?

Veo 3.1 is the latest update to Google’s high-fidelity Veo text-to-video family, following Veo 3. It is praised for cinematic texture, strong style rendering, and tight color/lighting control. Independent comparisons indicate that Veo 3.1 excels at mood and stylized scenes and is widely available through Google’s APIs, but it can struggle with multi-object physics and long-range temporal coherence compared with the strongest competitors. Early blind tests and user write-ups suggest that Gen-4.5 pulls ahead in motion plausibility and prompt adherence on physics-heavy prompts, while Veo often wins on stylized, painterly, or cinematic single-scene tests.

Where Veo tends to lead: audio fidelity and structured narrative features (Flow/Veo Studio), and close integration into Google’s ecosystem (Gemini API/Vertex AI).

Where Gen-4.5 tends to lead: blind human preference tests for visual realism, prompt adherence, and complex motion behavior (per Video Arena rankings cited by Runway). In several public blind comparisons Gen-4.5 has a narrow lead in Elo scoring over Veo variants, though the margin and meaning vary by content type.

How does it compare to OpenAI’s Sora 2?

Sora 2 (OpenAI) emphasizes physical accuracy, synchronized audio (including dialogue and sound effects), and controllability. It often does well at producing coherent animated scenes from high-level narrative cues and in workflows where audio and dialogue are central to the generation pipeline.

Where Sora 2 tends to lead: integrated audio generation and multimodal sync in certain settings; tends to produce highly atmospheric, narrative-oriented clips.

Where Gen-4.5 tends to lead: perceived visual realism, prompt fidelity, and motion consistency, according to the independent blind comparisons cited by Runway. Again, the practical choice depends on your priorities: if native audio generation and integrated tools are critical, Sora 2 or Veo may be preferable; if pure visual fidelity for complex scenes is the priority, Gen-4.5’s blind-test advantage is meaningful.

Practical comparison table (summary)

| Area | Runway Gen-4.5 | Runway Gen-4 (prior) | Google Veo 3.1 | OpenAI Sora 2 |
|---|---|---|---|---|
| Release / positioning | Dec 2025: quality and fidelity bump over Gen-4; top benchmark score at launch (1,247 Elo) | Earlier Gen-4: major step for consistency and controllability | Google’s video generator; native audio; standard and Fast variants | OpenAI’s flagship video+audio model; emphasizes physical accuracy and synchronized audio |
| Core strengths | Motion quality, prompt fidelity, cinematic visuals, API integration | Character continuity, multi-shot consistency, controllability | Fast 8-second outputs, native audio/dialogue generation, optimized for speed and UX | Physics and realism, synchronized sound/dialogue, controllability |
| Output length / formats | Short cinematic clips; supports text→video, image→video, keyframes, and more | Short clips; similar control modes | 8-second high-quality videos; Veo 3.1 Fast option | 720p/1080p outputs with audio; emphasis on fidelity |
| Native audio | Not the headline feature (focus is visual fidelity), but Runway supports audio workflows via its tooling | Limited native audio generation | Native audio generation (sound effects, dialogue), with a focus on audio quality | Synchronized audio and sound effects are explicit features |
| Typical limitations | Small-detail artifacts (faces, crowds), occasional causal/temporal errors | More artifacts and motion inconsistency than Gen-4.5 | Short duration is a design tradeoff between quality and length | Specific failure modes on complex scenes; still evolving |

- Visual realism & motion: Gen-4.5 > Veo 3.1 ≈ Sora 2 (varies by scene).

- Audio & native sound: Veo 3.1 ≥ Sora 2 > Runway (Runway offers audio tools in its workflow, but Veo and Sora build native audio generation more deeply into their products).

- Controls & editing: Runway (keyframes, image→video, reference continuity) and Veo (Flow Studio) both offer strong control; Sora focuses on synced multimodal controls.

- In short: Sora 2 is strong at narrative continuity; Veo 3.1 is strong at cinematic texture; Gen-4.5 is strong at motion realism and controllability.

What concrete limitations and risks remain with Gen-4.5?

No model is perfect, and Gen-4.5 has known limitations and real-world risks to consider before adoption.

Technical limitations

- Edge-case physics and causal errors: While much improved, the model still produces occasional causal missequencing (for example, an effect preceding its cause) and subtle object permanence failures when scenes get very complex. These are less frequent but still present.

- Long-form coherence: Like most current text-to-video models, Gen-4.5 is optimized for short clips (seconds long). Generating extended scenes or full sequences still requires stitching, editorial intervention, or hybrid workflows.

- Identity and consistency at scale: Producing hundreds of shots with the exact same character acting consistently remains workflow-heavy; Gen-4.5 helps but doesn’t obviate reference design systems or centralized asset pipelines.
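As the long-form coherence point notes, longer scenes are usually built from a series of short generations stitched together in editing. The sketch below shows one simple way to split a target runtime into equal-length clips under a per-clip duration cap. The function name and the 10-second cap in the example are hypothetical; check Runway’s current duration options for Gen-4.5 before relying on any specific value.

```python
import math


def plan_segments(total_seconds: float, max_clip_seconds: float) -> list[float]:
    """Split a target runtime into the fewest equal-length clips that fit under a per-clip cap."""
    if total_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    # Fewest clips such that no single clip exceeds the cap.
    count = math.ceil(total_seconds / max_clip_seconds)
    return [total_seconds / count] * count


if __name__ == "__main__":
    # Hypothetical: a 45-second scene with an assumed 10-second cap per generation.
    print(plan_segments(45, 10))  # → [9.0, 9.0, 9.0, 9.0, 9.0]
```

Equal-length segments keep pacing even; in practice you would also align cuts with story beats and reuse the same reference images or keyframes across segments to limit identity drift.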

Safety, misuse, and ethical risks

- Deepfake / impersonation risk: Any higher-fidelity video generator increases the risk of realistic but deceptive media. Organizations should implement safeguards (watermarking, content policies, identity verification flows) and monitor misuse risk.

- Copyright and dataset provenance: Training data provenance remains a broader industry concern. Creators and rights holders should be aware that outputs may reflect learned patterns from copyrighted material, which raises legal and ethical questions about reuse in commercial contexts.

- Bias and representational harms: Generative models may reproduce biases present in training data (e.g., over/under-representation, stereotypical portrayals). Rigorous testing and in-pipeline mitigation strategies are still necessary.

Conclusion — Where Gen-4.5 fits in the evolving AI video landscape

Runway Gen-4.5 represents a significant step forward in text-to-video realism and controllability. It’s currently ranked highly in independent blind preference leaderboards, and Runway’s product messaging and early reporting position it as a practical upgrade for creators who need more convincing motion, better prompt fidelity, and improved temporal coherence without trading off generation speed. At the same time, competing systems from Google (Veo 3.1) and OpenAI (Sora 2) continue to push complementary strengths such as integrated audio, productized story/narrative tooling and deeper ecosystem integrations. Choosing the right platform still depends on the project: whether you prioritize visual realism, native audio, platform integration or governance controls.

Gen-4.5 is rolling out across plans with pricing comparable to Gen-4.

Developers can access Veo 3.1, Sora 2, Runway/gen4_aleph, and other models through CometAPI, which keeps model versions in sync with the official releases. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official rates to help you integrate.

