Mistral Large 3 is the newest frontier model released by Mistral AI, announced in early December 2025. It is an open-weight, production-oriented, multimodal foundation model built around a granular sparse Mixture-of-Experts (MoE) design. It is intended to deliver frontier-level reasoning, long-context understanding, and vision + text capabilities while keeping inference practical through sparsity and modern quantization. Mistral describes Large 3 as having 675 billion total parameters, with ~41 billion active parameters at inference and a 256k-token context window in its default configuration: a combination designed to push both capability and scale without forcing every inference to touch all parameters.

What is Mistral Large 3? How does it work?

What is Mistral Large 3?

Mistral Large 3 is Mistral AI’s flagship frontier model in the Mistral 3 family — a large, open-weight, multimodal Mixture-of-Experts (MoE) model released under an Apache-2.0 license. It’s designed to deliver “frontier” capability (reasoning, coding, long-context understanding, multimodal tasks) while keeping inference compute sparse by activating only a subset of the model’s experts for each token. Mistral’s official materials describe Large 3 as a model with ~675 billion total parameters and roughly 40–41 billion active parameters used per forward pass; it also includes a vision encoder and is engineered to handle very long context windows (Mistral and partners cite up to 256k tokens).

In short: it’s a MoE model that packs huge capacity in total (so it can store diverse specialties) but only computes on a much smaller active subset at inference time — aiming to give frontier performance more efficiently than a dense model of comparable total size.

Core architecture: Granular Mixture-of-Experts (MoE)

At a high level, Mistral Large 3 replaces some (or many) feed-forward sublayers of a transformer with MoE layers. Each MoE layer contains:

- Many experts — independent sub-networks (normally FFN blocks). In aggregate they produce the model’s very large total parameter count (e.g., hundreds of billions).

- A router / gating network — a small network that looks at the token representation and decides which expert(s) should process that token. Modern MoE routers typically pick only the top-k experts (sparse gating), often k=1 or k=2, to keep compute low.

- Sparse activation — for any given token, only the selected experts run; the rest are skipped. This is where the efficiency comes from: total stored parameters >> active parameters computed per token.
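The three components listed above can be sketched in a few lines of plain Python. This is a toy illustration and not Mistral’s implementation: the expert functions, the router scores, the choice of k=2, and the softmax over only the selected scores are all assumptions made for the example. In a real model the router scores come from a small learned layer applied to the token representation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k=2):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; other experts never run."""
    out = [0.0] * len(x)
    for idx, weight in route_top_k(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out

# Toy experts (hypothetical): each one scales its input by a different factor.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical router scores for a single token.
router_logits = [0.1, 2.0, -1.0, 2.0]

selected = route_top_k(router_logits, k=2)
output = moe_layer([1.0, -1.0], experts, router_logits, k=2)
print(selected, output)
```

Only two of the four toy experts are evaluated for this token, which is the source of the compute savings: the other experts’ parameters are stored but not used.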

Mistral calls its design granular MoE to emphasize that the model has many small, specialized experts and a routing scheme optimized to scale across many GPUs and long contexts. The result is very large representational capacity with per-token compute closer to that of a much smaller dense model. Two parameter counts describe this trade-off:

- Total Parameters: 675 billion. This is the sum of all parameters stored across every expert and the rest of the transformer. It indicates the model’s gross capacity (how much knowledge and specialization it can hold).

- Active Parameters: 41 billion. This is the subset of parameters actually computed in a typical forward pass, because the router activates only a few experts per token. It is the metric that most closely tracks per-token inference compute; the memory footprint is still governed by the total parameter count, since every expert must stay loaded. Mistral’s public materials list ~41B active parameters; some model pages show slightly different counts for specific variants (e.g., 39B), which may reflect variant/instruct versions or rounding.
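To make the ratio between the two counts concrete, here is a small back-of-the-envelope calculation. The parameter counts come from the text; the rule of thumb of roughly 2 FLOPs per active parameter per token is a common approximation that ignores attention cost over the context, so treat the output as an order-of-magnitude estimate rather than a measured figure.

```python
TOTAL_PARAMS = 675e9   # total parameters, as stated above
ACTIVE_PARAMS = 41e9   # active parameters per token, as stated above

def active_fraction(active, total):
    """Share of the stored parameters that participate in one forward pass."""
    return active / total

def approx_forward_flops_per_token(params):
    """Assumed rule of thumb: ~2 FLOPs (multiply + add) per parameter used."""
    return 2 * params

fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
moe_flops = approx_forward_flops_per_token(ACTIVE_PARAMS)
dense_flops = approx_forward_flops_per_token(TOTAL_PARAMS)

print(f"active fraction: {fraction:.1%}")
print(f"approx. FLOPs per token (MoE): {moe_flops:.2e}")
print(f"approx. FLOPs per token (dense model of the same total size): {dense_flops:.2e}")
print(f"compute ratio dense/MoE: {dense_flops / moe_flops:.1f}x")
```

Under these assumptions, a dense model with the same total parameter count would need roughly 16 times more compute per token.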

Training Configuration:

- Trained from scratch using 3000 NVIDIA H200 GPUs;

- Data covers multiple languages, multiple tasks, and multiple modalities;

- Supports image input and cross-language inference.

Feature table of Mistral Large 3

| Category | Technical Capability Description |
|---|---|
| Multimodal Understanding | Supports image input and analysis, enabling comprehension of visual content during dialogue. |
| Multilingual Support | Natively supports 10+ major languages (English, French, Spanish, German, Italian, Portuguese, Dutch, Chinese, Japanese, Korean, Arabic, etc.). |
| System Prompt Support | Highly consistent with system instructions and contextual prompts, suitable for complex workflows. |
| Agent Capabilities | Supports native function calling and structured JSON output, enabling direct tool invocation or external system integration. |
| Context Window | Supports an ultra-long context window of 256K tokens, among the longest of open-source models. |
| Performance Positioning | Production-grade performance with strong long-context understanding and stable output. |
| Open-source License | Apache 2.0 License, free for commercial use and modification. |
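As an illustration of the function calling and structured JSON output listed under Agent Capabilities, the sketch below shows how an application might describe a tool and dispatch a JSON tool call returned by a model. The `get_weather` tool, its schema, and the shape of the tool-call JSON are hypothetical; the schema follows the JSON-schema style used by many chat APIs, and the exact format should be taken from your provider’s documentation.

```python
import json

# Hypothetical tool: the name, schema, and implementation are illustrative only.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

TOOLS = {"get_weather": get_weather}

# Tool description in the JSON-schema style many chat APIs accept (assumed shape).
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return a short weather report for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

def dispatch_tool_call(raw_call: str) -> str:
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(raw_call)
    name = call["name"]
    args = call.get("arguments", {})
    if isinstance(args, str):  # some APIs return the arguments as a JSON string
        args = json.loads(args)
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**args)

# Example tool call, written by hand to stand in for model output.
result = dispatch_tool_call('{"name": "get_weather", "arguments": {"city": "Paris"}}')
print(result)
```

Rejecting unknown tool names before calling anything keeps a malformed or unexpected model output from reaching application code.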

Overview:

- Performance is comparable to mainstream closed-source models;

- Outstanding performance in multilingual tasks (especially in non-English and non-Chinese scenarios);

- Possesses image understanding and instruction following capabilities;

- Provides a base version (Base) and an instruction-tuned version (Instruct), with a reasoning-optimized version (Reasoning) coming soon.

How does Mistral Large 3 perform on benchmarks?

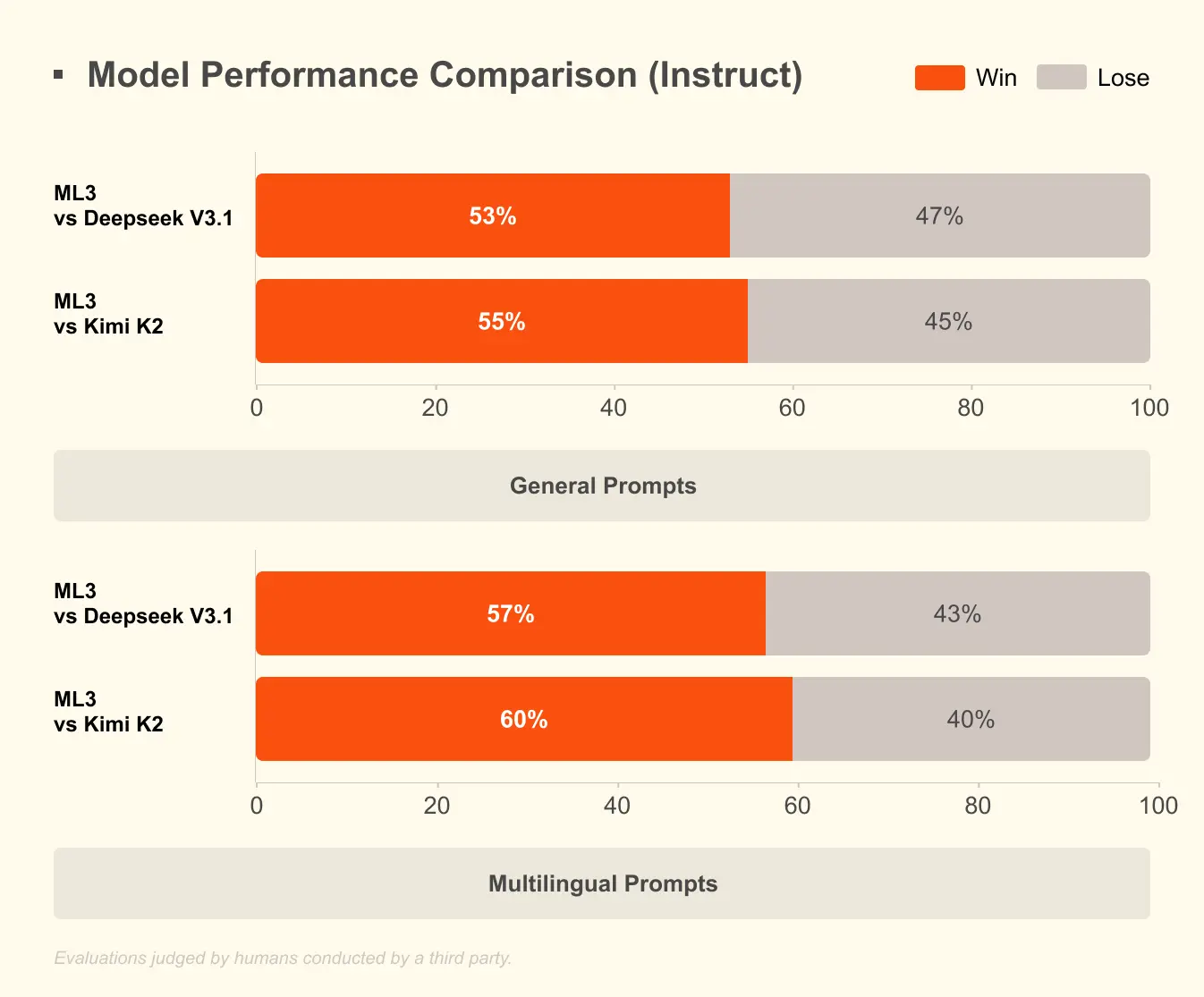

Early public benchmarks and leaderboards show Mistral Large 3 placing highly among open-source models: it ranks #2 among OSS non-reasoning models on LMArena, and early reports cite top-tier leaderboard positions on a variety of standard tasks (e.g., GPQA, MMLU, and other reasoning and general-knowledge suites).


Strengths demonstrated so far

- Long-document comprehension and retrieval-augmented tasks: The combination of long context and sparse capacity gives Mistral Large 3 an advantage on long-context tasks (document QA, summarization across large documents).

- General knowledge and instruction following: In instruct-tuned variants Mistral Large 3 is strong on many “general assistant” tasks and system-prompt adherence.

- Energy and throughput (on optimized hardware): NVIDIA’s analysis shows impressive energy efficiency and throughput gains when Mistral Large 3 is run on GB200 NVL72 with MoE-specific optimizations — numbers that translate directly to per-token cost and scalability for enterprises.

How can you access and use Mistral Large 3?

Hosted cloud access (quick path)

Mistral Large 3 is available through multiple cloud and platform partners:

- Hugging Face hosts model cards and inference artifacts (model bundles including instruct variants and optimized NVFP4 artifacts). You can call the model through Hugging Face Inference API or download compatible artifacts.

- Azure / Microsoft Foundry announced Mistral Large 3 availability for enterprise workloads.

- NVIDIA published accelerated runtimes and optimization notes for the GB200/H200 families, and partners such as Red Hat published vLLM instructions.

These hosted routes let you get started quickly without dealing with MoE runtime engineering.

Running locally or on your infra (advanced)

Running Mistral Large 3 locally or on private infrastructure is feasible but nontrivial. The main options are:

- Hugging Face artifacts + accelerate/transformers — can be used for smaller variants or if you have a GPU farm and appropriate sharding tools. The model card lists platform-specific constraints and recommended formats (e.g., NVFP4).

- vLLM — an inference server optimized for large LLMs and long contexts; Red Hat and other partners published guides to run Mistral Large 3 on vLLM to get efficient throughput and latency.

- Specialized stacks (NVIDIA Triton / NVL72 / custom kernels) — needed for best latency/efficiency at scale; NVIDIA published a blog on accelerating Mistral 3 with GB200/H200 and NVL72 runtimes.

- Ollama / local VM managers — community guides show local setups (Ollama, Docker) for experimentation; expect large RAM/GPU footprints and the need to use model variants or quantized checkpoints.

Example: Hugging Face inference (python)

This is a simple example using the Hugging Face Inference API (suitable for instruction variants). Set the HF_API_KEY environment variable to your access token, and set MODEL to the identifier from the model card:

    # Example: call Mistral Large 3 via Hugging Face Inference API
    import json
    import os

    import requests

    HF_API_KEY = os.environ.get("HF_API_KEY")
    MODEL = "mistralai/Mistral-Large-3-675B-Instruct-2512"

    headers = {"Authorization": f"Bearer {HF_API_KEY}", "Content-Type": "application/json"}
    payload = {
        "inputs": "Summarize the following document in 3 bullet points: <paste your long text here>",
        "parameters": {"max_new_tokens": 256, "temperature": 0.0},
    }

    r = requests.post(
        f"https://api-inference.huggingface.co/models/{MODEL}",
        headers=headers,
        data=json.dumps(payload),
    )
    print(r.json())

Note: For very long contexts (tens of thousands of tokens), check the provider’s streaming / chunking recommendations and the model variant’s supported context length.

Example: starting a vLLM server (conceptual)

vLLM is a high-performance inference server used by enterprises. Below is a conceptual start (check vLLM docs for flags, model path, and MoE support):

    # Conceptual example: adjust the model path, GPU count, and limits to your environment
    vllm serve /models/mistral-large-3-instruct \
      --tensor-parallel-size 4 \
      --max-num-seqs 8 \
      --max-model-len 65536

Then use a Python client or the HTTP API to send requests. For MoE models, make sure that your vLLM build and runtime support sparse expert kernels and the model’s checkpoint format (NVFP4/FP8/BF16).
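As a minimal sketch of the HTTP route, the snippet below builds a chat request for vLLM’s OpenAI-compatible endpoint using only the Python standard library. The base URL assumes vLLM’s default local port, and the model name is an assumption that must match whatever the server was started with; the prompt is only an example.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"        # assumption: default local vLLM address
MODEL = "/models/mistral-large-3-instruct"   # assumption: must match the served model name

def build_chat_request(prompt, max_tokens=256, temperature=0.0):
    """Build the JSON body for an OpenAI-style chat completion request."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }

def chat(prompt, timeout=120):
    """Send the prompt to the server and return the text of the first choice."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        data = json.load(response)
    return data["choices"][0]["message"]["content"]

# Usage (requires a running server):
# print(chat("Summarize the Apache 2.0 license in two sentences."))
```

Because the endpoint follows the OpenAI request format, existing OpenAI-compatible client libraries can usually be pointed at the same base URL instead of hand-building requests.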

Practical best practices for deploying Mistral Large 3

Choose the right variant and precision

- Start with an instruction-tuned checkpoint for assistant workflows (the model family ships an Instruct variant). Use base models only when you plan to fine-tune or apply your own instruction tuning.

- Use optimized low-precision variants (NVFP4, FP8, BF16) when available for your hardware; these provide massive efficiency wins with minimal quality degradation if the checkpoint is produced and validated by the model vendor.

Memory, sharding, and hardware

- Don’t expect to run the 675B total parameter checkpoint on a single commodity GPU — even though only ~41B are active per token, the full checkpoint is enormous and requires sharding strategies plus high-memory accelerators (GB200/H200 class) or orchestrated CPU+GPU offload.

- Use model parallelism + expert placement: MoE models benefit from placing experts across devices to balance routing traffic. Follow vendor guidance on expert assignment.
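A rough sizing calculation shows why sharding is unavoidable. The sketch below estimates the weight footprint at several precisions and the minimum number of GPUs needed just to hold the weights. The bytes-per-parameter values are approximations (NVFP4 is treated as 4 bits and its scale-factor overhead is ignored), the 80% usable-memory fraction is an assumed reserve for KV cache and activations, and the 141 GB figure is the H200’s memory capacity.

```python
import math

TOTAL_PARAMS = 675e9  # total parameters, as stated above

# Approximate storage cost per parameter (assumption: ignores quantization metadata).
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "NVFP4": 0.5}

H200_MEM_GB = 141  # H200 memory capacity in GB

def weight_gigabytes(params, precision):
    """Approximate size of the weights alone, in GB."""
    return params * BYTES_PER_PARAM[precision] / 1e9

def min_gpus_for_weights(params, precision, gpu_mem_gb, usable_fraction=0.8):
    """Minimum GPU count to hold the weights, keeping some memory in reserve."""
    usable_gb = gpu_mem_gb * usable_fraction
    return math.ceil(weight_gigabytes(params, precision) / usable_gb)

for precision in BYTES_PER_PARAM:
    size_gb = weight_gigabytes(TOTAL_PARAMS, precision)
    gpus = min_gpus_for_weights(TOTAL_PARAMS, precision, H200_MEM_GB)
    print(f"{precision}: ~{size_gb:.0f} GB of weights, at least {gpus} H200-class GPUs")
```

These are lower bounds. Real deployments typically round up to a parallelism degree the runtime supports and leave more headroom when serving long contexts.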

Long-context engineering

- Chunk and retrieve: For many long-doc tasks, combine a retrieval component with the 256k context to keep latency and cost manageable — i.e., retrieve relevant chunks, then pass a focused context to the model.

- Streaming and windowing: For continuous streams, maintain a sliding window and summarize older context into condensed notes to keep the model’s attention budget effective.
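The chunk-and-retrieve pattern can be sketched with the standard library alone. This is a deliberately simple version: chunks are fixed-size word windows with overlap, and relevance is scored by word overlap with the query. A production system would more likely use embeddings and a vector index; the function names, chunk sizes, and prompt layout here are illustrative assumptions.

```python
import re

def chunk_text(text, chunk_words=200, overlap=50):
    """Split text into overlapping windows of roughly chunk_words words."""
    if overlap >= chunk_words:
        raise ValueError("overlap must be smaller than chunk_words")
    words = text.split()
    if not words:
        return []
    step = chunk_words - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_words]) for i in range(0, last_start, step)]

def tokenize(s):
    """Lowercase word set used for the overlap score."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))

def score(query, chunk):
    """Number of distinct query words that also appear in the chunk."""
    return len(tokenize(query) & tokenize(chunk))

def retrieve(query, chunks, top_n=3):
    """Return the top_n chunks ranked by word overlap with the query."""
    return sorted(chunks, key=lambda c: score(query, c), reverse=True)[:top_n]

def build_prompt(query, chunks, top_n=3):
    """Assemble a focused prompt from the most relevant chunks."""
    context = "\n\n".join(retrieve(query, chunks, top_n))
    return f"Context:\n{context}\n\nQuestion: {query}"

# Tiny hand-written example.
sample_chunks = [
    "the router picks experts per token",
    "apache license terms",
    "vision encoder handles images",
]
best = retrieve("how does the router pick experts", sample_chunks, top_n=1)
print(build_prompt("how does the router pick experts", sample_chunks, top_n=1))
```

Passing only the top-ranked chunks keeps the prompt far below the 256k limit, which lowers latency and cost while still leaving room for larger contexts when a task needs them.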

Prompt engineering for MoE models

- Prefer explicit instructions: Instruction-tuned checkpoints respond better to clear tasks and examples. Use few-shot examples in the prompt for complex structured output.

- Chain-of-thought and system messages: For reasoning tasks, structure prompts that encourage stepwise reasoning and verify intermediate results. But beware: prompting chain-of-thought increases token consumption and latency.
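A small helper shows one way to combine a system message with few-shot examples. It produces the role/content message list used by most chat APIs; the helper name, the system prompt, and the examples are hypothetical and only illustrate the structure.

```python
def build_messages(system_prompt, examples, user_input):
    """Build a chat message list: system prompt, few-shot pairs, then the real input."""
    messages = [{"role": "system", "content": system_prompt}]
    for question, answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_input})
    return messages

# Hypothetical few-shot examples that demonstrate the expected JSON output.
examples = [
    ("Extract the city: 'Flights to Paris are delayed.'", '{"city": "Paris"}'),
    ("Extract the city: 'Heavy rain in Lyon today.'", '{"city": "Lyon"}'),
]

messages = build_messages(
    "You extract structured data. Reply with JSON only.",
    examples,
    "Extract the city: 'The conference moved to Berlin.'",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Each few-shot pair adds tokens to every request, so keep the examples short and include only as many as the output format requires.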

Conclusion

Mistral Large 3 is an important milestone in the open-weight model landscape: a 675B total / ~41B active MoE model with a 256k context window, multimodal abilities, and deployment recipes that have been co-optimized with major infrastructure partners. It offers a compelling performance-for-cost profile for enterprises that can adopt the MoE runtime and hardware stack, while still requiring careful evaluation for specialized reasoning tasks and operational readiness.

To begin, explore the capabilities of more AI models (such as Gemini 3 Pro) in the Playground and consult the API guide for detailed instructions. Before accessing them, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official ones to help you integrate.

Ready to go? Sign up for CometAPI today!