Technical specifications of GPT 5.2 Codex

| Item | GPT-5.2-Codex (public specs) |
|---|---|
| Model family | GPT-5.2 (Codex variant, coding/agentic optimized). |
| Input types | Text, Image (vision inputs for screenshots/diagrams). |
| Output types | Text (code, explanations, commands, patches). |
| Context window | 400,000 tokens (very long-context support). |
| Max output tokens | 128,000 (per call). |
| Reasoning effort levels | low, medium, high, xhigh (controls internal reasoning/compute allocation). |
| Knowledge cutoff | August 31, 2025 (model’s training cutoff). |
| Parent family / variants | GPT-5.2 family: gpt-5.2 (Thinking), gpt-5.2-chat-latest (Instant), gpt-5.2-pro (Pro); Codex is an optimized variant for agentic coding. |
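The context window and output limit in the table can be turned into a quick budget check before sending a large prompt. The sketch below is illustrative only: it assumes input and output tokens count against one shared 400,000-token window, which the table does not state explicitly, and the function names `max_input_tokens` and `fits` are hypothetical helpers, not part of any SDK.

```python
# Limits taken from the specification table above.
CONTEXT_WINDOW = 400_000
MAX_OUTPUT_TOKENS = 128_000


def max_input_tokens(requested_output: int = MAX_OUTPUT_TOKENS) -> int:
    """Return the input budget left after reserving room for the output.

    Assumption: input and output tokens share a single context window.
    """
    if not 0 < requested_output <= MAX_OUTPUT_TOKENS:
        raise ValueError("requested_output must be between 1 and 128,000")
    return CONTEXT_WINDOW - requested_output


def fits(prompt_tokens: int, requested_output: int = MAX_OUTPUT_TOKENS) -> bool:
    """True if a prompt of `prompt_tokens` leaves room for the requested output."""
    return prompt_tokens <= max_input_tokens(requested_output)
```

Under that assumption, reserving the full 128,000 output tokens leaves 272,000 tokens for input; requesting a smaller output frees more room for repository context.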

What is GPT-5.2-Codex

GPT-5.2-Codex is a purpose-built derivative of the GPT-5.2 family engineered for professional software engineering workflows and defensive cybersecurity tasks. It extends GPT-5.2’s general-purpose enhancements (improved long-context reasoning, tool-calling reliability, and vision understanding) with extra tuning and safety controls for real-world agentic coding: large refactorings, repository-scale edits, terminal interaction, and interpreting screenshots/diagrams commonly shared during engineering.

Main features of GPT-5.2 Codex

- Very long context handling: 400k token window makes it feasible to reason across whole repositories, long issue histories, or multi-file diffs without losing context.

- Vision + code: Generates, refactors, and migrates code across multiple languages; better at large refactorings and multi-file edits compared with prior Codex variants. Improved vision lets the model interpret screenshots, diagrams, charts, and UI surfaces shared in debugging sessions — useful for front-end debugging and reverse engineering UI bugs.

- Agentic/terminal competence: Trained and benchmarked (on Terminal-Bench) for terminal tasks and agent workflows such as compiling, running tests, installing dependencies, and making commits. When given terminal context, it has demonstrated the ability to run compilation flows, orchestrate package installs, configure servers, and reproduce dev environment steps.

- Configurable reasoning effort: low, medium, high, and xhigh levels. The xhigh mode is intended for deep, multi-step problem solving, allocating more internal compute/steps when the task is complex.
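As a rough illustration of the configurable reasoning effort, the sketch below validates a level and builds the matching request fragment. The `reasoning.effort` field name follows the convention of OpenAI's Responses API; whether a given gateway accepts `xhigh` in exactly this form is an assumption to verify against its API doc. `build_reasoning_options` is a hypothetical helper name.

```python
# Effort levels as listed in the specification table.
EFFORT_LEVELS = ("low", "medium", "high", "xhigh")


def build_reasoning_options(effort: str = "medium") -> dict:
    """Return a request-body fragment selecting a reasoning effort level.

    Assumption: the API expects {"reasoning": {"effort": <level>}}.
    """
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}, got {effort!r}")
    return {"reasoning": {"effort": effort}}
```

The returned dictionary can be merged into a larger request body, for example `{"model": "gpt-5.2-codex", **build_reasoning_options("xhigh")}`.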

Benchmark performance of GPT-5.2 Codex

OpenAI's reporting cites improved benchmark outcomes for agentic coding tasks:

- SWE-Bench Pro: ~56.4% accuracy on large real-world software engineering tasks (reported post-release for GPT-5.2-Codex).

- Terminal-Bench 2.0: ~64% accuracy on terminal/agentic task sets.

(These represent reported aggregate task success rates on complex, repository-scale benchmarks used to evaluate agentic coding capabilities.)

How GPT-5.2-Codex compares to other models

- vs GPT-5.2 (general): Codex is a specialized tuning of GPT-5.2: same core improvements (long context, vision) but additional training/optimization for agentic coding (terminal ops, refactoring). Expect better multi-file edits, terminal robustness, and Windows environment compatibility.

- vs GPT-5.1-Codex-Max: GPT-5.2-Codex advances Windows performance, context compression, and vision; benchmarks reported for 5.2 show improvements on SWE-Bench Pro and Terminal-Bench relative to predecessors.

- vs competing models (e.g., Google Gemini family): GPT-5.2 is competitive with or ahead of Gemini 3 Pro on many long-horizon and multimodal tasks. The practical edge for Codex is its agentic coding optimizations and IDE integrations; however, leaderboard positioning and winners depend on the task and evaluation protocol.

Representative enterprise use cases

- Large-scale refactors and migrations: Codex can manage multi-file refactors and iterative testing sequences while preserving high-level intent across long sessions.

- Automated code review & remediation: Codex’s ability to reason across repositories and run/validate patches makes it suitable for automated PR reviews, suggested fixes, and regression detection.

- DevOps / CI orchestration: Terminal-Bench improvements point to reliable orchestration of build/test/deploy steps in sandboxed flows.

- Defensive cybersecurity: Faster vulnerability triage, exploit reproduction for validation, and defensive CTF work in controlled, audited environments (note: requires strict access control).

- Design → prototype workflows: Convert mocks/screenshots into functional front-end prototypes and iterate interactively.

How to access GPT-5.2 Codex API

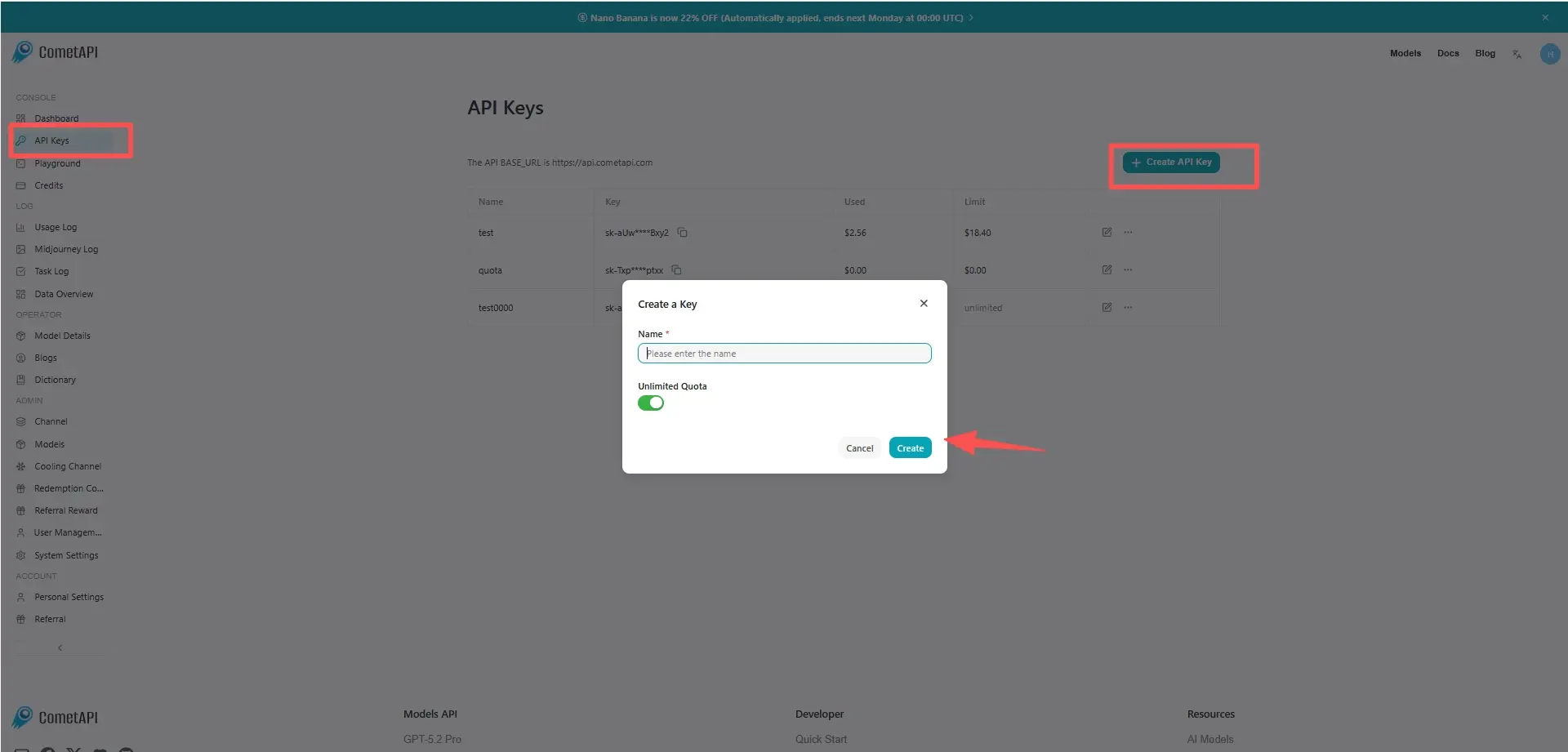

Step 1: Sign Up for API Key

Log in to cometapi.com; if you do not have an account yet, register first. In your CometAPI console, go to the API token section of the personal center, click “Add Token”, and submit to obtain your API key, which has the form sk-xxxxx.

Step 2: Send Requests to GPT 5.2 Codex API

Select the “gpt-5.2-codex” endpoint to send the API request and set the request body. The request method and request body are described in our website's API doc, which also provides an Apifox test for your convenience. Replace <YOUR_API_KEY> with your actual CometAPI key from your account. The base URL is the Responses endpoint.

Insert your question or request into the content field; this is what the model will respond to.
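A request along these lines might be assembled as in the sketch below, which uses only the Python standard library. Several details are assumptions to confirm against the API doc: the full URL `https://api.cometapi.com/v1/responses` is not given in the text above, the body shape (`model` plus an `input` list with `role` and `content`) follows the OpenAI Responses convention, and `build_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: the exact base URL is not stated above; check the API doc.
BASE_URL = "https://api.cometapi.com/v1/responses"


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build a POST request carrying the prompt in the content field."""
    body = {
        "model": "gpt-5.2-codex",
        "input": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        BASE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (performs a network call)."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        return json.load(resp)
```

Keeping request construction separate from the network call makes it possible to inspect or log the payload before anything is sent, for example `send(build_request("<YOUR_API_KEY>", "Refactor this function"))`.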

Step 3: Retrieve and Verify Results

After processing the request, the API responds with the task status and output data. Parse the response to get the generated answer.
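Checking the status and pulling out the generated answer could look like the sketch below. It assumes the response follows the OpenAI Responses shape (a top-level `status` plus an `output` list whose `message` items hold `output_text` blocks); the actual field names should be verified against the API doc, and `extract_text` is a hypothetical helper name.

```python
def extract_text(response: dict) -> str:
    """Return the concatenated answer text from a decoded API response.

    Assumption: the response has a "status" field and an "output" list of
    items, where items of type "message" contain "output_text" blocks.
    """
    status = response.get("status")
    if status != "completed":
        raise RuntimeError(f"request not completed: status={status!r}")

    parts = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip non-message items such as reasoning or tool calls
        for block in item.get("content", []):
            if block.get("type") == "output_text":
                parts.append(block.get("text", ""))
    return "".join(parts)
```

Raising on any status other than `completed` keeps a failed or incomplete task from being mistaken for an empty answer.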