GPT-4.5 In-Depth Review: Features, Price & Comparisons

In a livestream on Thursday, February 27, 2025, OpenAI revealed a research preview of GPT-4.5, the latest iteration of its flagship large language model. The company's representatives described the new version as their most capable and versatile chat model to date. It will initially be open to software developers and people with ChatGPT Pro subscriptions.

The release of GPT-4.5 marks the end of an era of sorts for OpenAI. In a post on X earlier this month, OpenAI CEO Sam Altman said it would be the last model the company introduces that does not use additional computing power to ponder queries before responding.

What is GPT 4.5?

GPT-4.5 is OpenAI's largest model yet. OpenAI has not disclosed its size; for reference, experts have estimated that its predecessor GPT-4 could have as many as 1.8 trillion parameters, the values that get tweaked when a model is trained. By scaling unsupervised learning, GPT-4.5 improves its ability to recognize patterns, draw connections, and generate creative insights without step-by-step reasoning.

GPT-4.5 scales unsupervised learning by increasing compute and data, combined with architecture and optimization innovations. As a result, it interacts more naturally with users, covers a wider range of knowledge, and better understands and responds to user intent, which leads to fewer hallucinations and more reliability across a wide range of topics.

What are the upgrades and features of GPT-4.5?

EQ upgrade:

The biggest feature of GPT-4.5 is its enhanced "emotional intelligence" (EQ), which provides a more natural, warm, and smooth conversation experience. OpenAI CEO Sam Altman wrote on social media that it is the first model that feels to him like talking to a thoughtful person, and that several times he sat back in his chair, astonished at getting genuinely good advice from an AI.

In human preference tests, testers generally found GPT-4.5's responses more in line with human communication habits than GPT-4o's. Specifically, the new model was preferred over GPT-4o in creative intelligence (56.8% of comparisons), professional queries (63.2%), and everyday queries (57.0%).

Reduced hallucinations:

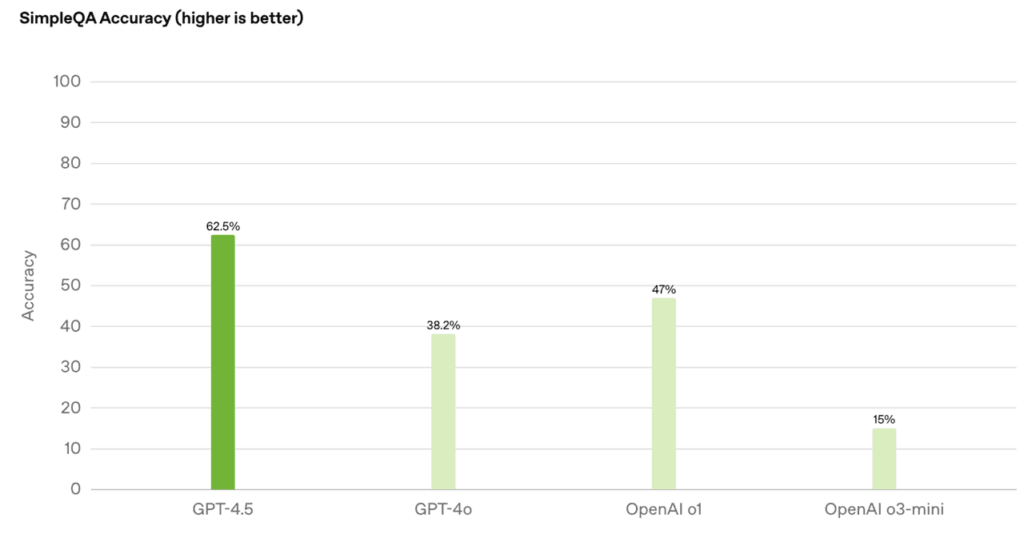

Through large-scale “unsupervised learning”, GPT 4.5 has made significant progress in knowledge accuracy and reducing “hallucinations” (false information):

- SimpleQA: 62.5% accuracy, with the hallucination rate down to 37.1%

- PersonQA: accuracy of 0.78, far better than GPT-4o (0.28) and o1 (0.55)

Knowledge base expansion and Expression Upgrade

Efficiency and knowledge both improved, at a price: computational efficiency is reported to be roughly 10 times better and the knowledge base roughly twice as large, but access costs more (Pro users, who pay $200/month, get priority access). GPT-4.5 also brings architecture and optimization improvements that increase steerability, understanding of nuance, and natural conversation, making it particularly suitable for writing, programming, solving practical problems, and interactions that call for a high degree of empathy.

Technical architecture highlights

Computing power upgrade: Trained on Microsoft Azure AI supercomputers. Training compute is reported to be about 10 times that of GPT-4o, computational efficiency is said to have improved more than tenfold, and training was distributed across data centers.

Safety optimization: Combines traditional supervised fine-tuning (SFT) and RLHF with new supervision techniques to reduce the risk of harmful output.

Multimodal limitations: Voice/video is not supported yet, but image understanding is added to assist SVG animation design and copyright-free music generation.


GPT-4.5 API Pricing Explained: Is It Really Worth It?

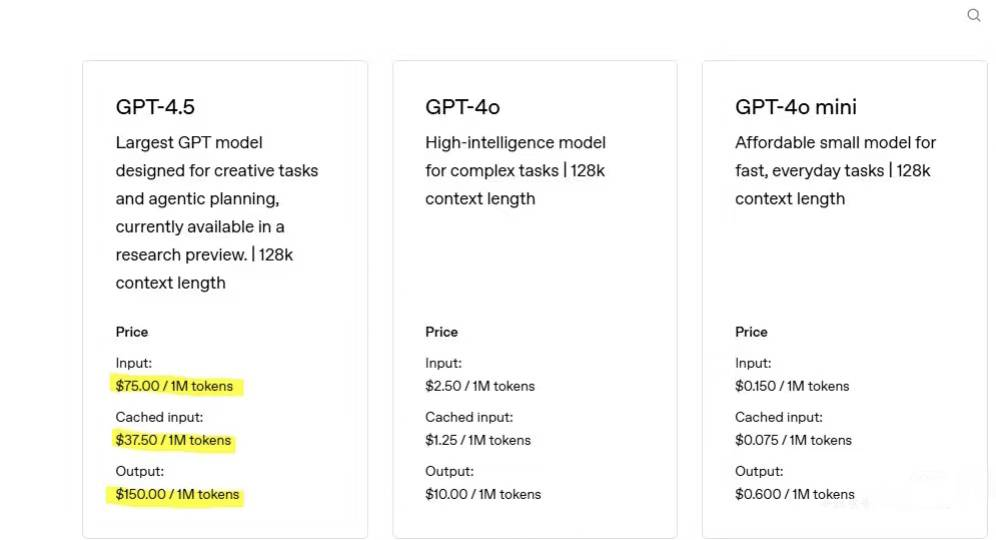

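GPT-4.5 is a very large, compute-intensive model with a 128k token context window. OpenAI has not published a parameter count, so the figure of 12.8 trillion parameters is an unconfirmed estimate. This scale comes with premium pricing: $75 per 1M input tokens and $150 per 1M output tokens. For instance, a workload with 750k input tokens and 250k output tokens costs about $93.75 at those rates (0.75 × $75 + 0.25 × $150), roughly 21× the $4.38 that the same workload costs on GPT-4o. Per token, GPT-4.5 is 30× more expensive than GPT-4o for input and 15× for output.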

GPT series price comparison

The new model is now available for research preview to ChatGPT Pro users and will be rolled out to Plus, Team, Enterprise, and Education users over the next two weeks.

GPT 4.5 vs Other Language Models

GPT-4.5's aesthetic intuition for design and writing has improved, making it better suited to creative work and emotionally aware interaction than other models. Reasoning, however, is not its focus: it has clearly given up the positioning of "the strongest model", and its reasoning ability lags behind reasoning-focused competitors. GPT-4.5 has raised the standard for conversational AI, but its high price makes it a professional tool rather than a mass-market solution.

Comprehensive API Pricing Comparison Across Leading AI Models

| Model | Input Cost (USD per 1M tokens) | Output Cost (USD per 1M tokens) | Context Window | Comments |
|---|---|---|---|---|
| GPT-4.5 | $75 | $150 | 128k tokens | Premium pricing for advanced emotional and conversational capabilities |
| GPT-4o | $2.50 | $10 | 128k tokens | Cost-effective baseline with fast, multimodal support |
| Claude 3.7 Sonnet | $3 | $15 | 200k tokens | Exceptionally economical; supports both text and images |
| DeepSeek R1 | ~$0.55 | ~$2.19 | 64k tokens | Aggressive pricing; caching can further reduce costs for high-volume use cases |
| Google Gemini 2.0 Flash | ~$0.15 | ~$0.60 | Up to 1M tokens | Ultra-low cost with massive context capacity; ideal for high-volume tasks |
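To make the table concrete, the per-million-token prices can be applied to a sample workload. The sketch below is only an illustration: the `Pricing` class and `workload_cost` function are hypothetical helpers, the prices are copied from the table above (the approximate "~" values are treated as exact), and the 750k input / 250k output token mix is an assumed workload, not a measured one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """List price in USD per 1M tokens (values copied from the table above)."""
    input_per_million: float
    output_per_million: float


# Hypothetical lookup table; approximate ("~") table values are used as-is.
PRICES = {
    "GPT-4.5": Pricing(75.0, 150.0),
    "GPT-4o": Pricing(2.5, 10.0),
    "Claude 3.7 Sonnet": Pricing(3.0, 15.0),
    "DeepSeek R1": Pricing(0.55, 2.19),
    "Google Gemini 2.0 Flash": Pricing(0.15, 0.60),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload at the model's list price."""
    price = PRICES[model]
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    # Assumed workload: 750k input tokens and 250k output tokens.
    baseline = workload_cost("GPT-4o", 750_000, 250_000)
    for model in PRICES:
        cost = workload_cost(model, 750_000, 250_000)
        print(f"{model:<24} ${cost:>8.2f}  ({cost / baseline:.1f}x GPT-4o)")
```

For this workload the sketch gives $93.75 for GPT-4.5 and about $4.38 for GPT-4o, a ratio of roughly 21×. The ratio depends on the input/output mix: input-heavy workloads approach the 30× per-token input gap, output-heavy ones the 15× output gap.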

Technical Capabilities & Cost Trade-offs

Context & Multimodality:

GPT-4.5: Supports a 128k token context and accepts image inputs, but produces text-only output.

Claude 3.7 Sonnet: Offers a larger 200k token window and image processing for enhanced long-context performance.

Google Gemini 2.0 Flash: Boasts an impressive 1M token window, ideal for extensive content processing (though text quality may vary).
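As a rough illustration of what these context windows mean in practice, the sketch below checks which models could hold a request of a given size. It rests on several assumptions: the window sizes are the rounded figures quoted above, the window is assumed to cover prompt and reserved output tokens together, the token counts are supplied by the caller (no tokenizer is involved), and `models_that_fit` is a hypothetical helper name.

```python
# Context window sizes in tokens, using the rounded figures quoted above.
CONTEXT_WINDOWS = {
    "GPT-4.5": 128_000,
    "Claude 3.7 Sonnet": 200_000,
    "Google Gemini 2.0 Flash": 1_000_000,
}


def models_that_fit(prompt_tokens: int, reserved_output_tokens: int = 0) -> list[str]:
    """Return the models whose window can hold the prompt plus reserved output.

    Assumes prompt and output tokens share one context window.
    """
    needed = prompt_tokens + reserved_output_tokens
    return [model for model, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    # A 150k-token prompt exceeds GPT-4.5's 128k window but fits the other two.
    print(models_that_fit(150_000))
    # Reserving 10k output tokens pushes a 120k-token prompt past 128k as well.
    print(models_that_fit(120_000, reserved_output_tokens=10_000))
```

Fitting inside the window says nothing about answer quality on long inputs, so a check like this is a first filter rather than a way to pick a model.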

Specialized Tasks:

Coding Benchmarks: GPT‑4.5 achieves around 38% accuracy on coding tasks (e.g., SWE‑Bench), whereas Claude 3.7 Sonnet delivers significantly better cost efficiency and performance in technical tasks.

Emotional Intelligence: GPT‑4.5 excels in delivering nuanced, emotionally rich dialogue, making it ideal for customer support and coaching applications.

Conclusion

GPT-4.5 is the "last non-reasoning model". Its unsupervised learning capability is expected to be combined with the o-series reasoning technology, paving the way for GPT-5, which was reportedly expected around the end of May. The release of GPT-4.5 is not only a technological upgrade but also a rethinking of how humans and machines collaborate. Although its high price and compute bottleneck are controversial, its advances in emotional resonance and practicality offer a new model for bringing AI into education, medical care, and other fields.

Common FAQs on GPT-4.5

What are its limitations?

It lacks chain-of-thought reasoning, and can be slower due to its size. It also doesn’t produce multimodal output like audio or video.

Can it produce fully accurate answers 100% of the time?

No. While GPT-4.5 generally hallucinates less than previous models, users should still verify important or sensitive outputs.

Does GPT-4.5 support images?

Yes, GPT-4.5 accepts image inputs, can generate SVG images in-line, and generate images via DALL·E.

Does GPT-4.5 support searching the web?

Yes, GPT-4.5 can access up-to-date information through search.

What files and file types does it work with?

According to OpenAI, GPT-4.5 supports all of the files and file types that ChatGPT accepts.