Luma Labs Releases Modify Video for Use with Luma Ray2

Luma Labs has introduced a paradigm‐shifting solution: a tool called Modify Video that enables comprehensive scene reconstruction without compromising the original performance, camera moves, or character animation. By decoupling “what” is happening (the actors’ motions and expressions) from “where” it’s happening (the environment, textures, lighting, and visual style), Modify Video unlocks unprecedented creative flexibility.

Key Features and How They Work

1. Motion Extraction & Puppeteering

Automatic Full-Body and Facial Motion Capture: Modify Video can analyze an input clip and automatically extract full-body poses, facial expressions, and lip-sync information.

Driving New Characters or Objects: Once this motion data is captured, it can be applied to drive any new 3D character, prop, or camera movement.

For example, you could “transfer” an actor’s performance onto a creature such as a monster, or you could have a tabletop move in perfect sync with a dance routine.

Why It Matters:

By separating the performance from its original appearance, creators can experiment with alternate characters or objects while preserving the nuance and timing of the original act.

2. World & Style Swapping

- Preserve Action, Change Everything Else

Without altering the core character animation or timing, you can completely revamp the look, feel, and material properties of the scene.

- Examples of Transformations

- Convert a rundown garage into the interior of a spaceship.

- Turn a bright, sunny street into a moody, neon-lit night scene.

- Replace a cartoonish aesthetic with photorealistic textures and lighting.

- Underlying Technology

Luma AI’s Modify Video builds a high-fidelity understanding of the original scene’s geometry and temporal coherence, so that visual changes remain stable over time and avoid distortions, jitter, or “time-warping” artifacts.

3. Isolated Element Edits

- Targeted Adjustments Without Greenscreens

You can pick individual elements to modify, such as a character’s outfit, a specific prop, or even just the sky, without affecting the rest of the frame.

- Illustrative Use Cases

- Change the color or style of a performer’s costume.

- Swap out one actor’s face for another’s.

- Add a UFO cruising through the sky above a scene.

- No Frame-by-Frame Tracking Required

Because the tool understands spatial and temporal context, you don’t need to manually rotoscope or carry out painstaking per-frame adjustments. The edits blend naturally into the footage.

Modes of Operation & Creative Flexibility

Modify Video offers three preset transformation modes—each granting a different level of creative freedom. Users select the mode that best fits their project’s needs:

Adhere Mode (Minimal Adjustment)

Purpose: Preserve the original video’s structure as much as possible, making only subtle style or texture tweaks.

When to Use: Ideal for situations where you want to maintain consistency across multiple shots or make minor post-production fixes—such as tweaking background color or swapping out a slightly off-model prop—while leaving the actors’ performances and camera angles untouched.

Flex Mode (Balanced Creativity)

Purpose: Keep key elements—like a character’s motion, facial expression, and timing—intact, but allow for broader creative modifications.

When to Use: Suitable for projects that need a compromise between faithful reproduction and fresh reinterpretation. For instance, you could change the overall setting to a different style or swap a character’s costume and basic props, while still retaining the essence of the original performance. This mode is especially useful for quickly generating multiple stylistic variations, such as for client previews.

Reimagine Mode (Full Reconstruction)

Purpose: Unleash maximum creative freedom by enabling you to completely redesign environments, characters, or even turn a performer into a non-human entity (like a fantasy creature).

When to Use: Perfect for art-driven projects, concept shorts, or any scenario where you want to radically re-envision the footage. In Reimagine Mode, you can overhaul a scene or character appearance from the ground up, producing striking, surreal, or otherworldly results.

Choosing the Right Mode

- Adhere Mode is your go-to when the goal is to make fine adjustments without disturbing the original asset’s integrity—such as ensuring visual consistency across a multi-shot sequence.

- Flex Mode works best when you need a balance: you still safeguard the authenticity of the performance, but you can play around with setting, lighting, costume, or minor character redesigns.

- Reimagine Mode is tailor-made for projects that demand out-of-the-box creativity—where the entire world or primary characters need to be overhauled for maximum visual impact.

How to Use Luma AI’s Modify Video

Modify Video is integrated into Luma Dream Machine: Ray 2, supporting clips up to 10 seconds in length. The workflow is as follows:

- Upload a Short Video Clip: For best results, keep clips in the 5–10 second range, shot at high resolution with minimal camera shake.

- Choose a Transformation Mode: Select Adhere, Flex, or Reimagine depending on how radical a change you want.

- Provide a Reference Frame or Style Prompt (Optional): If you have a specific look or keyframe (e.g., a still from a concept art piece), you can upload it.

- Input a Text Prompt (If Desired): Detailed, positive descriptions (e.g., “A neon‐lit cyberpunk street at dusk with hovering vehicles”) help the system interpret your vision accurately.

- Adjust “Modification Intensity”: Dial between subtle and dramatic transformations.

- Generate and Review Multiple Variants: The system outputs several versions; you can pick the best one and iterate further or export directly.
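The workflow above can be sketched as a small pre-flight check before you upload anything. This is only an illustration: the `ModifyVideoJob` class, its field names, and the 0.0 to 1.0 intensity scale are assumptions made for this example and are not part of any official Luma or CometAPI schema. Only the three mode names and the 10-second limit come from the article.

```python
from dataclasses import dataclass, asdict
from typing import Optional

# Values taken from the article; everything else here is illustrative.
MODES = ("adhere", "flex", "reimagine")
MAX_CLIP_SECONDS = 10.0


@dataclass
class ModifyVideoJob:
    """Hypothetical description of one Modify Video run (not an official schema)."""
    clip_path: str
    clip_seconds: float
    mode: str
    prompt: Optional[str] = None           # optional text prompt
    reference_image: Optional[str] = None  # optional reference frame
    intensity: float = 0.5                 # assumed scale: 0.0 subtle, 1.0 dramatic

    def validate(self) -> None:
        """Reject settings that the workflow described above would not accept."""
        if self.mode not in MODES:
            raise ValueError(f"mode must be one of {MODES}, got {self.mode!r}")
        if not 0 < self.clip_seconds <= MAX_CLIP_SECONDS:
            raise ValueError(f"clip must be at most {MAX_CLIP_SECONDS} seconds long")
        if not 0.0 <= self.intensity <= 1.0:
            raise ValueError("intensity must be between 0.0 and 1.0")

    def to_payload(self) -> dict:
        """Return the validated settings as a dict, dropping unset optional fields."""
        self.validate()
        return {k: v for k, v in asdict(self).items() if v is not None}


job = ModifyVideoJob(
    "dance.mp4", 7.0, "flex",
    prompt="A neon-lit cyberpunk street at dusk with hovering vehicles",
)
payload = job.to_payload()
```

Validating locally like this catches an over-length clip or a mistyped mode before any processing time is spent; the resulting dictionary would still need to be mapped onto whatever fields the real service expects.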

Pre-Shoot Recommendations

- Stabilize Your Camera: Use a tripod or gimbal to minimize shake and ensure clean motion extraction.

- Choose Simple Backgrounds: Plain walls or uncluttered spaces help the AI isolate subjects more effectively.

- Capture High Resolution, Well‐Lit Footage: Clear frames yield more accurate pose and texture data.

- Keep Clips Under 10 Seconds: Although the system supports up to 10 seconds, segments of 5–7 seconds balance depth of content with processing efficiency.

Limitations to Consider

- 10‐Second Clip Maximum

If you have longer sequences, you’ll need to break them into multiple segments for processing.

- Quality of Input Affects Output

Blurry, low‐resolution, or noisy footage can degrade motion capture accuracy and final visual fidelity.

- Chaotic or Crowded Scenes

Shots featuring many fast‐moving objects or heavily cluttered backgrounds may produce unstable artifacts or unintended blending errors.

- Complex Overlapping Elements

Scenes with multiple overlapping actors or props can challenge the isolation algorithms—simpler setups usually yield cleaner results.
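For the 10-second limit in particular, planning the cut points ahead of time helps. The sketch below is one possible way to do it, not a feature of Modify Video itself: the function name `split_into_segments` is hypothetical, and the choice of equal-length segments is an assumption of this example. It returns (start, end) times in seconds that you could hand to whatever editing tool you use to trim the footage.

```python
import math


def split_into_segments(total_seconds: float, max_seconds: float = 10.0):
    """Split a clip into the fewest equal-length segments no longer than max_seconds.

    Returns a list of (start, end) tuples in seconds, rounded to milliseconds.
    """
    if total_seconds <= 0 or max_seconds <= 0:
        raise ValueError("durations must be positive")
    count = math.ceil(total_seconds / max_seconds)  # fewest segments that fit
    length = total_seconds / count                  # equal length per segment
    return [
        (round(i * length, 3), round(min((i + 1) * length, total_seconds), 3))
        for i in range(count)
    ]


segments = split_into_segments(25.0)
```

Equal-length segments are used here instead of fixed 10-second chunks so that a 25-second sequence becomes three segments of about 8.3 seconds, which avoids a very short final segment and stays close to the shorter clip lengths recommended above.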

In summary, Modify Video represents a transformative leap for post-production flexibility—allowing you to preserve a performance’s essence while rebuilding its entire world. When paired with Ray 2’s newly integrated features like Adobe Firefly compatibility, Camera Angle Concepts, and Keyframes control, Luma AI empowers creators to tell stories with unprecedented agility and creativity. Whether you need subtle style tweaks or full-blown, surreal reimaginings, this evolving toolset dramatically expands what’s possible in every 10-second clip.

Getting Started

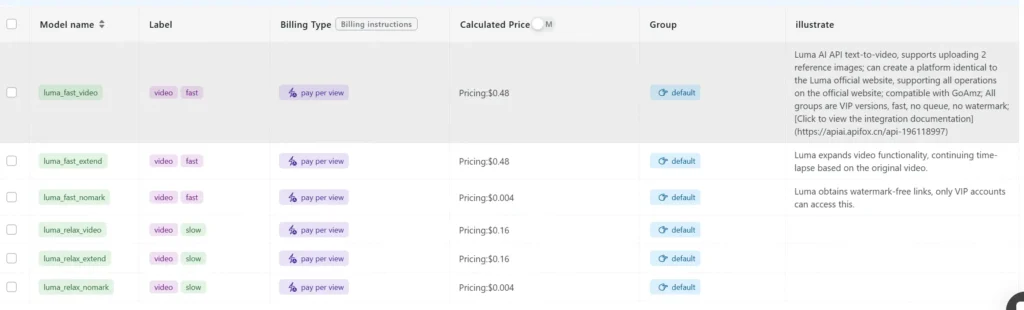

CometAPI provides a unified REST interface that aggregates hundreds of AI models under a consistent endpoint, with built-in API-key management, usage quotas, and billing dashboards, so you don’t have to juggle multiple vendor URLs and credentials.

Developers can access the latest Luma API through CometAPI. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing it, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a price far lower than the official price to help you integrate: