Gemini 3.0 Exposed: What Will It Bring and When Will It Be Released?

In the rapidly evolving world of artificial intelligence, Google’s Gemini series has emerged as one of the most ambitious and closely watched model families. With each iteration, Gemini has pushed the boundaries of multimodal understanding, context length, and real-time reasoning—culminating in the highly praised Gemini 2.5 Pro. Now, the AI community eagerly anticipates the next leap: Gemini 3.0. Drawing on recent leaks, official signals, and expert analysis, this article explores what Gemini 3.0 will bring and when you can expect its launch.

What Is Gemini 3?

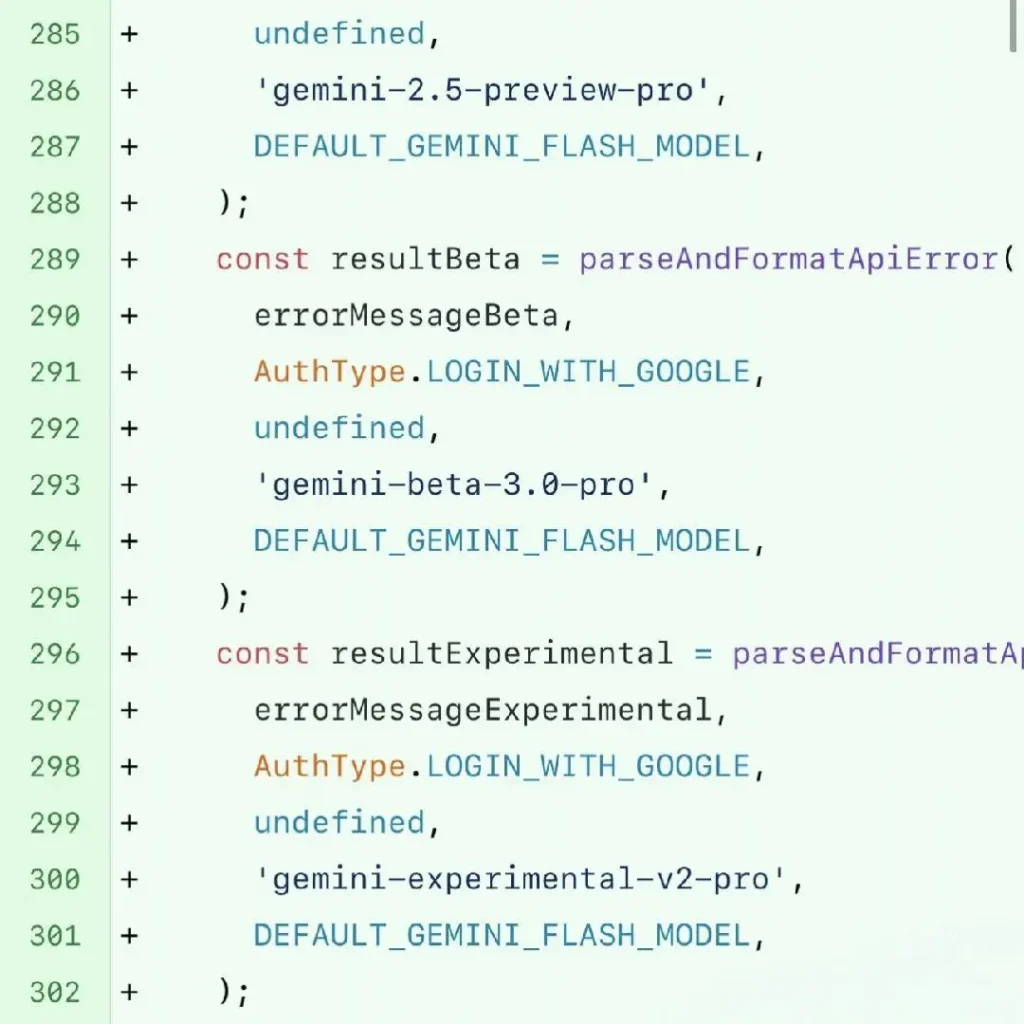

Gemini 3 is Google DeepMind’s next-generation large language model (LLM), poised to build on the foundations laid by Gemini 2.x. References to internal “gemini-beta-3.0-pro” and “gemini-beta-3.0-flash” versions have been spotted in the open-source Gemini CLI tool, signaling that Google is preparing a significant upgrade in both capability and performance. Unlike its predecessors, Gemini 3 is expected to integrate an advanced “Deep Think” reasoning architecture natively, one designed to tackle complex multi-step reasoning tasks more reliably than earlier models.

Under the hood, Gemini 3 is expected to build on innovations from Gemini 2.5 Pro, which became generally available on June 17, 2025, and to extend support for multimodal inputs, allowing seamless comprehension of text, images, audio, and potentially video. By unifying its architecture across different data modalities, Gemini 3 aims to deliver more human-like understanding and generation capabilities, reducing the need for multiple specialized models in a single application.

What New Features Will Gemini 3.0 Bring?

Expanded Multimodal Integration

Gemini 2.5 already handles text, images, audio, and short videos. Gemini 3.0 aims to extend this to real‑time video (up to 60 FPS), 3D object understanding, and geospatial data analysis—enabling applications from live video summarization to augmented‐reality navigation. Such capabilities would allow developers to build interfaces that interpret complex scenes—such as robotics vision or immersive learning environments—directly on the model.

Enhanced Context Handling

One of Gemini 2.5’s headline features is its 1 million-token context window, larger than that of most competitors. Gemini 3.0 is projected to introduce a “multi-million” token window, with smarter retrieval and memory mechanisms to maintain coherence across extremely long documents or conversations ([felloai.com][1]). This could streamline workflows involving legal briefs, scientific literature reviews, and collaborative editing, where maintaining deep context is critical.
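To make the context-window numbers concrete, here is a minimal sketch of how an application might check whether a set of documents fits a given window and split them into batches when it does not. The 4-characters-per-token ratio is only a common rule of thumb, not a Gemini-specific figure, and the function names and the output reserve are illustrative assumptions; a real integration would use the model's own token counter.

```python
# Rough token-budget check for a long-context model.
# ASSUMPTION: ~4 characters per token is a rule of thumb for English text;
# real counts depend on the model's tokenizer.
CHARS_PER_TOKEN = 4  # heuristic, not a Gemini-specific constant


def estimate_tokens(text: str) -> int:
    """Return a coarse token estimate from the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_window(documents: list[str], window_tokens: int,
                   reserve_for_output: int = 8_192) -> bool:
    """True if all documents plus an output reserve fit in the window."""
    used = sum(estimate_tokens(doc) for doc in documents)
    return used + reserve_for_output <= window_tokens


def split_into_batches(documents: list[str], window_tokens: int,
                       reserve_for_output: int = 8_192) -> list[list[str]]:
    """Greedily pack documents, in order, into batches that fit the window.

    A single document larger than the budget still gets its own batch;
    the caller would need to chunk it further.
    """
    budget = window_tokens - reserve_for_output
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for doc in documents:
        cost = estimate_tokens(doc)
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(doc)
        used += cost
    if current:
        batches.append(current)
    return batches
```

Under this heuristic, a 4-million-character corpus does not fit a 1 million-token window once an output reserve is set aside, which is the kind of case a larger window or batching would address.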

Built‑in Advanced Reasoning

Gemini 2.5’s “Deep Think” mode requires a manual toggle to engage the verifier module. In contrast, Gemini 3.0 is expected to embed verifier reasoning by default, streamlining outputs and reducing user intervention. According to statements from DeepMind leadership, the new model will integrate planning loops at every inference step, allowing it to self-correct and outline multi-step plans without external prompts.

Inference Efficiency and Tool Orchestration

Gemini 2.5 Flash already delivers sub-second response times on high-end hardware. Gemini 3.0 aims for near-real-time performance by leveraging Google’s TPU v5p accelerators and optimized algorithms. Moreover, tool orchestration, already demonstrated by Project Mariner agents in 2.5, is expected to evolve into multi-agent tool orchestration, enabling parallel interactions with browsers, code execution environments, and third-party APIs for sophisticated workflows.
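The idea of parallel tool interactions can be illustrated with a small, generic concurrency sketch. Nothing here reflects how Gemini or Project Mariner actually works: the three tool functions are hypothetical stubs standing in for a browser, a code sandbox, and a third-party API, and the only technique shown is running independent calls concurrently with `asyncio.gather`.

```python
import asyncio

# Hypothetical stub tools: each stands in for a real integration.


async def browse(url: str) -> str:
    await asyncio.sleep(0)  # placeholder for network I/O
    return f"page:{url}"


async def run_code(source: str) -> str:
    await asyncio.sleep(0)  # placeholder for sandboxed execution
    return f"ran:{len(source)} chars"


async def call_api(endpoint: str) -> str:
    await asyncio.sleep(0)  # placeholder for a third-party API call
    return f"response:{endpoint}"


async def orchestrate() -> dict[str, str]:
    """Run the three independent tool calls concurrently.

    asyncio.gather returns results in the order the awaitables were
    passed, so they can be labeled by position.
    """
    results = await asyncio.gather(
        browse("https://example.com"),
        run_code("print(1)"),
        call_api("/v1/status"),
    )
    return dict(zip(("browser", "code", "api"), results))
```

Calling `asyncio.run(orchestrate())` returns one labeled result per tool. With real I/O behind each stub, total latency would approach that of the slowest call rather than the sum of all three.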

When Will Gemini 3.0 Be Released?

Google’s Official Cadence

Looking back, Google has followed an annual major-release cadence: Gemini 1.0 in December 2023, Gemini 2.0 in December 2024, and a mid-cycle Gemini 2.5 in mid-2025. This pattern suggests that Gemini 3.0 could arrive around December 2025.
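The December 2025 estimate is simple date arithmetic, sketched below. The release months come from the paragraph above; the day of the month is set to 1 as a simplification, and the helper names are illustrative. This is an extrapolation from two data points, not a forecast.

```python
from datetime import date

# Major release months as stated in the article (day fixed to 1 for simplicity).
MAJOR_RELEASES = {
    "Gemini 1.0": date(2023, 12, 1),
    "Gemini 2.0": date(2024, 12, 1),
}


def months_between(start: date, end: date) -> int:
    """Whole-month difference, ignoring the day of the month."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def add_months(d: date, months: int) -> date:
    """Shift a date by a number of months, returning the first of that month."""
    total = d.year * 12 + (d.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


def project_next_release(releases: dict[str, date]) -> date:
    """Extend the average gap between past major releases by one step."""
    dates = sorted(releases.values())
    gaps = [months_between(a, b) for a, b in zip(dates, dates[1:])]
    average_gap = round(sum(gaps) / len(gaps))
    return add_months(dates[-1], average_gap)


print(project_next_release(MAJOR_RELEASES))  # 2025-12-01
```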

Potential Rollout Plan

A plausible rollout timeline:

- October 2025: Preview for enterprise and Vertex AI partners

- November–December 2025: General developer access via Gemini 3 Pro/Ultra tiers on Google Cloud

- Early 2026: Consumer-facing deployment, embedded in Pixel devices, Android 17, Workspace, and Search

Are There Beta or Preview Releases?

Indeed, code commits spotted in the Gemini CLI repository already reference “beta-3.0-pro” builds, suggesting a limited early-access program for select enterprise and academic partners. These beta releases will likely help Google gather feedback on real-world performance, uncover edge-case failures, and refine API endpoints before a full public rollout.

Developers interested in early access can monitor updates on Google AI Studio and the Vertex AI Model Garden, where Gemini 2.x versions currently appear. Google’s model lifecycle documentation indicates that major model versions undergo a staged release: initial alpha, followed by beta, release candidate, and finally stable. Gemini 3 should follow this pattern, with published deprecation timelines for older models.

Developers can also follow the CometAPI website, which publishes current AI news and introduces the latest AI models as they are released.

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

Developers can access Gemini 2.5 Pro and Gemini 2.5 Flash through CometAPI; the models listed are the latest available as of this article’s publication date. To begin, explore the models’ capabilities in the Playground and consult the API guide for detailed instructions. Before making requests, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers pricing well below the official rates to help you integrate.
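As a rough illustration of what a first request might look like, the sketch below builds (but does not send) an HTTP request using only Python's standard library. The endpoint URL, the OpenAI-style chat payload, and the model identifier `gemini-2.5-pro` are all assumptions made for this example, not details confirmed by this article; check the CometAPI API guide for the actual values before use.

```python
import json
import urllib.request

# ASSUMPTION: placeholder endpoint in an OpenAI-compatible style.
# Replace with the URL given in the CometAPI API guide.
ASSUMED_ENDPOINT = "https://api.cometapi.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request carrying a single user message.

    The payload shape (model + messages list) is an assumed
    OpenAI-style chat format, not a documented CometAPI schema.
    """
    payload = {
        "model": model,  # e.g. "gemini-2.5-pro" (assumed identifier)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ASSUMED_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Usage (sends a real network request, so it is left commented out):
# request = build_chat_request("YOUR_API_KEY", "gemini-2.5-pro", "Hello")
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Keeping request construction separate from sending makes it easy to inspect headers and payloads, or to swap the endpoint, without touching the calling code.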