How to Run Gemma 3 270M Locally today? 3 Best Ways for Developers

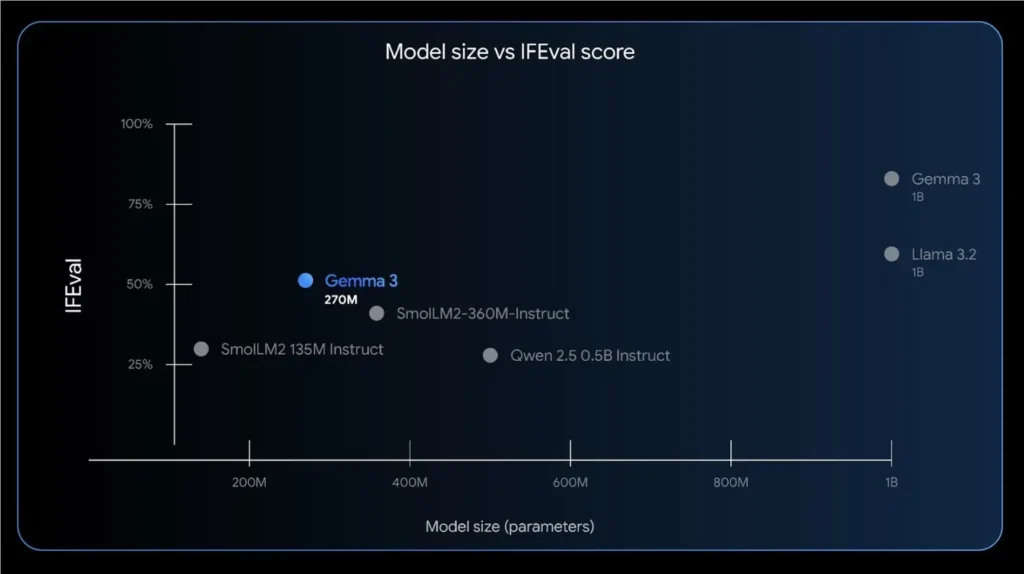

Google launches new Gemma 3 270M model lately, If you love tinkering with compact, efficient models and getting things to run on a laptop, phone, or small server, Gemma 3 270M is a delightful new friend: a 270-million-parameter model from Google designed for extreme efficiency and task-specific fine-tuning. It’s intentionally tiny, power-frugal, and surprisingly capable for many instruction-following and classification tasks — and the ecosystem already supplies multiple easy ways to run it locally: (1) Hugging Face / Transformers (PyTorch), (2) containerized runtimes like Ollama / LM Studio, and (3) ultra-light GGUF / llama.cpp style runners for CPUs and phones. Below I’ll walk you through the architecture highlights, then give three practical, copy-pastaable methods (including commands and code), examples, plus pros/cons and my best tips so you don’t waste time fighting the stack.

What is Gemma 3 270M and why should I care?

Gemma 3 270M is the smallest released Gemma-3 family member intended as a compact base model: it balances a low parameter count (≈270M) with a modern architecture, large vocabulary and instruction-tuned behavior so you can run capable language tasks on single GPUs or even on stronger CPUs/edge devices after quantization. The model is provided by Google in the Gemma-3 family and has been distributed openly via model hubs and GGUF/ggml collections for local usage.

Why care? Because a 270M model lets you:

- iterate quickly during development (fast startup, lower memory),

- run offline for privacy or latency reasons,

- fine-tune cheaply (LoRA / adapters) for specialized tasks,

- and deploy to constrained infrastructure (on-device or single-GPU services).

How is Gemma 3 architected?

Gemma 3 follows the Gemma/Gemini research lineage: it’s a transformer-based causal language model family with variants tuned and engineered for efficiency and multimodality. The 270M model is a text-focused configuration (the smallest Gemma 3 sizes are text-only), trained and optimized to be instruction-friendly out of the box while preserving the same family design choices that scale up into the 1B–27B variants. The model supports very long contexts (note: the smallest Gemma 3 models are documented with a 32k token context limit).

What extensions and runtime ecosystems exist?

Google and the community have released multiple runtime and distribution artifacts to make Gemma 3 easy to run:

- gemma.cpp — an official lightweight pure-C++ inference runtime optimized for portability. It’s targeted at experimentation and platforms where a tiny, standalone runtime matters.

- Hugging Face model cards and GGUF/llama.cpp artifacts — the model is available on Hugging Face and community collections provide GGUF builds, LoRA adapters, and quantized variants for

llama.cppand similar runtimes. - Ollama / LM Studio / Docker / Transformers integrations — commercial and open-source tooling have added native support or installers for Gemma 3 variants, including QAT (quantization-aware training) variants to lower memory usage.

How can I run Gemma 3 270M with Hugging Face Transformers (PyTorch)?

Why choose this method?

This is the most flexible path for development, experimentation, and fine-tuning using standard PyTorch tooling, Accelerate, and Hugging Face Trainer or custom loops. It’s ideal if you want to integrate Gemma into Python apps, fine-tune, or use GPU acceleration.

What you need

- A machine with Python, pip, and optionally a CUDA GPU (but CPU works for small tests).

- An accepted license for the HF model (you must accept Google’s terms on Hugging Face before downloading).

Quick install

python -m venv venv && source venv/bin/activate

pip install --upgrade pip

pip install torch torchvision --extra-index-url https://download.pytorch.org/whl/cu118 # or cpu-only

pip install transformers accelerate

Minimal inference code (PyTorch + Transformers)

from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline

import torch

model_id = "google/gemma-3-270m" # ensure you've accepted HF license

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16, device_map="auto")

nlp = pipeline("text-generation", model=model, tokenizer=tokenizer)

print(nlp("Explain Newton's second law in one sentence.", max_new_tokens=64)[0]["generated_text"])

Example output (what to expect)

Short, instruction-following answers suitable for classification, summarization, and small chat flows. For heavier reasoning tasks, consider larger sizes, but 270M gives excellent bang-for-energy for many use cases.

Advantages and tips

- Full compatibility with HF ecosystem (datasets, Trainer, TRL).

- Use

device_map="auto"andtorch_dtype=torch.float16to make GPU memory efficient. - For tiny local machines, offload to CPU or use mixed precision; but if you want speed, a modest GPU helps a lot.

How can I run Gemma 3 270M via Ollama or LM Studio (zero-config runnable)?

What is Ollama/LM Studio and why use them?

Ollama and LM Studio are local containerized runtimes that act like app stores for models — you pull a model and run it with a single command. They handle packaging/quantized files, memory consumption, and provide a convenient CLI/UI. This is the fastest route from zero → local chat. Ollama explicitly lists Gemma 3 270M in its model library.

Quick Ollama steps

- Install Ollama from https://ollama.com/download

- Pull and run:

# Pull (downloads the model)

ollama pull gemma3:270m

# Start an interactive session (CLI)

ollama run gemma3:270m

Example usage (scripted)

# Run a single prompt and exit

ollama run gemma3:270m --prompt "Summarize the latest Python 3.12 features in one paragraph."

Example: LM Studio (conceptual steps)

- Install LM Studio (desktop).

- Search the model hub inside the app for “gemma-3-270m”.

- Choose a quantized variant (Q4_0 or similar) and download.

- Click “Load” and start chatting.

Advantages and tips

- Super low friction: no manual conversion, model discovery in the UI, easy for demos.

- Ollama handles model storage/updates; use it if you want a no-ops local environment.

- If you need integration in production code, Ollama offers APIs to serve local endpoints.

How can I run Gemma 3 270M using GGUF / llama.cpp on tiny devices?

Why this path exists

If your goal is the smallest memory footprint (phone, Raspberry Pi, tiny VPS) or you want blazing cold-start speed, community conversions to GGUF (the modern ggml format) and inference via llama.cpp/ggml tooling are the way to go. People are already running Gemma 3 270M on phones with extreme quantization (Q4/Q8 variants) and tiny RAM needs.

How to get a GGUF (conversion / download)

- Many community forks have converted

google/gemma-3-270mto GGUF and published them on Hugging Face (search forgemma-3-270m-GGUF). Example repos includeNikolayKozloff/gemma-3-270m-Q8_0-GGUFand ggml-org collections.

Run with llama.cpp (CLI)

# clone and build llama.cpp

git clone https://github.com/ggerganov/llama.cpp

cd llama.cpp

make

# then, download or place gemma-3-270m.gguf in the folder

./main -m gemma-3-270m-q8_0.gguf -p "Write a haiku about debugging." --ctx_size 2048

Or run the server:

# start a local server (conversation mode)

./llama-server --hf-repo NikolayKozloff/gemma-3-270m-Q8_0-GGUF --hf-file gemma-3-270m-q8_0.gguf -c 2048

Example: run on Android (community workflows)

- Use a prebuilt GGUF and a mobile frontend (some community apps and builds wrap

llama.cppfor Android). Expect to trade off fidelity for speed at very low quantization (INT4 / Q4_0). Community doc pages show sample steps for phone runs.

Advantages and tips

- Tiny memory footprints: quantized GGUFs let you run models in hundreds of MBs.

- Speed on CPU:

llama.cppis extremely optimized for CPU inference. - Tip: try different quant levels (Q4_0, Q5/K) and test prompt quality; lower bits are faster but may degrade quality. Use

--ctx_sizeto match the model’s intended context when you need long context.

How should I choose which method to use?

Short decision guide:

- I want to prototype or fine-tune in Python / GPU → Hugging Face + Transformers. (Best for training/fine-tuning.)

- I want quick local conversational demos with minimal setup → Ollama / LM Studio. (Best for demos and non-developer stakeholders.)

- I want to run offline on a phone or tiny server → GGUF + llama.cpp. (Best for extreme edge efficiency.)

What are the advantages and practical tips for running Gemma 3 270M locally?

Resource and quantization tips

- Memory footprint: The full-precision 16-bit footprint for the 270M model is tiny (roughly several hundred megabytes for model parameters), but RO-and KV caches push peak memory higher. Community reporting indicates full precision could be ~0.5 GB while INT4 quantized variants can drop to ~100–200 MB — a huge win for edge and low-RAM setups. Always account for additional memory used by runtime, tokenizer, and system overhead.

- Use QAT/INT4 when possible: Google and community providers supply quantization-aware trained (QAT) builds and INT4/INT8 GGUFs. These reduce RAM and often maintain surprisingly good quality for many tasks.

Performance and contextual settings

- Context windows: The Gemma 3 family supports very long contexts; the 270M/1B variants are documented for up to 32k tokens. Tune

--contextor-cflags in runtimes that expose them. - Threading and batching: For CPU inference, increase thread counts and use batching if latency allows. For GPU, prefer FP16 and device mapping to reduce memory fragmentation.

Safety, license, and responsible use

- Gemma 3 is released with model artifacts and usage guidelines; adhere to the Responsible Generative AI Toolkit and any license conditions attached to the weights (especially for commercial use or distribution). If you are deploying public-facing services, apply moderation layers (e.g., ShieldGemma) and content filters.

What common problems will I see and how can I troubleshoot them?

Model file / format errors

- If a runtime complains about unknown model architecture, you likely have a format mismatch (e.g., trying to load a GGUF in a runtime expecting a Transformers checkpoint). Convert model artifacts using the official conversion scripts or use the runtime recommended artifacts (Hugging Face → Transformers, GGUF → llama.cpp). Community guides and collections often host pre-converted GGUFs to save time.

Out of memory

- Use quantized builds (INT4/INT8), reduce batch sizes, switch to CPU if you have tight GPU VRAM, or offload parts of the model using device_map/accelerate.

Unexpected quality drop with quantization

- Try higher-precision quantization (INT8) or QAT artifacts rather than naive post-training quantization. Fine-tuning a quantized model on a few domain examples can recover task-sensitive performance.

Final thoughts

Gemma 3 270M is an excellent “small but modern” model for local experimentation, fine-tuning and deployment. Pick Hugging Face + Transformers when you need full Python control and training; pick GGUF + ggml solutions for the lightest-weight inference; and pick GUI/packaging layers (LM Studio / Ollama) for rapid demos and non-technical stakeholders. For fine-tuning, LoRA/PEFT recipes dramatically lower costs and make the 270M model practical to adapt to real tasks. Always validate outputs, follow license/safety guidance, and choose the quantization level that balances memory and quality.

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

The latest integration Gemma 3 270M will soon appear on CometAPI, so stay tuned!While we finalize Gemma 3 270M Model upload, explore our other gemini models(Such as gemma 2,Gemini 2.5 Flash, Gemini 2.5 Pro ) on the Models page or try them in the AI Playground. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing, please make sure you have logged in to CometAPI and obtained the API key. CometAPI offer a price far lower than the official price to help you integrate.