Integrate FlowiseAI with CometAPI: A Step-by-Step Guide

The low-code visual AI tooling space is moving fast. Flowise, an open-source visual builder for LLM workflows, continues to add community integrations and ship frequent updates. CometAPI is one of several unified AI API platforms that now expose hundreds of models through a single endpoint, and no-code backend builders such as BuildShip likewise provide nodes that call CometAPI, so you can wire the same models into server workflows. This guide explains why and when to combine these pieces, then walks through a concrete Flowise → CometAPI integration: how to wire Prompt → LLM Chain → CometAPI, recommended best practices, and example use cases.

What is FlowiseAI and why does it matter?

FlowiseAI is an open-source visual platform for building LLM workflows, chat assistants, and agentic pipelines. It offers a drag-and-drop canvas made of nodes (integrations) that represent prompts, chains, LLM connectors, retrievers, memory, tools, and outputs — letting teams prototype and ship LLM-powered systems without wiring everything by hand. Flowise also exposes APIs, tracing, evaluation tooling and community-maintained nodes so it’s useful both for rapid prototyping and production experimentation.

Why that matters: by using Flowise you get visual observability and fast iteration on prompt chains and model choices — and by adding third-party connectors (like CometAPI) you can switch or experiment with many underlying models with minimal changes.

What is CometAPI and what does it provide?

CometAPI is a unified API layer that aggregates access to hundreds of AI models (OpenAI, Anthropic/Claude, Google/Gemini, Replicate models, image & audio providers, etc.) behind a single, consistent request format and authentication scheme. That means you can pick or swap models, compare cost/latency, or fall back across providers programmatically without rewriting your application code. The platform is positioned as a cost-management and vendor-agnostic access layer for LLMs and multimodal models.

What this buys you in practice: simpler credential management for multi-model evaluation, the ability to A/B different models quickly, and (often) cost optimization by selecting cheaper model variants when appropriate.
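Programmatic fallback across providers can be as simple as trying an ordered list of models until one answers. The sketch below assumes a hypothetical `call_model(model, prompt)` helper that sends one request through CometAPI and raises on failure; the model names are placeholders, not real CometAPI identifiers.

```python
from typing import Callable, Dict, Sequence, Tuple


class AllModelsFailed(Exception):
    """Raised when every model in the fallback list has failed."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Try each model in order and return (model_used, completion).

    call_model(model, prompt) is a hypothetical helper that sends one request
    through the unified endpoint and raises an exception on failure.
    """
    errors: Dict[str, Exception] = {}
    for model in models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # remember why this model failed, then move on
            errors[model] = exc
    raise AllModelsFailed(f"all models failed: {errors!r}")


if __name__ == "__main__":
    def fake_call(model: str, prompt: str) -> str:
        # Simulate the first (placeholder) model being unavailable.
        if model == "model-a":
            raise TimeoutError("upstream timeout")
        return f"[{model}] answer to: {prompt}"

    print(complete_with_fallback("ping", ["model-a", "model-b"], fake_call))
    # prints ('model-b', '[model-b] answer to: ping')
```

Catching a broad `Exception` keeps the sketch short; in practice you would probably fall back only on timeouts, 429s, and 5xx responses, not on malformed requests that every model would reject.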

Why would you integrate FlowiseAI with CometAPI?

Integrating Flowise with CometAPI gives you the convenience of Flowise’s visual builder together with centralized access to many model backends via CometAPI. Benefits include:

- Single credential management for multiple model endpoints (through CometAPI).

- Easy model A/B testing and provider-level routing inside a visual chain (switch models without changing node wiring).

- Faster experimentation: swap models, adjust prompts, and compare outputs inside Flowise flows.

- Reduced engineering friction for teams who want a visual orchestration layer but need multiple model providers behind the scenes.

These capabilities accelerate RAG setups, agent orchestration and model composition workflows while keeping the visual provenance and traceability Flowise provides.

What environment and preconditions do you need before integration?

Checklist (minimum):

- Access to a FlowiseAI instance you can log in to.

- A CometAPI account and API key (you’ll retrieve the key from the CometAPI console). Note: CometAPI uses a base path (e.g. https://api.cometapi.com/v1/) for requests; you’ll supply it in the Flowise node settings.

Security & operational preps:

- Store API keys in Flowise Credentials — don’t put keys in prompt templates or node code.

- Plan quota and rate limits: both CometAPI and the underlying model vendors may impose limits — check your plan and apply client-side throttling or retries if needed.

- Watch costs: switching models can materially change token usage and cost, so instrument metrics and set guardrails.
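Client-side throttling can be sketched as a sliding-window limiter that blocks until a request fits under the limit. The limits used here are placeholders (take the real values from your CometAPI plan and the underlying vendor), and the injectable `clock` and `sleep` functions exist only so the logic can be tested without real waiting.

```python
import time
from collections import deque
from typing import Callable, Deque


class RateLimiter:
    """Sliding-window limiter allowing at most max_calls per `period` seconds.

    Not thread-safe; guard acquire() with a lock if several threads share it.
    """

    def __init__(self, max_calls: int, period: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        if max_calls < 1 or period <= 0:
            raise ValueError("max_calls must be >= 1 and period must be > 0")
        self.max_calls = max_calls
        self.period = period
        self._clock = clock
        self._sleep = sleep
        self._calls: Deque[float] = deque()  # timestamps of recent calls

    def acquire(self) -> None:
        """Block until another call fits in the window, then record it."""
        while True:
            now = self._clock()
            # Forget calls that have aged out of the window.
            while self._calls and now - self._calls[0] >= self.period:
                self._calls.popleft()
            if len(self._calls) < self.max_calls:
                self._calls.append(now)
                return
            # Wait until the oldest recorded call leaves the window.
            self._sleep(self.period - (now - self._calls[0]))


if __name__ == "__main__":
    limiter = RateLimiter(max_calls=2, period=1.0)  # placeholder limits
    for i in range(3):
        limiter.acquire()  # the third call waits for the window to free up
        print("request", i)
```

Call `acquire()` immediately before each outbound CometAPI request; a sliding window is stricter than a token bucket about bursts, which suits hard per-minute quotas.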

How do I integrate CometAPI with FlowiseAI? (Finding and adding the CometAPI node)

Follow these practical steps to add the ChatCometAPI node and configure credentials.

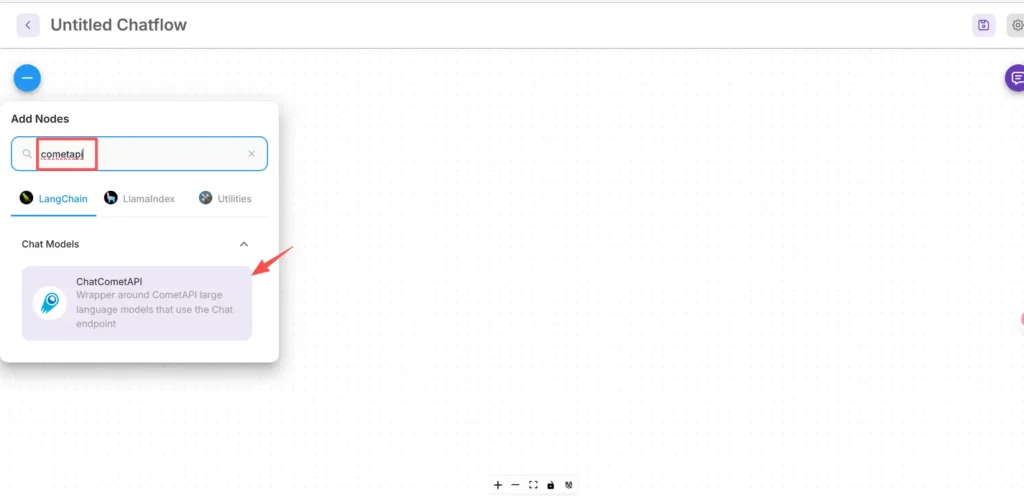

Step 1 — Confirm you have the node available

- In the FlowiseAI canvas, click Add new.

- In the search box, type “cometapi” to find the ChatCometAPI node. Some community nodes appear under LangChain → Chat Models.

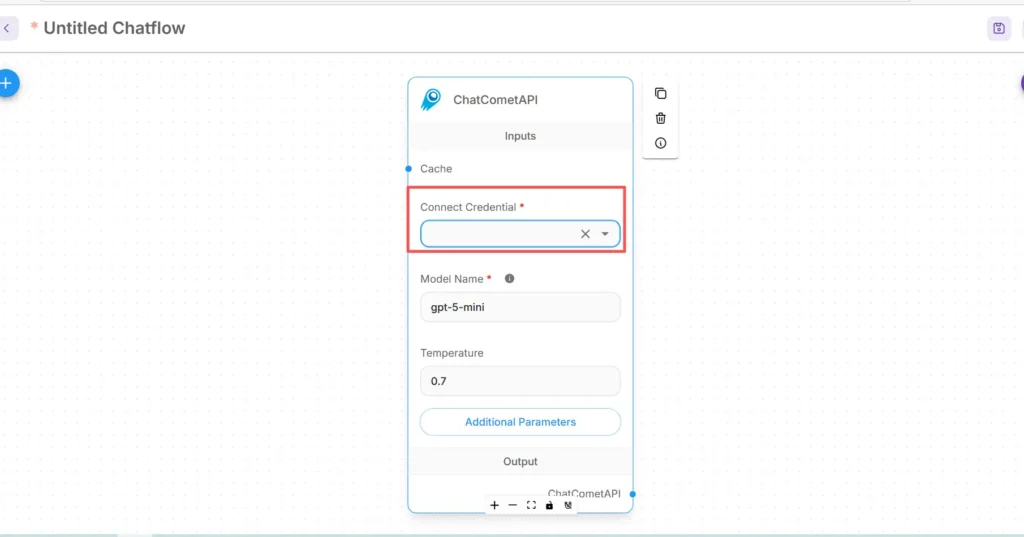

Step 2 — Add the CometAPI node into your flow

- Drag the ChatCometAPI node into your workspace. The node exposes model selection, temperature, max tokens, and other inference parameters.

- Place a Start/Input node (or your chatbot fronting node) upstream of the CometAPI node.

Step 3 — Configure credentials for CometAPI

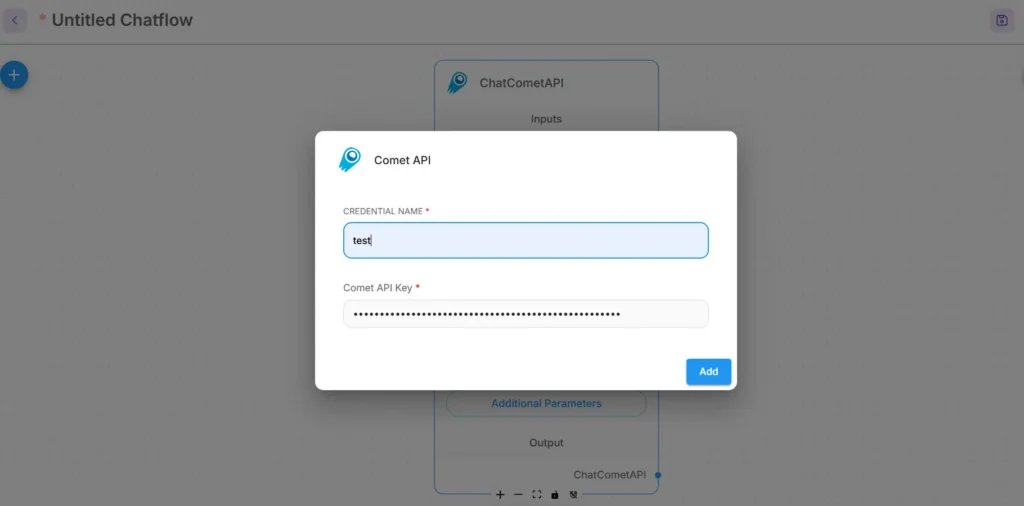

- In the CometAPI node’s Connect Credential dropdown, select Create New (or open Credentials in Flowise) and find the API Key field.

- Enter your CometAPI API key there (preferably via Flowise’s credential manager or as an environment variable). In production, use a secrets manager.

- Choose the default underlying model, or leave it adjustable from the node inputs if you want runtime model switching. CometAPI typically accepts a model parameter indicating which vendor/model to use.

Step 4 — Set the Base Path and additional params.

In the ChatCometAPI node settings expand Additional Parameters and set the Base Path to https://api.cometapi.com/v1/ (this is required so the node points to CometAPI’s v1 gateway). Optionally adjust default model name or provider parameters supported by CometAPI.
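To check a key, base path, and model name outside Flowise, you can send a comparable request directly. This sketch assumes CometAPI exposes an OpenAI-compatible `chat/completions` route under the base path with Bearer-token auth; the environment variable `COMETAPI_KEY` and the model name `your-model-name` are hypothetical placeholders.

```python
import json
import os
import urllib.request

# Base path as entered in the node's Additional Parameters. The route and the
# Bearer header below assume an OpenAI-compatible API; confirm in CometAPI docs.
BASE_PATH = "https://api.cometapi.com/v1/"


def build_chat_request(api_key: str, model: str, prompt: str,
                       temperature: float = 0.2,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    url = BASE_PATH.rstrip("/") + "/chat/completions"
    body = {
        "model": model,  # selects the vendor/model behind the gateway
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    key = os.environ.get("COMETAPI_KEY")  # hypothetical variable name
    if key:
        reply = send(build_chat_request(key, "your-model-name", "Say hello."))
        print(reply["choices"][0]["message"]["content"])
```

Separating request construction from sending keeps the URL and payload easy to inspect when a call fails.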

Troubleshooting tips:

- If calls fail, check network egress and any firewall/NAT rules between Flowise and CometAPI endpoints.

- Check rate limits and error responses returned by CometAPI; implement exponential backoff on 429/5xx.
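Exponential backoff on 429/5xx can be sketched as a retry wrapper with capped, jittered delays. `HTTPStatusError` is a hypothetical error type; with `urllib` you would read the status from `urllib.error.HTTPError.code` and re-raise it in this form. The attempt count and delays are illustrative defaults, not CometAPI recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class HTTPStatusError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed call."""

    def __init__(self, status: int) -> None:
        super().__init__(f"HTTP {status}")
        self.status = status


def is_retryable(status: int) -> bool:
    """429 (rate limited) and 5xx (server errors) are retried; others are not."""
    return status == 429 or 500 <= status <= 599


def with_backoff(fn: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 0.5, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying retryable failures with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except HTTPStatusError as err:
            if not is_retryable(err.status) or attempt == max_attempts - 1:
                raise  # non-retryable, or out of attempts
            # "Full jitter": wait a random time up to base_delay * 2**attempt.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Jitter spreads retries from many clients over time so they do not all hit the gateway again at the same instant.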

How do I add a Prompt node and set up the LLM chain in Flowise?

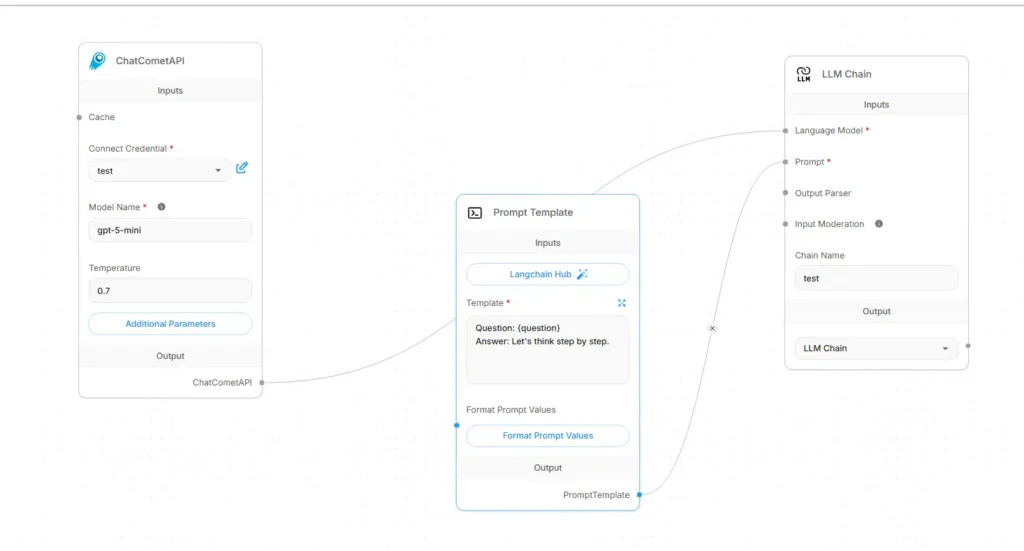

Flowise LLM flows are typically assembled as Input → Prompt Template → LLM Chain → Output. Here’s a concrete wiring recipe using CometAPI as the language model:

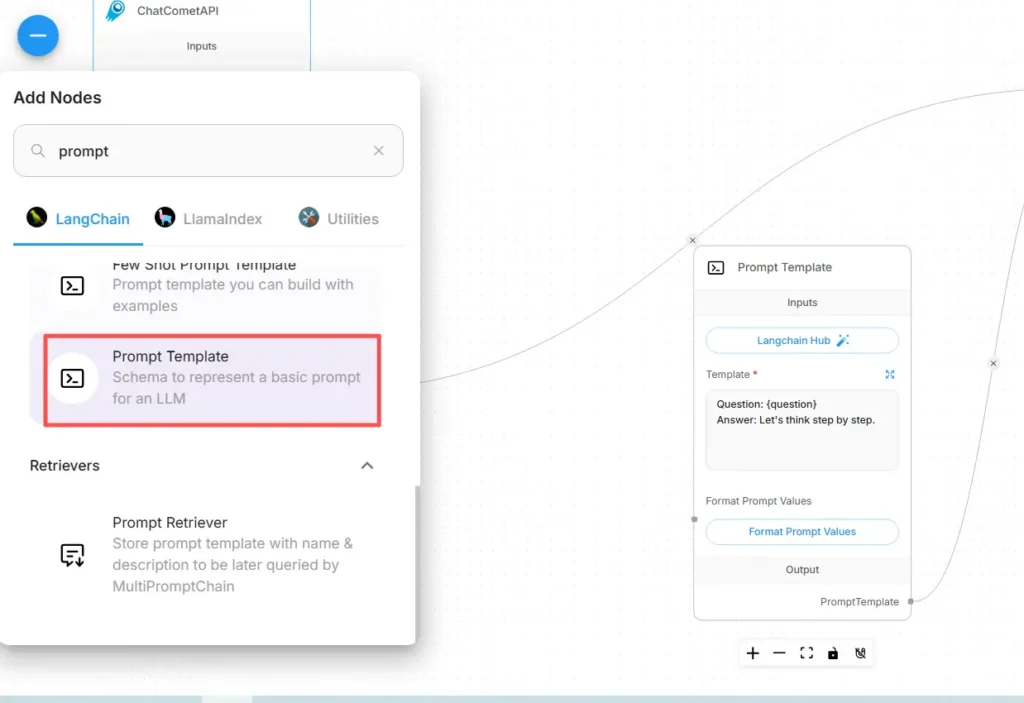

Step A — Create a Prompt Template node

- Add a Prompt Template node (or “Prompt” node) to the canvas.

- In the Prompt node, author your template using variables for dynamic content, e.g.:

You are an expert SRE. Given the following user question: {user_input}

Produce a step-by-step diagnostic plan and concise summary.

- Expose user_input as the variable to be filled from the Start/Input node.
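Flowise’s Prompt Template follows the LangChain f-string-style convention, where {variable} marks an input (to our understanding; check the node’s own help text). Python’s `str.format` uses the same convention, so this sketch shows how `user_input` is filled and why doubled braces produce literal text instead of a substitution. `render_prompt` is a hypothetical helper, not a Flowise function.

```python
# Python's str.format uses the same f-string-style convention: {name} is a
# variable, and doubled braces {{ }} are escapes that render literal braces.
TEMPLATE = (
    "You are an expert SRE. Given the following user question: {user_input}\n"
    "Produce a step-by-step diagnostic plan and concise summary."
)


def render_prompt(template: str, **variables: str) -> str:
    """Fill {name} placeholders; raises KeyError if a variable is missing."""
    return template.format(**variables)


if __name__ == "__main__":
    print(render_prompt(TEMPLATE, user_input="Why is p99 latency spiking?"))
    # Doubled braces are not substituted: this prints "Q: {user_input}".
    print(render_prompt("Q: {{user_input}}", user_input="ignored"))
```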

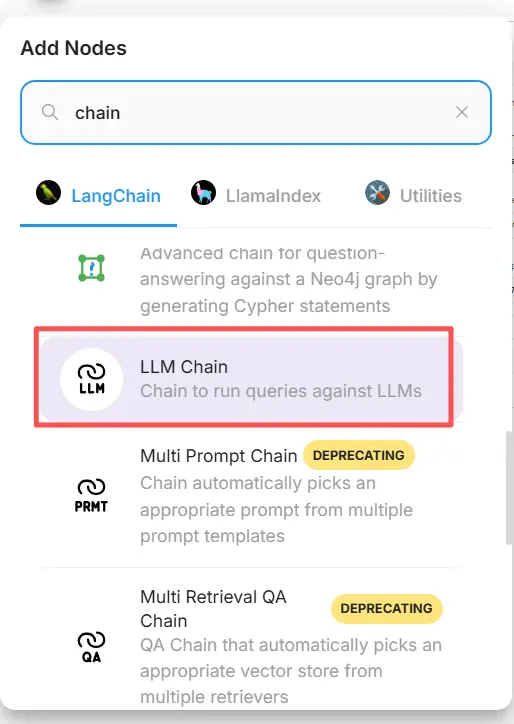

Step B — Add an LLM Chain node (LLM wrapper)

Add an LLM Chain or LLM node that represents a prompt → model invocation. In Flowise, the LLM Chain node usually has two main inputs: Language Model (the model/inference node) and Prompt (the prompt template).

Step C — Connect the nodes (explicit wiring)

- Connect the CometAPI node to the LLM Chain’s Language Model input. This tells the chain which model to call for generation (CometAPI becomes the model provider).

- Connect the Prompt Template node to the LLM Chain’s Prompt input. The chain will combine the prompt template with the variable values and send the resulting prompt to CometAPI.

- Connect the Start/Input node to the Prompt Template’s user_input variable.

- Connect the LLM Chain output to the Output node (UI response or downstream tool).

Visually the flow should read:

Start/Input → Prompt Template → LLM Chain (Language Model = CometAPI node) → Output
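The wiring can be mirrored in a few lines of plain Python to make the roles of the two LLM Chain inputs concrete. This is an illustrative analogue, not Flowise or LangChain code: `LLMChain` here is a hypothetical toy class, and any callable that maps a prompt to text stands in for the CometAPI node.

```python
from dataclasses import dataclass
from typing import Callable

# Any prompt -> text callable can fill the "Language Model" slot; in the
# Flowise flow that slot is filled by the ChatCometAPI node.
LanguageModel = Callable[[str], str]


@dataclass
class LLMChain:
    """Toy analogue of the LLM Chain node: a prompt template plus a model."""
    prompt_template: str
    llm: LanguageModel

    def run(self, **inputs: str) -> str:
        prompt = self.prompt_template.format(**inputs)  # "Prompt" input
        return self.llm(prompt)                          # "Language Model" input


if __name__ == "__main__":
    def echo_model(prompt: str) -> str:
        return f"(model saw) {prompt}"

    chain = LLMChain("Question: {user_input}", echo_model)
    print(chain.run(user_input="What is RAG?"))  # Start/Input ... -> Output
```

Because the model is a single field, pointing the chain at a different CometAPI model changes one value while the template and wiring stay the same, which is the property the Flowise canvas gives you visually.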

Step D — Test with a dry run

- Run the flow in Flowise’s test console. Inspect the prompt sent to CometAPI, response tokens, and latency. Adjust temperature, max tokens, or top-p in the CometAPI node settings to tune creativity vs. determinism.
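Once the flow behaves in the test console, you can also exercise it from scripts through Flowise’s prediction REST endpoint (POST /api/v1/prediction/&lt;chatflow-id&gt;), which is handy for repeatable regression prompts. The sketch assumes a local instance on Flowise’s default port 3000; `your-chatflow-id` is a placeholder, and the Authorization header is needed only if the chatflow is protected with a Flowise API key.

```python
import json
import urllib.request
from typing import Optional

# Placeholders: adjust to your deployment and the flow's ID from its API dialog.
FLOWISE_URL = "http://localhost:3000"
CHATFLOW_ID = "your-chatflow-id"


def build_prediction_request(question: str,
                             flowise_api_key: Optional[str] = None
                             ) -> urllib.request.Request:
    """Build a POST to the prediction endpoint of one chatflow."""
    url = f"{FLOWISE_URL}/api/v1/prediction/{CHATFLOW_ID}"
    headers = {"Content-Type": "application/json"}
    if flowise_api_key:  # only for chatflows protected by a Flowise API key
        headers["Authorization"] = f"Bearer {flowise_api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps({"question": question}).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    req = build_prediction_request("Why is p99 latency spiking?")
    print(req.get_method(), req.full_url)
    # To actually send it:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read()))
```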

What practical use cases does this integration enable?

Below are strong use cases where Flowise + CometAPI gives tangible benefits:

1) Multi-model routing / best-tool selection

Build flows that choose models per subtask: fast summarization with a low-cost model, factual grounding with a high-accuracy model, and image generation via an image model — all via CometAPI parameters without changing Flowise wiring.
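Per-subtask routing can be as simple as a lookup table whose values are sent as CometAPI’s model parameter. All model names below are hypothetical placeholders, and the chat-style payload assumes an OpenAI-compatible chat route; image models may be served from a different endpoint, so check CometAPI’s documentation before routing image generation this way.

```python
from typing import Dict

# Hypothetical subtask -> model routing table; replace the placeholder names
# with model IDs from CometAPI's model list.
ROUTES: Dict[str, str] = {
    "summarize": "cheap-fast-model",
    "ground_facts": "high-accuracy-model",
    "image": "image-generation-model",
}
DEFAULT_MODEL = "general-model"


def pick_model(subtask: str) -> str:
    """Return the model name for a subtask, or the default if it is unknown."""
    return ROUTES.get(subtask, DEFAULT_MODEL)


def build_payload(subtask: str, prompt: str) -> Dict[str, object]:
    """Build a chat-style request body; only the model field varies by subtask."""
    return {
        "model": pick_model(subtask),
        "messages": [{"role": "user", "content": prompt}],
    }
```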

2) Model A/B testing and evaluation

Spin up two parallel LLM Chains in Flowise (A vs. B), route the same prompt to different CometAPI model choices, and feed results to a small comparator node that scores outputs. Use metrics to decide which model to adopt.
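The comparator step can be sketched as a loop that sends each prompt to both arms and tallies which output scores higher. `model_a` and `model_b` stand in for two chains pointed at different CometAPI models, and `keyword_coverage` is a deliberately crude, hypothetical scorer; a real evaluation would use task-specific metrics or graded reviews.

```python
from typing import Callable, Dict, Sequence

Model = Callable[[str], str]
Scorer = Callable[[str, str], float]  # (prompt, output) -> score


def ab_test(prompts: Sequence[str], model_a: Model, model_b: Model,
            score: Scorer) -> Dict[str, int]:
    """Send each prompt to both models and count which output scores higher."""
    tally = {"A": 0, "B": 0, "tie": 0}
    for prompt in prompts:
        sa = score(prompt, model_a(prompt))
        sb = score(prompt, model_b(prompt))
        if sa > sb:
            tally["A"] += 1
        elif sb > sa:
            tally["B"] += 1
        else:
            tally["tie"] += 1
    return tally


def keyword_coverage(prompt: str, output: str) -> float:
    """Toy scorer: fraction of prompt words that reappear in the output."""
    words = set(prompt.lower().split())
    return len(words & set(output.lower().split())) / max(len(words), 1)
```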

3) Hybrid RAG (Retriever + LLM)

Use Flowise’s document store and vector retriever to gather context, then send a combined prompt template to CometAPI’s model. The unified API simplifies swapping the LLM used for final synthesis.
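The synthesis step’s prompt assembly can be sketched as follows. The word-overlap `retrieve` function is a toy stand-in for Flowise’s vector retriever (it does no embedding at all), and the prompt wording is only one reasonable choice; the resulting string is what would go to whichever CometAPI model you select.

```python
from typing import List, Sequence


def retrieve(query: str, docs: Sequence[str], k: int = 2) -> List[str]:
    """Toy retriever: rank docs by shared-word count (stand-in for vectors)."""
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())),
                    reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, context: Sequence[str]) -> str:
    """Combine retrieved chunks and the question into one synthesis prompt."""
    numbered = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer using only the context below. Cite chunk numbers.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {question}"
    )
```

Keeping retrieval and prompt assembly separate from the model call is what lets you swap the synthesis model without touching the retriever.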

4) Agentic tooling (APIs, DBs, code)

Compose tools (HTTP GET/POST, DB calls) inside Flowise agents, use CometAPI for language reasoning and action selection, and route outputs to connectors/tools. Flowise supports request tools and agent patterns for this.
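Flowise agents handle tool calling internally, but the underlying pattern is easy to see in isolation: the model names an action and the runtime dispatches it to a registered tool. The JSON action format and the two tools below are hypothetical, chosen only to illustrate the dispatch step and its error handling.

```python
import json
from typing import Callable, Dict

# Hypothetical tool registry; in Flowise, tools are wired as nodes instead.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
    "echo": lambda text: text,
}


def dispatch(model_output: str) -> str:
    """Parse the action chosen by the model and run the matching tool.

    Assumes the model was prompted to reply with JSON shaped like
    {"tool": "<name>", "input": "<argument>"}; the format is illustrative.
    """
    try:
        action = json.loads(model_output)
        name, arg = action["tool"], action["input"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return f"invalid action: {exc}"
    tool = TOOLS.get(name)
    if tool is None:
        return f"unknown tool: {name}"
    return tool(str(arg))
```

Returning error strings rather than raising lets the agent loop feed the problem back to the model so it can choose a valid action on the next turn.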

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

To begin, explore the models’ capabilities in the Playground and consult the Flowise API guide for detailed instructions. Before you start, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official ones to help you integrate.

Ready to go? Sign up for CometAPI today!

Final recommendations and wrap-up

Integrating Flowise with CometAPI is a pragmatic way to combine rapid visual orchestration (Flowise) with flexible access to many model providers (CometAPI). The pattern — store prompts as templates, keep model nodes decoupled, and instrument runs carefully — lets teams iterate quickly and switch providers without rewriting flows. Remember to manage credentials securely, account for latency and cost, and instrument your flows for observability and governance.