Grok Code Fast 1 API: What It Is and How to Access It

When xAI announced Grok Code Fast 1 in late August 2025, the AI community got a clear signal: Grok is no longer just a conversational assistant — it’s being weaponized for developer workflows. Grok Code Fast 1 (short: Code Fast 1) is a purpose-built, low-latency, low-cost reasoning model tuned specifically for coding tasks and agentic coding workflows — that is, workflows where the model can plan, call tools, and act like an autonomous coding assistant inside IDEs and pipelines. The model has already started showing up in partner integrations (notably as an opt-in preview in GitHub Copilot) and in a number of cloud and third-party provider catalogs such as CometAPI.

What is Grok Code Fast 1 and why does it matter?

xAI positions grok-code-fast-1 as a deliberately focused, low-latency coding model meant to be an active partner inside developer tools and automated workflows. It is a practical “pair programmer” optimized for speed, agentic tool use (search, function calls, code edits, tests), and large-context reasoning across repositories. As a specialist variant in xAI’s Grok family, it prioritizes two things: interactive speed and economical token costs for coding workflows. Rather than competing to be the broadest multimodal generalist, it targets the everyday developer loop: read code, propose edits, call tools (linters/tests), and iterate quickly.

Why it matters now:

- Teams increasingly expect instant feedback inside IDEs and CI — waiting multiple seconds for each assistant iteration breaks flow. Grok Code Fast 1 is explicitly designed to reduce that friction.

- It supports function calling, structured outputs, and visible reasoning traces, enabling better automation of multi-step tasks (search → edit → test → validate). That makes it a natural fit for agentic coding systems and orchestrated developer assistants.

Why “agentic” matters here

Agentic models are more than “autocomplete.” They can:

- decide which external tool to call (run tests, fetch package docs),

- break a task into substeps and execute them,

- return structured JSON results or make git-style changes programmatically.

Grok Code Fast 1 deliberately exposes its reasoning traces (so developers can inspect the chain-of-thought during streaming) and emphasizes native tool calling — two features that support safe, steerable agentic coding.

Performance and Speed of Grok Code Fast 1

How does Grok measure speed?

“Fast” in the model’s branding refers to multiple dimensions:

- Inference latency — token throughput and response time when generating code or reasoning traces. The model is optimized for lower latency so it fits interactive IDE loops (autocomplete, code suggestions, quick bug fixes) rather than only long-batch jobs.

- Cost efficiency — token pricing and model configuration aim to reduce per-use cost for routine coding tasks; third-party marketplaces list it with lower rates compared with larger, more general models.

- Developer productivity — perceived “speed” in a workflow: how quickly a developer can go from prompt to runnable code, including the model’s ability to call functions and return structured, testable outputs.

Real-world performance notes

| Action / Model | Grok Code Fast 1 (Observed) |
|---|---|
| Simple Line Completion | Instantaneous |
| Function Generation (5-10 lines) | < 1 second |
| Complex Component/File Generation (50+ lines) | 2-5 seconds |
| Refactoring a large function | 5-10 seconds |

Performance Comparison

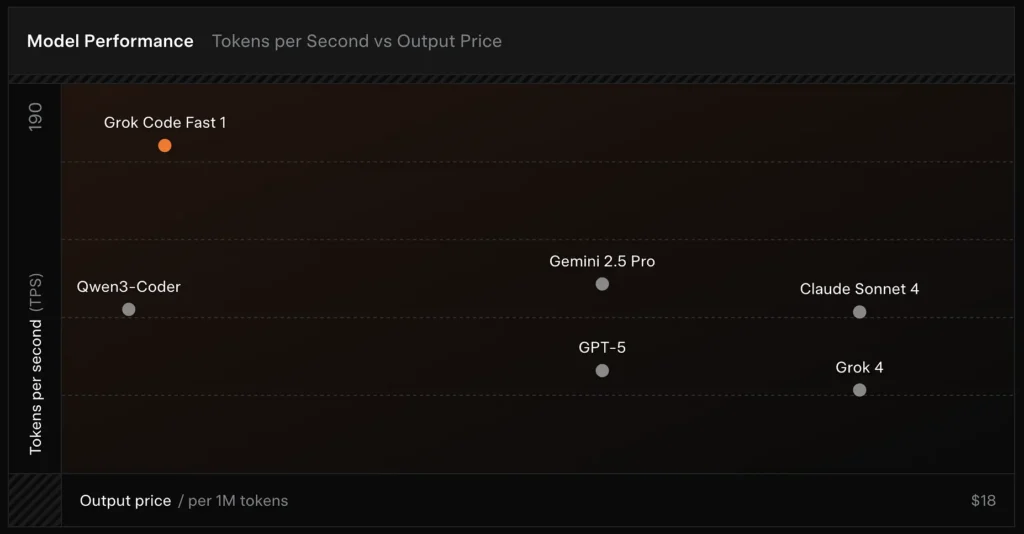

- Speed: Reached 190 tokens/second in testing.

- Price Comparison: GPT-5 output costs approximately $18 per 1M tokens, while Grok Code Fast 1 output costs only $1.50 per 1M tokens.

- Accuracy: Scored 70.8% on the SWE-Bench-Verified benchmark.
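To make these numbers concrete, here is a back-of-the-envelope sketch that uses only the figures quoted above (190 tokens/second, and $1.50 versus $18 per 1M output tokens). The function names and the 2,000-token response size are illustrative assumptions, and input-token pricing is ignored because this section does not quote it.

```python
# Back-of-the-envelope estimate using only the figures quoted above.
# Assumption: only output tokens are counted; input and cached-token
# pricing are not covered in this section and are ignored here.

GROK_OUTPUT_USD_PER_M = 1.50   # Grok Code Fast 1, per 1M output tokens
GPT5_OUTPUT_USD_PER_M = 18.00  # GPT-5 figure as quoted above
TOKENS_PER_SECOND = 190        # throughput reached in testing


def output_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of generating `tokens` output tokens at a per-1M-token rate."""
    return tokens / 1_000_000 * usd_per_million


def generation_seconds(tokens: int, tokens_per_second: float = TOKENS_PER_SECOND) -> float:
    """Time to stream `tokens` tokens at a steady throughput."""
    return tokens / tokens_per_second


tokens = 2_000  # hypothetical size of one agentic response
print(f"Grok Code Fast 1: ${output_cost_usd(tokens, GROK_OUTPUT_USD_PER_M):.4f}")
print(f"GPT-5 (as quoted): ${output_cost_usd(tokens, GPT5_OUTPUT_USD_PER_M):.4f}")
print(f"Streaming time: {generation_seconds(tokens):.1f} s")
```

At the quoted rates the output-cost gap is a constant factor of 12, so the absolute savings scale linearly with how many iterations an agent runs.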

Design choices that enable speed

- Large context window (256k tokens): lets the model ingest big codebases or long conversation histories without truncation, reducing the need for repeated context uploads.

- Cache-friendly prompting: the model and platform are optimized to cache prefix tokens that rarely change across agentic steps, which reduces repeated compute and improves latency for multi-step tool interactions.

- Native tool-calling protocol: instead of ad hoc XML or brittle string-based “function calls,” Grok’s API supports structured function / tool definitions the model can invoke during its reasoning process (with summaries or “thinking traces” streamed back). This minimizes parsing work and lets the model combine multiple tools reliably.
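As an illustration of what a structured tool definition can look like, the sketch below uses the OpenAI-style JSON schema format, which is an assumption based on the OpenAI-compatible surface described in this article. The run_tests tool, its parameters, and the stand-in implementation are all hypothetical.

```python
import json

# Hypothetical tool: the name, description, and parameters are illustrative.
RUN_TESTS_TOOL = {
    "type": "function",
    "function": {
        "name": "run_tests",
        "description": "Run the test suite and return a summary.",
        "parameters": {
            "type": "object",
            "properties": {
                "path": {"type": "string", "description": "Test file or directory"},
            },
            "required": ["path"],
        },
    },
}


def run_tests(path: str) -> dict:
    """Stand-in implementation; a real one would invoke a test runner."""
    return {"path": path, "passed": 3, "failed": 1}


# Map tool names to local Python callables.
TOOL_REGISTRY = {"run_tests": run_tests}


def dispatch_tool_call(tool_call: dict) -> str:
    """Execute one OpenAI-style tool call and return a JSON string result."""
    name = tool_call["function"]["name"]
    if name not in TOOL_REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    # Arguments arrive as a JSON-encoded string in the OpenAI-style format.
    args = json.loads(tool_call["function"]["arguments"])
    return json.dumps(TOOL_REGISTRY[name](**args))
```

The definition would be passed in the request's tools list, and the string returned by dispatch_tool_call would be sent back to the model as the tool result.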

What features does Grok Code Fast 1 provide?

Below are the core features that make Grok Code Fast 1 appealing for developer-facing integrations.

Core capabilities

- Agentic coding: built-in support for calling tools (test runners, linters, package lookups, git operations) and composing multi-step workflows.

- Reasoning traces in streaming: when used in streaming mode, the API surfaces intermediate “reasoning content” so developers and systems can observe the model’s planning and intervene.

- Structured outputs & function calling: returns JSON or typed results suitable for programmatic consumption (not just free-form text).

- Very large context (256k tokens): powerful for single-session, cross-file tasks.

- Fast inference: acceleration techniques and prompt-cache optimization significantly improve inference speed. Responses are fast enough that the model often completes dozens of tool calls by the time a user finishes reading a prompt.

- Agentic programming optimization: the model is proficient with common development tools such as grep, terminal operations, and file editing, and it integrates with major coding tools such as Cursor, GitHub Copilot, and Cline.

- Programming language coverage: strong across TypeScript, Python, Java, Rust, C++, and Go. It handles a full range of development tasks, from building projects from scratch to troubleshooting complex codebases and performing detailed bug fixes.

Developer ergonomics

- OpenAI-compatible SDK surface: xAI’s API emphasizes compatibility with popular SDKs and provides migration guidance to shorten developer onboarding.

- CometAPI and BYOK support: third-party providers like CometAPI expose Grok Code Fast 1 via REST for teams that prefer OpenAI-compatible endpoints. This helps integration in toolchains that expect OpenAI-like APIs.

How is Grok Code Fast 1 different from general-purpose LLMs?

Grok Code Fast 1 trades some of the breadth of a flagship conversational model in exchange for tighter tuning around code, developer tooling, and rapid tool loops. In practice this means:

- Faster round-trip latency for token generation and tool calls.

- Crisper, action-focused outputs (structured responses, JSON/function call metadata).

- A cost model tuned for high-volume code interactions (cheaper per token in many gateway listings).

How agentic is Grok Code Fast 1 — what does “agentic coding” mean in practice?

“Agentic” means the model can plan and execute multi-step tasks with external tool interactions. For Grok Code Fast 1, agentic power takes these forms:

- Function calling: Grok can request calls to external functions (e.g., run tests, fetch files, call linters) and incorporate the returned results for follow-up decisions.

- Visible reasoning traces: outputs may include stepwise reasoning that you can inspect and use to debug or steer agent behavior. This transparency helps when automating changes across a codebase.

- Persistent tool loops: Grok is designed to be used in short, repeated planning→execution→verification cycles rather than expecting a single monolithic reply.
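The planning → execution → verification cycle described above can be sketched without any network access. In this minimal example the "model" is a scripted stand-in and the message shapes are a simplification chosen for illustration; they are not the wire format of the xAI API.

```python
from typing import Callable

# A "model" here is any callable that takes the conversation so far and returns
# either {"tool": name, "args": {...}} or {"final": text}. This shape is a
# simplification for illustration, not the wire format of any real API.
Model = Callable[[list], dict]


def run_agent_loop(model: Model, tools: dict, task: str, max_steps: int = 5) -> str:
    """Alternate between model decisions and tool executions until done."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = model(history)
        if "final" in decision:
            return decision["final"]
        # Execute the requested tool and feed the result back to the model.
        result = tools[decision["tool"]](**decision["args"])
        history.append({"role": "tool", "name": decision["tool"], "content": result})
    return "stopped: step limit reached"


def scripted_model(history: list) -> dict:
    """Fake model: run the tests once, then report what they returned."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "run_tests", "args": {"path": "tests/"}}
    return {"final": f"tests reported: {tool_results[-1]['content']}"}


def fake_run_tests(path: str) -> str:
    """Stand-in tool; a real one would call a test runner."""
    return f"1 failed in {path}"
```

The step limit is the important design choice: it bounds cost and prevents a confused agent from looping forever on the same tool call.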

Use cases that benefit most from agentic behavior

- Automated code repair: locate failing tests, propose edits, run tests, iterate.

- Repository analysis: search for usage patterns across thousands of files, create summaries, or propose refactors with citations to exact files/lines.

- Assisted PR generation: compose PR descriptions, generate diff patches, and annotate tests — all within an orchestrated flow that can run in CI.

How can developers access and use the Grok Code Fast 1 API?

xAI exposes Grok models through its public API and partner integrations. There are three common access patterns:

- Direct xAI API: create an xAI account, generate an API key in the console, and call the REST endpoints. The xAI docs show the REST base as https://api.x.ai and specify standard Bearer token authentication. The docs and guides provide curl and SDK examples and emphasize compatibility with OpenAI-style requests for many tooling layers.

- IDE/service partners (preview integrations): GitHub Copilot (opt-in public preview) and other partners (Cursor, Cline, etc.) have been announced as launch collaborators, enabling Grok Code Fast 1 inside VS Code and similar tools, sometimes via “bring your own key” flows. If you use Copilot on Pro or enterprise tiers, look for the Grok Code Fast 1 opt-in option.

- Third-party gateways (CometAPI, API aggregators): vendors normalize API calls across providers and sometimes surface different rate tiers (helpful for prototyping or multi-provider fallbacks). CometAPI and other registries list model contexts, sample pricing, and example calls.
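For the direct route, a minimal sketch of a Bearer-authenticated request using only the Python standard library is shown below. It assumes the OpenAI-style /v1/chat/completions path under the base URL mentioned above; the helper names are illustrative, and the response shape in send_chat_request assumes the OpenAI-compatible format.

```python
import json
import urllib.request

# Assumption: the OpenAI-style chat completions path under https://api.x.ai.
XAI_CHAT_URL = "https://api.x.ai/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "grok-code-fast-1") -> urllib.request.Request:
    """Build (but do not send) an authenticated chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        XAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's text (OpenAI-style shape)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A typical call would read the key from the XAI_API_KEY environment variable, pass it to build_chat_request, and hand the result to send_chat_request.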

Below are two practical code examples (Python native SDK streaming and REST via CometAPI) that illustrate how you might drive Grok Code Fast 1 in a real app.

Design your tools: register function/tool definitions in the request so the model can call them; for streaming, capture reasoning_content to monitor the model’s plan.
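To show what capturing reasoning_content during streaming might involve, here is a sketch that separates reasoning text from answer text in OpenAI-style server-sent event lines. The chunk layout (choices → delta → reasoning_content / content) is an assumption based on the OpenAI-compatible format, and the sample lines are hand-written rather than captured from a real response.

```python
import json
from typing import Iterable, Iterator, Tuple


def iter_stream_events(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield ("reasoning", text) or ("content", text) from SSE data lines."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            delta = choice.get("delta", {})
            # Assumed field name, taken from the reasoning_content mentioned above.
            if delta.get("reasoning_content"):
                yield ("reasoning", delta["reasoning_content"])
            if delta.get("content"):
                yield ("content", delta["content"])


# Hand-written sample chunks for illustration; not captured from a real API call.
SAMPLE_STREAM = [
    'data: {"choices": [{"delta": {"reasoning_content": "Plan: run tests first."}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "def add(a, b):"}}]}',
    "data: [DONE]",
]

for kind, text in iter_stream_events(SAMPLE_STREAM):
    print(f"[{kind.upper()}] {text}")
```

Keeping the two streams separate lets a UI show the plan in a side panel while only the content stream is written into the editor.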

Use case code: Python (native xAI SDK, streaming sampler)

This example is adapted from xAI’s documentation patterns. Set the XAI_API_KEY environment variable to your real key and adapt the prompt (and any tool definitions you add) to your environment. Streaming shows tokens and reasoning traces.

    # Save as grok_code_fast_example.py
    import asyncio
    import os

    # Hypothetical xai_sdk per xAI docs
    import xai_sdk

    API_KEY = os.getenv("XAI_API_KEY")  # store your key securely


    async def main():
        client = xai_sdk.Client(api_key=API_KEY)

        # Example: ask the model to add a unit test and fix failing code
        prompt = """
    Repo structure:
    /src/math_utils.py
    /tests/test_math_utils.py

    Task: run the tests, identify the first failing test case, and modify src/math_utils.py
    to fix the bug. Show the minimal code diff and run tests again.
    """

        # Start a streaming sample; we want to see reasoning traces
        async for chunk in client.sampler.sample(
            model="grok-code-fast-1",
            prompt=prompt,
            max_len=1024,
            stream=True,
            return_reasoning=True,  # stream reasoning_content when available
        ):
            # chunk may include tokens and reasoning traces
            if hasattr(chunk, "delta"):
                if getattr(chunk.delta, "reasoning_content", None):
                    # model is exposing its internal planning steps
                    print("[REASONING]", chunk.delta.reasoning_content, flush=True)
                if getattr(chunk.delta, "token_str", None):
                    print(chunk.delta.token_str, end="", flush=True)


    if __name__ == "__main__":
        asyncio.run(main())

Notes

- The return_reasoning=True flag represents the docs’ guidance to stream reasoning traces; capture and display them so you can audit the model’s plan.

- In a real agentic setup you would also register tools (e.g., run_tests, apply_patch) and authorize the model to call them. The model can then decide to invoke run_tests() and use outputs to inform a patch.

Use case code: REST (CometAPI / OpenAI-compatible)

If your stack expects OpenAI-style REST endpoints, CometAPI exposes grok-code-fast-1 as a compatible model string. The example below sends an OpenAI-style chat completions request with the requests library.

    import os

    import requests

    # The API key is read from the environment; never hard-code it.
    CometAPI_KEY = os.getenv("CometAPI_API_KEY")
    BASE = "https://api.cometapi.com/v1/chat/completions"

    headers = {
        "Authorization": f"Bearer {CometAPI_KEY}",
        "Content-Type": "application/json",
    }

    payload = {
        "model": "grok-code-fast-1",
        "messages": [
            {"role": "system", "content": "You are Grok Code Fast 1, a fast coding assistant."},
            {"role": "user", "content": "Write a function in Python that merges two sorted lists into one sorted list."},
        ],
        "max_tokens": 300,
        "stream": False,
    }

    # A timeout keeps the call from hanging indefinitely on network problems.
    resp = requests.post(BASE, json=payload, headers=headers, timeout=60)
    resp.raise_for_status()  # raise on 4xx/5xx responses
    print(resp.json())

Notes

- CometAPI acts as a bridge when native gRPC or SDK access is problematic in your environment; it supports the same 256k context and exposes grok-code-fast-1. Check provider availability and rate limits.
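Since rate limits vary by provider, one common way to cope with them is exponential backoff. The sketch below is a generic pattern, not something taken from CometAPI’s or xAI’s documentation; the set of retryable status codes, the delays, and the send callable are all assumptions you would adapt to your client.

```python
import time
from typing import Callable, Tuple

# `send` is any zero-argument callable returning (status_code, body); wrapping
# the HTTP call this way keeps the retry logic independent of the client library.
Send = Callable[[], Tuple[int, str]]

# Assumed set of statuses worth retrying: rate limiting and transient server errors.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def call_with_backoff(send: Send, max_attempts: int = 4, base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> Tuple[int, str]:
    """Retry retryable responses with exponential backoff (max_attempts >= 1)."""
    for attempt in range(max_attempts):
        status, body = send()
        if status not in RETRYABLE_STATUS:
            return status, body
        if attempt < max_attempts - 1:
            sleep(base_delay * (2 ** attempt))  # 1s, 2s, 4s, ...
    return status, body  # give up and surface the last response
```

With requests, send could be a small lambda that posts the payload and returns (resp.status_code, resp.text); injecting sleep makes the function easy to test without real waiting.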

What are practical integration patterns and best practices?

IDE-first (pair programming)

Embed Grok Code Fast 1 as the completion/assistant model inside VS Code or other IDEs. Use short prompts that ask for small, testable edits. Keep the assistant in a tight loop: generate patch → run tests → re-run assistant with failing test output.

CI automation

Use Grok Code Fast 1 to triage flaky failures, suggest fixes, or auto-generate unit tests for newly added code. Because it’s priced and architected for low latency, it is better suited to frequent CI runs than more expensive generalist models.

Agent orchestration

Combine the model with robust tool guards: always execute proposed patches in a sandbox, run the full test suite, and require human review for non-trivial security or design changes. Use visible reasoning traces to audit actions and make them reproducible.
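One simple way to keep proposed changes away from the real working tree is to evaluate them in a throwaway copy. The sketch below is an assumption-laden illustration: run_in_sandbox, pytest_command, and the apply_edit callback are hypothetical names, and copying to a temporary directory gives file isolation only, not protection against malicious code (a container or VM would be needed for that).

```python
import shutil
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, List, Tuple


def pytest_command() -> List[str]:
    """Command line that runs pytest with the current interpreter."""
    return [sys.executable, "-m", "pytest", "-q"]


def run_in_sandbox(repo_dir: str, apply_edit: Callable[[Path], None],
                   command: List[str], timeout: int = 300) -> Tuple[int, str]:
    """Copy the repo to a temp dir, apply an edit there, and run a command.

    The original working tree is never modified. This isolates files only;
    it is not a security boundary.
    """
    with tempfile.TemporaryDirectory() as tmp:
        sandbox = Path(tmp) / "repo"
        shutil.copytree(repo_dir, sandbox)
        apply_edit(sandbox)  # e.g. write the model's proposed file contents
        proc = subprocess.run(command, cwd=sandbox, capture_output=True,
                              text=True, timeout=timeout)
        return proc.returncode, proc.stdout + proc.stderr
```

A caller would pass a function that writes the model’s proposed changes under the sandbox path, run pytest_command() there, and promote the change to the real repository only if the return code is 0 and a human has approved it where required.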

Prompt engineering tips

- Provide the model with the exact files or a small, focused context window for edits.

- Prefer structured output schemas for diffs or JSON summaries — they’re easier to validate automatically.

- When running multi-step flows, log the model’s tool calls and results so you can replay or debug agent behaviour.
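The last two tips can be sketched in a few lines: validate a structured reply before acting on it, and append each tool call to a JSON Lines log. The schema (file, summary, diff) and both function names are hypothetical and chosen only for illustration.

```python
import json
from typing import Any

# Hypothetical schema for an edit summary; the field names are illustrative.
REQUIRED_FIELDS = {"file": str, "summary": str, "diff": str}


def parse_edit_summary(raw: str) -> dict:
    """Parse a model reply as JSON and check required fields and types."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    return data


def log_tool_call(log_path: str, name: str, args: dict, result: Any) -> None:
    """Append one tool call as a JSON line so a run can be replayed later."""
    record = {"tool": name, "args": args, "result": result}
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")
```

Rejecting malformed replies early means the rest of the pipeline only ever sees data in a known shape, and the JSON Lines log can be replayed line by line when debugging an agent run.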

Concrete use case: auto-fix a failing pytest test

Below is an illustrative Python workflow (simplified) showing how you might integrate Grok Code Fast 1 into a test-fix loop.

    # pseudo-code: agentic test-fix loop with grok-code-fast-1

    # 1) collect failing test output
    failing_test_output = run_pytest_and_capture("tests/test_math.py")

    # 2) ask Grok to propose a patch and tests
    prompt = f"""
    Pyproject: repo root
    Failing test output:
    {failing_test_output}

    Please:
    1) Explain root cause briefly.
    2) Provide a patch in unified diff format that should fix the issue.
    3) Suggest a minimal new/updated unit test to prove the fix.
    """

    resp = call_grok_model("grok-code-fast-1", prompt, show_reasoning=True)

    # 3) parse structured patch from response (validate!)
    patch = extract_patch_from_response(resp)
    if is_patch_safe(patch):
        apply_patch(patch)
        test_result = run_pytest_and_capture("tests/test_math.py")
        report_back_to_grok(test_result)
    else:
        alert_human_review(resp)

This loop shows how agentic behavior (propose → validate → run → iterate) can be implemented while the developer retains control over application of changes.
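Three of the helpers named in the loop above could be filled in roughly as follows. This is a sketch under stated assumptions, not a reference implementation: the allow-listed path prefixes and the 200-line limit are arbitrary policy choices, the diff extraction is deliberately naive (it stops at the first line that does not look like part of a diff), and run_pytest_and_capture assumes pytest is installed.

```python
import re
import subprocess
import sys

ALLOWED_PREFIXES = ("src/", "tests/")  # hypothetical policy for this repo
MAX_PATCH_LINES = 200                  # arbitrary size limit for auto-apply
DIFF_PREFIXES = ("--- ", "+++ ", "@@", "+", "-", " ")


def run_pytest_and_capture(test_path: str) -> str:
    """Run pytest on one path and return combined stdout and stderr."""
    proc = subprocess.run([sys.executable, "-m", "pytest", test_path, "-q"],
                          capture_output=True, text=True)
    return proc.stdout + proc.stderr


def extract_patch_from_response(text: str) -> str:
    """Return the first unified diff found in the reply, or "" if none."""
    lines = text.splitlines()
    for start in range(len(lines) - 1):
        if lines[start].startswith("--- ") and lines[start + 1].startswith("+++ "):
            end = start
            # Consume lines for as long as they look like part of a diff.
            while end < len(lines) and lines[end].startswith(DIFF_PREFIXES):
                end += 1
            return "\n".join(lines[start:end]) + "\n"
    return ""


def is_patch_safe(patch: str) -> bool:
    """Accept only small patches whose targets are all on the allow-list."""
    if not patch or len(patch.splitlines()) > MAX_PATCH_LINES:
        return False
    targets = re.findall(r"^\+\+\+ (?:b/)?(\S+)", patch, flags=re.MULTILINE)
    return bool(targets) and all(
        t.startswith(ALLOWED_PREFIXES) and ".." not in t for t in targets
    )
```

The safety check is intentionally conservative: anything it cannot positively classify as a small edit to an allowed path falls through to human review.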

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

Developers can access the Grok Code Fast 1 API through CometAPI, which keeps its model versions in sync with the official release. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing it, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a price far lower than the official price to help you integrate.


Conclusion

Grok Code Fast 1 is not billed as the single “best” model for every job. Instead, it’s a specialist — tuned for agentic, tool-rich coding workflows where speed, a large context window, and low cost per iteration matter most. That combination makes it a practical daily driver for many engineering teams: fast enough for live editor experiences, cheap enough to iterate, and sufficiently transparent to be safely integrated with appropriate boundaries.