Google I/O 2025 Brings the Latest Updates to the Gemini 2.5 Series Models

At Google I/O 2025, held in Mountain View, California, the Google DeepMind and Google AI teams unveiled significant enhancements to the Gemini 2.5 series of large language models. The updates span both the Gemini 2.5 Pro and Gemini 2.5 Flash variants and introduce advanced reasoning capabilities, native audio output, multilingual support, security safeguards, and substantial efficiency gains. Together, these improvements aim to give developers, enterprises, and end users more reliable, natural, and cost-effective AI services across Google AI Studio, the Gemini API, and Vertex AI.

Gemini 2.5 Pro Enhancements

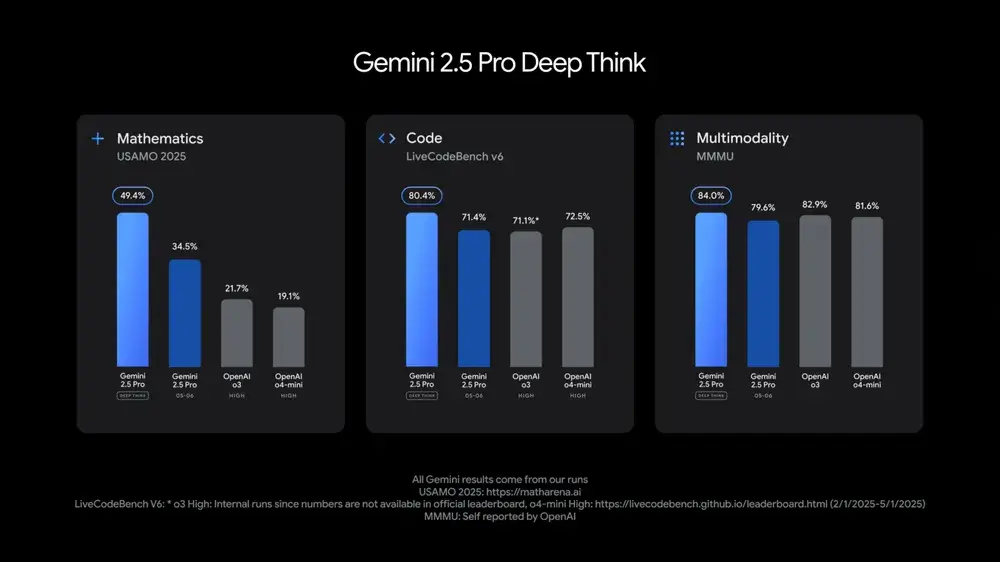

Deep Think: Enhanced Multi-Hypothesis Reasoning

A marquee feature for 2.5 Pro is Deep Think, an experimental reasoning mode that lets the model internally generate, compare, and refine multiple solution pathways before producing its final output. Early benchmarks demonstrate Deep Think’s strength: it leads on the 2025 USAMO math exam, tops LiveCodeBench for competition-level coding, and scores 84.0% on the MMMU multimodal reasoning test.

Starting this month, Deep Think is available to “trusted testers” through the Gemini API. Google said it needs more time for security assessments before making Deep Think more widely available.

Native Audio and Multilingual Dialogue

Building on its text capabilities, Gemini 2.5 Pro now supports native audio output across 24 languages. This feature provides context-aware prosody and emotional inflection, making AI interactions feel more human. Alongside audio, the model’s dialogue system adapts its tone dynamically, which can add empathy in customer-service and educational applications. Early demos included real-time voice chats with nuanced emphasis and multilingual code walkthroughs, highlighting Google’s push toward truly conversational AI.

Security and Trustworthiness

Security enhancements in 2.5 Pro focus on mitigating indirect prompt-injection attacks. The updated framework employs stricter input sanitization and dynamic context filtering, essential for regulated industries handling sensitive data. According to Google’s developer blog, these safeguards reduce vulnerability by up to 40% in internal red-team evaluations, laying the groundwork for broader enterprise adoption.

Gemini 2.5 Flash Optimizations

Efficiency and Speed

Gemini 2.5 Flash, the latency-optimized sibling of 2.5 Pro, has been overhauled for 22% greater computational efficiency and faster response times. During the keynote, Demis Hassabis noted that Flash now “performs better in nearly every dimension” compared to its predecessor, with preview availability for developers in Google AI Studio, Vertex AI, and the Gemini mobile app. General availability is slated for early June 2025. The latest Gemini 2.5 Flash version, released at Google I/O 2025, is gemini-2.5-flash-preview-05-20.

Expanded Multimodal Capabilities

Both Flash and Pro variants share new multimodal reasoning abilities, allowing users to co-iterate on text, images, audio, and even video inputs. Notable use cases shown at I/O included fractal visualizations generated from a single prompt and “Video to Learning App” pipelines that convert instructional videos into interactive educational experiences.

Developer Ecosystem Updates

Google AI Studio Integration

Google AI Studio now offers seamless access to both Gemini 2.5 Pro and Flash. The native code editor embeds the models directly, enabling developers to generate production-ready web apps through simple prompts. Starter templates cover tasks ranging from conversational agents with audio to real-time data-analysis dashboards, reducing prototype cycles from weeks to minutes.

Gemini API Advancements

The Gemini API received several updates:

- Streamlined Function-Calling: Simplified schema definitions cut integration effort by 30%.

- Thinking Budget Controls: Developers can now dial in reasoning depth for cost-performance trade-offs.

- Agentic Workflows: New endpoints support multi-step agent orchestration via Project Mariner, enabling the AI to autonomously perform up to 10 linked tasks (e.g., data retrieval, summarization, and report generation) with a single call.
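As a rough illustration of the first two items above, the sketch below assembles a JSON request body that combines a thinking budget with one function declaration. It only builds the payload and sends nothing. The field names (`generationConfig.thinkingConfig.thinkingBudget`, `tools[].functionDeclarations`) reflect the public Gemini REST API as commonly documented and should be checked against the current API reference; the helper `build_generate_request` and the `get_weather` tool are hypothetical names introduced here for illustration.

```python
import json


def build_generate_request(prompt, thinking_budget, function_declarations=None):
    """Assemble a request body for a generateContent-style call (nothing is sent).

    Assumption: the body layout follows the Gemini REST API; verify field
    names against the official reference before relying on them.
    """
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            # A larger budget allows more reasoning tokens, at higher cost and latency.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }
    if function_declarations:
        body["tools"] = [{"functionDeclarations": list(function_declarations)}]
    return body


# Hypothetical tool, declared with a JSON-Schema-style parameter block.
get_weather = {
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

request_body = build_generate_request(
    "What is the weather in Paris?", 1024, [get_weather]
)
print(json.dumps(request_body, indent=2))
```

Keeping payload construction separate from the network call makes it easy to compare budgets side by side (for example 0, 1024, and a larger value) and measure the cost-performance trade-off before committing to a setting.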

Gemini Code Assist General Availability

Gemini 2.5 powers Gemini Code Assist for individuals and its GitHub integration, both now generally available across free and paid tiers. The tool excels at code transformation, front-end UI generation, and automated refactoring, meeting the needs of both novice programmers and seasoned engineers.

Availability and Pricing

The Gemini 2.5 series is accessible to all Google Cloud customers via Vertex AI, with performance tiers determined by subscription level:

- AI Pro ($19.99/month) grants access to Gemini 2.5 Flash and standard 2.5 Pro features.

- AI Ultra ($249.99/month) unlocks Deep Think, priority throughput, and the full suite of multimodal and agentic capabilities, along with bundled services like 30 TB cloud storage and YouTube Premium.

Conclusion and Outlook

With the latest Gemini 2.5 Pro and Flash updates, Google is setting a new benchmark for AI reasoning, interactivity, and developer productivity. Deep Think’s multi-hypothesis evaluation, combined with native audio, security advancements, and efficiency gains, paves the way for more intelligent, trustworthy, and accessible AI systems. As these models roll out in June, their integration across Google AI Studio, the Gemini API, and Vertex AI will accelerate innovation—from smarter coding assistants to immersive educational tools—reshaping how individuals and organizations harness artificial intelligence.

Getting Started

CometAPI provides a unified REST interface that aggregates hundreds of AI models, including the Gemini family, under a consistent endpoint, with built-in API-key management, usage quotas, and billing dashboards. This spares developers from juggling multiple vendor URLs and credentials.

Developers can access the Gemini 2.5 Flash Preview API (model: gemini-2.5-flash-preview-05-20), the Gemini 2.5 Pro API (model: gemini-2.5-pro-preview-05-06), and other models through CometAPI. To begin, explore the models’ capabilities in the Playground and consult the API guide for detailed instructions. Before making requests, make sure you have logged in to CometAPI and obtained an API key.
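A minimal sketch of what such a request might look like, using only the Python standard library. The endpoint URL, the OpenAI-style `messages` payload, the bearer-token header, and the `COMETAPI_KEY` environment variable are all assumptions made for illustration; the CometAPI guide is the authority on the actual URL and request format. The model identifier comes from the article.

```python
import json
import os
import urllib.request

# Assumption: an OpenAI-style chat endpoint. Replace with the URL given in
# the CometAPI guide.
COMET_CHAT_URL = "https://api.cometapi.com/v1/chat/completions"


def build_comet_request(api_key, model, prompt):
    """Build (but do not send) a POST request for a single-turn chat call."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        COMET_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical environment variable name; falls back to a placeholder key.
request = build_comet_request(
    os.environ.get("COMETAPI_KEY", "YOUR_API_KEY"),
    "gemini-2.5-flash-preview-05-20",
    "Summarize the Gemini 2.5 updates announced at Google I/O 2025.",
)
print(request.full_url)
```

Calling `send(request)` performs the actual network call. Switching to Gemini 2.5 Pro would, under the same assumptions, only require changing the model string to `gemini-2.5-pro-preview-05-06`.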