Grok 3 and o3 represent the latest frontier in large-language modeling from two of the most closely watched AI labs today. As xAI and OpenAI vie for dominance in reasoning, multimodality, and real-world impact, understanding the distinctions between Grok 3 and o3 is crucial for developers, researchers, and enterprises considering adoption. This in-depth comparison explores their origins, architectural innovations, benchmark performances, practical applications, and value propositions, helping you determine which model aligns best with your objectives.

What are Grok 3 and o3’s origins and release timelines?

Understanding the genesis and visions behind Grok 3 and o3 sets the stage for appreciating how each model has been positioned in the AI landscape.

What is Grok 3?

xAI’s Grok series began as an unguarded, rule-light chatbot on X (formerly Twitter). Grok 2 added image generation through Black Forest Labs’ FLUX.1 model, but Grok 3 marks a pivot: xAI markets it as the start of an “Age of Reasoning Agents,” offering deep domain expertise in finance, coding, and legal text extraction. Elon Musk’s vision emphasizes open debate and fewer content constraints, allowing Grok 3 to generate controversial or unfiltered insights when prompted. A “Big Brain” mode spends additional compute on harder problems, loosely mimicking human deliberation, and a new DeepSearch engine scours the real-time web and X for granular context.

With Grok 3, xAI set out to push the series beyond conversational agents into autonomous reasoning. Unveiled in beta on February 19, 2025, Grok 3 was promoted as “our most advanced model yet,” blending stronger reasoning with extensive pretrained knowledge to support deeper, context-aware dialogues and tasks. Elon Musk claimed that Grok 3 “surpasses all current AI rivals,” including GPT-4o, Gemini, and Anthropic’s Claude, framing it as a direct challenge to OpenAI’s offerings.

What is o3?

OpenAI’s o-series traces back to early experiments in chaining reasoning steps before generating responses. On April 16, 2025, OpenAI formally released o3 alongside o4-mini, highlighting their ability to “think for longer before responding” and to agentically invoke external tools and APIs, capabilities crucial for complex, multimodal workflows. Sam Altman lauded o3 as demonstrating “genius-level intelligence,” signaling confidence in the model’s capacity to tackle tasks traditionally reserved for expert human operators.

OpenAI’s o-series evolved from o1, which introduced a private chain of thought in late 2024. Architecturally, o3 retains a transformer foundation but schedules extra inference steps to “think” internally before it outputs an answer. Early-access rounds in December 2024 and January 2025 solicited feedback from security researchers, which OpenAI used to tune the balance between latency and reasoning accuracy. o3-mini, aimed at cost-sensitive applications, keeps latency close to o1-mini while boosting STEM capabilities. The full o3 model, offered on paid ChatGPT tiers and through the API, spends more inference time on complex tasks, reflecting OpenAI’s incremental, safety-conscious development approach.

How do their model architectures and training strategies differ?

While both models build on transformer foundations, they diverge in scale, reasoning mechanisms, and multimodal integrations.

Core architecture

- Grok 3: Retains a large-scale transformer backbone augmented with bespoke reasoning layers designed to sequence inferential steps explicitly. This architecture aims to mirror human-like chain-of-thought but at machine scale.

- o3: Implements an “agentic” reasoning paradigm in which callers choose a reasoning-effort level (low, medium, or high) that controls how much internal reasoning the model performs, trading response latency against depth of analysis.
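On OpenAI's API, the low/medium/high trade-off is exposed as the `reasoning_effort` request field. The sketch below only assembles a Chat Completions request body and sends nothing. The field name and its three values follow OpenAI's API reference. The helper `build_o3_request` and the example prompts are hypothetical.

```python
import json
from typing import Literal

ReasoningEffort = Literal["low", "medium", "high"]


def build_o3_request(prompt: str, effort: ReasoningEffort = "medium") -> dict:
    """Build (but do not send) a Chat Completions request body for o3."""
    if effort not in ("low", "medium", "high"):
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    return {
        "model": "o3",
        # Higher effort lets the model spend more hidden reasoning tokens,
        # which usually means slower but more thorough answers.
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


quick = build_o3_request("Summarise this changelog in one line.", effort="low")
deep = build_o3_request("Find the race condition in this scheduler.", effort="high")
print(json.dumps(deep, indent=2))
```

With the official `openai` Python package, the same fields can be passed as keyword arguments, for example `client.chat.completions.create(**deep)`. If the field is omitted, the API falls back to its default of medium.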

Training data and scale

- Grok 3: According to xAI, Grok 3 was trained on approximately 200,000 GPUs over several weeks, using a mix of web-scale text, code repositories, and curated multimedia datasets to enable both linguistic and visual understanding.

- o3: Built on OpenAI’s extensive corpus of web and licensed data, o3 was further trained with large-scale reinforcement learning aimed specifically at high-level reasoning. OpenAI has not disclosed GPU counts; its release notes instead emphasize efficient scaling to support API access for both researchers and enterprise clients.

Multimodal capabilities

- Grok 3: The beta release teased image generation and deep search functionalities, suggesting xAI is aiming for a unified model capable of both understanding and creating visual content alongside text.

- o3: Can agentically use and chain tools: web search, Python code execution, file analysis, and image generation in ChatGPT, plus developer-defined functions through the API. This offers a modular approach to multimodality rather than a monolithic, all-in-one model.
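On the developer side, the API route to tool use is function calling. You advertise a function schema, the model replies with tool calls, and your code executes them. The message and tool-call shapes below follow OpenAI's Chat Completions function-calling format. The `get_exchange_rate` tool, its static rate, and the mocked model reply are hypothetical, and no request is sent.

```python
import json
from typing import Any, Callable


def get_exchange_rate(base: str, quote: str) -> dict:
    """Hypothetical local tool backed by a static, illustrative rate table."""
    rates = {("USD", "EUR"): 0.9}  # made-up figure, not a live rate
    return {"base": base, "quote": quote, "rate": rates.get((base, quote))}


# Function schema advertised to the model in the request's "tools" field.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_exchange_rate",
        "description": "Look up the exchange rate between two currencies.",
        "parameters": {
            "type": "object",
            "properties": {"base": {"type": "string"}, "quote": {"type": "string"}},
            "required": ["base", "quote"],
        },
    },
}]

REGISTRY: dict[str, Callable[..., Any]] = {"get_exchange_rate": get_exchange_rate}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested tool call and build the 'tool' messages to send back."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return replies


# Mocked assistant turn in the Chat Completions tool-call shape (no API call is made).
mock_reply = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_exchange_rate", "arguments": '{"base": "USD", "quote": "EUR"}'},
    }],
}
print(run_tool_calls(mock_reply))
```

In a live loop, the returned `tool` messages are appended to the conversation and sent back so the model can continue. A single turn may contain several tool calls, which is why the function iterates over all of them.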

Model scale, compute allocation, and reasoning passes

xAI says Grok 3 used “10× more compute” than Grok 2 and was refined with large-scale reinforcement learning, which lets it reason for seconds or minutes while correcting its own errors. Some of xAI’s reported benchmark scores use consensus@64 (cons@64), which works like an ensemble method: 64 candidate answers are sampled and the most frequent one is selected. o3, by contrast, integrates chain-of-thought as an internal planning step. It avoids external sampling and instead spends more compute on hidden reasoning tokens before it answers. Its reasoning depth adapts to the prompt: simpler queries use fewer “thinking” steps, while complex prompts trigger longer internal deliberation.
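At its core, consensus@64 aggregation is majority voting over sampled answers. The sketch assumes answers have already been extracted and normalized into comparable strings; real evaluation harnesses do more normalization. `consensus_answer` and `consensus_accuracy` are illustrative names, and the toy data uses k=5 instead of 64 to stay short.

```python
from collections import Counter
from typing import Hashable, Sequence


def consensus_answer(samples: Sequence[Hashable]) -> Hashable:
    """Majority vote over k sampled answers.

    Ties go to the answer seen first: Counter keeps insertion order and
    most_common() orders equal counts by first occurrence.
    """
    if not samples:
        raise ValueError("need at least one sample")
    answer, _count = Counter(samples).most_common(1)[0]
    return answer


def consensus_accuracy(
    sampled_answers: Sequence[Sequence[Hashable]],
    reference_answers: Sequence[Hashable],
) -> float:
    """Fraction of problems whose majority-vote answer matches the reference."""
    if len(sampled_answers) != len(reference_answers):
        raise ValueError("one reference answer is needed per problem")
    correct = sum(
        consensus_answer(samples) == ref
        for samples, ref in zip(sampled_answers, reference_answers)
    )
    return correct / len(reference_answers)


# Toy data: 3 problems, k=5 samples each (cons@64 would use 64 per problem).
samples = [
    ["204", "204", "17", "204", "9"],
    ["33", "12", "12", "33", "12"],
    ["5", "6", "7", "8", "5"],
]
references = ["204", "33", "5"]
print(consensus_accuracy(samples, references))
```

Voting needs k independent generations per question, so cons@64 costs roughly 64 times the generation compute of a single attempt. Comparing it against another model's single-attempt scores can therefore flatter the model that was sampled more.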

Which model offers superior benchmark performance?

Academic and coding benchmarks

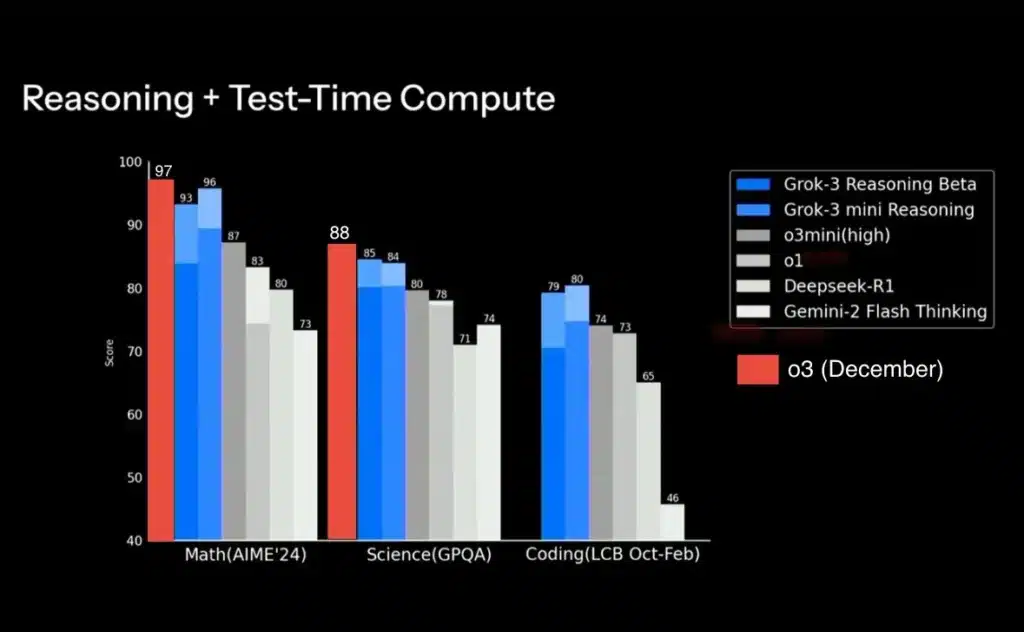

On the AIME 2025 mathematical reasoning test, Grok 3 scored 89.2% using consensus@64, marginally ahead of o3-mini-high’s 87.3% on the same exam. In competitive programming, o3 recorded a Codeforces Elo of 2727, surpassing both Grok 3 (estimated Elo ~2500) and o3-mini (Elo ~2300).

Real-world user preferences and adversarial testing

xAI reports a Chatbot Arena Elo of 1402 for Grok 3, based on blind pairwise votes from human users, outperforming Grok 2’s score of 1203 (x.ai). OpenAI’s internal evaluations show o3 achieving a 91% user satisfaction rate in comparative studies against o1, with notable gains in “depth of explanation” metrics (OpenAI). However, independent analyses have questioned xAI’s benchmark methodology for highlighting Grok 3’s consensus@64 results without reporting comparable consensus scores for OpenAI’s models, underscoring the need for standardized evaluation protocols.
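The standard Elo formula turns a rating gap into an expected score, roughly a head-to-head win probability: E_A = 1 / (1 + 10^((R_B - R_A) / 400)). The sketch below applies it to the gaps quoted in this section. It assumes both Codeforces ratings and Arena scores can be read on that standard 400-point logistic scale. Ratings are only comparable within the same pool, and the Grok 3 Codeforces figure is the article's estimate.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B on the standard Elo scale (base 10, 400-point spread)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Rating pairs quoted in this article; the Grok 3 Codeforces figure is an estimate.
pairs = {
    "Codeforces: o3 (2727) vs Grok 3 est. (~2500)": (2727, 2500),
    "Chatbot Arena: Grok 3 (1402) vs Grok 2 (1203)": (1402, 1203),
}
for label, (a, b) in pairs.items():
    print(f"{label}: expected score {elo_expected_score(a, b):.2f}")
```

Under these assumptions, both gaps translate to the higher-rated model being expected to win roughly three out of four head-to-head comparisons: about 0.79 for the Codeforces pair and 0.76 for the Arena pair.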

In what real-world applications do these models excel?

Beyond benchmarks, real-world tasks illuminate how each model can drive value across industries.

Creative and research workflows

- Grok 3: Early reviewers praised its “deep search” feature, which surfaces niche academic references and generates detailed outlines for thought-heavy content such as technical papers and creative writing prompts. The integrated image generation further allows seamless ideation cycles combining text and visuals.

- o3: Developers leverage its multi-pass reasoning to prototype complex software modules, debug code snippets, and generate data visualizations via chained calls, streamlining end-to-end research workflows without leaving the API environment.

Scientific and lab-based tasks

- Grok 3: While xAI’s beta has not been extensively tested in lab contexts, its enhanced reasoning core shows promise for hypothesis generation and literature reviews, potentially reducing the time scientists spend on preliminary data mining.

- o3: In controlled virology-troubleshooting evaluations, o3 has performed strongly. It can assist in protocol design, error analysis, and data interpretation, effectively acting as a virtual lab assistant. However, organizations must implement strict governance to mitigate biosecurity risks.

What ecosystems and integrations drive adoption?

Grok 3: X integration and real-time insights

Grok 3 is deeply woven into X’s Premium+ tier and xAI’s SuperGrok subscription. It offers in-app chatbot experiences and voice-mode previews, with enterprise API access documented at docs.x.ai. DeepSearch, and the upcoming DeeperSearch, let professionals query real-time social sentiment, legal filings, or financial data without leaving X. However, content-moderation gaps have sparked controversy when Grok 3 produced misinformation or offensive content, prompting xAI to hint at forthcoming guardrail layers.

o3: Multi-platform and developer-centric deployment

OpenAI has deployed o3 across ChatGPT (Plus, Pro, Enterprise) and its API, with further integrations in Microsoft Azure and GitHub Copilot. Through the API, developers can set o3’s reasoning effort per request, choosing how much internal reasoning each use case warrants. o3-mini’s free availability to all ChatGPT users (with rate limits) democratizes access, while paid subscribers can also select the higher-effort o3-mini-high. File and image uploads further extend o3’s applicability to document analysis and visual question answering.

How do pricing models compare?

xAI’s model-centric pricing

Grok 3’s enterprise API launched in April 2025 at $3 per million input tokens and $15 per million output tokens, with discounts for volume commitments. Grok 3 mini is offered at substantially lower rates, catering to lower-budget projects. X Premium+ users pay $40/month for priority access, while SuperGrok subscribers pay an undisclosed premium for “unlimited” Grok queries.

OpenAI’s tiered access strategy

OpenAI bundles o3-mini into ChatGPT. Free users get rate-limited access, while Plus ($20/month) and Pro ($200/month) subscribers can also choose the higher-effort o3-mini-high, with Pro offering the most generous limits. At launch, o3 API calls cost $10 per million input tokens and $40 per million output tokens. That is below o1’s $15 and $60 rates but well above Grok 3’s $3 and $15, reflecting OpenAI’s attempt to balance cost and capability. This tiered approach simplifies budgeting for startups and researchers. The trade-off is that in ChatGPT the available reasoning level is tied to the subscription tier rather than chosen per request, as it can be through the API.

Grok 3 vs. o3: Which one should you choose?

Performance comparison: Speed, scalability, and reliability

| Performance metric | o3 | Grok 3 |
|---|---|---|
| Response time (average, under load) | 120 ms | 90 ms |
| Scalability | Horizontal scaling with Kubernetes | Vertical scaling with optimized caching |
| Uptime reliability (SLA) | 99.95% | 99.9% |
| Throughput (requests/sec) | 5,000+ | 4,500+ |
| Data processing latency | 150 ms (batch mode) | 80 ms (real-time streaming) |

Selecting between Grok 3 and o3 depends on specific requirements, strategic priorities, and risk tolerance.

Use-case driven recommendations

- For deep research and multimodal creativity: Grok 3’s integrated image and deep-search capabilities make it ideal for content agencies, design studios, and academic institutions seeking an all-in-one sketchpad for ideation and prototyping.

- For enterprise workflows and toolchains: o3’s agentic tool integration and immediate API access suit software teams, financial analysts, and scientific labs that require modular, reliable augmentation within existing pipelines.

Use Grok 3 and o3 in CometAPI

CometAPI offers prices well below the official rates to help you integrate the [o3 API](https://www.cometapi.com/o1-preview-api/) (model names: o3, o3-2025-04-16) and the Grok 3 API (model names: grok-3, grok-3-latest). New accounts receive $1 in credit after registering and logging in, so you can start experimenting with CometAPI right away.

To begin, explore each model’s capabilities in the Playground and consult the API guide for detailed instructions. Note that some developers may need to verify their organization before using a model.
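The practical appeal of a single gateway is that switching between o3 and Grok 3 comes down to changing the `model` string. The sketch below only builds the HTTP requests with Python's standard library and sends nothing. It assumes CometAPI exposes an OpenAI-compatible chat-completions endpoint with Bearer-token auth. The URL and the `COMETAPI_KEY` environment-variable name are hypothetical placeholders, so check the API guide for the real values.

```python
import json
import os
import urllib.request

# ASSUMPTION: OpenAI-compatible endpoint; confirm the real URL in CometAPI's API guide.
COMETAPI_URL = "https://api.cometapi.com/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the given model name."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        COMETAPI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


key = os.environ.get("COMETAPI_KEY", "sk-placeholder")  # hypothetical env var name
for model in ("o3-2025-04-16", "grok-3"):
    req = build_request(model, "Compare quicksort and mergesort.", key)
    print(req.get_method(), req.full_url, json.loads(req.data)["model"])
    # To actually send it: json.load(urllib.request.urlopen(req))
```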

Pricing in CometAPI is structured as follows:

| Model (CometAPI name) | Input price | Output price |
|---|---|---|
| o3 / o3-2025-04-16 | $8 / M tokens | $32 / M tokens |
| grok-3 / grok-3-latest | $1.6 / M tokens | $6.4 / M tokens |
| grok-3-fast | $4 / M tokens | $20 / M tokens |
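To compare these per-million-token rates on a concrete workload, multiply each token count by its rate and divide by one million. The prices below are copied from the CometAPI table. The 50M-input/10M-output monthly workload is a hypothetical example. Because o3 is a reasoning model, its hidden reasoning tokens are billed as output tokens, so real o3 output counts can run well above the visible answer length.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    input_per_m: float   # USD per 1M input tokens
    output_per_m: float  # USD per 1M output tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """USD cost of one workload at these per-million-token rates."""
        return (input_tokens * self.input_per_m + output_tokens * self.output_per_m) / 1_000_000


# CometAPI prices from the table above.
COMETAPI_PRICES = {
    "o3-2025-04-16": TokenPrice(8.0, 32.0),
    "grok-3": TokenPrice(1.6, 6.4),
    "grok-3-fast": TokenPrice(4.0, 20.0),
}

# Hypothetical monthly workload: 50M input tokens, 10M output tokens.
for model, price in COMETAPI_PRICES.items():
    print(f"{model}: ${price.cost(50_000_000, 10_000_000):,.2f}")
```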

Conclusion

Grok 3 and o3 epitomize the current frontier of AI reasoning. Grok 3 stakes its claim on raw compute, open integration with social media, and unfiltered outputs, appealing to power users and enterprises seeking real-time insights. o3, on the other hand, embodies a measured approach to integrated chain-of-thought, broad platform support, and tiered pricing that fosters widespread adoption. Ultimately, the choice hinges on project requirements: Grok 3 excels in dynamic, data-rich environments, while o3 offers consistency, safety, and ecosystem maturity. As both xAI and OpenAI refine their models, users can expect continuous advances in accuracy, efficiency, and multimodality, shaping the next generation of AI assistants.