Launched by Elon Musk’s xAI on July 9, 2025, Grok 4 is the company’s latest large language model (LLM). In a live-streamed event that drew over 1.5 million viewers, Musk touted Grok 4 as “the world’s most powerful AI assistant” and “smarter than most PhD students” across academic disciplines. Below is an overview of Grok 4’s features, pricing, and access options.

What is Grok 4?

Grok 4 is the latest iteration of xAI’s generative AI chatbot, unveiled on July 9, 2025, by Elon Musk during a high‑profile livestream on the X platform. As the successor to Grok‑3, it represents xAI’s flagship model, designed to rival leading conversational AIs such as OpenAI’s ChatGPT and Google DeepMind’s Gemini. Built upon an advanced large language model architecture, Grok 4 integrates text, image, and voice modalities to deliver a richer, more nuanced user experience.

Beyond a single-model design, xAI introduced Grok 4 Heavy, a multi-agent variant that orchestrates multiple specialized models (“agents”) to collaborate on complex tasks. This architecture is intended to yield higher-quality outputs by decomposing problems into subtasks handled by expert agents, then integrating their insights into a cohesive response. Early users of Grok 4 Heavy will receive priority access and advanced tooling, enabling businesses to leverage multi-agent workflows for everything from code generation to strategic decision support.
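To make the decompose-then-integrate idea concrete, here is a toy sketch of a fan-out/merge loop. It is not xAI's implementation: the agent functions, the `decompose` helper, and the merge step are all hypothetical stand-ins, and a real system would call models where these stubs return strings.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical stand-ins for specialized agents; a real system would call models here.
def math_agent(task: str) -> str:
    return f"[math] analyzed: {task}"


def code_agent(task: str) -> str:
    return f"[code] drafted: {task}"


AGENTS: Dict[str, Callable[[str], str]] = {"math": math_agent, "code": code_agent}


def decompose(problem: str) -> Dict[str, str]:
    """Toy decomposition: give every agent its own subtask derived from the problem."""
    return {name: f"{name} aspects of '{problem}'" for name in AGENTS}


def solve(problem: str) -> str:
    """Fan subtasks out to the agents in parallel, then merge their answers."""
    subtasks = decompose(problem)
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(AGENTS[name], task) for name, task in subtasks.items()}
        results = {name: fut.result() for name, fut in futures.items()}
    # Integration step: join in a stable order so the output is deterministic.
    return "\n".join(results[name] for name in sorted(results))


print(solve("estimate server load"))
```

The point of the sketch is the shape of the workflow (split, run in parallel, combine), not the quality of any single step.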

What features does Grok 4 offer?

Text comprehension and generation

Grok 4 excels at parsing and generating coherent, context‑aware text. Whether drafting technical documentation, summarizing lengthy reports, or engaging in extended dialogue, the model maintains contextual consistency over longer exchanges than its predecessors. Musk highlighted its fluency by demonstrating complex Q&A scenarios live, where the AI provided detailed, structured answers with minimal prompting.

Image analysis

While earlier Grok versions lacked robust multimodal support, Grok 4 introduces preliminary image understanding capabilities. In select demonstrations, users provided screenshots or photos, and the model described the visual content, extracted text, and answered questions about the image. However, Musk acknowledged that image processing remains an area for improvement, pledging further enhancements in upcoming updates.

Coding and scientific analysis

xAI showcased Grok 4’s proficiency in code generation and debugging, as well as its ability to perform scientific calculations and data analysis. In one live demo, it debugged a Python script, identified logical errors, and suggested optimizations, tasks that previously required specialized coding assistants. Moreover, the model can interpret scientific datasets and propose hypotheses, positioning it as a potential collaborator for research institutions.

How much does Grok 4 cost?

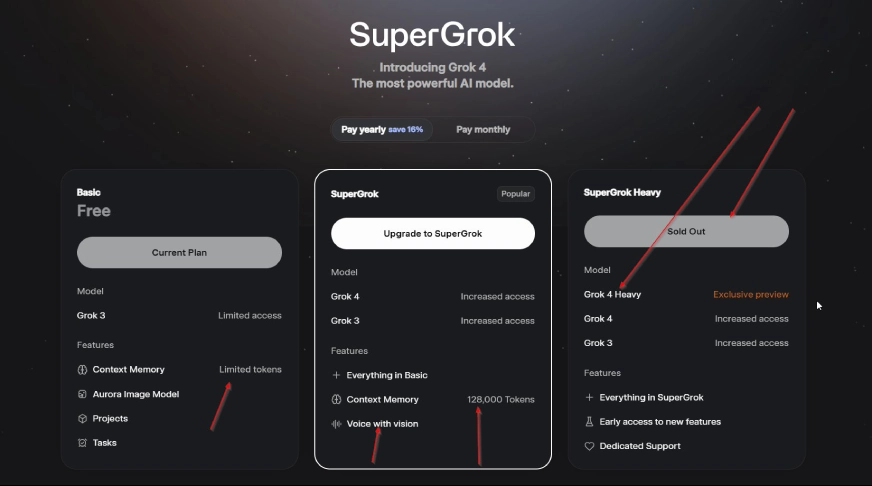

xAI offers multiple pricing options to suit casual users, developers, and enterprise clients. From a $30‑per‑month personal plan to token‑based API billing, Grok 4’s pricing is positioned at the premium end of the market while staying competitive with rival models.

Subscription tiers

- Grok 4 Standard: $30 per month for individual users, granting full access to text, image, and voice features via the web/app (up to a capped usage limit).

- SuperGrok Heavy: $300 per month, unlocking the “Heavy” multi‑agent version (Grok 4 Heavy) which deploys several collaborating sub‑models for faster, higher‑accuracy responses on complex tasks.

API pricing

For developers integrating Grok 4 into products or services, xAI uses a token‑based billing scheme:

- Input tokens: $3.00 per 1 million tokens

- Cached input tokens: $0.75 per 1 million tokens (re‑use of recent prompts)

- Output tokens: $15.00 per 1 million tokens

This tiered system allows precise cost management, especially for enterprises processing large document sets or streaming data.
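The per-token rates above translate into a simple cost estimate. The sketch below uses only the three prices listed in this section; the `Usage` class and `estimate_cost_usd` function are illustrative names, and actual invoices may differ (for example, through rounding or pricing changes).

```python
from dataclasses import dataclass

# Prices in USD per 1 million tokens, as listed above.
INPUT_PER_M = 3.00
CACHED_INPUT_PER_M = 0.75
OUTPUT_PER_M = 15.00


@dataclass
class Usage:
    input_tokens: int          # fresh (uncached) prompt tokens
    cached_input_tokens: int   # prompt tokens served from the cache
    output_tokens: int         # generated tokens


def estimate_cost_usd(usage: Usage) -> float:
    """Return the estimated charge in USD for one batch of usage."""
    return (
        usage.input_tokens * INPUT_PER_M
        + usage.cached_input_tokens * CACHED_INPUT_PER_M
        + usage.output_tokens * OUTPUT_PER_M
    ) / 1_000_000


# Example: 2M fresh input tokens, 1M cached input tokens, 500k output tokens.
example = Usage(input_tokens=2_000_000, cached_input_tokens=1_000_000, output_tokens=500_000)
print(f"${estimate_cost_usd(example):.2f}")  # prints $14.25
```

In this example, output accounts for $7.50 of the $14.25 total even though it is the smallest token count, so trimming response length is usually the first lever for controlling spend.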

How can I access Grok 4?

Grok 4 is accessible through multiple channels, from the consumer‑facing X platform to a full‑featured developer API.

Via X platform

- Premium+ Subscription: Existing X Premium+ members can opt into Grok 4 Standard for $30/month. Once activated, the Grok interface appears directly in the sidebar of the X mobile and web apps.

- SuperGrok Upgrade: Users seeking the highest performance can upgrade to SuperGrok Heavy via the X subscription page. Upon payment, Grok 4 Heavy becomes the default assistant.

Grok website and standalone apps

- Web portal: Visit grok.com to sign up, select a plan, and launch Grok 4 in your browser.

- iOS & Android: Download the standalone Grok app from the Apple App Store or Google Play, log in with your X credentials, and choose your subscription level.

API endpoints

For developers and enterprises, xAI provides RESTful API endpoints for programmatic access to Grok 4. Early adopters have reported integrating Grok 4 into customer‑support workflows, automated summarization tools, and custom research pipelines. API keys can be obtained via the xAI developer portal, with usage monitored and billed monthly based on token consumption.
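As a rough illustration of programmatic access, the sketch below builds a chat-completion request using only Python's standard library. The endpoint URL, the `grok-4` model identifier, the OpenAI-style payload shape, and the `XAI_API_KEY` environment variable are assumptions here, not details confirmed by this article; check the xAI developer documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumptions: endpoint URL and model name; confirm both in the xAI developer docs.
API_URL = "https://api.x.ai/v1/chat/completions"
MODEL = "grok-4"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion POST request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(prompt, api_key), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Only calls the network when a key is present in the environment.
    key = os.environ.get("XAI_API_KEY")
    if key:
        print(ask("Summarize Grok 4's pricing in one sentence.", key))
```

Keeping request construction separate from sending makes the payload easy to inspect and test without spending tokens.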

CometAPI also provides access to the Grok 4 API and says its prices are lower than the official ones. While official channels may impose usage restrictions at launch, CometAPI says it offers immediate and unrestricted access to the model. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing the API, make sure you have logged in to CometAPI and obtained an API key.

How do Grok 4’s performance benchmarks stand up?

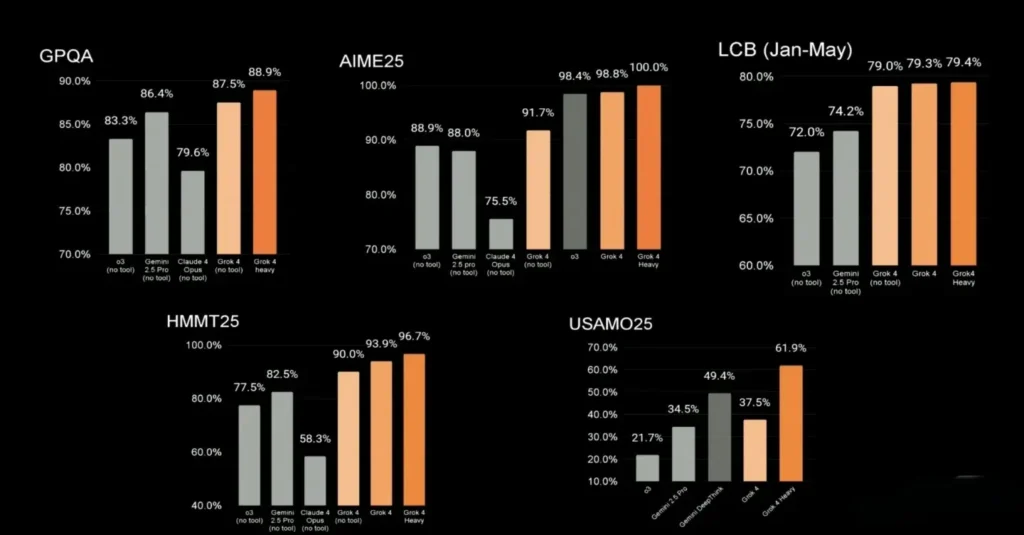

Despite controversies, Grok 4 has delivered on Musk’s promise of graduate-level reasoning. In internal tests shared during the launch:

- Academic Benchmarks: Solved 25 percent of MMLU (massive multitask language understanding) tasks, outperforming GPT‑4 and Google Gemini on STEM categories.

- Coding Challenges: Achieved a top-5 ranking on the HumanEval benchmark for code synthesis, demonstrating particular strength in Python and JavaScript tasks.

- Multimodal Reasoning: Scored above 80 percent on tests requiring image–text alignment, such as VQA (Visual Question Answering).

While these figures are self-reported by xAI, they align closely with independent analyses from third-party AI research groups. The upcoming GPT‑5 will set a high bar for comparison, but Grok 4’s public availability on X and its transparent code snippets on GitHub have endeared it to developers seeking openness alongside performance.

Conclusion

There you have it—Grok 4 in all its glory: a powerful, multi-talented AI assistant with features that span reasoning, coding, vision, and voice. Whether you’re a developer, researcher, or curious enthusiast, there’s a subscription tier and access method to suit your needs. Yes, it has its quirks—persistent bias challenges and common-sense gaps—but the pace of improvement is nothing short of extraordinary.

So, are you ready to give Grok 4 a spin? Head over to X to subscribe, explore the API in CometAPI, or dive into the GitHub demos. And if you do, I can’t wait to hear about the amazing things you build with it. After all, AI is a collaborative journey, and with Grok 4, we’re just getting started.

Getting Started

Tired of juggling API keys, endpoints, and integrations for every new AI model? Meet CometAPI, the unified REST gateway that brings over 500 top-tier AI models under one roof, including GPT, Claude, Gemini, Midjourney, Suno, and more. Sign up once, grab your API key, and point all your requests to a single base URL. No more hopping between vendors or rewriting code.

CometAPI isn’t just a bridge—it’s a complete developer toolkit. With visual API design, mock testing, and CI/CD support, you can build, test, and deploy robust AI-powered solutions faster and more reliably. Plus, enjoy serverless speed, centralized usage quotas, and transparent billing in a single dashboard.

Whether you’re prototyping a chatbot, generating images, processing audio, or powering a code assistant, CometAPI gives you flexibility, scale, and control. Bring your next AI project to life with less overhead—and more impact.

Ready to simplify your AI stack? Sign up today and start experimenting with the best models—all through one powerful API.

FAQs about Grok 4

What steps has xAI taken to improve safety?

- Revised System Prompts: xAI rewrote base prompts to enforce non-hate-speech policies, hard-coding filters to block extremist language even under user instruction.

- Human-in-the-Loop Review: A team of ethics auditors now reviews flagged outputs daily, refining AI tutor guidelines to prevent future slip-ups.

- Transparency Reports: xAI commits to monthly transparency updates detailing moderation challenges and resolution statistics—mirroring approaches by OpenAI and Google.

- Bias Audits: Independent third-party audits are underway to assess potential biases in Grok’s training data and outputs, with results to be shared publicly later this year.

What is the onboarding process?

- Account Creation: New users register on grok.com or via the app, linking to an X account for seamless identity verification.

- Subscription Selection: Upon registration, users choose between the Grok 4 Standard and SuperGrok Heavy tiers, entering payment details via Stripe.

- API Key Generation: Developers access the developer dashboard to generate a secure API key. Rate limits and usage quotas are displayed in real time, helping manage costs.

- SDK Installation: A simple pip or npm install pulls in the Grok SDK, enabling “hello world” queries within minutes. Sample code snippets and starter templates accelerate time to first response.

Can developers access Grok 4 through SDKs and a CLI?

Yes. xAI provides open‑source SDKs on GitHub, along with a command‑line interface that allows quick experimentation. Features include streaming token output, multi‑model routing (text vs. code), and built‑in retry logic. Sample notebooks showcase integrations with common data‑science workflows, such as pandas and Jupyter environments.
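For readers unfamiliar with retry logic, the sketch below shows the general pattern of retrying a failed call with exponential backoff. It is a generic illustration rather than the SDK's actual behavior: the `with_retries` helper, its parameters, and the choice to retry only on `ConnectionError` are all assumptions made for this example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on ConnectionError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))  # waits 0.5s, 1s, 2s, ...
    raise ValueError("max_attempts must be at least 1")
```

Passing `sleep` as a parameter lets tests substitute a recorder for the real delay, so the backoff schedule can be checked without waiting.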

Does xAI have an early access or beta program?

Beyond public availability, xAI has invited select partners to a beta program that grants early access to forthcoming multimodal and video capabilities. Participants receive direct engineering support and feedback channels, helping shape feature prioritization ahead of wider release.