Luma AI has become one of the most talked-about tools in consumer and prosumer content creation: an app and cloud service that converts smartphone photos and video into photoreal 3D NeRFs, and — via its Dream Machine / Ray2 models — generates images and short videos from text or image prompts. But speed is one of the first practical questions creators ask: how long will a capture, render, or video generation actually take?

How long does Luma AI take to generate a Dream Machine (text → video) clip?

Official baseline times

Luma’s product pages and learning hub give fast baseline timings for their image and short-video generation pipelines: image batches are measured in tens of seconds and short video jobs in seconds to a few minutes under normal conditions for paid users and internal benchmarks. Those official metrics reflect optimized model runs on Luma’s infrastructure (Ray2 / Dream Machine stack) and are the best-case numbers for small, short clips.

Real-world ranges you should expect

Edge cases / free tier or peak load: free users or times of heavy demand have produced waits of hours or jobs “stuck in queue” until capacity freed up; community threads document multi-hour waits during peak periods or outages. If low latency is critical, factor in this variability and consider paid/priority options.

Small social clips (5–15 sec): in many cases, the generation step alone can complete in under a minute to a few minutes for paid users during normal load — but total wall-clock time can be longer when you include queuing, preprocessing, and streaming/export steps.

Higher-detail or longer clips (20–60 sec): these can take several minutes to tens of minutes, particularly if you ask for higher resolution, complex camera moves, or iterative refinement. Third-party reviews and user accounts report typical times in the 5–30 minute band for more complex short videos.

How long does Luma AI take to produce a 3D capture (NeRF / Genie / Phone capture)?

Typical 3D capture workflows and their time profiles

Luma’s 3D capture tools (the mobile capture app + Genie-like features) transform a set of photos or a recorded video into a NeRF-like 3D model or textured mesh. Unlike short Dream Machine clips, 3D reconstruction is heavier: it must ingest many frames, estimate camera poses, optimize volumetric geometry, and synthesize textures. Public tutorials and hands-on guides report real-world processing times from several minutes up to multiple hours, depending on capture length and quality. A commonly cited tutorial example showed 30 minutes to an hour for a moderate capture; other capture types (long walkthroughs, high-resolution frames) can take longer.

Representative ranges

- Quick object/product scans (20–80 photos, short capture): a few minutes to ~30 minutes.

- Room-scale or walk-through captures (hundreds to thousands of frames): 30 minutes to several hours, depending on input size and final export fidelity.

- High-fidelity exports for game engines (meshes, high-res textures): add extra time for mesh generation, retopology, and baking — this can push jobs into the hours.

Why 3D takes longer than short videos

3D reconstruction is iterative and optimization-heavy: the model refines volumetric fields and texture predictions across many frames, which is computationally intensive. Luma’s backend parallelizes much of this work, but the scale of per-job computation remains larger than a single short video generation.

What are the main factors that affect Luma AI’s processing time?

Model & pipeline choice (Ray2, Photon, Genie, Modify Video)

Different Luma models and features are engineered for different trade-offs: Ray2 and Dream Machine prioritize photoreal video generation with low-latency interactive feedback, while Photon and Genie are optimized for image enhancement or 3D reconstruction and may be heavier by design. Choosing a model with higher fidelity settings will increase compute time. Official docs and the API describe multiple model endpoints and quality flags that affect runtime.

Input size and complexity

- Number of frames / photos: more input = more optimization steps.

- Resolution: higher output resolutions and higher-resolution inputs increase processing time.

- Length of requested clip: longer clips require more rendering and motion coherence checks.

Account tier, queuing, and priority

Paid subscriptions and enterprise/API customers often receive priority or higher-rate limits. Free-tier users will commonly see longer queue times when the system is under strain. Community reports back this up: paid plans generally reduce waiting and improve throughput.

System load & time of day

Real-world user threads show that generation times can spike during peak hours or when major feature launches trigger surges. Luma’s team continuously updates infrastructure (see changelogs) to address capacity, but transient delays still happen.

Network/upload time and client device

For capture workflows, upload speed and device performance matter: large multi-gigabyte capture uploads increase wall-clock times before processing even begins. Luma’s docs note maximum file sizes and recommend capture best practices to minimize unnecessary data transfer.

How can I estimate job time in advance and reduce wait?

Quick estimation checklist

- Classify your job: image, short video (<15s), longer video (>15s), or 3D capture.

- Count inputs: number of photos / video length (seconds) / capture filesize.

- Decide quality: low, standard, or high fidelity — higher fidelity = longer compute.

- Check account tier: free vs paid vs enterprise; factor in probable queueing.

- Run a short test: create a 5–10s test job to gather a real-world baseline.

Practical tips to speed up throughput

- Use recommended capture patterns (smooth camera motion, consistent lighting) so the reconstruction converges faster. Luma’s learning hub and mobile app pages provide capture best practices.

- Reduce input size where acceptable: crop, downsample, or trim footage before upload to reduce processing time and cost.

- Choose lower quality presets for drafts, then finalize at high quality only once you’re happy with composition.

- Schedule heavy runs off-peak if you can; community reports indicate queues drop outside prime hours.

- Consider API / enterprise options if you need scale and predictable SLA; Luma’s API and changelog show ongoing investments in performance and new endpoints like Modify Video to streamline workflows.

How do Luma’s timing numbers compare to other tools?

Comparing generative image/video or NeRF services is complex because each provider optimizes for different tradeoffs (quality vs speed vs cost). For image and very short video generation, Luma’s Dream Machine — especially with Ray2 Flash — competes at sub-minute interactive latency, which is in line with leading consumer-facing generative services. For full-scene NeRF capture and high-fidelity 3D model creation, cloud compute needs and queueing push times higher than quick image generators: expect greater variance and plan accordingly. Partner documentation and third-party writeups commonly indicate minutes for short, simple renders and hours (or unpredictably longer) for complex 3D pipelines.

Final verdict — how long will Luma take for my job?

There’s no single number that fits every user or every job. Use these pragmatic anchors to estimate:

- Image generation (Dream Machine): ~20–30 seconds per small batch under normal load.

- Short video generation (Dream Machine / Ray2): tens of seconds to a few minutes for short clips; Ray2 Flash can be significantly faster on supported flows.

- 3D capture → NeRF: highly variable. Best case: minutes for a small object and light compute; worst case (reported): many hours to days during heavy demand or for very large captures. If you need firm timelines, purchase priority/enterprise plansor run pre-production pilot tests and build planned buffer time into your schedule.

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

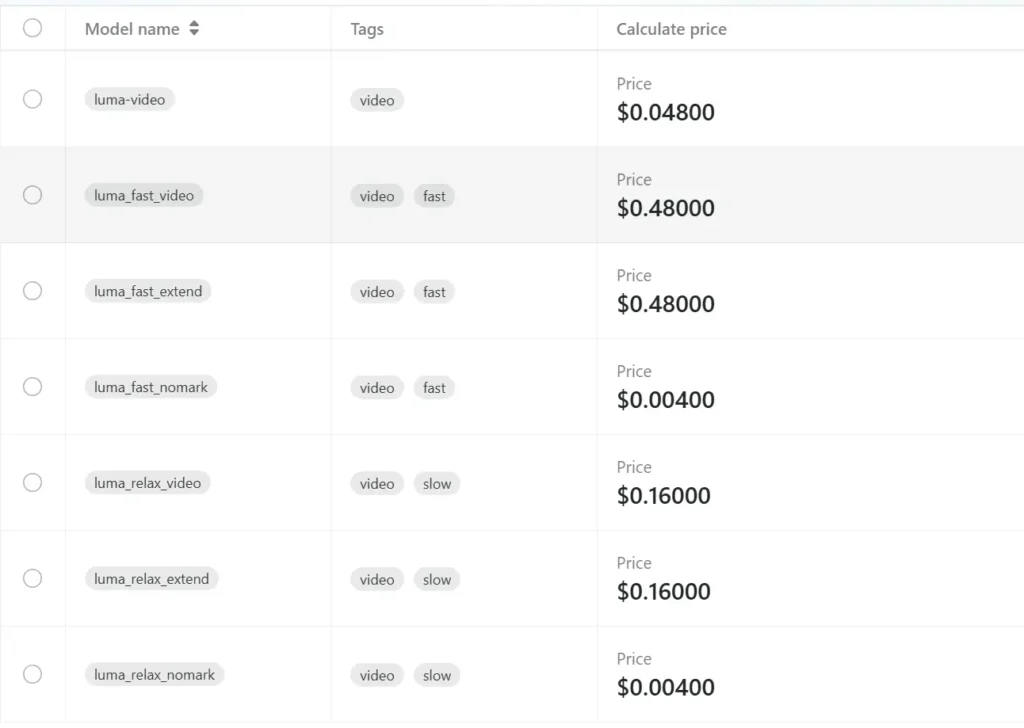

Developers can access Luma API through CometAPI, the latest models version listed are as of the article’s publication date. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing, please make sure you have logged in to CometAPI and obtained the API key. CometAPI offer a price far lower than the official price to help you integrate: