The GLM-4.5 series, developed by Zhipu AI (Z.ai), represents a significant advancement in open-source large language models (LLMs). Designed to unify reasoning, coding, and agentic capabilities, GLM-4.5 offers robust performance across various applications. Whether you’re a developer, researcher, or enthusiast, this guide provides detailed information on how to access and utilize the GLM-4.5 series effectively.

What Is the GLM-4.5 Series and Why Is It Significant?

GLM-4.5 is a hybrid reasoning model that combines two distinct modes: a “thinking mode” for complex reasoning and tool usage, and a “non-thinking mode” for immediate responses. This dual-mode approach allows the model to handle a wide array of tasks efficiently. The series includes two main variants:

- GLM-4.5: Featuring 355 billion total parameters with 32 billion active parameters, this model is designed for large-scale deployment across reasoning, generation, and multi-agent tasks.

- GLM-4.5-Air: A lightweight version with 106 billion total parameters and 12 billion active parameters, optimized for on-device and smaller-scale cloud inference without sacrificing core capabilities.

Both models support the hybrid reasoning modes described above. They are open-source and released under the MIT license, which permits commercial use and secondary development.

Architecture and Design Principles

At its core, GLM-4.5 uses a Mixture-of-Experts (MoE) architecture that dynamically routes each token through a small subset of specialized expert sub-networks, which improves parameter efficiency and scaling behavior. Because only a fraction of the parameters is activated per forward pass (32 billion of 355 billion for GLM-4.5), operational costs fall while the model maintains state-of-the-art performance on reasoning and coding tasks.
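To make the routing idea concrete, here is a toy sketch of top-k expert gating in plain Python. The number of experts, the value of `top_k`, and the softmax-then-renormalize gating rule are illustrative assumptions, not GLM-4.5's published configuration.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, top_k=2):
    """Pick the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    # Indices of the top_k highest-probability experts.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}

def moe_forward(x, experts, router_logits, top_k=2):
    """Weighted sum of the chosen experts' outputs; unchosen experts never run."""
    gates = route_token(router_logits, top_k)
    return sum(weight * experts[i](x) for i, weight in gates.items())

# Toy setup (hypothetical): 8 "experts", each scaling its input by a different factor.
experts = [lambda x, k=k: x * (k + 1) for k in range(8)]
random.seed(0)
logits = [random.gauss(0, 1) for _ in experts]

gates = route_token(logits, top_k=2)
output = moe_forward(1.0, experts, logits, top_k=2)
print(f"active experts: {sorted(gates)} of {len(experts)}")
```

Only two of the eight toy experts do any work for this token. The same principle is why roughly 9% of GLM-4.5's parameters (32B of 355B) are active per forward pass.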

Key Capabilities

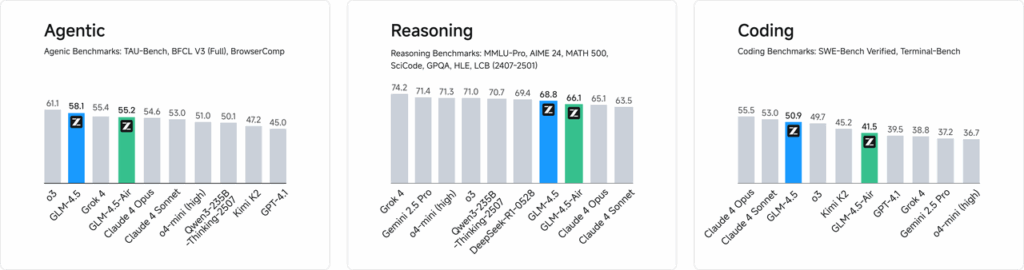

- Hybrid Reasoning and Coding: GLM-4.5 demonstrates state-of-the-art performance on both natural language understanding benchmarks and code generation tests, often rivaling proprietary models in accuracy and fluency.

- Agentic Integration: Built-in tool-calling interfaces allow GLM-4.5 to orchestrate multi-step workflows—such as database queries, API orchestration, and interactive front-end generation—within a single session.

- Multi-Modal Artifacts: From HTML/CSS mini-apps to Python-based simulations and interactive SVGs, GLM-4.5 can output fully functional artifacts, enhancing user engagement and developer productivity.

Why is GLM-4.5 a Game-Changer?

GLM-4.5 has been lauded not only for its raw performance but also for redefining the value proposition of open-source LLMs in enterprise and research settings.

Performance Benchmarks

In independent evaluations across 52 programming tasks spanning web development, data analysis, and automation, GLM-4.5 consistently outperformed other leading open-source models in tool-calling reliability and overall task completion. In comparative tests against Claude Code, Kimi-K2, and Qwen3-Coder, GLM-4.5 achieved best-in-class scores on benchmarks such as the “SWE-bench Verified” leaderboard.

Cost Efficiency

Beyond accuracy, GLM-4.5’s MoE design drives down inference costs dramatically. Public pricing for API calls starts as low as RMB 0.8 per million input tokens and RMB 2 per million output tokens, approximately one-third the cost of comparable proprietary offerings. Coupled with peak generation speeds of 100 tokens/sec, the model supports high-throughput, low-latency deployments without prohibitive expenses.
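As a rough worked example of what these figures imply, the sketch below estimates spend and generation time for a workload. The prices and the 100 tokens/sec peak speed come from the paragraph above; the workload size is a hypothetical assumption, and real bills depend on the provider's current price list.

```python
# Prices quoted in the text, in RMB per million tokens.
INPUT_RMB_PER_M = 0.8
OUTPUT_RMB_PER_M = 2.0

def estimate_cost_rmb(input_tokens, output_tokens):
    """Total cost in RMB for the given token counts."""
    return (input_tokens * INPUT_RMB_PER_M + output_tokens * OUTPUT_RMB_PER_M) / 1_000_000

def generation_seconds(output_tokens, tokens_per_sec=100):
    """Time to generate a response at the quoted peak speed."""
    return output_tokens / tokens_per_sec

# Hypothetical workload: 10,000 requests, each with 1,500 input and 500 output tokens.
requests = 10_000
cost = estimate_cost_rmb(requests * 1_500, requests * 500)
print(f"estimated cost: RMB {cost:.2f}")
print(f"time per response at peak speed: {generation_seconds(500):.1f} s")
```

For this workload the input side costs about RMB 12 and the output side about RMB 10, so output tokens dominate the bill faster than their count suggests.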

How Can You Access GLM-4.5?

1. Direct Access via Z.ai Platform

The most straightforward way to interact with GLM-4.5 is through the Z.ai platform. At chat.z.ai, users can select either the GLM-4.5 or GLM-4.5-Air model from the top-left corner and start chatting immediately. The interface requires no setup or integration work, making it ideal for quick testing, prototyping, and demonstrations.

2. API Access for Developers

For developers seeking to integrate GLM-4.5 into applications, the Z.ai API platform provides comprehensive support. The API offers OpenAI-compatible interfaces for both GLM-4.5 and GLM-4.5-Air models, facilitating seamless integration into existing workflows. Detailed documentation and integration guidelines are available in the Z.ai API Documentation.
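Since the interface is OpenAI-compatible, a request is an ordinary chat completions call. The sketch below builds one with the standard library only. The base URL is a placeholder, the `/chat/completions` path follows the OpenAI convention, and the model ID is an assumption; take the real values from the provider's documentation.

```python
import json
import urllib.request

def build_chat_request(base_url, api_key, model, messages, **params):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {"model": model, "messages": messages, **params}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def send(request, timeout=60):
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Placeholder values: substitute the base URL and key from the provider's docs.
request = build_chat_request(
    base_url="https://api.example.invalid/v1",
    api_key="YOUR_API_KEY",
    model="glm-4.5-air",
    messages=[{"role": "user", "content": "Summarize MoE routing in one sentence."}],
    temperature=0.2,
)
print(request.full_url)
```

Calling `send(request)` performs the network call. Keeping construction and sending separate makes the payload easy to inspect and unit-test offline.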

3. Open-Source Deployment

For those interested in local deployment, GLM-4.5 models are available on platforms like Hugging Face and ModelScope. These models are released under the MIT open-source license, allowing for commercial use and secondary development. They can be integrated with mainstream inference frameworks such as vLLM and SGLang.

4. Integration with CometAPI

CometAPI offers streamlined access to GLM-4.5 models through its unified API platform and Dashboard. This integration simplifies authentication, rate limiting, and error handling, making it an excellent choice for developers seeking a hassle-free setup. Additionally, CometAPI’s standardized API format enables easy model switching and A/B testing between GLM-4.5 and other available models.
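When every model sits behind the same request format, A/B testing reduces to choosing a model ID per user. The following is one possible sketch of deterministic assignment. The function name, the 50/50 split, and the model IDs are assumptions for illustration, not part of any CometAPI SDK.

```python
import hashlib

def assign_model(user_id, variants=("glm-4.5", "glm-4.5-air"), treatment_share=0.5):
    """Deterministically map a user to one of two model IDs."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and scale it to [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return variants[1] if bucket < treatment_share else variants[0]

# Hypothetical population of 1,000 users; each always lands on the same model.
counts = {}
for n in range(1000):
    model = assign_model(f"user-{n}")
    counts[model] = counts.get(model, 0) + 1
print(counts)
```

Hashing the user ID rather than drawing a random number keeps each user on one variant across sessions, which keeps the comparison clean.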

How Can Developers Access the GLM-4.5 Series?

There are multiple channels for obtaining and deploying GLM-4.5, from direct model downloads to managed APIs.

Via Hugging Face and ModelScope

Both Hugging Face and ModelScope host the full GLM-4.5 series under the zai-org namespace. After agreeing to the MIT license, developers can:

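- Clone the Repository:

```bash
# The weight files are stored with Git LFS, so enable it before cloning
git lfs install
git clone https://huggingface.co/zai-org/GLM-4.5
```

- Install Dependencies:

```bash
pip install transformers accelerate torch
```

- Load the Model:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Fetch the tokenizer and weights from the Hugging Face Hub (cached after first use)
tokenizer = AutoTokenizer.from_pretrained("zai-org/GLM-4.5")
# device_map="auto" relies on accelerate to place weights on the available devices
model = AutoModelForCausalLM.from_pretrained("zai-org/GLM-4.5", device_map="auto")
```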

Through CometAPI

CometAPI provides a serverless API for GLM-4.5 and GLM-4.5-Air at pay-per-token rates. By configuring its OpenAI-compatible endpoint, you can call GLM-4.5 through OpenAI’s Python client with minimal adjustments to existing codebases. In addition to GLM-4.5 and GLM-4.5-Air, CometAPI offers the other official models in the series:

| Model name | Description | Input tokens | Output tokens |
| --- | --- | --- | --- |
| glm-4.5 | Our most powerful reasoning model, with 355 billion parameters | $0.48 | $1.92 |
| glm-4.5-air | Cost-effective, lightweight, strong performance | $0.16 | $1.07 |
| glm-4.5-x | High performance, strong reasoning, ultra-fast response | $1.60 | $6.40 |
| glm-4.5-airx | Lightweight, strong performance, ultra-fast response | $0.02 | $0.06 |
| glm-4.5-flash | Strong performance, excellent for reasoning, coding, and agents | $3.20 | $12.80 |

Python and REST API Integration

For bespoke deployments, organizations can host GLM-4.5 on dedicated GPU clusters using Docker or Kubernetes. A typical RESTful setup involves:

Launching Inference Server:

```bash
docker run -p 8000:8000 zai-org/glm-4.5:latest
```

Sending Requests:

```bash
curl -X POST http://localhost:8000/generate \
  -H "Content-Type: application/json" \
  -d '{"prompt": "Translate to French: Hello.", "max_tokens": 50}'
```

Responses conform to the JSON formats used by popular LLM APIs.
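The same request can be issued from Python. This sketch mirrors the curl call using only the standard library. It assumes the server from the previous step is listening on `localhost:8000` and exposes `/generate` as shown; the function names are illustrative.

```python
import json
import urllib.request

def build_generate_request(prompt, max_tokens=50, host="http://localhost:8000"):
    """Build the POST request that the curl example sends."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"{host}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt, max_tokens=50, timeout=120):
    """Send the request and return the decoded JSON response as a dict."""
    request = build_generate_request(prompt, max_tokens)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)

# Inspect the request without touching the network.
req = build_generate_request("Translate to French: Hello.")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Call `generate("Translate to French: Hello.")` once the inference server is running. The response schema depends on the server, so check the returned dict before indexing into it.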

What Are Best Practices for Integrating GLM-4.5 in Applications?

To maximize ROI and ensure robust performance, teams should consider the following:

API Optimization and Rate Limits

- Batching Requests: Group similar prompts to reduce overhead and leverage GPU throughput.

- Caching Common Queries: Store frequent completions locally to avoid redundant inference calls.

- Adaptive Sampling: Dynamically adjust `temperature` and `top_p` based on query complexity to balance creativity and determinism.
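Two of these practices, caching and adaptive sampling, can be sketched in a few lines. The cache class, the keyword heuristic, and the specific `temperature` and `top_p` values below are hypothetical choices for illustration. `fake_model_call` stands in for a real inference call so the example runs offline.

```python
import hashlib
import json

class CompletionCache:
    """In-memory cache keyed by a hash of model, prompt, and sampling parameters."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    def _key(self, model, prompt, params):
        raw = json.dumps([model, prompt, params], sort_keys=True)
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_compute(self, model, prompt, params, compute):
        key = self._key(model, prompt, params)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = compute(model, prompt, params)
        self._store[key] = result
        return result

def choose_sampling(prompt):
    """Hypothetical heuristic: lower temperature for code-like prompts."""
    code_markers = ("def ", "class ", "select ", "{", "traceback")
    lowered = prompt.lower()
    if any(marker in lowered for marker in code_markers):
        return {"temperature": 0.1, "top_p": 0.9}
    if len(prompt.split()) > 50:
        return {"temperature": 0.5, "top_p": 0.95}
    return {"temperature": 0.8, "top_p": 0.95}

def fake_model_call(model, prompt, params):
    # Stand-in for a real inference call.
    return f"[{model} @ T={params['temperature']}] {prompt[:20]}"

cache = CompletionCache()
for prompt in ["Fix this: def f(x): return x +", "Write a haiku", "Write a haiku"]:
    params = choose_sampling(prompt)
    print(cache.get_or_compute("glm-4.5-air", prompt, params, fake_model_call))
print(f"hits={cache.hits} misses={cache.misses}")
```

Note the trade-off: caching a completion sampled at a high temperature returns the same text on every hit, so it suits deterministic or low-temperature queries best.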

Security and Compliance

- Data Sanitization: Preprocess inputs to strip sensitive information before sending to the model.

- Access Control: Implement API keys, IP allowlists, and rate throttling to prevent misuse and abuse.

- Audit Logging: Record prompts, completions, and metadata for compliance with corporate and regulatory requirements, especially in finance or healthcare contexts.
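A minimal sketch of the sanitization and audit-logging steps follows. The two regular expressions catch only simple email addresses and card-like digit runs and are illustrative assumptions; a production system would need a much broader detector and a proper log sink.

```python
import hashlib
import json
import re
import time

# Illustrative patterns only; real PII detection needs far wider coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")

def sanitize(text):
    """Replace emails and 13-16 digit card-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)

def audit_record(user_id, prompt, completion, model):
    """Build one JSON log line; the user ID is hashed and the text is sanitized."""
    return json.dumps({
        "ts": time.time(),
        "user": hashlib.sha256(user_id.encode("utf-8")).hexdigest()[:16],
        "model": model,
        "prompt": sanitize(prompt),
        "completion": sanitize(completion),
    })

raw_prompt = "Mail bob@example.com, card 4111 1111 1111 1111 now"
clean_prompt = sanitize(raw_prompt)
print(clean_prompt)
print(audit_record("user-42", raw_prompt, "Done.", "glm-4.5"))
```

Sanitizing before both the model call and the log write means sensitive values never leave the application boundary in either direction.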

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

For developers seeking to integrate GLM-4.5 into their applications, the CometAPI platform offers a robust solution. The API provides OpenAI-compatible interfaces, allowing for seamless integration into existing workflows. Detailed documentation and usage guidelines are available on the Comet API page.

Developers can access GLM-4.5 and GLM-4.5-Air through CometAPI; the model versions listed are the latest as of this article’s publication date. To begin, explore the models’ capabilities in the Playground and consult the API guide for detailed instructions. Before accessing them, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official ones to help you integrate.

Conclusion

GLM-4.5 represents a significant advancement in the field of large language models, offering a versatile solution for a wide range of applications. Its hybrid reasoning architecture, agentic capabilities, and open-source nature make it an attractive option for developers and organizations seeking to leverage advanced AI technologies. By exploring the various access methods outlined in this guide, users can effectively integrate GLM-4.5 into their projects and contribute to its ongoing development.