In 2025-2026 the AI tooling landscape continued to consolidate. Gateway APIs such as CometAPI expanded to offer OpenAI-style access to hundreds of models, while end-user LLM applications such as AnythingLLM kept improving their "Generic OpenAI" provider so that desktop and local-first apps can call any OpenAI-compatible endpoint. As a result, it is now straightforward to route AnythingLLM traffic through CometAPI and gain model choice, cost-based routing, and unified billing, while still using AnythingLLM's local UI and its RAG and agent features.

What is AnythingLLM and why would you want to connect it to CometAPI?

What is AnythingLLM?

AnythingLLM is an open-source, all-in-one AI application, available as a local client and in the cloud, for building chat assistants, retrieval-augmented generation (RAG) workflows, and LLM-based agents. It offers an intuitive UI, a developer API, workspace and agent features, and support for both local and cloud LLMs. It is designed to be private by default and extensible through plugins. AnythingLLM exposes a Generic OpenAI provider that lets it talk to OpenAI-compatible LLM APIs.

What is CometAPI?

CometAPI is a commercial API aggregation platform that exposes more than 500 AI models through an OpenAI-style REST interface with unified billing. In practice, this lets you call models from several vendors (OpenAI, Anthropic, Google/Gemini variants, image and audio models, and so on) through the same https://api.cometapi.com/v1 endpoints and a single API key (format sk-xxxxx). CometAPI supports standard OpenAI-style endpoints such as /v1/chat/completions and /v1/embeddings, which makes it easy to adapt tools that already support OpenAI-compatible APIs.

Why integrate AnythingLLM with CometAPI?

Three practical reasons:

- Model choice and vendor flexibility: AnythingLLM can use any OpenAI-compatible LLM through its Generic OpenAI wrapper. Pointing that wrapper at CometAPI gives immediate access to hundreds of models without changing AnythingLLM's UI or flows.

- Cost and operations optimization: Using CometAPI lets you switch models (or move to cheaper ones) centrally to control costs, and keep one unified bill instead of managing several vendor keys.

- Faster experimentation: You can compare different models (for example, gpt-4o, gpt-4.5, Claude variants, or open-source multimodal models) through the same AnythingLLM UI, which is useful for agents, RAG answers, summarization, and multimodal tasks.

What environment and prerequisites should you prepare before integrating?

System and software requirements (high level)

- A desktop or server running AnythingLLM (Windows, macOS, Linux), either as a desktop install or a self-hosted instance. Confirm that you are on a recent version that exposes the LLM Preference / AI Providers settings.

- A CometAPI account and an API key (an sk-xxxxx style secret). You will use this secret in AnythingLLM's Generic OpenAI provider.

- Network connectivity from your machine to https://api.cometapi.com (no firewall blocking outbound HTTPS).

- Optional but recommended: a modern Python or Node environment for testing (Python 3.10+ or Node 18+), curl, and an HTTP client (Postman / HTTPie) to verify that CometAPI works before integrating it with AnythingLLM.

AnythingLLM-specific conditions

The Generic OpenAI LLM provider is the recommended route for endpoints that mimic the OpenAI API surface. AnythingLLM's documentation warns that this provider is aimed at developers and that you should understand the inputs you supply. If your endpoint does not support streaming, AnythingLLM includes a setting to disable streaming for Generic OpenAI.

Security and operational checklist

- Treat the CometAPI key like any other secret: do not commit it to repositories, and store it in OS keychains or environment variables wherever possible.

- If you plan to use confidential documents in RAG, make sure the endpoint's privacy guarantees meet your compliance needs (see CometAPI's documentation and terms).

- Set upper limits on the number of output tokens and on the context window to avoid runaway costs.
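As a minimal sketch of the first checklist item, the helper below reads the key from an environment variable instead of hard-coding it, and masks it before logging. The variable name COMETAPI_KEY and both function names are illustrative assumptions, not something CometAPI or AnythingLLM prescribes.

```python
import os


def load_comet_key(env=None, var_name="COMETAPI_KEY"):
    """Return the API key from an environment mapping, or raise if it is missing.

    COMETAPI_KEY is a hypothetical variable name chosen for this example.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export it instead of hard-coding the key")
    return key


def mask_key(key, visible=4):
    """Mask a secret for logging, keeping only the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


# Example with an explicit mapping, so the snippet runs without touching the real environment.
demo_key = load_comet_key({"COMETAPI_KEY": " sk-test1234 "})
print(mask_key(demo_key))
```

Passing the mapping explicitly keeps the helper easy to test; in real use you would call load_comet_key() with no arguments so it reads os.environ.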

How do you configure AnythingLLM to use CometAPI (step by step)?

The steps below walk through the setup in order, including quick requests for testing the connection before you save the settings in the AnythingLLM UI.

Step 1: Get your CometAPI key

- Sign up for or log in to CometAPI.

- Go to "API Keys" and generate a key. You will receive a string that looks like sk-xxxxx. Keep it secret.

Step 2: Verify that CometAPI works with a quick request

Use curl or Python to call a simple chat completion endpoint and confirm connectivity.

curl example

    curl -X POST "https://api.cometapi.com/v1/chat/completions" \
      -H "Authorization: Bearer sk-xxxxx" \
      -H "Content-Type: application/json" \
      -d '{
        "model": "gpt-4o",
        "messages": [{"role": "user", "content": "Say hello in one short sentence."}],
        "max_tokens": 50
      }'

If this returns a 200 status and a JSON response containing a choices array, your key and network are working. (CometAPI's documentation describes the OpenAI-style interface and endpoints.)

Python example (requests)

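    import requests

    url = "https://api.cometapi.com/v1/chat/completions"
    # Replace sk-xxxxx with your own CometAPI key.
    headers = {"Authorization": "Bearer sk-xxxxx", "Content-Type": "application/json"}
    payload = {
        "model": "gpt-4o",
        # Minimal one-turn conversation used as a connectivity check.
        "messages": [{"role": "user", "content": "Say hello in one short sentence."}],
        "max_tokens": 64,
    }

    # A 200 status with a "choices" array in the body means the key and network work.
    r = requests.post(url, json=payload, headers=headers, timeout=15)
    print(r.status_code, r.json())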
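To go one step beyond printing the raw body, the sketch below pulls the assistant text out of an OpenAI-style chat completion response and fails loudly when the choices array is missing. The function name first_reply and the sample body are illustrative; the sample follows the usual OpenAI response shape, which is assumed here to be what the gateway returns.

```python
def first_reply(response_json):
    """Return the assistant text from an OpenAI-style chat completion body."""
    choices = response_json.get("choices") or []
    if not choices:
        # OpenAI-style APIs usually describe failures in an "error" object; surface it if present.
        raise ValueError(f"no choices in response: {response_json.get('error')}")
    return choices[0]["message"]["content"]


# Hand-written sample body in the OpenAI response shape (not real gateway output).
sample = {
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "Hello!"}, "finish_reason": "stop"}
    ]
}
print(first_reply(sample))
```

With the requests example, this would be called as first_reply(r.json()) after checking that the status code is 200.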

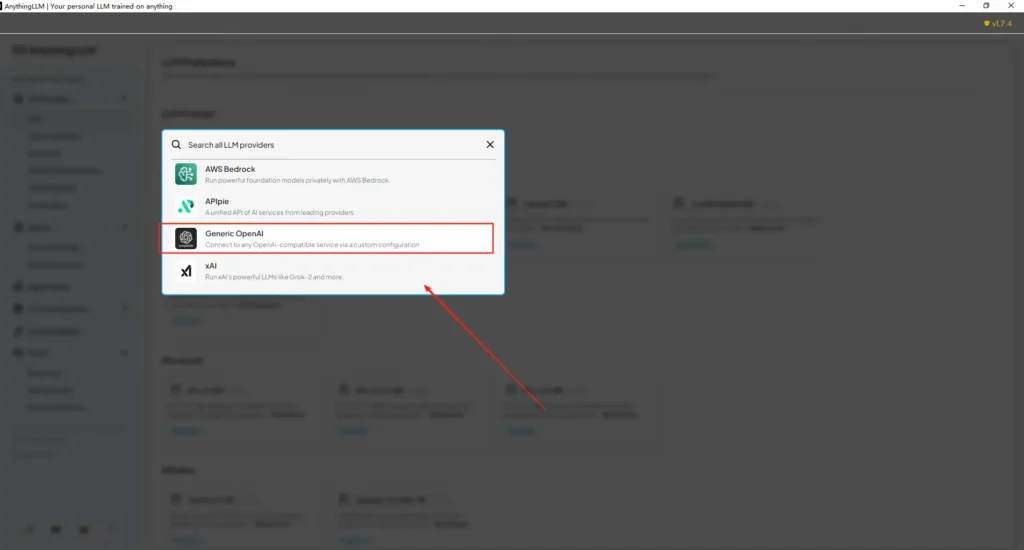

Step 3: Configure AnythingLLM (UI)

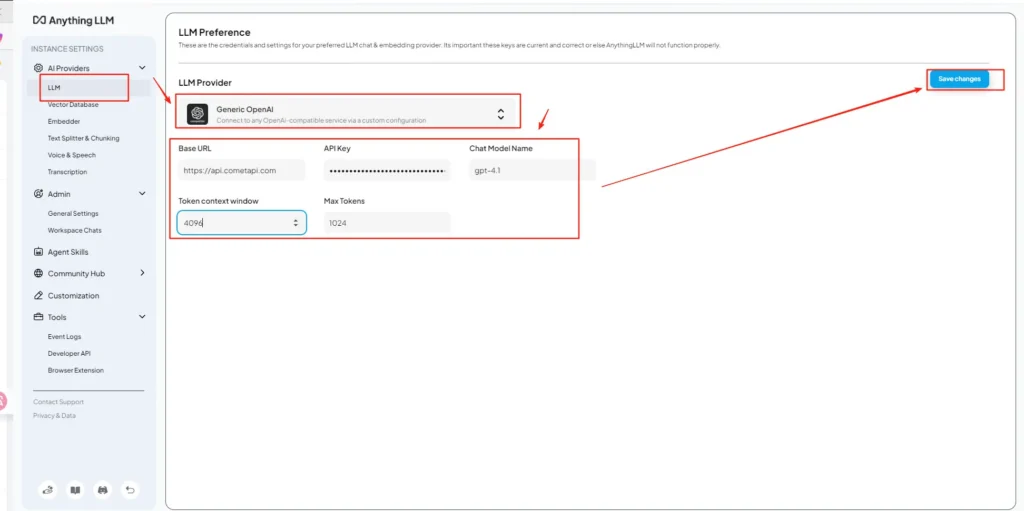

Open AnythingLLM → Settings → AI Providers → LLM Preference (or the similar path in your version). Select the Generic OpenAI provider and fill in the fields as follows:

API configuration (example)

• Go to the AnythingLLM settings menu and find LLM Preference under AI Providers.

• Select Generic OpenAI as the model provider and enter https://api.cometapi.com/v1 in the URL field.

• Paste the sk-xxxxx API key from CometAPI into the API key field. Fill in the "Token context window" and "Max Tokens" fields according to the model you want. You can also set the model name on this page, for example gpt-4o.

This matches AnythingLLM's "Generic OpenAI" guidance (a developer-oriented wrapper) and CometAPI's OpenAI-compatible base URL approach.
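For self-hosted installs, the same values can usually be set through environment variables instead of the UI. The sketch below renders such a fragment. Only LLM_PROVIDER is named in this article; the GENERIC_OPEN_AI_* names are recalled from AnythingLLM's example environment file and should be treated as assumptions to verify against your version. The token limit is an illustrative value.

```python
def render_env(base_url, api_key, model, token_limit):
    """Render .env lines for AnythingLLM's Generic OpenAI provider.

    The GENERIC_OPEN_AI_* variable names are assumptions; check them against
    the .env.example file shipped with your AnythingLLM version.
    """
    settings = {
        "LLM_PROVIDER": "generic-openai",
        "GENERIC_OPEN_AI_BASE_PATH": base_url,
        "GENERIC_OPEN_AI_API_KEY": api_key,
        "GENERIC_OPEN_AI_MODEL_PREF": model,
        "GENERIC_OPEN_AI_MODEL_TOKEN_LIMIT": str(token_limit),
    }
    return "\n".join(f"{name}='{value}'" for name, value in settings.items())


# Placeholder key and an illustrative token limit; substitute your own values.
env_text = render_env("https://api.cometapi.com/v1", "sk-xxxxx", "gpt-4o", 128000)
print(env_text)
```

Generating the fragment from one function makes it harder for the UI values and the file values to drift apart, but writing the lines by hand works just as well.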

Step 4: Set model names and token limits

On the same settings screen, add or customize the model names exactly as CometAPI publishes them (for example, gpt-4o, minimax-m2, kimi-k2-thinking) so that the AnythingLLM UI can present those models to users. CometAPI publishes the model strings for each vendor.
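Because model names must match exactly, it can help to check them against a model list before typing them into the UI. This sketch assumes the list has the OpenAI-style shape (a data array of objects with an id field), such as what an OpenAI-compatible GET /v1/models endpoint returns; whether CometAPI exposes that endpoint in this form is an assumption to confirm in its documentation. The sample list is hand-written.

```python
def unknown_models(wanted, models_response):
    """Return the names in `wanted` that do not appear in an OpenAI-style model list."""
    available = {item["id"] for item in models_response.get("data", [])}
    return [name for name in wanted if name not in available]


# Hand-written sample in the OpenAI "list models" shape (not real gateway output).
sample_models = {
    "object": "list",
    "data": [{"id": "gpt-4o"}, {"id": "minimax-m2"}, {"id": "kimi-k2-thinking"}],
}
print(unknown_models(["gpt-4o", "GPT-4o", "kimi-k2-thinking"], sample_models))
```

The comparison is deliberately case-sensitive, since a name that differs only in capitalization is still a different string to the gateway.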

Step 5: Test in AnythingLLM

Start a new chat or use an existing workspace, select the Generic OpenAI provider (if you have several providers), choose one of the CometAPI model names you added, and run a simple prompt. If you get coherent responses, the integration is complete.

How AnythingLLM uses these settings internally

AnythingLLM's Generic OpenAI wrapper builds OpenAI-style requests (/v1/chat/completions, /v1/embeddings). Once you set the base URL and supply the CometAPI key, AnythingLLM routes chats, agent calls, and embedding requests through CometAPI transparently. If you use AnythingLLM agents (the @agent flows), they inherit the same provider.
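The following sketch illustrates the general idea of how an OpenAI-compatible client turns a base URL and key into a concrete request. It is not AnythingLLM's actual code; build_request is a hypothetical helper that only shows how the base URL, endpoint path, and bearer token fit together.

```python
import json


def build_request(base_url, api_key, path, payload):
    """Compose the URL, headers, and JSON body for an OpenAI-style call."""
    # Join base URL and path without doubling or dropping the slash between them.
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    return url, headers, json.dumps(payload)


url, headers, body = build_request(
    "https://api.cometapi.com/v1/",
    "sk-xxxxx",  # placeholder key
    "/chat/completions",
    {"model": "gpt-4o", "messages": [{"role": "user", "content": "ping"}]},
)
print(url)
```

Since the base URL already ends in /v1, the path passed here is /chat/completions rather than /v1/chat/completions; entering the base URL without /v1 in the UI is a common cause of 404 responses with OpenAI-compatible clients.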

What are the best practices and potential pitfalls?

Best practices

- Use context settings that fit the model: AnythingLLM's token context window and max tokens should match the model you chose on CometAPI. A mismatch leads to unexpected truncation or failed calls.

- Protect your API keys: Store CometAPI keys in environment variables and/or a Kubernetes secrets manager; never commit them to Git. AnythingLLM stores keys in its local settings if you enter them in the UI, so treat the host's storage as sensitive.

- Start with cheaper or smaller models for experimental flows: Use CometAPI to try low-cost models during development and reserve premium models for production. CometAPI explicitly advertises cost-based switching and unified billing.

- Monitor usage and set alerts: CometAPI offers usage dashboards. Set budgets and alerts to avoid surprise charges.

- Test agents and tools in isolation: AnythingLLM agents can trigger actions, so test them first with safe prompts and on test instances.
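The first best practice comes down to simple arithmetic: prompt tokens plus requested output tokens must fit inside the model's context window. A minimal sketch of that check, with illustrative function names and numbers (the 8192 window is an example, not a property of any particular model):

```python
def fits_context(prompt_tokens, max_output_tokens, context_window):
    """True when the prompt plus the requested output fits in the context window."""
    return prompt_tokens + max_output_tokens <= context_window


def clamp_max_tokens(prompt_tokens, requested, context_window):
    """Reduce the requested output size so the call stays inside the window."""
    room = max(context_window - prompt_tokens, 0)
    return min(requested, room)


# Illustrative numbers: a 7000-token prompt in an 8192-token window.
print(fits_context(7000, 2000, 8192))
print(clamp_max_tokens(7000, 2000, 8192))
```

Here the request for 2000 output tokens does not fit, and clamping leaves room for 1192. Clamping is only one policy; trimming the prompt or choosing a model with a larger window are equally valid responses.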

Common pitfalls

- UI vs. .env conflicts: When self-hosting, settings made in the UI can be overwritten by .env changes (and vice versa). Check the generated /app/server/.env if things revert after a restart. Community issue reports mention LLM_PROVIDER being reset.

- Model name mismatches: Using a model name that is not available on CometAPI results in a 400/404 error from the gateway. Always check the available models in CometAPI's model list.

- Token limits and streaming: If you need streamed responses, check that the CometAPI model supports streaming (and that your version of the AnythingLLM UI does too). Some providers differ in their streaming semantics.
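To make the streaming pitfall concrete, the sketch below reassembles text from an OpenAI-style streamed response, where each server-sent event line starts with "data:" and the stream ends with "data: [DONE]". It assumes the gateway follows that OpenAI convention for the chosen model; the sample lines are hand-written, and collect_stream_text is an illustrative name.

```python
import json


def collect_stream_text(lines):
    """Concatenate the content deltas from OpenAI-style SSE lines."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker used by the OpenAI format
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            # Some chunks carry only a role or a finish reason, with no content.
            parts.append(choice.get("delta", {}).get("content") or "")
    return "".join(parts)


# Hand-written sample stream in the OpenAI chunk format.
sample_lines = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
print(collect_stream_text(sample_lines))
```

If a model's stream does not parse this way, disabling streaming for the Generic OpenAI provider is the simpler workaround.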

What practical use cases does this integration enable?

Retrieval-Augmented Generation (RAG)

Use AnythingLLM's document loaders and vector database with CometAPI's LLMs to generate context-aware answers. You can pair lightweight embeddings with more capable chat models, or keep everything on CometAPI for unified billing. AnythingLLM's RAG flows are a core built-in feature.
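The retrieval step behind RAG can be sketched in a few lines: rank stored chunks by cosine similarity to the query embedding, then place the best matches in the prompt. This is a toy illustration with hand-made three-dimensional vectors, not AnythingLLM's implementation; in practice the vectors would come from an embeddings endpoint and a vector database.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, chunks, k=2):
    """Return the texts of the k chunks most similar to the query vector."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, context_chunks):
    """Assemble a simple grounded prompt from the retrieved chunks."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy (text, embedding) pairs; real embeddings have hundreds of dimensions.
chunks = [
    ("Refunds are processed within 5 days.", [0.9, 0.1, 0.0]),
    ("Our office is in Lisbon.", [0.0, 0.2, 0.9]),
    ("Refund requests need an order ID.", [0.8, 0.3, 0.1]),
]
best = top_k([1.0, 0.2, 0.0], chunks)
print(build_prompt("How do refunds work?", best))
```

The resulting prompt would then be sent as the user message of a chat completion request to whichever model the workspace uses.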

Agent automation

AnythingLLM supports @agent workflows (browsing pages, calling tools, running automations). Routing the agents' LLM calls through CometAPI gives you a choice of models for the control and interpretation steps without modifying the agent code.

Multi-model A/B testing and cost optimization

Switch models per workspace or feature (for example, gpt-4o for production responses and gpt-4o-mini for development). CometAPI makes model swaps trivial and centralizes costs.
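When you call the gateway from your own scripts, the same per-environment switch can be expressed as a small lookup. The mapping and the pick_model helper below are illustrative assumptions; inside AnythingLLM itself the model is chosen in the workspace or provider settings rather than in code.

```python
# Illustrative mapping from environment to model name, following the example in the text.
MODEL_BY_ENV = {
    "production": "gpt-4o",
    "development": "gpt-4o-mini",
}


def pick_model(environment, overrides=None):
    """Choose a model for an environment, falling back to the development model."""
    table = {**MODEL_BY_ENV, **(overrides or {})}
    return table.get(environment, table["development"])


print(pick_model("production"))
print(pick_model("staging"))
```

Falling back to the cheaper model for unknown environments is a cost-conscious default; a stricter setup might raise an error instead.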

Multimodal pipelines

CometAPI provides image, audio, and specialized models. AnythingLLM's multimodal support (via providers), combined with CometAPI's models, enables image captioning, multimodal summarization, or audio transcription through the same interface.
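For image captioning outside the UI, a request body can be sketched in the OpenAI vision message format, where the user message contains a text part and an image_url part carrying a base64 data URL. Whether a given CometAPI model accepts this format is an assumption to verify per model; the helper name and default prompt are illustrative.

```python
import base64


def image_caption_payload(model, image_bytes, mime="image/png",
                          prompt="Describe this image in one sentence."):
    """Build an OpenAI-style chat payload that includes an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url",
                     "image_url": {"url": f"data:{mime};base64,{encoded}"}},
                ],
            }
        ],
        "max_tokens": 100,
    }


# Tiny placeholder bytes stand in for a real image file's contents.
payload = image_caption_payload("gpt-4o", b"abc")
print(payload["messages"][0]["content"][1]["image_url"]["url"])
```

The payload would be posted to the chat completions endpoint in the same way as the text-only examples earlier in the article.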

Conclusion

CometAPI continues to position itself as a multi-model gateway (more than 500 models, OpenAI-style API), which makes it a natural partner for applications like AnythingLLM that already support a Generic OpenAI provider. Likewise, AnythingLLM's generic provider and recent configuration options make it easy to connect to such gateways. This convergence simplifies experimentation and the move to production as of late 2025.

How to get started with CometAPI

CometAPI is a unified API platform that aggregates more than 500 AI models from leading providers, such as OpenAI's GPT series, Google's Gemini, Anthropic's Claude, Midjourney, and Suno, into a single developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI simplifies the integration of AI capabilities into your applications. Whether you are building chatbots, image generators, music composers, or data-driven analytics pipelines, CometAPI lets you iterate faster, control costs, and stay vendor-independent while drawing on the latest advances across the AI ecosystem.

To get started, explore the models' capabilities in the CometAPI Playground and consult the API guide for detailed instructions. Before accessing them, make sure you have logged in to CometAPI and obtained an API key. CometAPI states that it offers prices well below the official prices to help you integrate.
