How to use CometAPI in LobeChat

LobeChat and CometAPI are two fast-moving projects in the open AI ecosystem. In September 2025 LobeChat merged a full CometAPI provider integration that makes it straightforward to call CometAPI’s unified, OpenAI-compatible endpoints from LobeChat. This article explains what LobeChat and CometAPI are, why you’d integrate them, how to set up and configure the integration step by step, concrete use cases, and production tips for cost, reliability and observability.

What is LobeChat and what features does it offer?

LobeChat is an open-source, modern chat framework designed to host multi-provider LLM chat applications with a polished UI and deployment tooling. It’s built to support multimodal inputs (text, images, audio), knowledge-base / RAG workflows, branching conversations and “chain of thought” visualizations, plus an extensible provider/plugin system so you can swap in different model backends without rearchitecting your app. LobeChat’s docs and repo present it as a production-ready framework for both self-hosted and cloud deployments, with environment-driven configuration and a marketplace for third-party MCP (Model Context Protocol) plugins.

Key LobeChat capabilities at a glance

- Multi-provider support (OpenAI, Anthropic, Google Gemini, Ollama, etc.).

- File upload + knowledge base for RAG workflows (documents, PDFs, audio).

- Dev-friendly configuration via environment variables and settings-URL import.

- Extensible runtime: providers are configured with small adapter runtimes so new backends are pluggable.

What is CometAPI?

CometAPI is a unified AI access layer that exposes 500+ models from many underlying providers through a single, OpenAI-compatible API surface. It is positioned to let developers pick model endpoints for performance, cost or capability without lock-in, and to centralize billing, routing and access. CometAPI advertises unified endpoints for chat/completions and a models list API to discover available model IDs.
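Because the models list is how you discover which IDs you can call, a minimal TypeScript sketch of querying it may help. It assumes the endpoint is `GET https://api.cometapi.com/v1/models` with Bearer authentication and an OpenAI-style `{ data: [{ id }] }` response; `listCometModels`, `parseModelIds` and `FetchLike` are illustrative names, not part of any CometAPI SDK.

```typescript
// Sketch: list the model IDs CometAPI currently exposes.
// Assumptions: GET {base}/models accepts Bearer auth and returns the
// OpenAI-style shape { data: [{ id: string, ... }] }.
const COMETAPI_BASE_URL = "https://api.cometapi.com/v1";

// Minimal fetch signature so the sketch does not depend on DOM or Node typings;
// Node 18+'s global fetch satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string> },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Extract every string `id` from an OpenAI-style model list payload,
// silently skipping malformed entries.
function parseModelIds(payload: unknown): string[] {
  if (typeof payload !== "object" || payload === null) return [];
  const data = (payload as { data?: unknown }).data;
  if (!Array.isArray(data)) return [];
  return data
    .map((entry) =>
      typeof entry === "object" && entry !== null ? (entry as { id?: unknown }).id : undefined,
    )
    .filter((id): id is string => typeof id === "string");
}

// Fetch the list and return only the IDs; non-2xx responses become errors.
async function listCometModels(apiKey: string, fetchImpl: FetchLike): Promise<string[]> {
  const res = await fetchImpl(`${COMETAPI_BASE_URL}/models`, {
    method: "GET",
    headers: { Authorization: `Bearer ${apiKey}` },
  });
  if (!res.ok) throw new Error(`CometAPI model list request failed: HTTP ${res.status}`);
  return parseModelIds(await res.json());
}
```

In Node 18+ you can pass the global `fetch` as `fetchImpl`; injecting it keeps the parsing logic testable without network access.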

Why CometAPI is attractive to teams

- Model choice & portability: switch among many cutting-edge models without changing integration code significantly.

- OpenAI-compatible endpoints: many client libraries and frameworks that expect OpenAI-style HTTP endpoints can work with CometAPI by changing base URL + auth. (Practical examples show https://api.cometapi.com/v1/ as an OpenAI-compatible surface.)

- Developer docs & integrations: CometAPI publishes docs and step-by-step guides for integrating with tools like LlamaIndex and other low-code platforms.
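The “change the base URL and auth” point can be sketched as a small request builder. This assumes CometAPI accepts OpenAI-format `POST /chat/completions` bodies at the base URL shown; `buildChatRequest` and `HttpRequest` are hypothetical helpers, and the model ID you pass should come from CometAPI’s model list.

```typescript
// Sketch: an OpenAI-style chat completion request aimed at CometAPI.
// Assumption: CometAPI accepts POST {base}/chat/completions with a Bearer key,
// matching the OpenAI wire format. Only the base URL and key differ from OpenAI.
const COMETAPI_BASE_URL = "https://api.cometapi.com/v1";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface HttpRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

function buildChatRequest(
  apiKey: string,
  model: string,
  messages: ChatMessage[],
  baseUrl: string = COMETAPI_BASE_URL,
): HttpRequest {
  return {
    // Trim trailing slashes so ".../v1/" and ".../v1" both yield a clean path.
    url: `${baseUrl.replace(/\/+$/, "")}/chat/completions`,
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify({ model, messages }),
  };
}
```

Pointing `baseUrl` at another OpenAI-compatible endpoint is the only change needed to switch backends, which is the portability argument in miniature. In Node 18+, `fetch(req.url, req)` sends the request.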

Why should you integrate CometAPI into LobeChat?

Short answer: flexibility, cost control, and fast access to new models. LobeChat is built to be provider-agnostic; plugging CometAPI in gives your LobeChat deployment the ability to call many different models through the same code path — swap models for throughput, latency, cost or capability without changing your UI or prompt flows. Also, the community and maintainers have actively added CometAPI provider support to LobeChat, making integration smoother.

Business and technical benefits

- Model diversity without code churn. Need to evaluate Gemini, Claude or a niche image model? CometAPI can expose those model IDs through a single API. This reduces the amount of per-provider plumbing in LobeChat.

- Cost optimization. CometAPI lets you route to cheaper models or select lower-cost providers dynamically, which can substantially reduce per-conversation costs for high-volume deployments.

- Simpler secret management. One API key to manage in LobeChat’s settings or Docker environment instead of multiple provider keys. The LobeChat platform already supports enabling a provider via ENABLED_<PROVIDER> and a provider key env var pattern, so the integration is operationally consistent.

- Keeps LobeChat codebase minimal: provider logic is encapsulated and configured with env vars.
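On a self-hosted server, the env-var pattern boils down to reading a flag and a key at startup. The variable names `ENABLED_COMETAPI` and `COMETAPI_API_KEY` below are assumptions that follow the ENABLED_<PROVIDER> convention; check LobeChat’s environment-variable docs for the exact names before relying on them.

```typescript
// Sketch: read CometAPI provider settings from server-side environment variables.
// ENABLED_COMETAPI and COMETAPI_API_KEY are hypothetical names following the
// ENABLED_<PROVIDER> + provider-key pattern; confirm them in LobeChat's docs.
interface CometProviderSettings {
  enabled: boolean;
  apiKey: string | undefined;
}

function readCometSettings(env: Record<string, string | undefined>): CometProviderSettings {
  const flag = (env["ENABLED_COMETAPI"] ?? "").trim().toLowerCase();
  const enabled = flag === "1" || flag === "true";
  // Treat an empty or whitespace-only key as missing.
  const apiKey = env["COMETAPI_API_KEY"]?.trim() || undefined;
  // Fail fast at startup rather than on the first user request.
  if (enabled && apiKey === undefined) {
    throw new Error("ENABLED_COMETAPI is set but COMETAPI_API_KEY is missing");
  }
  return { enabled, apiKey };
}
```

Calling it once with `process.env` at startup keeps the key server-side and makes a misconfigured deployment obvious, instead of surfacing as a confusing error in the chat UI.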

How do I set up and integrate CometAPI in LobeChat?

This section gives an actionable, step-by-step recipe: obtain an API key, enable CometAPI as a model provider in LobeChat’s settings, and test a chat call. The steps follow LobeChat’s provider patterns and CometAPI’s OpenAI-compatible endpoints.

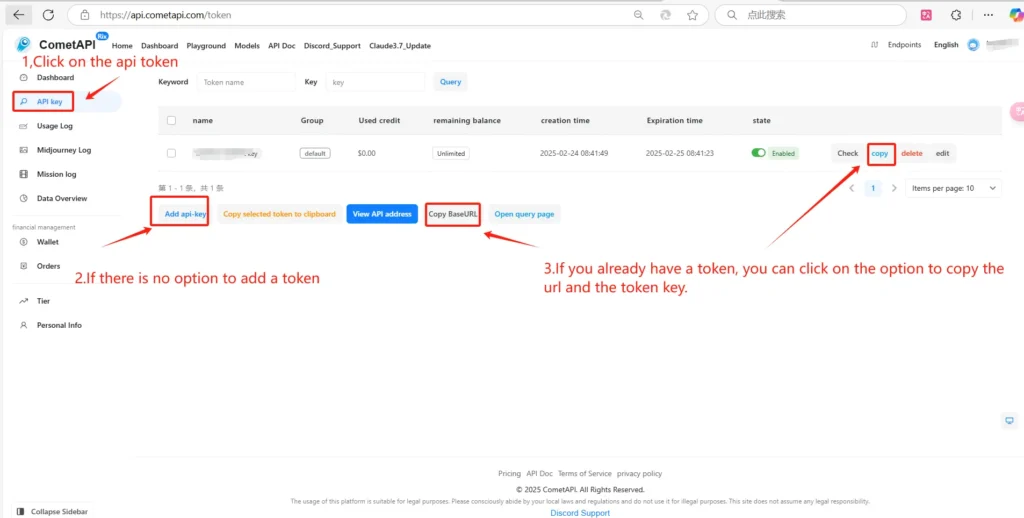

1) Get a CometAPI account and API key

Sign up at CometAPI and open the API console. To register a CometAPI account, use an email address or sign in directly with Google one-click login.

After completing registration, go to the playground and click “Add new secret key” to get a new API key.

2) Configuring CometAPI in LobeChat

- Open LobeChat’s settings menu: click your avatar, then choose Application Settings.

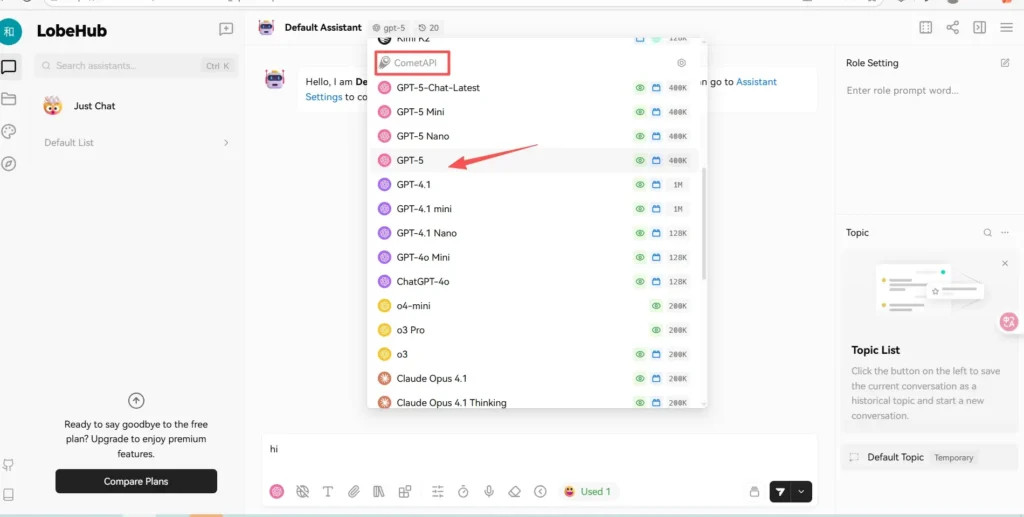

- Select CometAPI as the model provider.

- Paste your CometAPI key (sk-xxxxx) into the API key field, turn on the provider toggle, and select a model to check the connection.

3) Testing the Call

Select one of CometAPI’s models and send a simple test prompt in LobeChat. If the call succeeds, you will receive a response; if it fails, check that your configuration is correct or contact CometAPI’s online customer service.
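If you want the same check outside the UI (for example in CI or a deployment script), a minimal sketch might look like this. It assumes the OpenAI-style endpoint and response shape (`choices[0].message.content`); `smokeTestCometApi` and `extractReply` are hypothetical helpers, and the model ID is whichever one you enabled in LobeChat.

```typescript
// Sketch: a scripted version of the "send a test prompt" check.
// Assumptions: POST https://api.cometapi.com/v1/chat/completions accepts the
// OpenAI request format and returns choices[0].message.content.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface SmokeResult {
  ok: boolean;
  detail: string;
}

// Defensively read choices[0].message.content from an OpenAI-style payload.
function extractReply(payload: unknown): string | undefined {
  if (typeof payload !== "object" || payload === null) return undefined;
  const choices = (payload as { choices?: unknown }).choices;
  if (!Array.isArray(choices) || choices.length === 0) return undefined;
  const first = choices[0] as { message?: { content?: unknown } } | null | undefined;
  const content = first?.message?.content;
  return typeof content === "string" ? content : undefined;
}

async function smokeTestCometApi(apiKey: string, model: string, fetchImpl: FetchLike): Promise<SmokeResult> {
  try {
    const res = await fetchImpl("https://api.cometapi.com/v1/chat/completions", {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
      body: JSON.stringify({ model, messages: [{ role: "user", content: "Reply with: pong" }] }),
    });
    // Surface the HTTP status so the key, model ID and toggle can be rechecked.
    if (!res.ok) return { ok: false, detail: `HTTP ${res.status}` };
    const reply = extractReply(await res.json());
    return reply !== undefined
      ? { ok: true, detail: reply }
      : { ok: false, detail: "response had no choices[0].message.content" };
  } catch (err) {
    // Network failures (DNS, refused connection, etc.) end up here.
    return { ok: false, detail: `request failed: ${String(err)}` };
  }
}
```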

CometAPI currently offers more than 30 of the latest models from major developers (including OpenAI, Grok, Claude and Gemini models) in LobeChat.

How can you extend the integration (advanced enhancements)?

Want to go beyond the basics? Here are solid next steps that take your integration from functional to production-grade.

Enhancement 1: Dynamic model orchestration (multi-model agents)

Create agent logic in LobeChat that dynamically farms out subtasks to different CometAPI models (e.g., a small embedding model for retrieval, a medium model for drafting, and a high-capability model for summarization). Use LobeChat’s function-call/plugin system to coordinate the workflow and aggregate the final response.
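A minimal sketch of the routing idea, assuming a sequential retrieve, draft, summarize chain in which each stage consumes the previous stage’s output. The model IDs are placeholders, and `callModel` stands in for whatever plugin or server function performs the CometAPI request; nothing here is LobeChat’s actual agent API.

```typescript
// Sketch: route each subtask to a different CometAPI model in a sequential chain.
// Model IDs are hypothetical placeholders; callModel is a stand-in for the real
// CometAPI call made by your plugin or server code.
type Subtask = "retrieval" | "drafting" | "summarization";

const MODEL_FOR_SUBTASK: Record<Subtask, string> = {
  retrieval: "example-small-model",
  drafting: "example-medium-model",
  summarization: "example-large-model",
};

type CallModel = (modelId: string, input: string) => Promise<string>;

interface StepResult {
  task: Subtask;
  model: string;
  output: string;
}

async function runPipeline(query: string, tasks: Subtask[], callModel: CallModel): Promise<StepResult[]> {
  const results: StepResult[] = [];
  let context = query;
  for (const task of tasks) {
    const model = MODEL_FOR_SUBTASK[task];
    const output = await callModel(model, context);
    results.push({ task, model, output });
    context = output; // each stage consumes the previous stage's output
  }
  return results; // the last entry holds the aggregated final answer
}
```

Keeping the task-to-model table in one place means swapping a model for cost or quality is a one-line change.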

Enhancement 2: Caching embeddings and responses

When using embeddings (for RAG), compute embeddings once and cache them to reduce overhead. If you’re calling CometAPI for embeddings, store vector representations in your vector DB and only recompute on content changes. This reduces tokens and cost.
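The “only recompute on content changes” rule can be expressed as a small cache keyed by document ID. In this sketch `embed` is a stand-in for a CometAPI embeddings call (hypothetical here), and the in-memory `Map` stands in for your vector DB; a production version would typically store a content hash rather than the full text.

```typescript
// Sketch: compute each document's embedding once and reuse it until the content changes.
// `embed` is a hypothetical stand-in for a CometAPI embeddings request.
type Embed = (text: string) => Promise<number[]>;

interface CacheEntry {
  content: string;
  vector: number[];
}

class EmbeddingCache {
  private readonly entries = new Map<string, CacheEntry>();
  private readonly embed: Embed;
  apiCalls = 0; // how many embeddings were actually requested (i.e. paid for)

  constructor(embed: Embed) {
    this.embed = embed;
  }

  async vectorFor(docId: string, content: string): Promise<number[]> {
    const cached = this.entries.get(docId);
    // Unchanged content: reuse the stored vector, no API call.
    if (cached !== undefined && cached.content === content) return cached.vector;
    this.apiCalls++;
    const vector = await this.embed(content);
    this.entries.set(docId, { content, vector });
    return vector;
  }
}
```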

Enhancement 3: Per-tenant configuration and quotas

If you run a multi-tenant LobeChat instance, control per-tenant limits (requests/hour, model tiers) by writing a middleware that maps tenant id → allowed model list (using CometAPI model IDs). This enables premium tiers with access to better models.
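A sketch of such a middleware check, run before any CometAPI call. Tenant IDs, model IDs and limits are hypothetical, and the fixed one-hour window is only one simple choice (sliding windows or token buckets are common alternatives).

```typescript
// Sketch: per-tenant model allow-lists plus an hourly request quota.
// All tenant IDs, model IDs and limits are hypothetical.
interface TenantPolicy {
  allowedModels: string[];
  requestsPerHour: number;
}

type GateResult = { allowed: true } | { allowed: false; reason: string };

const HOUR_MS = 60 * 60 * 1000;

class TenantGate {
  private readonly policies: Map<string, TenantPolicy>;
  private readonly usage = new Map<string, { windowStart: number; count: number }>();

  constructor(policies: Map<string, TenantPolicy>) {
    this.policies = policies;
  }

  // Fixed one-hour window per tenant; nowMs is injectable to keep the logic testable.
  check(tenantId: string, modelId: string, nowMs: number = Date.now()): GateResult {
    const policy = this.policies.get(tenantId);
    if (policy === undefined) return { allowed: false, reason: "unknown tenant" };
    if (!policy.allowedModels.includes(modelId)) return { allowed: false, reason: "model not in tenant tier" };
    let bucket = this.usage.get(tenantId);
    if (bucket === undefined || nowMs - bucket.windowStart >= HOUR_MS) {
      bucket = { windowStart: nowMs, count: 0 }; // start a fresh window
      this.usage.set(tenantId, bucket);
    }
    if (bucket.count >= policy.requestsPerHour) return { allowed: false, reason: "hourly quota exceeded" };
    bucket.count++;
    return { allowed: true };
  }
}
```

In a multi-instance deployment the counters would need to live in shared storage rather than process memory.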

Enhancement 4: Use model metadata and health checks

Implement provider health checks that call a lightweight CometAPI “model ping” or a minimal chat call to verify that latency is within your SLAs, and fail over gracefully to fallback models otherwise. Keep a heartbeat monitor and show provider status in the LobeChat admin UI.
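The fallback logic can be sketched as a priority-ordered probe. Here `probe` is a stand-in for a minimal CometAPI chat call (hypothetical), the SLA value is yours to choose, and the clock is injectable so the logic can be tested; the returned statuses are what an admin view could display.

```typescript
// Sketch: probe candidate models in priority order and pick the first one that
// answers within the latency SLA. `probe` is a hypothetical stand-in that
// resolves on success and rejects on failure.
type Probe = (modelId: string) => Promise<void>;

interface HealthStatus {
  modelId: string;
  healthy: boolean;
  latencyMs?: number;
  error?: string;
}

async function pickHealthyModel(
  candidates: string[],
  probe: Probe,
  slaMs: number,
  now: () => number = Date.now,
): Promise<{ chosen: string | undefined; statuses: HealthStatus[] }> {
  const statuses: HealthStatus[] = [];
  for (const modelId of candidates) {
    const start = now();
    try {
      await probe(modelId);
      const latencyMs = now() - start;
      const healthy = latencyMs <= slaMs;
      statuses.push({ modelId, healthy, latencyMs });
      if (healthy) return { chosen: modelId, statuses };
    } catch (err) {
      statuses.push({ modelId, healthy: false, error: String(err) });
    }
  }
  return { chosen: undefined, statuses }; // every candidate failed: surface this to operators
}
```

This measures latency after the probe returns and does not cancel a hung request; a production version would also enforce a timeout, for example with an `AbortController` passed to `fetch`.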

What pitfalls should you watch out for?

- API key exposure: Never store CometAPI keys in client code. Always keep them server-side (LobeChat’s server layer).

- Model name drift: CometAPI may add or deprecate model IDs. Use a server mapping and update COMETAPI_MODEL_LIST when you want to expose new models.

- Response format differences: Although CometAPI aims for OpenAI-compatibility, some models or meta fields may differ; always map and sanitize responses before showing them to users.
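Model name drift can be caught by diffing the IDs you expose against the IDs CometAPI currently lists. This sketch assumes COMETAPI_MODEL_LIST is a plain comma-separated list of IDs; LobeChat’s model-list variables support additional syntax (such as `+`/`-` prefixes) that this sketch does not parse.

```typescript
// Sketch: detect drift between configured model IDs and the live CometAPI list.
// Assumes a plain comma-separated COMETAPI_MODEL_LIST (no +/- or display-name syntax).
function parseModelList(raw: string | undefined): string[] {
  if (raw === undefined) return [];
  return raw
    .split(",")
    .map((id) => id.trim())
    .filter((id) => id.length > 0);
}

interface DriftReport {
  missing: string[]; // configured but no longer offered: remove or remap
  newlyAvailable: string[]; // offered but not yet exposed: consider adding
}

function detectDrift(configured: string[], live: string[]): DriftReport {
  const liveSet = new Set(live);
  const configuredSet = new Set(configured);
  return {
    missing: configured.filter((id) => !liveSet.has(id)),
    newlyAvailable: live.filter((id) => !configuredSet.has(id)),
  };
}
```

Running this on a schedule against the models list turns silent 404s for retired models into an actionable report.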

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing the API, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official ones to help you integrate.

Ready to go? Sign up for CometAPI today!

Conclusion

Integrating CometAPI into LobeChat is a practical way to gain model diversity, cost flexibility, and fast experimentation capability while keeping the polished UX and RAG features that LobeChat provides. The LobeChat community has already made a substantial push to add CometAPI support (provider card, model list, runtime adapters, tests and docs), which you can leverage directly or use as inspiration to implement a custom adapter for specialized needs. For the most accurate integration steps and the latest examples, consult CometAPI’s docs and the LobeChat model-provider docs and repo.