Kimi K2 Thinking is the newest agentic reasoning variant in the Kimi K2 family: a large mixture-of-experts (MoE) model tuned for sustained, step-by-step reasoning and for calling external tools reliably across long multi-step workflows. In this guide I pull together the latest public information and explain what Kimi K2 Thinking is, how it compares with contemporary flagship models (GPT-5 and Claude Sonnet 4.5), how the API works, how to set it up step by step with a runnable sample reasoning task, what it costs, and which production best practices I recommend, with code examples so you can get started right away.

What is Kimi K2 Thinking and why is it in the headlines?

Kimi K2 Thinking is the newest “thinking agent” release from Moonshot AI — a trillion-parameter, mixture-of-experts (MoE) family member that’s been explicitly trained and packaged to perform long-horizon, multi-step reasoning while autonomously calling external tools (search, Python execution, web-scraping, etc.). The release (announced in early November 2025) has drawn attention for three reasons: (1) it is open-weight / open-licensed (a “Modified MIT” style license), (2) it supports extremely long contexts (256k token context window), and (3) it demonstrates markedly improved agentic performance on tool-enabled benchmarks versus several leading closed-source frontier models.

The Kimi K2 Thinking API and its ecosystem support OpenAI-style chat-completion semantics, structured outputs, and tool invocation. You send a chat history plus a tool schema; the model replies with its reasoning trace (if requested) and can emit structured JSON that triggers external tooling. Providers can stream tokens and return both the human-facing text and a machine-parsable tool-invocation block, which is what makes agent loops possible: model → tool → observation → model.

In plain terms: K2 Thinking is designed not just to produce a one-shot answer to a question, but to think out loud, plan, call tools when helpful, inspect results, and iterate — over hundreds of steps if needed — without degrading. That capability is what Moonshot calls “stable long-horizon agency.”

What are the core features of Kimi K2 Thinking?

Key model characteristics

- Mixture-of-Experts (MoE) architecture with ~1 trillion total parameters, of which about 32B are activated per token.

- 256k token context window for handling very long documents, multi-source research, and extended chains of reasoning.

- Native INT4 quantization through quantization-aware training (QAT), which substantially reduces inference memory and speeds up generation compared with serving the weights at higher precision.

- Built-in tool calling and an API that accepts a list of functions/tools; the model will autonomously decide when to call them and iterate on results.
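A quick back-of-envelope calculation shows why native INT4 matters at this scale. The sketch below estimates weight storage only, under stated assumptions: exactly 1T total and 32B active parameters (the published figures are approximate), decimal gigabytes, and no allowance for KV cache, activations, quantization scales, or runtime overhead.

```python
# Back-of-envelope weight-memory estimate for a ~1T-parameter MoE model.
# Assumptions (illustrative, not official figures): exactly 1e12 total
# parameters, 32e9 active per token, decimal GB (1 GB = 1e9 bytes), and
# weights only: KV cache, activations, quantization scales and runtime
# overhead are ignored.

TOTAL_PARAMS = 1_000_000_000_000   # ~1T total (assumed round number)
ACTIVE_PARAMS = 32_000_000_000     # ~32B activated per token

BITS_PER_PARAM = {"bf16": 16, "fp8": 8, "int4": 4}


def weight_gb(num_params: int, bits: int) -> float:
    """Return weight storage in decimal gigabytes for a given bit width."""
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    for fmt, bits in BITS_PER_PARAM.items():
        total = weight_gb(TOTAL_PARAMS, bits)
        active = weight_gb(ACTIVE_PARAMS, bits)
        print(f"{fmt:>5}: all experts ~{total:,.0f} GB, active slice ~{active:,.0f} GB")
```

Under these assumptions INT4 cuts the weights from roughly 2 TB (BF16) to roughly 500 GB. Because the router selects different experts for each token, all experts still have to be resident somewhere; the ~32B active parameters bound the per-token compute, not the memory footprint.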

What this enables in practice

- Deep, stepwise reasoning (chain-of-thought style outputs that can be surfaced to the caller as separate “reasoning content”).

- Stable multi-step agent workflows: The model can maintain goal coherence across 200–300 sequential tool calls, a notable jump from older models that tend to drift after a few dozen steps.

- Open weights + managed API: you can run it locally if you have the hardware, or call it through Moonshot’s platform or CometAPI via an OpenAI-compatible API.

Kimi K2 Thinking exposes agentic behavior via two core mechanisms: (1) passing a tools list so the model can call functions, and (2) the model emitting internal reasoning tokens that the platform surfaces as text (or structured chains of thought when enabled). I will explain in detail with examples next.

How do I use the Kimi K2 Thinking API?

Prerequisites

- API access / account: Create an account on Moonshot’s platform (platform.moonshot.ai) or on a supported API aggregator (CometAPI offers prices lower than the official prices). After signup you can create an API key in the dashboard.

- API key: keep it secure in environment variables or your secret store.

- Client libraries: you can use plain HTTP (curl) or any OpenAI-compatible SDK; Moonshot’s platform docs include direct examples. For Python, install the OpenAI Python SDK (`pip install openai`), which works with both Moonshot’s and CometAPI’s endpoints because both expose OpenAI-compatible APIs.
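With the key stored in the environment, client setup takes only a few lines. This is a sketch, not official SDK guidance: `cometapi_API_KEY` mirrors the variable used in this guide’s cURL example, `MOONSHOT_API_KEY` is a hypothetical name, and the Moonshot base URL `https://api.moonshot.ai/v1` is an assumption you should verify against Moonshot’s docs.

```python
# Minimal client-configuration sketch. Assumptions: the env var names
# (cometapi_API_KEY mirrors the cURL example; MOONSHOT_API_KEY is hypothetical)
# and the base URLs; verify both against your provider's documentation.
import os

PROVIDERS = {
    "cometapi": {"base_url": "https://api.cometapi.com/v1", "key_env": "cometapi_API_KEY"},
    "moonshot": {"base_url": "https://api.moonshot.ai/v1", "key_env": "MOONSHOT_API_KEY"},
}


def load_config(provider: str = "cometapi") -> dict:
    """Resolve base URL and API key from the environment, failing loudly if missing."""
    if provider not in PROVIDERS:
        raise ValueError(f"unknown provider {provider!r}; expected one of {sorted(PROVIDERS)}")
    entry = PROVIDERS[provider]
    api_key = os.environ.get(entry["key_env"])
    if not api_key:
        raise RuntimeError(f"set {entry['key_env']} in your environment or secret store")
    return {"base_url": entry["base_url"], "api_key": api_key}


def make_client(provider: str = "cometapi"):
    """Build an OpenAI SDK client pointed at an OpenAI-compatible endpoint.

    The import is lazy so load_config() works without the `openai` package.
    """
    from openai import OpenAI  # pip install openai

    cfg = load_config(provider)
    return OpenAI(api_key=cfg["api_key"], base_url=cfg["base_url"])
```

Call `make_client()` once at startup and reuse the client; `load_config()` is split out so configuration errors surface before any network call.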

If you need local/private hosting, you will need GPU hardware (or a cluster) that supports MoE and INT4 inference; Moonshot recommends inference engines such as vLLM and SGLang for production deployments. The model weights are available on Hugging Face for self-hosting, but many teams prefer the hosted API because of the model’s size.

Minimal call flow (high level)

- Build a chat request (system + user messages).

- Optionally include `tools` (a JSON array describing functions) so the model can call them autonomously.

- Send the request to the chat/completions endpoint with `model` set to the K2 Thinking variant.

- Stream and/or collect the response chunks and assemble both `reasoning_content` and the final `content`.

- When the model requests a tool call, execute the tool on your side, return the result as a follow-up message (or via the provider’s function-return protocol), and let the model continue.

Is “reasoning_content” exposed in the API?

Yes. Kimi K2 Thinking explicitly returns an auxiliary output field (commonly named `reasoning_content`) that contains the model’s intermediate reasoning trace. Providers and community docs show streaming patterns that emit `reasoning_content` deltas separately from `content` deltas, which makes it possible to present a human-readable “thinking” stream while the final answer is being composed. Note: streaming is recommended for long reasoning traces, because a non-streamed response can become very large and slow to arrive.
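One way to split a stream into a “thinking” buffer and an answer buffer is sketched below. It assumes OpenAI-style chunk dictionaries (the parsed JSON of each SSE `data:` line) whose `choices[0].delta` may carry `reasoning_content` and/or `content`; the sample chunks are made up for illustration. If you use the OpenAI Python SDK instead of raw HTTP, `reasoning_content` is a non-standard field, so you would read it with `getattr(delta, "reasoning_content", None)`.

```python
# Sketch: route streamed deltas into a "thinking" buffer and an "answer" buffer.
# Assumes OpenAI-style chunk dicts {"choices": [{"delta": {...}}]}, with the
# reasoning trace in delta["reasoning_content"] (field name as described above).
from typing import Callable, Iterable, Optional, Tuple


def assemble_stream(chunks: Iterable[dict],
                    on_reasoning: Optional[Callable[[str], None]] = None,
                    on_content: Optional[Callable[[str], None]] = None) -> Tuple[str, str]:
    """Return (reasoning, content); optional callbacks fire per delta for live UIs."""
    reasoning_parts, content_parts = [], []
    for chunk in chunks:
        choices = chunk.get("choices") or []
        if not choices:  # e.g. a final usage-only chunk
            continue
        delta = choices[0].get("delta") or {}
        if delta.get("reasoning_content"):
            reasoning_parts.append(delta["reasoning_content"])
            if on_reasoning:
                on_reasoning(delta["reasoning_content"])
        if delta.get("content"):
            content_parts.append(delta["content"])
            if on_content:
                on_content(delta["content"])
    return "".join(reasoning_parts), "".join(content_parts)


if __name__ == "__main__":
    fake_stream = [  # illustrative chunks, not real model output
        {"choices": [{"delta": {"role": "assistant", "reasoning_content": "Budget is $200, "}}]},
        {"choices": [{"delta": {"reasoning_content": "so prefer cheap tests."}}]},
        {"choices": [{"delta": {"content": "1. Landing page "}}]},
        {"choices": [{"delta": {"content": "smoke test..."}}]},
        {"choices": [], "usage": {"completion_tokens": 42}},
    ]
    thinking, answer = assemble_stream(fake_stream)
    print("THINKING:", thinking)
    print("ANSWER:", answer)
```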

cURL example: a minimal (non-streaming) chat completion:

```bash
curl -X POST "https://api.cometapi.com/v1/chat/completions" \
  -H "Authorization: Bearer $cometapi_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "kimi-k2-thinking",
    "messages": [
      {"role": "system", "content": "You are a careful reasoning assistant. Show step-by-step reasoning."},
      {"role": "user", "content": "Outline a 5-step experiment to validate a micro-SaaS idea with $200 budget."}
    ],
    "temperature": 0.2,
    "max_tokens": 2048,
    "stream": false
  }'
```

This returns `content` and (for Thinking models) a `reasoning_content` field that you can store or stream.
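For readers who prefer Python without extra dependencies, here is a standard-library sketch of the same request. It reuses the endpoint, model name, and `cometapi_API_KEY` variable from the cURL call; reading `reasoning_content` as an extra field on the response message is an assumption based on the description of the API in this guide.

```python
# Standard-library equivalent of the cURL call (no SDK needed).
# Assumptions: CometAPI's OpenAI-compatible endpoint and the cometapi_API_KEY
# env var from the cURL example; the response is parsed per the OpenAI
# chat-completions shape, with reasoning_content as an extra message field.
import json
import os
import urllib.request

API_URL = "https://api.cometapi.com/v1/chat/completions"


def build_request(api_key: str, messages: list, model: str = "kimi-k2-thinking",
                  temperature: float = 0.2, max_tokens: int = 2048) -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat-completion request."""
    body = {"model": model, "messages": messages, "temperature": temperature,
            "max_tokens": max_tokens, "stream": False}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def parse_response(payload: dict) -> tuple:
    """Extract (reasoning_content, content) from a chat-completion response."""
    message = payload["choices"][0]["message"]
    return message.get("reasoning_content") or "", message.get("content") or ""


def chat(messages: list) -> tuple:
    """Send the request and return (reasoning, answer). Needs network access and a key."""
    req = build_request(os.environ["cometapi_API_KEY"], messages)
    with urllib.request.urlopen(req, timeout=300) as resp:
        return parse_response(json.load(resp))
```

`chat(messages)` performs the real HTTP call; `build_request()` and `parse_response()` are kept separate so you can unit-test them offline.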

Recommended parameters for Thinking mode

Below are recommended starting parameters for multi-step reasoning tasks. Tweak per your task:

- Model: pick a K2 Thinking variant (`kimi-k2-thinking` or `kimi-k2-thinking-turbo` on the hosted API; `moonshotai/Kimi-K2-Thinking` is the Hugging Face name used when self-hosting). The “Thinking” family exposes `reasoning_content`.

- Temperature: the Kimi-K2-Thinking model card suggests `temperature = 1.0` as a baseline for richer exploration during thinking. Use a higher temperature for exploratory reasoning and a lower one for precise tasks.

- Max tokens / context: Thinking models can produce large internal traces, so set `max_tokens` high enough and prefer streaming.

- Streaming: enable streaming (`stream=True`) to render both reasoning and final content progressively.

- Tool schema: include a `tools` array describing the available functions; K2 decides autonomously when to call them. Give each tool a clear `description` and a strict JSON Schema for its arguments to avoid ambiguous calls.
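These recommendations can be folded into a small preset helper. A sketch, with assumptions called out: it treats the 256K window as 262,144 tokens, expects you to supply an estimate of the prompt’s token count, and uses an illustrative default `max_tokens` of 32,000 that you should tune.

```python
# Sketch: Thinking-mode starting parameters plus a context-window guard.
# Assumptions: 256K context = 262,144 tokens; prompt_tokens is your own
# estimate; the 32,000 default for max_tokens is illustrative, not official.
CONTEXT_WINDOW = 262_144

THINKING_DEFAULTS = {
    "model": "kimi-k2-thinking",
    "temperature": 1.0,  # model-card baseline for exploratory thinking
    "stream": True,      # long reasoning traces: render them progressively
}


def thinking_params(prompt_tokens: int, desired_max_tokens: int = 32_000,
                    safety_margin: int = 1_024, **overrides) -> dict:
    """Merge defaults with overrides and clamp max_tokens to the space left in context."""
    room = CONTEXT_WINDOW - prompt_tokens - safety_margin
    if room <= 0:
        raise ValueError("prompt alone fills the context window; trim or summarize it first")
    params = {**THINKING_DEFAULTS, **overrides}
    params["max_tokens"] = min(desired_max_tokens, room)
    return params


if __name__ == "__main__":
    print(thinking_params(prompt_tokens=12_000))
    print(thinking_params(prompt_tokens=250_000, temperature=0.3))  # precise task, little room left
```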

How do I enable and use tool calling with K2 Thinking?

Include a tools array in the request body. Each tool is described by:

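- `name`: string, a unique tool identifier.

- `description`: a short explanation for the model.

- `parameters`: a JSON Schema describing the expected arguments.

In OpenAI-compatible APIs, these fields sit inside a `{"type": "function", "function": {...}}` wrapper.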

When the model decides to call a tool, the assistant message in its response carries a `tool_calls` array (in OpenAI-compatible APIs, `finish_reason` is then `"tool_calls"`). Your runtime must execute each requested tool server-side, capture the output, and feed it back as a `role: "tool"` message so the model can continue reasoning.
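A small helper keeps tool definitions schema-first and consistent. This sketch assumes the OpenAI-compatible `{"type": "function", "function": {...}}` layout; `web_search` and its `q` argument are illustrative, and whether a given provider enforces `additionalProperties: false` is an assumption worth testing.

```python
# Sketch: build OpenAI-compatible tool entries from name/description/schema.
# The {"type": "function", "function": {...}} wrapper follows the OpenAI
# chat-completions convention; web_search and "q" are illustrative names.
import json


def make_tool(name: str, description: str, properties: dict, required: list) -> dict:
    """Return one entry for the request's `tools` array with a strict JSON Schema."""
    missing = [r for r in required if r not in properties]
    if missing:
        raise ValueError(f"required fields not declared in properties: {missing}")
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
                "additionalProperties": False,  # ask the model not to invent arguments
            },
        },
    }


WEB_SEARCH = make_tool(
    name="web_search",
    description="Performs a web query and returns top results as JSON",
    properties={"q": {"type": "string", "description": "Search query"}},
    required=["q"],
)

if __name__ == "__main__":
    print(json.dumps([WEB_SEARCH], indent=2))
```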

Step-by-step guide

K2 Thinking supports a function/tool schema similar to OpenAI function calling but with explicit support for looping until the model finishes (it may request multiple tool calls). The pattern is:

- Define tool schemas (name, description, JSON Schema of the parameters).

- Pass `tools` to the chat completion call.

- On each response that contains `tool_calls`, append the assistant message (with its `tool_calls`) to the history, execute the requested tool(s), and append each output as a `role: "tool"` message carrying the matching `tool_call_id`.

- Repeat until the model returns a normal completion.
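The loop above fits in a few lines of Python. In this sketch `call_model` is a hypothetical stand-in for your SDK call: it takes the message list and returns the assistant message as an OpenAI-style dict (with the OpenAI SDK, something like `response.choices[0].message.model_dump(exclude_none=True)`). The step cap is an arbitrary safety limit, and the fake model in the demo exists only to show the control flow.

```python
# Sketch of the tool-calling loop: model -> tools -> observations -> model.
# `call_model` is a hypothetical stand-in for your SDK call; it receives the
# message list and returns the assistant message as an OpenAI-style dict.
import json
from typing import Callable, Dict, List


def run_agent(call_model: Callable[[List[dict]], dict],
              tools: Dict[str, Callable[..., object]],
              messages: List[dict],
              max_steps: int = 100) -> str:
    """Loop until the model answers without tool calls; mutates `messages`."""
    for _ in range(max_steps):  # hypothetical safety cap on model calls
        assistant = call_model(messages)
        # Keep the assistant turn (tool_calls and any reasoning_content) in the
        # history: OpenAI-style APIs expect tool results to follow it.
        messages.append(assistant)
        tool_calls = assistant.get("tool_calls") or []
        if not tool_calls:
            return assistant.get("content") or ""
        for call in tool_calls:
            fn = call["function"]
            args = json.loads(fn.get("arguments") or "{}")  # arguments arrive as a JSON string
            result = tools[fn["name"]](**args)
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": str(result)})
    raise RuntimeError(f"no final answer after {max_steps} model calls")


if __name__ == "__main__":
    def fake_model(history: List[dict]) -> dict:
        # Demo only: request a search first, then answer from the tool output.
        if not any(m["role"] == "tool" for m in history):
            return {"role": "assistant", "content": "", "tool_calls": [{
                "id": "call_1", "type": "function",
                "function": {"name": "web_search",
                             "arguments": json.dumps({"q": "RISC-V benchmarks"})},
            }]}
        return {"role": "assistant", "content": "Summary based on " + history[-1]["content"]}

    history = [{"role": "user", "content": "Find RISC-V benchmarks."}]
    answer = run_agent(fake_model, {"web_search": lambda q: f"[results for {q}]"}, history)
    print(answer)  # Summary based on [results for RISC-V benchmarks]
```

Appending the assistant message unchanged keeps any `reasoning_content` in context between tool calls, which is the conservative choice for a thinking model; check your provider’s docs before stripping it.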

Enable tool invocation (example pattern)

When you want the model to call tools, include their schemas in the request (for example `web_search` or `code_executor`) and tell the model how to use them:

```json
{
  "model": "kimi-k2-thinking",
  "messages": [
    {"role": "system", "content": "You can call available tools when needed. Return a JSON tool call when you want to invoke external code."},
    {"role": "user", "content": "Find the latest CPU microarchitecture benchmarks for RISC-V and summarize differences."}
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "web_search",
        "description": "Performs a web query and returns top results as JSON",
        "parameters": {
          "type": "object",
          "properties": {"q": {"type": "string", "description": "Search query"}},
          "required": ["q"]
        }
      }
    }
  ],
  "temperature": 0.1
}
```

The model may reply with a `tool_calls` entry that your agent runtime must detect and route to the registered tool.

This pattern supports arbitrarily deep sequences of tool-invoke → tool-run → model-continue, which is why Kimi K2 Thinking emphasizes stability over many sequential calls in its design.
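Over hundreds of steps some tool calls may be malformed, so routing benefits from being defensive. This sketch (the registry dict and the JSON error format are hypothetical choices, not an official protocol) executes one OpenAI-style tool call and turns unknown tools, bad JSON, or wrong arguments into an error observation the model can read and recover from, instead of crashing the loop.

```python
# Sketch: route one tool call to a registered function, turning failures into
# observations the model can read instead of crashing the loop. The registry
# and error format are hypothetical choices, not part of any official API.
import json
from typing import Callable, Dict


def dispatch(tool_call: dict, registry: Dict[str, Callable[..., object]]) -> dict:
    """Execute one OpenAI-style tool call and return the `role: "tool"` message."""
    fn = tool_call.get("function", {})
    name = fn.get("name", "")
    try:
        args = json.loads(fn.get("arguments") or "{}")
        if not isinstance(args, dict):
            raise ValueError("arguments must be a JSON object")
        if name not in registry:
            raise KeyError(f"unknown tool {name!r}")
        output = registry[name](**args)
        content = output if isinstance(output, str) else json.dumps(output)
    except Exception as exc:  # broad on purpose: any failure becomes data for the model
        content = json.dumps({"error": type(exc).__name__, "detail": str(exc)})
    return {"role": "tool", "tool_call_id": tool_call.get("id", ""), "content": content}
```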

What does Kimi K2 Thinking API cost?

The official Moonshot (Kimi) platform lists two main priced endpoints for Kimi K2 Thinking:

- kimi-k2-thinking (standard) — input tokens: $0.60 / 1M (cache-miss tier) and $0.15 / 1M (cache-hit tier); output tokens: $2.50 / 1M.

- kimi-k2-thinking-turbo (high-speed): a lower-latency, higher-throughput tier; input: $1.15 / 1M; output: $8.00 / 1M (platform and partner pages repeat these figures).

CometAPI’s pitch is price: a low input rate and a lower per-output-token rate than comparable high-end models, plus free trial tokens for onboarding. Its listed prices are:

| Model | Input price (per 1M tokens) | Output price (per 1M tokens) |
|---|---|---|
| kimi-k2-thinking-turbo | $2.20 | $15.95 |
| kimi-k2-thinking | $1.10 | $4.40 |

Cost considerations

- Long contexts (128K–256K tokens) and extensive tool-call chains multiply token consumption, so design prompts and tool interactions to minimize verbose intermediates when cost matters.

- Agentic flows that produce many tool results can raise both input costs (tool outputs and earlier turns are re-sent as input on every step) and output costs (reasoning tokens), well beyond a typical single-turn chat. Monitor and budget accordingly.
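To see how tool-call chains multiply spend, here is a rough estimator using the official prices listed above. Assumptions: prices are per 1M tokens and may change; no cache-hit rate is listed for the turbo tier, so this sketch bills all turbo input at $1.15; and the agent estimate pessimistically assumes every prior output and tool result is re-sent in full on each step with no cache hits.

```python
# Sketch: rough USD cost estimates from token counts, using the official
# prices listed above (USD per 1M tokens; a snapshot that may change).
PRICES = {
    "kimi-k2-thinking": {"input_miss": 0.60, "input_hit": 0.15, "output": 2.50},
    # No cache-hit price is listed above for turbo; assume the full input rate.
    "kimi-k2-thinking-turbo": {"input_miss": 1.15, "input_hit": 1.15, "output": 8.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int,
                 cached_input_tokens: int = 0) -> float:
    """Cost of one request; cached_input_tokens is the cache-hit part of input_tokens."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    p = PRICES[model]
    uncached = input_tokens - cached_input_tokens
    return (uncached * p["input_miss"]
            + cached_input_tokens * p["input_hit"]
            + output_tokens * p["output"]) / 1_000_000


def agent_run_cost(model: str, base_prompt_tokens: int, steps: int,
                   output_tokens_per_step: int, tool_result_tokens_per_step: int) -> float:
    """Pessimistic estimate: every step re-sends the whole, growing history uncached."""
    total, history = 0.0, base_prompt_tokens
    for _ in range(steps):
        total += request_cost(model, history, output_tokens_per_step)
        history += output_tokens_per_step + tool_result_tokens_per_step
    return total


if __name__ == "__main__":
    one_shot = request_cost("kimi-k2-thinking", 10_000, 2_000)
    agentic = agent_run_cost("kimi-k2-thinking", 10_000, steps=100,
                             output_tokens_per_step=2_000, tool_result_tokens_per_step=3_000)
    print(f"single call: ${one_shot:.4f}   100-step agent run: ${agentic:.2f}")
```

Because the input grows with every step, cost rises roughly quadratically with the number of steps: in the demo, a single call costs about a cent while the 100-step run costs about $16. Cache-hit pricing and trimming old tool outputs are the main levers.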

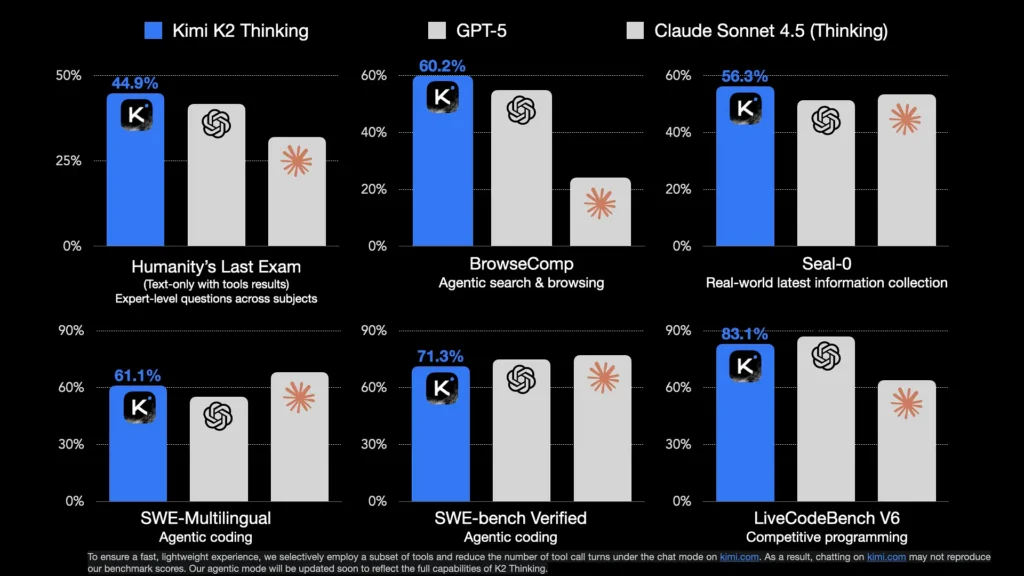

Benchmarks comparison: Kimi K2 Thinking vs GPT-5 vs Claude Sonnet 4.5

Accompanying benchmarks show a nuanced picture: K2 Thinking outperforms GPT-5 and Anthropic’s Claude Sonnet 4.5 on many tool-enabled and agentic benchmarks (for example, BrowseComp and tool-enabled HLE variants), while GPT-5 remains stronger on some text-only or medical benchmarks (e.g., HealthBench in Moonshot’s reported runs).

Takeaway: Kimi K2 Thinking is a competitive agentic model. It excels on reasoning tasks that benefit from tool interleaving and long contexts. It does not uniformly beat GPT-5 and Claude Sonnet 4.5 on every benchmark (especially some specialized or knowledge-heavy tasks), but it reports leading results on many of the agentic, browsing, and long-horizon tests. On top of that, its low per-token price and open weights make it exceptionally cost-effective.

When to choose Kimi K2 Thinking vs other models

- Choose Kimi K2 Thinking when your task requires long chains of reasoning, many tool calls, or deep analysis of very large contexts (codebases, long docs).

- Choose GPT-5 when you need the tightest multimodal integration, broad third-party ecosystem support, or specific OpenAI tools and agent frameworks.

- Choose Claude Sonnet 4.5 for workloads that emphasize code edit precision, deterministic editing workflows and Anthropic’s safety toolchain.

| Metric | Kimi K2 Thinking | GPT-5 (High) | Claude Sonnet 4.5 | DeepSeek-V3.2 |
|---|---|---|---|---|
| HLE (w/ tools) | 44.9 | 41.7 | 32 | 20.3 |
| HLE Heavy Mode | 51 | 42 | — | — |
| AIME25 (w/ python) | 99.1 % | 99.6 % | 100 % | 58.1 % |
| GPQA | 84.5 | 85.7 | 83.4 | 79.9 |
| BrowseComp | 60.2 | 54.9 | 24.1 | 40.1 |
| Frames | 87 | 86 | 85 | 80.2 |
| SWE-bench Verified | 71.3 % | 74.9 % | 77.2 % | 67.8 % |
| LiveCodeBench | 83.1 % | 87.0 % | 64.0 % | 74.1 % |
| Context window | 256 k tokens | 400 k tokens | 200 k tokens | 128 k tokens |
| Input pricing | $0.60 / 1 M | $1.25 / 1 M | $3.00 / 1 M | $0.55 / 1 M |
| Output pricing | $2.50 / 1 M | $10.00 / 1 M | $15.00 / 1 M | $2.19 / 1 M |

Best practices

- Stream reasoning: for user-facing apps, show a “thinking” UI using streamed `reasoning_content`. Streaming reduces perceived latency and avoids huge payloads.

- Schema-first tools: define tight JSON Schemas for tools to reduce ambiguous calls and parsing errors.

- Checkpoint context usage: keep past reasoning traces in a separate long-term memory store rather than embedding enormous trace history into the active prompt; use retrieval to reintroduce only relevant segments.

- Monitoring & guardrails: log both `reasoning_content` and the final `content` to diagnose drift, hallucination, and misuse. Consider redaction or user consent depending on sensitivity.
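The “checkpoint context usage” practice can be sketched as a pure function that moves reasoning traces out of older assistant turns into a separate store (a plain list here, standing in for a database or retrieval index). `keep_recent` is a hypothetical knob; within an active tool-calling turn, leave traces in place unless your provider’s docs say dropping them is safe. The archived traces can double as the log for the monitoring practice.

```python
# Sketch of "checkpoint context usage": move reasoning_content out of older
# assistant turns into a separate store, keeping only the most recent turns'
# traces in the prompt. The archive is a plain list standing in for a real
# store; keep_recent is a hypothetical tuning knob.
import copy
from typing import List, Tuple


def compact_history(messages: List[dict], keep_recent: int = 1) -> Tuple[List[dict], List[dict]]:
    """Return (trimmed_messages, archived_traces) without mutating the input."""
    trimmed = copy.deepcopy(messages)
    assistant_idx = [i for i, m in enumerate(trimmed) if m.get("role") == "assistant"]
    # Strip traces from all but the last `keep_recent` assistant turns.
    to_strip = assistant_idx[:-keep_recent] if keep_recent > 0 else assistant_idx
    archive = []
    for i in to_strip:
        trace = trimmed[i].pop("reasoning_content", None)
        if trace:
            archive.append({"message_index": i, "reasoning_content": trace})
    return trimmed, archive
```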

Conclusion

Kimi K2 Thinking is a major evolution of the K2 line toward robust, long-horizon agency. The API is intentionally compatible with OpenAI/Anthropic client patterns and provides a practical path for integrating agentic reasoning into apps while giving developers control over the tool-call surface.

If you want to experiment quickly, start with the Kimi K2 Thinking API: explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing it, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a price far lower than the official price to help you integrate.

Ready to go? Sign up for CometAPI today!

For more AI tips, guides, and news, follow us on VK, X, and Discord!