The recent surge in AI-driven automation has created a need for more dynamic, context-aware workflows. n8n, a source-available workflow automation tool, has emerged as a powerful platform for orchestrating complex processes without extensive coding expertise. Meanwhile, the Model Context Protocol (MCP) standardizes how AI agents interact with external services, letting them discover tools and execute operations in a consistent manner. By integrating n8n with MCP servers, organizations gain a new level of flexibility: AI agents can invoke n8n workflows as tools and, conversely, n8n can consume MCP-compatible services directly within its workflows. This bidirectional capability makes n8n both a producer and a consumer of AI-driven tasks, streamlining end-to-end automation and reducing the need for custom integration code.

What Is n8n?

n8n is a node-based workflow automation platform distributed under a fair-code license. Users build sequences of actions (nodes) that run in response to events or on a schedule. It supports a wide array of integrations out of the box, from HTTP requests and databases to messaging platforms and cloud services. Unlike closed “black-box” automation tools, n8n gives developers full control over their workflows, including the ability to self-host and to extend functionality with community nodes or custom code.

What Is MCP?

The Model Context Protocol (MCP) is an emerging open standard that defines how AI models and agents discover, access, and orchestrate external tools and data sources. It provides a uniform message format and metadata schema, enabling interoperability between AI agents, such as those built on large language models (LLMs), and services that expose actionable capabilities. MCP servers publish tool definitions, resources, and prompt templates, while MCP clients, often embedded in AI agents, discover those tools and call them as a task requires.
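To make the discover-then-execute flow concrete, here is a minimal sketch of the JSON-RPC 2.0 request objects an MCP client sends: `tools/list` to discover tools and `tools/call` to run one. The method names and the `name`/`arguments` fields follow the MCP specification; the helper functions and the `sendEmail` tool are illustrative assumptions, and a real client also performs an `initialize` handshake first.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request ids; JSON-RPC matches each response to its request by id.
_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object of the kind MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools() -> dict:
    """Ask an MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool(name: str, arguments: dict) -> dict:
    """Ask an MCP server to run one tool with JSON arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(json.dumps(list_tools()))
    # "sendEmail" is a hypothetical tool name used only for illustration.
    print(json.dumps(call_tool("sendEmail", {"to": "ops@example.com"})))
```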

What Recent Updates Make This Integration Essential?

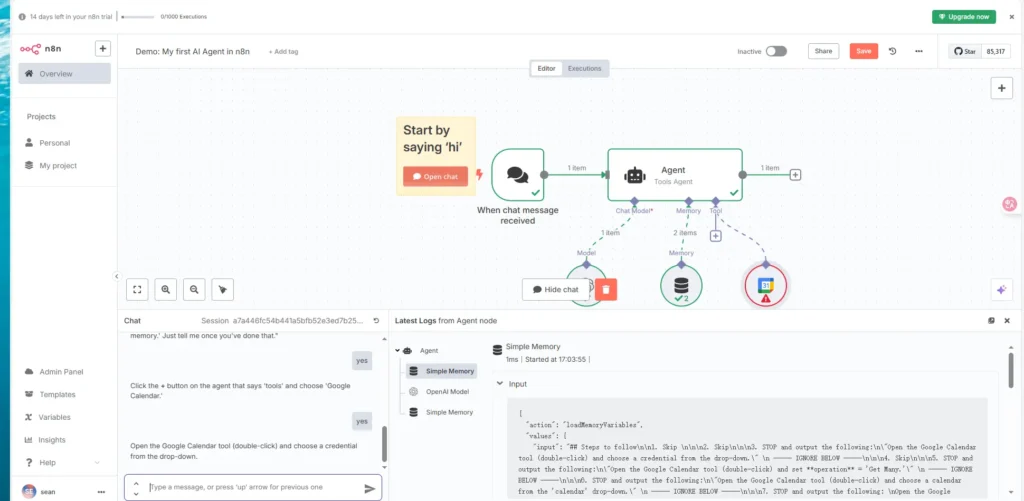

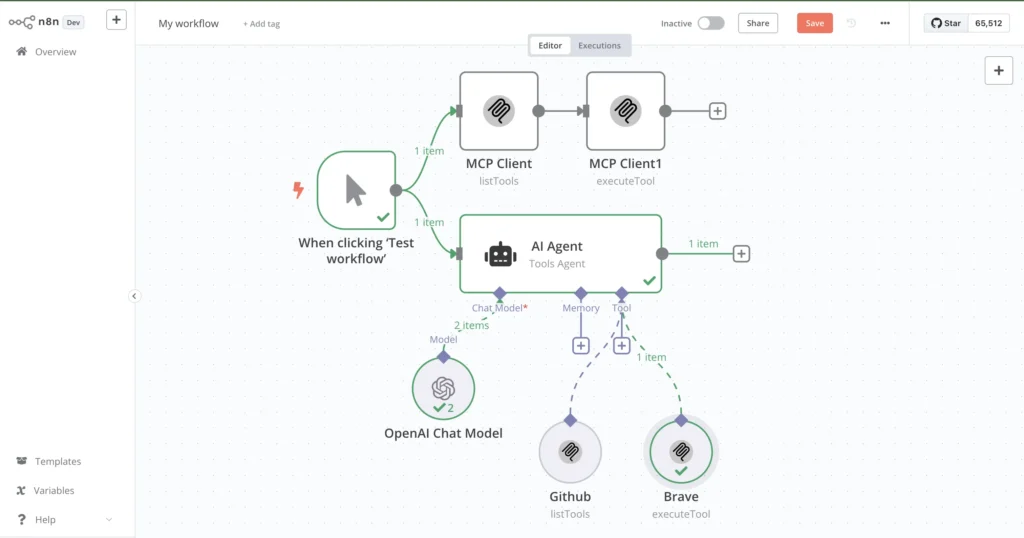

Between February and April 2025, the n8n team officially introduced two key nodes: the MCP Client Tool and the MCP Server Trigger. The MCP Client Tool node lets n8n workflows call tools on external MCP servers as if they were native nodes, giving workflows access to MCP-compatible services through a single interface. Conversely, the MCP Server Trigger node turns an n8n workflow into an MCP server, so external AI agents can invoke workflow actions directly. These advancements position n8n at the forefront of AI workflow automation, reducing integration complexity and enabling rapid development of intelligent automation pipelines.

How Can You Install and Configure n8n for MCP Servers?

Before diving into MCP integrations, you need a functional n8n instance. You can self-host n8n on a local machine or deploy to cloud platforms with one-click installers, Docker containers, or managed services.

Prerequisites

- Node.js: Version 18.17.0, 20.x, or 22.x is recommended; n8n currently does not support Node.js 23.x.

- Git and npm/yarn: For installing n8n and community nodes.

- Docker (optional): Allows for easy containerized deployments.

- A hosting environment: A local machine, a VPS, or a cloud service such as Zeabur, where you can expose an HTTP endpoint for MCP traffic.
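If you are unsure whether an existing Node.js installation qualifies, a small script can encode the rule listed above. This is a sketch that hard-codes 18.17.0 or later on the 18.x line, plus 20.x and 22.x; n8n's supported range changes between releases, so treat the `SUPPORTED` table as an assumption to check against the current n8n documentation.

```python
import re
import shutil
import subprocess
from typing import Optional

# Supported release lines per the prerequisites: major -> minimum (minor, patch).
SUPPORTED = {18: (17, 0), 20: (0, 0), 22: (0, 0)}


def is_supported_node_version(version: str) -> bool:
    """Return True for a `node --version` string such as 'v20.11.1' on a supported line."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", version.strip())
    if not match:
        return False
    major, minor, patch = (int(part) for part in match.groups())
    minimum = SUPPORTED.get(major)
    return minimum is not None and (minor, patch) >= minimum


def installed_node_version() -> Optional[str]:
    """Return the local `node --version` output, or None if Node.js is not on PATH."""
    if shutil.which("node") is None:
        return None
    result = subprocess.run(["node", "--version"], capture_output=True, text=True)
    return result.stdout.strip() or None


if __name__ == "__main__":
    found = installed_node_version()
    if found is None:
        print("Node.js not found")
    else:
        print(found, "supported" if is_supported_node_version(found) else "unsupported")
```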

Installation Steps

Install Node Version Manager (NVM)

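```bash
# Install nvm, then load it into the current shell
curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.7/install.sh | bash
source ~/.nvm/nvm.sh
# Install and switch to a Node.js version n8n supports
nvm install 18.17.0
nvm use 18.17.0
```

This ensures compatibility with n8n’s supported Node.js versions.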

Install n8n Globally

```bash
npm install -g n8n
n8n
```

After installation, run `n8n` to launch the web interface at http://localhost:5678.
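Because n8n takes a few seconds to start, setup scripts often wait for the editor to answer before continuing. A minimal sketch using only the standard library; it polls the root URL (an assumption; any page served by n8n would do) and treats any HTTP reply, even an error status, as proof that the server is listening.

```python
import time
import urllib.error
import urllib.request


def wait_for_n8n(url: str = "http://localhost:5678", timeout: float = 60.0,
                 interval: float = 1.0) -> bool:
    """Poll `url` until the n8n web server answers or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # Any HTTP status (even 401 or 404) means the server is listening.
            return True
        except (urllib.error.URLError, OSError):
            # Connection refused or timed out: not up yet, try again shortly.
            time.sleep(interval)
    return False


if __name__ == "__main__":
    print("n8n is up" if wait_for_n8n() else "n8n did not start in time")
```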

Create Your Account

On first launch, n8n prompts you to create an owner account. Enter an email and password; no credit card is required for the free community edition.

Install the MCP Community Node (Optional for Client)

In n8n’s settings under “Community Nodes,” search for and install n8n-nodes-mcp. This plugin provides enhanced MCP client capabilities if your version of n8n lacks the built-in node.

How Do You Set Up the MCP Server Trigger Node in n8n?

Turning n8n into an MCP server enables external AI agents to treat workflows as callable tools. Follow these steps:

Adding the MCP Server Trigger Node

- Create a New Workflow

  In the n8n editor, click “New Workflow.”

- Add the Node

  Search for MCP Server Trigger in the node panel and drag it into the workspace.

- Set Activation

  Toggle the workflow to “Active” after configuration. The MCP Server Trigger node generates a unique endpoint, typically at `/mcp/<randomId>`. n8n displays both a Test URL and a Production URL; the production URL only responds while the workflow is active.

Configuring the Trigger

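- MCP URL Path: Accept the default or specify a custom path (e.g., `/mcp/ai-tools`).

- Authentication: For initial testing, select “None.” For production, secure the endpoint with the node’s bearer or header authentication, or place it behind a gateway that verifies API keys, OAuth, or JWT tokens.

- Tools: Rather than passing its output to ordinary downstream nodes, the trigger exposes the tool nodes connected to it (for example, a Call n8n Workflow Tool node). Each tool’s name, description, and parameters form the schema that MCP clients discover, and n8n maps the arguments a client sends onto that tool’s fields.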

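Once configured, MCP clients send JSON-RPC requests to the endpoint (e.g., http://your-domain.com/mcp/abc123): `tools/list` to discover the exposed tools and `tools/call` to run one, which executes the corresponding tool node and returns its output. This is how AI agents invoke the tools you expose.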
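A minimal client-side sketch of talking to such an endpoint, assuming it speaks MCP's Streamable HTTP transport (some n8n releases expose an SSE endpoint instead, which this sketch does not cover). Under Streamable HTTP a server may answer a POST with a plain JSON body or with a short `text/event-stream`, so the parser handles both. The URL and bearer token you pass in are placeholders.

```python
import json
import urllib.request
from typing import Optional


def parse_mcp_response(content_type: str, body: str) -> list:
    """Return the JSON-RPC messages contained in an MCP HTTP response body."""
    if content_type.split(";")[0].strip() == "text/event-stream":
        messages, data_lines = [], []
        # SSE events end with a blank line; their payload sits in "data:" lines.
        for line in body.splitlines() + [""]:
            if line.startswith("data:"):
                value = line[len("data:"):]
                data_lines.append(value[1:] if value.startswith(" ") else value)
            elif line == "" and data_lines:
                messages.append(json.loads("\n".join(data_lines)))
                data_lines = []
        return messages
    parsed = json.loads(body)
    # A plain JSON body holds one message or a batch (list) of messages.
    return parsed if isinstance(parsed, list) else [parsed]


def post_mcp(url: str, message: dict, token: Optional[str] = None) -> list:
    """POST one JSON-RPC message to an MCP endpoint and return the replies."""
    headers = {
        "Content-Type": "application/json",
        # Streamable HTTP clients must accept both reply styles.
        "Accept": "application/json, text/event-stream",
    }
    if token:
        # Matches a bearer-token setup on the trigger (placeholder value).
        headers["Authorization"] = f"Bearer {token}"
    request = urllib.request.Request(
        url, data=json.dumps(message).encode(), headers=headers, method="POST"
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        content_type = response.headers.get("Content-Type", "application/json")
        return parse_mcp_response(content_type, response.read().decode())
```

For brevity the sketch leaves out the `initialize` handshake and session handling (the `Mcp-Session-Id` header), which a complete client would add.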

How Can You Configure the MCP Client Tool in n8n?

While the Server Trigger exposes n8n as a tool provider, the MCP Client Tool node lets n8n consume external MCP services within workflows.

Installing the Community Node

If you did not install n8n-nodes-mcp earlier, follow these steps now:

- Open Settings → Community Nodes

- Install New Node: Search for `n8n-nodes-mcp` and click “Install.”

- Restart n8n to load the new node.

Setting Up the MCP Client Tool

- Add the MCP Client Tool Node

  In your workflow, search for MCP Client Tool and add it.

- Configure Connection

  - Server URL: Enter the MCP server’s endpoint (e.g., your own n8n MCP Server Trigger URL or a third-party service).

  - Tool Name: Specify the tool identifier as published by the server (e.g., `sendEmail`, `fetchData`). With the built-in node attached to an AI Agent, you instead choose which of the server’s tools to include, and the agent decides which one to call.

  - Parameters: Map input fields from previous nodes or workflow variables.

- Handle the Response

  The MCP Client Tool node returns structured JSON output, which you can pass to subsequent nodes such as “Edit Fields (Set)” or “Respond to Webhook” for formatting or further processing.
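At the protocol level, an MCP `tools/call` result carries a `content` array of typed items (text, images, and so on) plus an optional `isError` flag for tool-level failures. How n8n surfaces that result varies by node and version, so the helper below is a sketch against the protocol shape rather than n8n's own output format; it flattens the text items and raises when the tool reports an error.

```python
def tool_result_text(result: dict) -> str:
    """Join the text items of an MCP tools/call result; raise if the tool failed."""
    texts = [
        item.get("text", "")
        for item in result.get("content", [])
        if item.get("type") == "text"
    ]
    joined = "\n".join(texts)
    if result.get("isError"):
        # Tool-level failures arrive as a normal result with isError set.
        raise RuntimeError(f"tool reported an error: {joined}")
    return joined
```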

How Do You Test and Validate Your MCP Server in n8n?

Validation is critical to ensure that your MCP endpoint and client integrations work reliably under various scenarios.

Sending Test Requests

Use tools like curl or Postman to send sample payloads:

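```bash
# MCP tool calls are JSON-RPC 2.0 messages (assumes Streamable HTTP transport)
curl -X POST http://localhost:5678/mcp/abc123 \
  -H 'Content-Type: application/json' \
  -H 'Accept: application/json, text/event-stream' \
  -d '{"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "exampleTool", "arguments": {"message": "Hello, world!"}}}'
```

A successful response indicates that n8n parsed the request, executed the tool, and returned the expected result. Because many MCP servers expect an `initialize` handshake before other requests, a dedicated client such as the MCP Inspector is often easier for interactive testing.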
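Beyond eyeballing the output, a script can confirm that a reply actually answers the request it sent. A sketch assuming the JSON-RPC 2.0 response shape MCP uses (`result` on success, `error` with `code` and `message` on failure); pair it with whichever transport you used to send the request.

```python
def check_response(request: dict, response: dict) -> dict:
    """Validate a JSON-RPC response to `request` and return its result."""
    if response.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if response.get("id") != request.get("id"):
        raise ValueError(f"id mismatch: sent {request.get('id')}, got {response.get('id')}")
    if "error" in response:
        # Protocol-level failure: surface the server's code and message.
        error = response["error"]
        raise RuntimeError(f"JSON-RPC error {error.get('code')}: {error.get('message')}")
    if "result" not in response:
        raise ValueError("response has neither result nor error")
    return response["result"]
```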

Debugging Common Issues

- Invalid JSON: Ensure payloads are well-formed JSON-RPC messages; n8n rejects malformed input with an error (for example, an HTTP 400 or a JSON-RPC parse error) instead of running the workflow.

- Authentication Failures: If using API keys or OAuth, verify that headers and tokens are correctly configured.

- Workflow Errors: Use the n8n execution log to inspect node execution data and error messages.

- Network Connectivity: Confirm that your n8n instance is reachable from the client’s environment, paying attention to firewall rules and DNS settings.
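When a call fails, the HTTP status and the JSON-RPC error code usually narrow down which of the causes above applies. The JSON-RPC codes below are the standard ones from the JSON-RPC 2.0 specification; the HTTP hints are assumptions about typical n8n behavior and may differ between versions. A sketch:

```python
from typing import Optional

# Standard JSON-RPC 2.0 error codes, which MCP reuses.
JSONRPC_HINTS = {
    -32700: "Parse error: the body is not valid JSON.",
    -32600: "Invalid request: check the jsonrpc, id and method fields.",
    -32601: "Method not found: check the method name (e.g. tools/call).",
    -32602: "Invalid params: check the tool name and its arguments.",
    -32603: "Internal error: inspect the n8n execution log.",
}

# Rough HTTP-level hints; exact status codes may vary by n8n version.
HTTP_HINTS = {
    401: "Authentication failed: check the bearer token or auth header.",
    403: "Authenticated but not allowed: check the credential's permissions.",
    404: "Unknown path: check the MCP URL and that the workflow is active.",
}


def diagnose(http_status: int, body: Optional[dict] = None) -> str:
    """Suggest a likely cause for a failed MCP call."""
    if body and isinstance(body.get("error"), dict):
        code = body["error"].get("code")
        if code in JSONRPC_HINTS:
            return JSONRPC_HINTS[code]
    if http_status in HTTP_HINTS:
        return HTTP_HINTS[http_status]
    if http_status >= 500:
        return "Server error: inspect the n8n execution log."
    return "No known cause: check network reachability, firewall rules and DNS."
```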

What Are Example Use Cases of n8n with MCP Servers?

Integrating n8n with MCP unlocks diverse automation scenarios across domains. Here are a few illustrative examples.

Automating Email Workflows

Suppose you want AI agents to send customizable emails on demand:

- MCP Server Trigger: Exposes a tool named `sendEmail`.

- Email Node: Connect the trigger to n8n’s “Send Email” node (SMTP, Gmail, etc.).

- Parameter Mapping: Map `to`, `subject`, and `body` from the MCP payload to the email node’s fields.

The AI agent simply calls the `sendEmail` tool via MCP and never has to manage SMTP details itself.
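The exact tool definition n8n publishes depends on how the connected node is configured. The dictionary below is a hypothetical example of what an MCP client might see for `sendEmail` in a `tools/list` result (MCP tools carry a `name`, a `description`, and a JSON Schema `inputSchema`), together with a deliberately minimal argument check that covers only required keys and string types.

```python
# Hypothetical definition of the sendEmail tool as an MCP client might see it;
# n8n derives the real one from the tool node connected to the trigger.
SEND_EMAIL_TOOL = {
    "name": "sendEmail",
    "description": "Send an email through the workflow's configured mail node.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "to": {"type": "string", "description": "Recipient address"},
            "subject": {"type": "string"},
            "body": {"type": "string"},
        },
        "required": ["to", "subject", "body"],
    },
}


def validate_arguments(tool: dict, arguments: dict) -> list:
    """Return problems with `arguments`; checks required keys and string types only."""
    schema = tool["inputSchema"]
    problems = [f"missing {key}" for key in schema.get("required", []) if key not in arguments]
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected {key}")
        elif spec.get("type") == "string" and not isinstance(value, str):
            problems.append(f"{key} must be a string")
    return problems
```

For full JSON Schema validation, a library such as the `jsonschema` package is a better fit than this hand-rolled check.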

Fetching and Transforming API Data

To allow AI agents to query and process third-party APIs:

- MCP Server Trigger: Exposes a tool named `fetchData`.

- HTTP Request Node: Configured to call an external API (e.g., https://api.cometapi.com/v1/chat/completions).

- Set Node: Formats and filters the API response.

- Return: Sends structured JSON to the client.

AI agents can request specific datasets while the workflow takes care of pagination, authentication, and rate limiting.
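The Set node step above amounts to a small transformation: keep only the fields the agent needs and drop the rest. A sketch assuming the external API returns an OpenAI-style chat completion, which the `/v1/chat/completions` path suggests; the output field names (`answer`, `total_tokens`, and so on) are hypothetical choices.

```python
def slim_chat_completion(raw: dict) -> dict:
    """Keep only the fields an agent needs from an OpenAI-style chat completion."""
    choices = raw.get("choices") or []
    first = choices[0] if choices else {}
    usage = raw.get("usage") or {}
    return {
        "model": raw.get("model"),
        # The assistant's reply lives in choices[0].message.content.
        "answer": (first.get("message") or {}).get("content", ""),
        "finish_reason": first.get("finish_reason"),
        "total_tokens": usage.get("total_tokens"),
    }
```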

Building Voice AI Agents

Voice-enabled assistants can leverage n8n as a backend:

- MCP Server Trigger exposes tools like `createTask` or `checkCalendar`.

- Voice engine translates spoken commands into MCP requests (e.g., “Create a meeting tomorrow at 3 PM”).

- n8n workflows interact with Google Calendar, databases, or custom functions, then return confirmation to the agent.

This approach decouples voice interface logic from backend integrations, simplifying maintenance and evolution.
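The voice engine's final step can be sketched as turning a parsed intent into an MCP `tools/call` request. The intent format (`tool`, `title`, `day_offset`, `hour`) and the `title`/`start` argument names are hypothetical; speech recognition and intent parsing happen upstream and are not shown.

```python
from datetime import datetime, timedelta


def intent_to_tool_call(intent: dict, now: datetime, request_id: int = 1) -> dict:
    """Turn a parsed voice intent into an MCP tools/call request."""
    # Resolve relative phrases like "tomorrow at 3 PM" against the current time.
    day = (now + timedelta(days=intent.get("day_offset", 0))).date()
    start = datetime(day.year, day.month, day.day, intent["hour"],
                     intent.get("minute", 0), tzinfo=now.tzinfo)
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": intent["tool"],
            "arguments": {"title": intent["title"], "start": start.isoformat()},
        },
    }
```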

What Are Best Practices and Security Considerations?

Production-ready MCP integrations require robust security, monitoring, and scalability measures.

Authentication and Access Control

- API Keys: Issue per-client keys with granular scopes (e.g., only allow `read` or `write` operations).

- OAuth 2.0 / JWT: For enterprise environments, integrate with identity providers (Okta, Auth0).

- Rate Limiting: Use reverse proxies (NGINX, Traefik) or cloud API gateways to throttle requests and prevent abuse.
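In production these rules usually live in NGINX, Traefik, or an API gateway; the sketch below only shows the logic such a layer applies: a scope check for per-client keys and a token-bucket limiter (a common throttling algorithm, chosen here for illustration). The key names and scopes are hypothetical.

```python
import time
from typing import Callable, Dict, Set

# Hypothetical per-client API keys and the operations each may perform.
KEY_SCOPES: Dict[str, Set[str]] = {
    "key-reader": {"read"},
    "key-writer": {"read", "write"},
}


def is_allowed(api_key: str, operation: str) -> bool:
    """Return True if the key exists and its scopes include `operation`."""
    return operation in KEY_SCOPES.get(api_key, set())


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```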

Scalability and Performance

- Horizontal Scaling: Deploy multiple n8n instances behind a load balancer to distribute MCP traffic.

- Redis / Database Queues: Offload heavy or long-running tasks to background queues (n8n’s queue mode uses Redis to hand executions to separate worker processes), keeping MCP responses fast.

- Monitoring: Implement logging (e.g., Elastic Stack) and metrics (Prometheus, Grafana) to track workflow execution times and error rates.
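n8n's own queue mode implements this with Redis and worker processes; the sketch below is only an in-process illustration of the pattern (accept the task, return a job id at once, process it in the background) using Python's standard `queue` module, not how n8n implements it. A client would then poll a second, hypothetical status tool for the result.

```python
import queue
import threading
import uuid
from typing import Callable, Dict

jobs: "queue.Queue[tuple]" = queue.Queue()
results: Dict[str, object] = {}


def enqueue(task: Callable[[], object]) -> str:
    """Accept a slow task and return a job id straight away."""
    job_id = str(uuid.uuid4())
    results[job_id] = "pending"
    jobs.put((job_id, task))
    return job_id


def worker() -> None:
    """Run queued tasks one at a time and record their results."""
    while True:
        job_id, task = jobs.get()
        try:
            results[job_id] = task()
        except Exception as exc:  # keep the worker alive when a task fails
            results[job_id] = f"failed: {exc}"
        finally:
            jobs.task_done()


threading.Thread(target=worker, daemon=True).start()
```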

What Does the Future Hold for n8n and MCP Integration?

The ecosystem around MCP and n8n is rapidly evolving, with several promising developments on the horizon.

Upcoming Features

- Dynamic Tool Discovery: Building on MCP’s existing tool listing, agents may query n8n for available tools and metadata in real time, enabling even more flexible workflows.

- Enhanced Security Nodes: Introduction of built-in encryption, token rotation, and audit logging nodes within n8n.

- Low-Code MCP Client: Further abstractions to simplify MCP consumption without requiring custom node installations.

Community and Ecosystem Growth

- Marketplace of MCP Workflows: Platforms like n8n.io/workflows are already listing sample MCP server templates, fostering sharing and reuse.

- Third-Party Services: Cloud providers and SaaS platforms are beginning to publish MCP endpoints, expanding the reach of AI-driven automation.

- Open Standards Collaboration: The MCP specification is under active development, with contributions from major AI and automation vendors aimed at enhancing interoperability.


Conclusion

Integrating n8n with MCP servers transforms static workflows into dynamic, AI-driven services and equips n8n with the ability to consume external AI-oriented tools. The recent introduction of the MCP Client Tool and MCP Server Trigger nodes represents a significant leap forward, streamlining both development and maintenance of intelligent automation pipelines. By following best practices in installation, configuration, security, and testing, organizations can harness the full potential of this integration to innovate faster, reduce engineering overhead, and deliver context-aware automation across diverse use cases.