In the rapidly evolving landscape of artificial intelligence, the synergy between platforms and models is paramount for developing robust AI applications. Dify, an open-source LLM (Large Language Model) application development platform, offers seamless integration capabilities with CometAPI’s powerful models. This article delves into the features of Dify, elucidates the integration process with CometAPI, and provides insights on accessing and optimizing this collaboration.

Why Integrate Dify with CometAPI?

Integrating Dify with CometAPI combines the strengths of both platforms, enabling developers to:

- Leverage Advanced Language Models: Use CometAPI’s LLMs directly within Dify’s intuitive interface.

- Streamline AI Application Development: Accelerate the transition from prototype to production by harnessing Dify’s comprehensive tools alongside CometAPI’s capabilities.

- Customize and Control AI Solutions: Tailor AI applications to specific needs while maintaining control over data and workflows.

What is CometAPI?

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.
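To make the "single interface" idea concrete, here is a minimal sketch, assuming CometAPI accepts OpenAI-style chat payloads (as the troubleshooting section of this article states). The `/v1/chat/completions` path and the helper names are assumptions for illustration; confirm the exact endpoint and model identifiers in CometAPI's documentation.

```python
# Assumed OpenAI-compatible path on the host this article mentions; verify before use.
COMETAPI_CHAT_URL = "https://api.cometapi.com/v1/chat/completions"


def build_chat_payload(model: str, user_text: str) -> dict:
    """Return an OpenAI-style chat payload; only `model` changes between providers."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }


def build_headers(api_key: str) -> dict:
    """The same bearer token is reused for every model behind the aggregator."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Under these assumptions, switching from one model family to another means changing only the `model` string; the headers and message structure stay the same.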

What is Dify?

Dify is an open-source platform designed to simplify the development of AI applications powered by large language models. It integrates various functionalities, including AI workflows, Retrieval-Augmented Generation (RAG) pipelines, agent capabilities, model management, and observability features, facilitating a seamless journey from concept to deployment.

Key Features of Dify

- Intuitive Interface: Dify offers a user-friendly interface that simplifies the creation and management of AI applications.

- Comprehensive Model Support: It supports integration with numerous proprietary and open-source LLMs.

- Prompt IDE: Dify provides tools for crafting and testing prompts, comparing model performances, and enhancing application interactions.

- RAG Pipeline: The platform includes a robust RAG pipeline for document ingestion and retrieval, supporting various formats like PDF and PPT.

- Agent Framework: Dify enables the definition of agents with pre-built or custom tools, extending the functionality of AI applications.

- LLMOps: It offers monitoring and analysis tools to observe application logs and performance, aiding in continuous improvement.

- Backend as a Service: Dify provides corresponding APIs for all its features, facilitating easy integration into existing business logic.

How Does Dify Work?

Dify operates by providing a structured environment where developers can build, test, and deploy AI applications. Its architecture supports the integration of various language models, allowing for flexibility and customization in application development.

Workflow in Dify

- Model Integration: Connect and configure language models through an OpenAI-compatible API (for example, DeepSeek R1, Grok 4, Llama, or Gemini models).

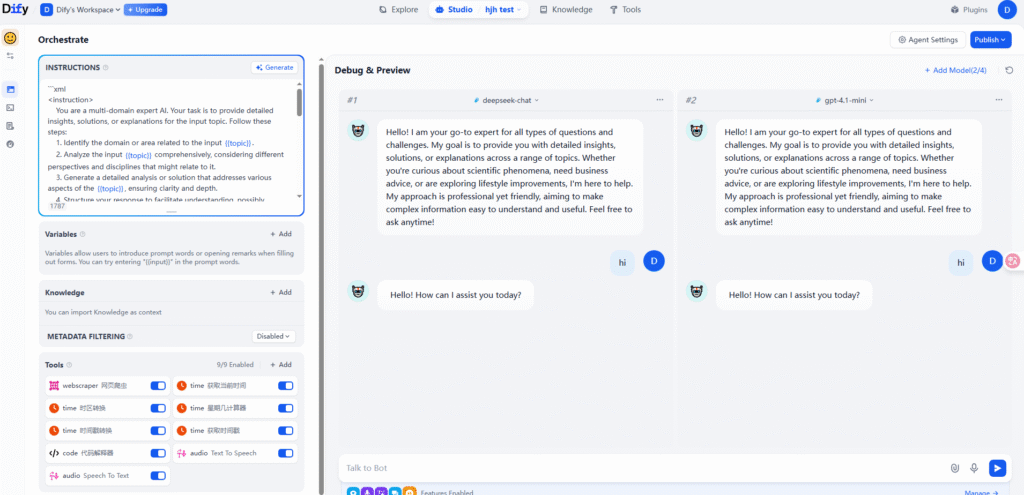

- Prompt Engineering: Develop and refine prompts using Dify’s Prompt IDE to achieve desired outputs.

- Application Development: Utilize Dify’s tools to create applications, incorporating workflows, agents, and RAG pipelines as needed.

- Testing and Optimization: Test applications within Dify, analyze performance logs, and make necessary adjustments.

- Deployment: Deploy the application, leveraging Dify’s backend services and APIs for integration into broader systems.
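The deployment step can be sketched as follows. This example builds (but does not send) a request to a Dify chat app, assuming the `chat-messages` endpoint and field names from Dify's published app API; the base URL, app key, and user id are placeholders, and self-hosted deployments will use their own host. Check your Dify version's API guide before relying on it.

```python
import json
import urllib.request


def build_dify_chat_request(app_key, query, user_id, base_url="https://api.dify.ai/v1"):
    """Build a POST request for a Dify chat app (assumed endpoint: /chat-messages)."""
    body = {
        "inputs": {},                  # values for variables defined in the app, if any
        "query": query,                # the end user's message
        "response_mode": "blocking",   # wait for the full answer instead of streaming
        "user": user_id,               # stable identifier for the end user
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat-messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {app_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; keeping construction separate makes the request easy to inspect in a test first.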

How to Integrate Dify with CometAPI?

Integrating Dify with CometAPI involves several key steps to ensure a seamless connection between the platforms.

Below is a practical workflow that covers both installing the CometAPI node (plugin) and wiring it into Dify flows. The exact UI labels may evolve, but these steps reflect the current plugin/marketplace + model-provider pattern used by Dify and Flowise.

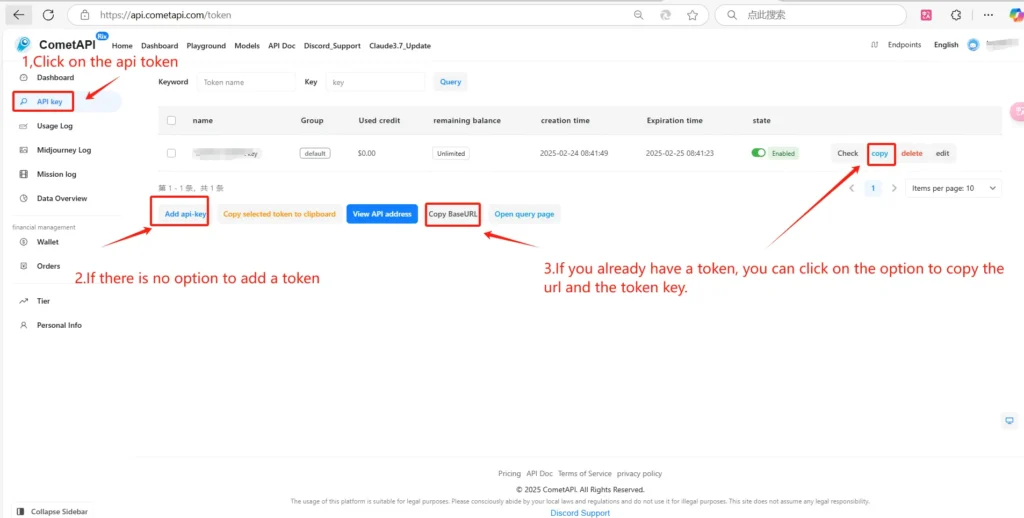

Step 1 — Obtain your CometAPI key

- Sign up or sign into your CometAPI console.

- Create or navigate to your API keys page and copy the sk-xxxxx key for the project you’ll use. Store it securely for the next steps.

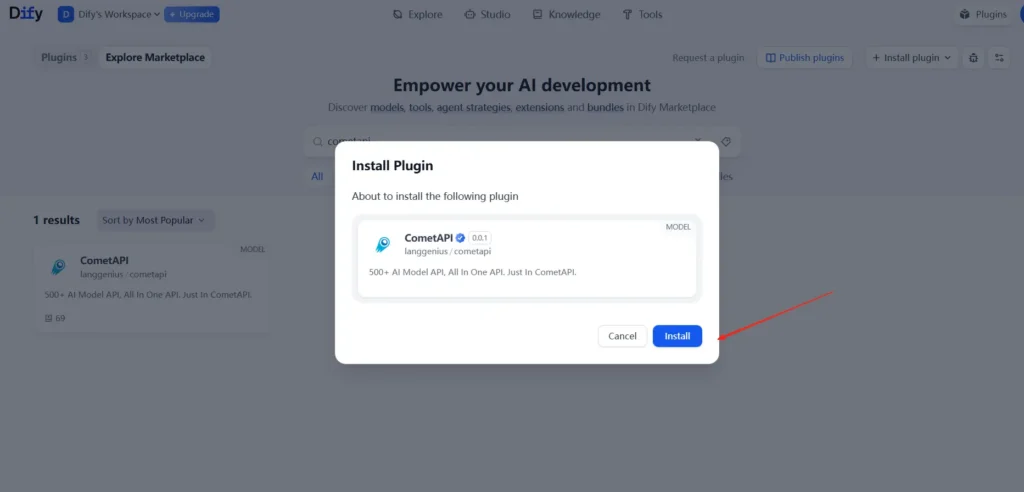
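For scripts that sit alongside Dify (smoke tests, batch jobs), one way to "store it securely" is to read the key from an environment variable rather than hard-coding it. The sketch below assumes a variable named `COMETAPI_KEY` (a hypothetical name) and the `sk-` prefix shown in this article.

```python
import os


def load_cometapi_key(env=os.environ, var_name="COMETAPI_KEY"):
    """Read the key from an environment variable instead of hard-coding it."""
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set")
    if not key.startswith("sk-"):
        # The article shows keys in the form sk-xxxxx; anything else is likely a paste error.
        raise ValueError(f"{var_name} does not look like a CometAPI key")
    return key


def mask_key(key, visible=4):
    """Return a log-safe form of the key that keeps only the last few characters."""
    return "sk-" + "*" * 6 + key[-visible:]
```

Logging `mask_key(key)` instead of the key itself keeps the secret out of log files while still letting you tell keys apart.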

Step 2 — Install the CometAPI plugin in Dify

- In Dify, go to the Marketplace or Plugins section (Dify’s plugin marketplace is the entry point for third-party integrations).

- Find CometAPI (or “Comet” / “CometAPI” provider) and click Install.

- After installation, open the plugin configuration/settings for CometAPI inside Dify.

Note: if your Dify deployment is self-hosted, you may need admin rights to add plugins.

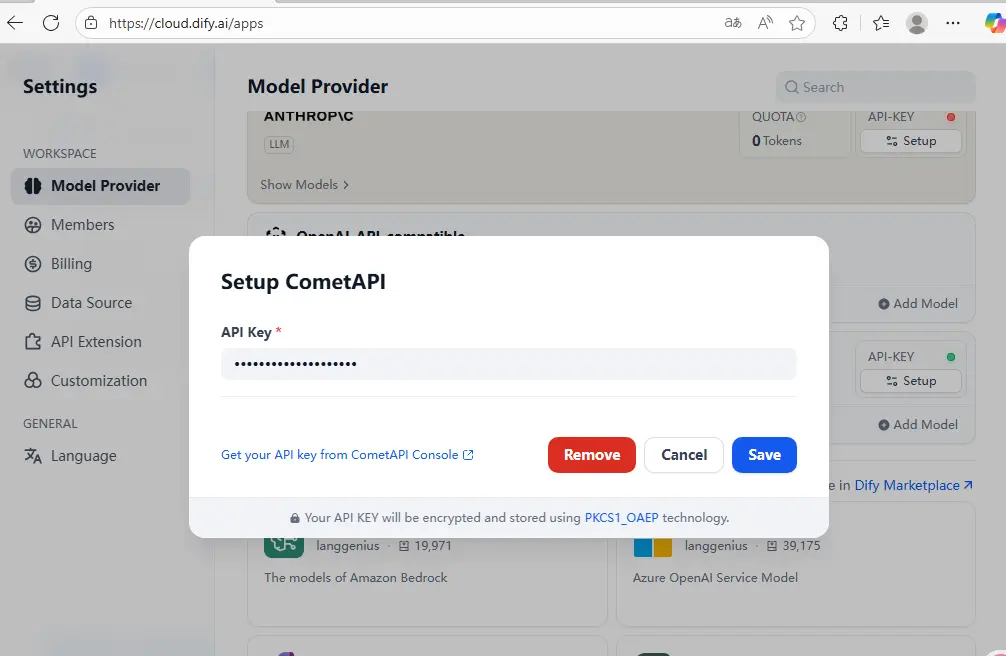

Step 3 — Configure the CometAPI plugin in Dify

- In the plugin’s settings, paste your sk-xxxxx API key in the API Key / Secret field.

- Optionally set default model(s) or provider options the plugin asks for (for example, you might choose a default model family).

- Save the plugin configuration. At this point Dify should be able to call CometAPI for model inference. (If Dify provides test invocation buttons, run a small test request to confirm connectivity.)

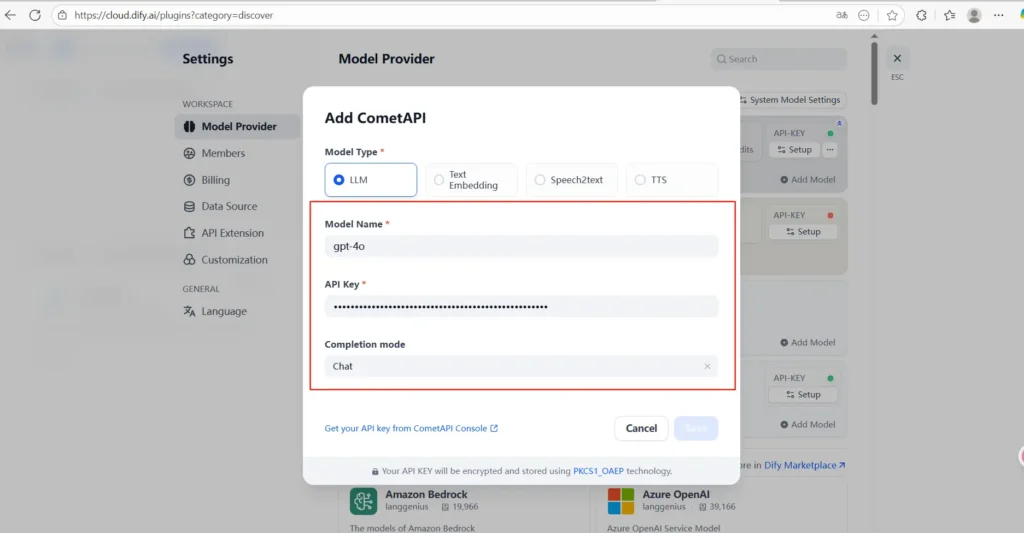
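If your Dify version has no test button, a small script outside Dify can confirm that the key and network path work. This is a sketch under assumptions: the `/v1/chat/completions` path and the OpenAI-style response shape (`choices[0].message.content`) are taken from the OpenAI-compatible format this article refers to, not from verified CometAPI documentation.

```python
import json
import urllib.request

BASE_URL = "https://api.cometapi.com/v1"  # assumed OpenAI-compatible base path


def http_post_json(url, headers, payload, timeout=30):
    """Send a JSON POST and return the decoded JSON response."""
    req = urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def smoke_test(api_key, model="gpt-4o-mini", post=http_post_json):
    """Send one tiny prompt and return the reply text; `post` is injectable for tests."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "Reply with the word: pong"}],
    }
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    data = post(f"{BASE_URL}/chat/completions", headers, payload)
    return data["choices"][0]["message"]["content"]
```

Calling `smoke_test(your_key)` performs a real (billable) request; passing a fake `post` function lets you check the wiring offline.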

Step 4 — Add CometAPI as a model provider in Dify flows

- Open or create the Dify workflow/agent you want to use.

- Add a node (LLM/Model) and choose CometAPI from the model provider list (this is the CometAPI node installed via the plugin).

- Configure prompt templates, context sources (RAG knowledge base), and temperature/params as you normally would.

- Test an end-to-end conversation: prompt → Dify orchestration → CometAPI model → response. Create a quick test in Dify’s Prompt IDE that calls a small, inexpensive model (e.g., o3-mini or a similarly low-cost model name supported by CometAPI) and verify a normal text response. Check latency and formatting.

- Monitor logs: Use Dify’s observability tools and CometAPI’s dashboard to confirm requests/usage and catch errors (authentication, rate limits).
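The "check latency and formatting" part of the test can also be scripted. The helpers below are a generic sketch, not part of Dify or CometAPI: `timed_call` wraps any function that performs a model call, and `looks_like_plain_text` is a rough, hypothetical heuristic for spotting error payloads or raw markup returned in place of a normal reply.

```python
import time


def timed_call(fn, *args, **kwargs):
    """Run fn and return (result, elapsed_seconds) using a monotonic clock."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def looks_like_plain_text(reply: str) -> bool:
    """Rough check: non-empty and not starting like JSON or markup."""
    text = reply.strip()
    return bool(text) and not text.startswith(("{", "[", "<"))
```

Wrapping the same prompt against two candidate models gives a quick, if unscientific, latency comparison before you commit to one in a flow.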

Example (conceptual) YAML snippet for a Dify task

Dify uses declarative definitions for many flows. The snippet below is conceptual—adjust to your Dify version and plugin fields:

    model_provider: cometapi
    model: gpt-4o-mini
    api_key_secret: dify_plugin_cometapi_key
    prompt:
      - role: system
        content: "You are an assistant..."

(Always consult your Dify installation docs for exact field names.)

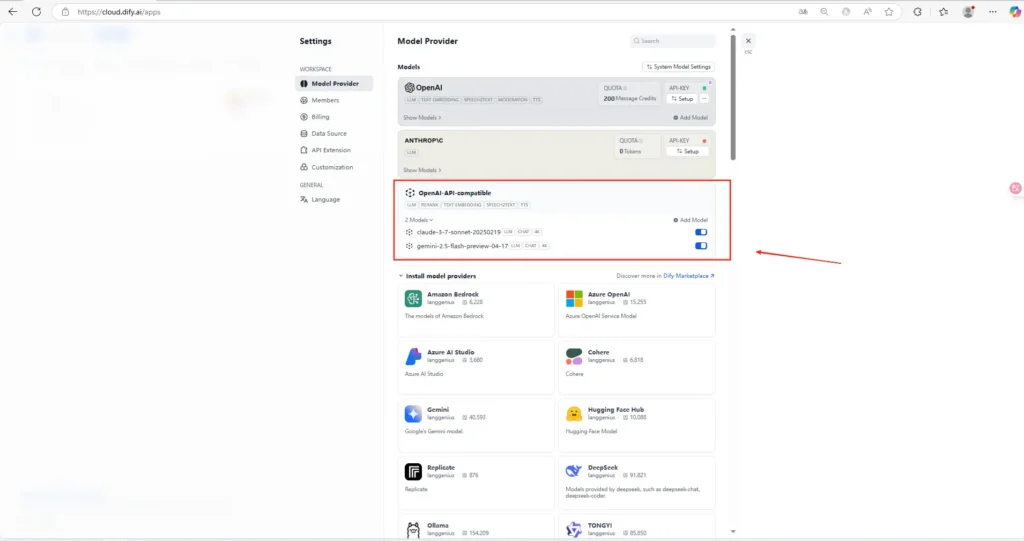

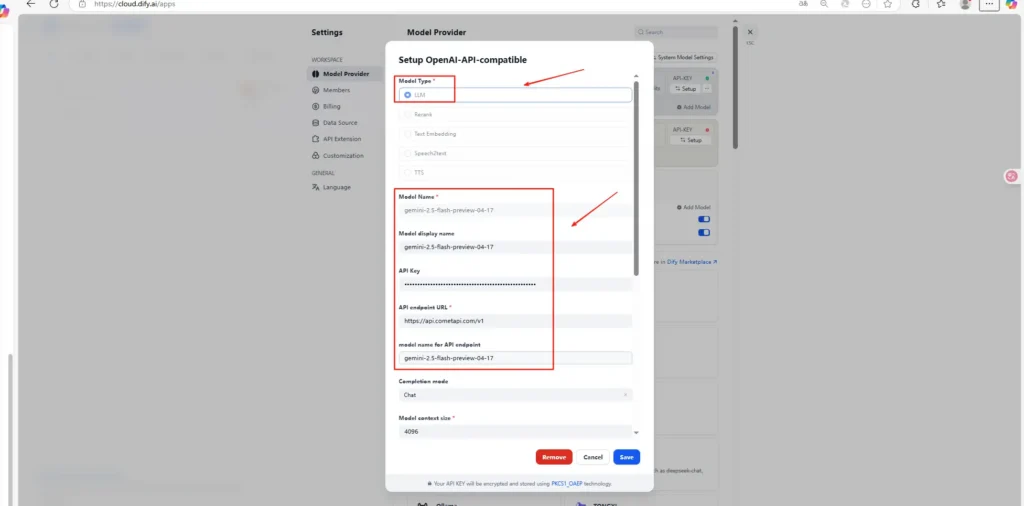

Other Method: OpenAI Format

- Access Dify’s Settings: Navigate to the settings section within the Dify platform.

- Configure Model Providers: Locate and select the option for model providers.

- Add Model as a Provider: Choose to add a new model provider and select OpenAI (or another OpenAI-compatible option) from the available options.

- Enter API Credentials: Input your CometAPI API key and configure the API endpoint URL.

- Set Model Parameters: Define parameters such as model type (e.g., GPT-4), context length, and maximum token limits.

- Enable Functionality: Configure additional settings like function calling, tool calling, and multi-modal support as required.

- Save and Test: Save the configuration and test the integration to ensure proper functionality.
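Before typing values into the provider form, it can help to sanity-check them. The sketch below models the settings listed above as a small data class; the field names are hypothetical and do not mirror Dify's internal schema, and the base URL in the usage note is an assumption built on the host named in this article.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class OpenAICompatibleProvider:
    """Illustrative container for the values entered in the provider form."""
    api_key: str
    base_url: str
    model: str
    context_length: int
    max_tokens: int
    function_calling: bool = False
    vision: bool = False

    def problems(self) -> list:
        """Return a list of human-readable configuration problems (empty if none)."""
        issues = []
        parsed = urlparse(self.base_url)
        if parsed.scheme != "https" or not parsed.netloc:
            issues.append("base_url must be an https URL")
        if not self.api_key:
            issues.append("api_key is empty")
        if self.max_tokens <= 0 or self.max_tokens > self.context_length:
            issues.append("max_tokens must be between 1 and context_length")
        return issues
```

For example, a configuration with `base_url="https://api.cometapi.com/v1"`, `context_length=128000`, and `max_tokens=4096` yields an empty problem list, while a maximum token limit larger than the context length is flagged.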

In addition to OpenAI models, CometAPI provides APIs for other content generation models such as DeepSeek R1, Grok 4, and Gemini, as well as text-to-image and video generation models such as FLUX.1 Kontext, Veo 3, and Midjourney, to help you build your own workflow.

Common troubleshooting scenarios & solutions

- Auth errors: If Dify shows an authentication error, check the sk-xxxxx key and whether you pasted it into the correct provider field. Confirm Dify can reach https://api.cometapi.com.

- Unexpected responses / formatting: Ensure the request format matches OpenAI style (CometAPI accepts OpenAI-compatible format). Also verify model parameter names.

- High latency: Test different CometAPI models; latency can vary across model families. Also check network egress from your Dify host.

- Cost spikes: Check token limits in Dify and usage in CometAPI dashboard; throttle or switch to cheaper models for non-critical flows.
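When debugging from logs, the HTTP status code usually narrows down which scenario above applies. The mapping below is a sketch based on standard HTTP semantics; the exact codes CometAPI returns for each failure are an assumption, so treat the hints as a starting point.

```python
def troubleshooting_hint(status_code: int) -> str:
    """Map an HTTP status code to the most likely troubleshooting step."""
    if 200 <= status_code < 300:
        return "ok: request succeeded; check response formatting if output looks wrong"
    if status_code in (401, 403):
        return "auth: re-check the sk- key and the provider field it was pasted into"
    if status_code == 404:
        return "not found: verify the model name and the endpoint URL"
    if status_code == 429:
        return "rate limit: throttle requests or switch to a cheaper model"
    if 500 <= status_code < 600:
        return "upstream error: retry later or try a different model family"
    return "unexpected: inspect the response body and request format"
```

A helper like this can be applied to the status codes that appear in Dify's logs to triage failures quickly.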

What real-world use cases benefit from this integration?

Use case 1: Multi-model evaluation for customer service

Spin up a Dify chatflow backed by the CometAPI node and A/B test responses from gpt-4o, claude-3.7 and a smaller, cheaper candidate. For common Q/A, route to a low-cost CometAPI model. For complex or multi-step queries escalate to a higher capability model (or a multimodal model) via CometAPI.
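The routing idea can be sketched in a few lines. The heuristic here (word count plus a handful of keywords) and the model names are purely illustrative assumptions; in practice the decision would more likely live in a Dify condition node or a classifier step.

```python
CHEAP_MODEL = "gpt-4o-mini"   # illustrative low-cost default
STRONG_MODEL = "gpt-4o"       # illustrative higher-capability model
ESCALATION_HINTS = ("step by step", "compare", "troubleshoot")


def choose_model(question: str, max_simple_words: int = 25) -> str:
    """Route short, simple questions to the cheap model and the rest to the strong one."""
    text = question.lower()
    if len(text.split()) > max_simple_words:
        return STRONG_MODEL
    if any(hint in text for hint in ESCALATION_HINTS):
        return STRONG_MODEL
    return CHEAP_MODEL
```

With these settings, "What are your opening hours?" goes to the cheap model, while "Compare the premium and basic plans" escalates.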

Use case 2: Internal knowledge assistant with safe fallbacks

Build a RAG pipeline in Dify that uses embeddings + retrieval, but calls CometAPI for generation. If the large model hits rate limits, fall back to a smaller CometAPI model automatically. Flowise can be used to prototype prompt chains before moving the flow to Dify for production.
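A fallback chain of this kind might look like the sketch below. `RateLimited` is a hypothetical exception that your own client wrapper would raise (for example, on an HTTP 429), and `call_model` stands in for whatever function actually sends the request; neither is a Dify or CometAPI API.

```python
class RateLimited(Exception):
    """Hypothetical error raised by your client wrapper when a model is rate limited."""


def generate_with_fallback(prompt, call_model, models=("gpt-4o", "gpt-4o-mini")):
    """Try each model in order; return (model_used, reply) from the first that succeeds."""
    last_error = None
    for model in models:
        try:
            return model, call_model(model, prompt)
        except RateLimited as exc:
            last_error = exc  # remember the failure and move on to the next model
    raise RuntimeError("all models were rate limited") from last_error
```

Only rate-limit errors trigger the fallback here; other exceptions propagate so that real bugs are not silently masked by a smaller model's answer.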

Use case 3: Rapid experimentation for multimodal apps

CometAPI exposes image and audio models (e.g., Suno, Runway). Combine Dify’s orchestration (routing media uploads to the right service) with CometAPI’s model switching to prototype multimodal features quickly.

- Use Dify orchestration to collect briefs, templates, and style guides.

- Use CometAPI to call an image model (Midjourney or the Gemini 2.5 Flash Image API, also known as Nano-Banana) and an LLM for captions in the same flow. CometAPI’s unified model roster simplifies this orchestration.

Conclusion

Integrating Dify with CometAPI unlocks powerful possibilities for AI-driven applications, offering a streamlined workflow, extensive customization options, and enhanced AI capabilities. By following best practices, addressing potential challenges, and staying updated with new advancements, developers can maximize the potential of this integration to create innovative AI-powered solutions.

To begin integrating CometAPI with Dify, explore the models’ capabilities in the Playground and consult the Dify API guide for detailed instructions. Before you start, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official prices to help you integrate.

Ready to go? Sign up for CometAPI today!