Google has just kicked off the Gemini 3 era by releasing Gemini 3 Pro in preview, and the initial signals are unambiguous: this is a major step forward in multimodal reasoning, coding agents, and long-context understanding. The model is positioned as Google’s most capable reasoning and multimodal model yet, optimized for agentic workflows, coding, long-context tasks and multimodal understanding. It ships with a new “Deep Think” reasoning mode, features dramatic improvements on agentic/code benchmarks (Terminal-Bench 2.0 quoted at 54.2%), and is immediately usable via Google AI Studio, API (Vertex AI integrations), and developer tooling such as Google Antigravity.

What is Gemini 3 Pro Preview?

Gemini 3 is presented by Google as the next-generation, most intelligent member of the Gemini family — focused on deeper reasoning, richer multimodal understanding (text, images, video, audio, code), and better agentic behavior (models that plan and act with tools).

Headline features

- Native multimodal understanding — built to accept and reason over text, images, audio and video together (including long/video inputs). Great for mixing documents, screenshots, transcripts and video.

- Huge context window (up to ~1,000,000 tokens) — can ingest/keep in context extremely long documents, large codebases, or hours of transcripts in a single session. This is a core selling point for deep research, code review, and multi-document synthesis.

- Agentic / tool-use capability — designed to power autonomous agents that can call tools, operate terminals, manage task plans, and coordinate multi-step workflows (used in Google Antigravity and other IDE integrations). This makes it especially strong for coding, orchestration, and multi-step automation.

- Stronger reasoning & coding — Google positions Gemini 3 Pro as their top “thinking” model for complex reasoning, math, and code tasks (improved benchmarks and terminal/tool performance).
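To make the context-window figure concrete, here is a minimal sketch for checking whether a set of documents is likely to fit in a single request. The 4-characters-per-token ratio and the output reserve are rough assumptions for illustration, not figures from Google; for real budgeting, use the token counting offered by the API itself.

```python
# Rough pre-flight check against the ~1,000,000-token context window.
# CHARS_PER_TOKEN and the output reserve are illustrative assumptions.

CONTEXT_WINDOW_TOKENS = 1_000_000  # "up to ~1,000,000 tokens" per the article
CHARS_PER_TOKEN = 4                # assumption: crude heuristic for English text


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents, reserve_for_output: int = 8_192):
    """Return (fits, estimated_input_tokens) for a list of document strings."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve_for_output <= CONTEXT_WINDOW_TOKENS, total


docs = ["word " * 2_000, "line of code\n" * 5_000]
fits, total = fits_in_context(docs)
print(f"estimated input tokens: {total}, fits: {fits}")
```

An estimate like this is only useful for deciding early whether to chunk or summarize inputs; the exact count depends on the model's tokenizer and on any images, audio, or video in the request.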

What’s new in Gemini 3 Pro compared with Gemini 2.5 Pro and others?

Which capabilities improved the most?

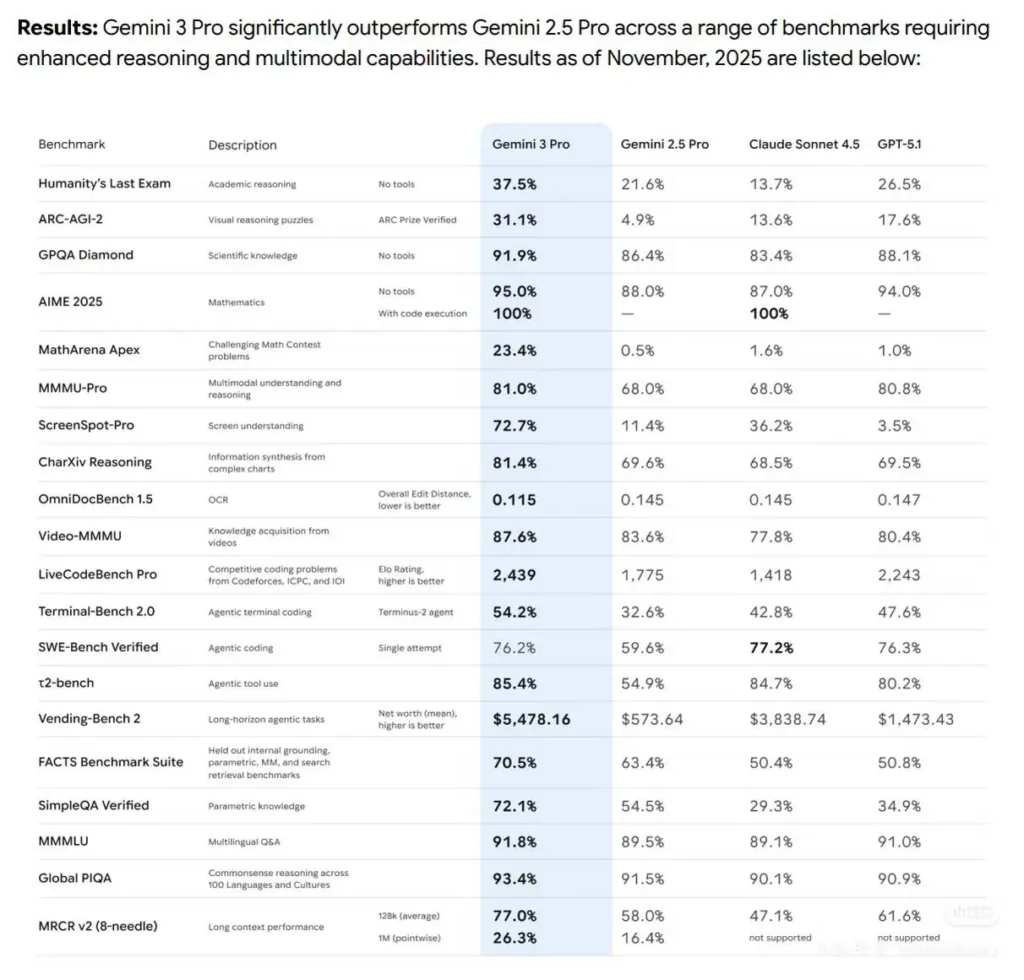

Gemini 3 Pro is marketed as a major step forward in reasoning (mathematical and scientific reasoning), multimodal spatial/visual reasoning, and tool use. Google highlights clear gains over Gemini 2.5 Pro in benchmark suites and in real-world agentic tasks such as coding and terminal automation. Example headline metrics the team released include:

| Benchmark / task | Gemini 3 Pro (reported) | Gemini 2.5 Pro (reported) | Absolute gap (pp) |
|---|---|---|---|
| Humanity’s Last Exam (academic reasoning, no tools) | 37.5% | 21.6% | +15.9 |
| GPQA Diamond (scientific / factual QA) | 91.9% | 86.4% | +5.5 |
| AIME 2025 (mathematics, no tools) | 95.0% | 88.0% | +7.0 |
| AIME with code execution | 100.0% | n/a | n/a (3 Pro hits a perfect score with execution) |
| ARC-AGI-2 (visual reasoning puzzles) | 31.1% | 4.9% | +26.2 (very large multimodal gain) |
| SimpleQA Verified (parametric knowledge) | 72.1% | 54.5% | +17.6 |
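The gap column is plain subtraction in percentage points, which is not the same thing as relative improvement. The short sketch below recomputes the gaps from the reported scores and also prints the ratio between the two models; the helper names are illustrative only.

```python
# Recompute the "absolute gap (pp)" column from the reported scores
# and contrast it with the relative ratio between the two models.

reported = {
    # benchmark: (Gemini 3 Pro %, Gemini 2.5 Pro %)
    "Humanity's Last Exam": (37.5, 21.6),
    "GPQA Diamond": (91.9, 86.4),
    "AIME 2025": (95.0, 88.0),
    "ARC-AGI-2": (31.1, 4.9),
    "SimpleQA Verified": (72.1, 54.5),
}


def absolute_gap_pp(new_score: float, old_score: float) -> float:
    """Difference in percentage points, rounded to one decimal place."""
    return round(new_score - old_score, 1)


def relative_ratio(new_score: float, old_score: float) -> float:
    """How many times larger the new score is than the old one."""
    return new_score / old_score


gaps = {name: absolute_gap_pp(new, old) for name, (new, old) in reported.items()}

for name, (new, old) in reported.items():
    print(f"{name}: +{gaps[name]} pp, ratio {relative_ratio(new, old):.2f}x")
```

Reading both numbers together helps: a benchmark near saturation can show a small gap in points even when the remaining errors were cut substantially, while a low-baseline benchmark such as ARC-AGI-2 shows a large ratio.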

These numbers signal that Gemini 3 Pro is optimized for multi-step reasoning, complex tool use, and tightly integrated multimodal tasks (e.g., combining video frames, chart reasoning, and code generation).

Agentic-first developer tooling: Antigravity

To demonstrate agentic workflows, Google released Antigravity — an “agent-first” IDE that uses Gemini 3 Pro as the foundation for multi-agent coding workflows. Antigravity enables agents to interact directly with an editor, terminal and browser, and to produce “Artifacts” (task lists, screenshots, browser records) that document agent actions — addressing traceability and reproducibility in agentic development. This makes Gemini 3 Pro far more practical for real developer workflows than models that focus solely on text generation.

Better tool use and coding

Google reports dramatic improvements on a terminal-centric benchmark (Terminal-Bench 2.0) that measures a model’s ability to operate a computer via the terminal: Gemini 3 Pro scores 54.2% on that test — a large jump relative to previous Gemini versions — indicating real progress in autonomous tool use and code generation.

The improvement shows up particularly when the model is asked to run scripts, orchestrate tools, or manage multi-step developer tasks. In practice, that means fewer hallucinations when the model executes commands, better error handling, and improved ability to recover from failed steps.

How does Gemini 3 Pro perform on benchmarks?

Google published a wide suite of benchmark comparisons in the Gemini 3 blog post that span classical NLP reasoning, multimodal understanding, code generation, and agentic tool-use. Key numbers reported directly by Google include:

- LMArena: Gemini 3 Pro scored 1501 Elo, a top placement on the competitive leaderboard (measuring general reasoning/answer quality in pairwise matchups).

- MMMU-Pro (multimodal benchmark): 81% — a sizable increase vs prior models.

- Video-MMMU: 87.6% on video-aware multimodal tasks.

- SimpleQA Verified: 72.1% indicating improvements in factual QA for complex inputs.

- WebDev Arena: 1487 Elo (web development / code reasoning).

- Terminal-Bench 2.0 & SWE-bench Verified: big jumps in agentic tool use and coding agent performance.

- Deep Think: further lifts on highest-difficulty tests (e.g., Humanity’s Last Exam improved from 37.5% to 41.0% in Deep Think on some metrics as reported).

All these indicate a model tuned for depth rather than just surface text generation.
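To give a feel for what an Elo figure such as 1501 means, the classic Elo formula converts a rating difference into an expected win rate in a pairwise matchup. This is a minimal sketch using the standard formula with a 400-point scale; the opponent rating of 1451 is a hypothetical value chosen for illustration, and arena-style leaderboards may compute their ratings with a different statistical model.

```python
# Classic Elo expected-score formula: a rating gap maps to a win probability.
# The opponent rating used below is hypothetical, not a reported score.


def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win probability, counting a draw as half) of A against B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


gemini_rating = 1501         # LMArena figure quoted in the article
hypothetical_rival = 1451    # assumption for illustration only

p = elo_expected_score(gemini_rating, hypothetical_rival)
print(f"expected win rate against a {hypothetical_rival}-rated model: {p:.3f}")
```

The takeaway is that modest rating gaps translate into modest head-to-head preferences, so a top placement on such a leaderboard does not imply a win in every comparison.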

So: yes, Gemini 3 Pro is consistently in the upper tier across many tests today, but whether it “crushes” the competition depends on the task. For pure code generation, some competitors remain neck-and-neck; for long-context, math, and multimodal synthesis, Gemini 3 Pro is frequently reported as best-in-class in November 2025 runs.

How can you access Gemini 3 Pro Preview?

Official entry points

Google made Gemini 3 Pro available in preview across several surfaces:

- Gemini app (consumer / Pro users): The model is rolling out in the Gemini app as part of the “Gemini 3” era launch.

- Google AI Studio / Gemini Developer API: Developers can experiment via AI Studio and the Gemini Developer API. The API has REST and SDK interfaces and supports advanced features such as function calling and streaming.

- Vertex AI (Google Cloud): Enterprises and teams can access Gemini 3 Pro through Vertex AI for production and MLOps workflows. Vertex supports Python, Node, Java, Go, and curl examples.

- Third-party integrations (CometAPI): CometAPI provides access to the Gemini 3 Pro API under the model name gemini-3-pro-preview. CometAPI offers a price far lower than the official price to help you integrate.

Quickstart: Python example (official SDK pattern)

Below is a minimal, practical Python example adapted from Google’s Gemini quickstart that demonstrates calling the Gemini API via Google’s GenAI client. Set the GEMINI_API_KEY environment variable to the API key you obtained from Google AI Studio or your GCP project.

```python
# Example: call Gemini 3 Pro Preview using the Google GenAI Python SDK
# Requires: pip install google-genai

from google import genai

# Set the API key in your environment first:
#   export GEMINI_API_KEY="YOUR_API_KEY"
client = genai.Client()  # the client picks up GEMINI_API_KEY from the environment

# Use the preview model identifier. The exact model ID may vary; use the ID listed in the API docs.
model_id = "gemini-3-pro-preview"  # or "gemini-3-pro" depending on availability

prompt = """
You are an assistant that writes a short Python function to fetch JSON from a URL,
handle HTTP errors, and return parsed JSON or None on failure.
"""

resp = client.models.generate_content(model=model_id, contents=prompt)
print("MODEL RESPONSE:\n", resp.text)
```

If you choose CometAPI, send your requests to https://api.cometapi.com/v1/chat/completions instead and authenticate with the key you obtained from CometAPI.
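As a rough sketch of what a request to that CometAPI endpoint might look like, the snippet below builds an HTTP request with only the standard library. The URL and the model name come from this article; the Bearer authentication header, the `messages` payload shape, and the response layout are assumptions based on the endpoint path resembling the common chat-completions convention, so check the CometAPI guide before relying on them.

```python
# Sketch of a CometAPI call using only the standard library.
# Assumptions (not confirmed by the article): Bearer auth, a chat-style
# "messages" payload, and a "choices" list in the JSON response.

import json
import urllib.request

COMETAPI_URL = "https://api.cometapi.com/v1/chat/completions"  # from the article


def build_cometapi_request(prompt: str, api_key: str,
                           model: str = "gemini-3-pro-preview") -> urllib.request.Request:
    """Build (but do not send) a POST request for a single user prompt."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        COMETAPI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_request(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and return the decoded JSON body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

A call would then look like `send_request(build_cometapi_request("Say hello.", key))`, with `key` read from an environment variable rather than hard-coded in source.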

How to get the best results — prompt patterns and tips

Use “thinking” mode for hard problems

If you’re solving multi-step reasoning problems or complex math/code tasks, enable the preview’s “thinking” variant (if available). It allocates more internal reasoning steps and often yields more reliable solutions on multi-stage tasks. Check model names for a -thinking suffix in the console.

Function calling & tool orchestration

Use declared functions (Vertex AI/GenAI function calling) for reliable, structured outputs and to reduce hallucinations. Let the model propose function calls and execute them deterministically in your environment. The function calling docs include examples for returning typed JSON arguments that you can run safely.
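The "propose, then execute deterministically" pattern can be sketched without any SDK. Here the model's proposed call is represented as a plain dict with `name` and `args` keys, which is an assumption about the shape you would extract from the API response; `get_weather` and the registry are hypothetical stand-ins for your own tools.

```python
# Deterministic execution of a model-proposed function call.
# The {"name": ..., "args": {...}} shape and the get_weather tool are
# illustrative assumptions, not part of any specific SDK.

import inspect


def get_weather(city: str, unit: str = "celsius") -> dict:
    """Hypothetical tool: returns canned data instead of calling a real service."""
    return {"city": city, "unit": unit, "temperature": 21}


# Only functions registered here can ever be executed.
TOOLS = {"get_weather": get_weather}


def execute_function_call(call: dict) -> dict:
    """Validate a proposed call against the registry, then run it."""
    name = call.get("name")
    args = call.get("args", {})
    func = TOOLS.get(name)
    if func is None:
        return {"error": f"unknown function: {name}"}
    try:
        # Reject missing or unexpected arguments before executing anything.
        inspect.signature(func).bind(**args)
    except TypeError as exc:
        return {"error": f"bad arguments: {exc}"}
    return {"result": func(**args)}


proposed = {"name": "get_weather", "args": {"city": "Zurich"}}
print(execute_function_call(proposed))
```

Keeping an explicit allow-list and validating arguments before execution means a hallucinated function name or parameter produces a structured error you can send back to the model, rather than an unexpected side effect.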

Grounding when you need up-to-date facts

If your app relies on current web facts, use web grounding, but watch the cost of grounded prompts and the applicable rate limits. Grounding is powerful (it lets Gemini query Search or Maps), but every grounded prompt may alter your billing and latency characteristics.
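One simple way to keep grounded-prompt volume in check is to cache recent answers so that identical questions inside a short window do not trigger a new grounded call. The sketch below is a generic time-to-live cache; `GroundedCache` and the `fetch` callable are hypothetical names, and `fetch` stands in for whatever function in your app actually issues the grounded request.

```python
# Time-to-live cache in front of a grounded-query function.
# GroundedCache and the fetch callable are illustrative, not part of any SDK.

import time


class GroundedCache:
    def __init__(self, fetch, ttl_seconds: float = 300.0, clock=time.monotonic):
        self._fetch = fetch        # callable: query string -> answer string
        self._ttl = ttl_seconds    # how long a cached answer stays fresh
        self._clock = clock        # injectable clock, handy for testing
        self._entries = {}         # query -> (timestamp, answer)
        self.grounded_calls = 0    # number of billable calls actually made

    def ask(self, query: str) -> str:
        """Return a fresh cached answer if present, otherwise fetch and store one."""
        now = self._clock()
        cached = self._entries.get(query)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]
        self.grounded_calls += 1
        answer = self._fetch(query)
        self._entries[query] = (now, answer)
        return answer
```

The right TTL depends on how quickly the underlying facts go stale; a stock price and a store's opening hours warrant very different values.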

How Gemini 3 Pro stacks up in real-world tasks (use cases)

Code generation & developer productivity

Gemini 3 Pro improves on multi-file reasoning, long repo context, and synthesis of tests/documentation alongside code. Paired with function calling and a terminal agent, it can scaffold and validate medium-sized projects faster than older models. Community tests show elevated LiveCodeBench/Elo coding scores.

Research & STEM workflows

The model’s Deep Think capability and larger reasoning budget make it well suited for research tasks that require multi-step mathematical derivations, dataset synthesis, or multi-file paper summarization. Early benchmark results place it at or near the top for many STEM datasets.

Content design, multimodal creative workflows

Gemini 3 Pro’s multimodal outputs and integration with Veo/Whisk/Flow make it a strong choice for workflows that mix text, images, and video — from marketing storyboards to automated video drafts. Google bundles certain creator tools in AI Ultra for creators who want the highest limits.

Conclusion: does Gemini 3 Pro crush other models?

Gemini 3 Pro Preview is a major step forward. On a broad range of benchmarks and in early real-world testing it frequently leads or ties the best available models in late 2025, particularly in:

- Complex reasoning (math / STEM)

- Multimodal understanding and synthesis

- Agentic workflows and function calling

However, the margin varies by task. For some narrowly framed tasks (certain creative writing styles, or very specialized domain knowledge), other models can still be competitive or preferable depending on cost/latency and ecosystem fit. Benchmarks and leaked scores suggest Gemini 3 Pro often ranks top-tier, but “crushing” is task-dependent. For many enterprise and developer use cases, Gemini 3 Pro is now the first model to evaluate.

How to get started with CometAPI

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

Developers can access the Gemini 3 Pro Preview API through CometAPI. To begin, explore the model capabilities of CometAPI in the Playground and consult the API guide for detailed instructions. Before accessing, please make sure you have logged in to CometAPI and obtained the API key. CometAPI offers a price far lower than the official price to help you integrate.
