OpenAI’s o3‑pro: Benchmarks, Pricing & Access

OpenAI’s latest reasoning model, o3‑pro, represents a significant leap in performance and capability for AI-driven applications. Launched in early June 2025, o3‑pro offers developers and enterprises advanced reasoning, multimodal understanding, and tool use—all at a premium price point. This article synthesizes the most recent announcements, user reports, and benchmark data to provide a comprehensive overview of o3‑pro’s performance, cost considerations, and availability.

What is o3‑pro?

o3‑pro is a premium reasoning model that trades speed and cost for deeper analysis. Launched on June 10, 2025, it builds on the standard o3 model and replaces o1‑pro in OpenAI’s product lineup, targeting developers and enterprises that prioritize deep analysis and reliability over raw speed. Built on the same underlying architecture as o3 (originally introduced in April 2025), o3‑pro integrates real‑time web search, file analysis, visual reasoning, Python execution, and advanced memory features, addressing complex workflows in science, programming, business, and writing. However, the model’s deliberate reasoning approach incurs longer latencies and a substantial cost increase, reflecting its compute‑intensive design.

What distinguishes o3‑pro from the standard o3 model?

Advanced Multimodal Reasoning

OpenAI has rigorously evaluated o3‑pro across multiple standard AI assessments to validate its reasoning prowess. In the mathematics domain, o3‑pro outperforms Google’s Gemini 2.5 Pro on the AIME 2024 benchmark, demonstrating superior logical reasoning and complex equation solving under timed conditions. Similarly, on the GPQA Diamond benchmark, which measures PhD‑level scientific understanding and problem solving, o3‑pro surpasses Anthropic’s Claude 4 Opus, underlining its depth in advanced scientific reasoning.

o3‑pro builds on the strengths of OpenAI’s flagship o3 model by integrating real‑time web browsing, file analysis, visual understanding, and on‑the‑fly Python execution into a single interface. According to OpenAI, this enhanced reasoning ability allows o3‑pro to tackle complex tasks—such as scientific data interpretation, long‑form code debugging, and multimodal content generation—with greater reliability than its predecessor.

Reliability over Latency

These new capabilities come with trade‑offs: o3‑pro’s response times are measurably slower than o3, reflecting the extra compute and context‑processing steps required for advanced tool use. Early adopters report typical latencies of 1.5–2× that of o3 on equivalent prompts, though the precise figures vary based on request complexity.

Feature Limitations at Launch

At launch, o3‑pro users noticed a few temporary limitations: image generation remains unavailable, and certain ChatGPT features, such as ephemeral “Canvas” sessions and temporary chat threads, are disabled while OpenAI scales infrastructure for the new model. These restrictions are expected to ease over the coming months as capacity expands.

How does o3‑pro perform on industry benchmarks?

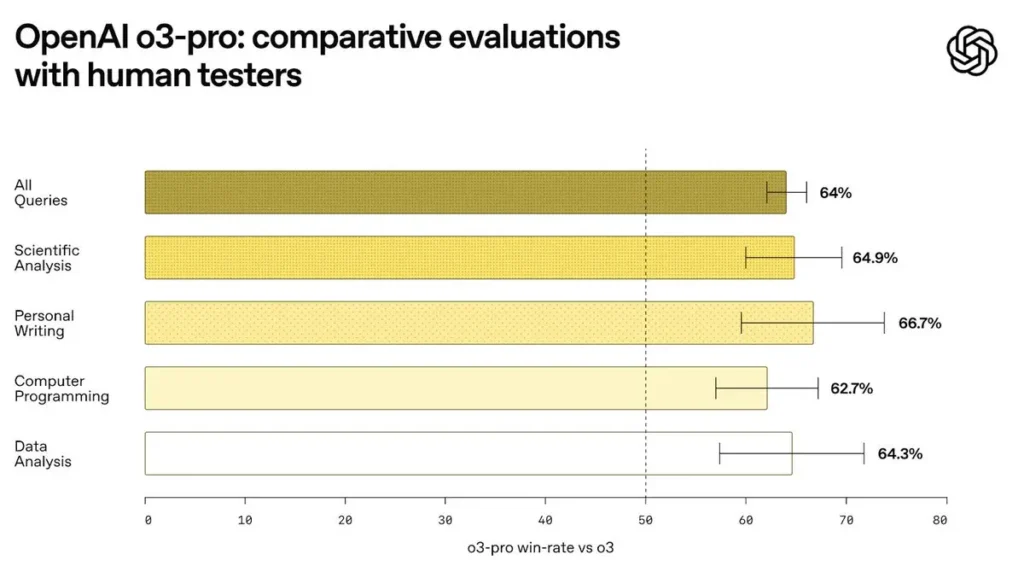

Standardized Reasoning Tests

In internal tests, o3‑pro outperformed o3 by a notable margin on standardized reasoning suites covering mathematics, logic puzzles, and programming challenges. Community‑reported scores place o3 at around 2,517 points, while o3‑pro scores near 2,748, an approximate 9% improvement.
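
The 9% figure follows directly from the two community‑reported scores quoted above. As a quick check, here is a minimal sketch of the arithmetic; the helper name `relative_improvement` is illustrative and not part of any benchmark tooling.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Return the improvement of `new` over `baseline` as a percentage."""
    return (new - baseline) / baseline * 100


# Community-reported scores quoted in the text above.
o3_score = 2517
o3_pro_score = 2748

gain = relative_improvement(o3_score, o3_pro_score)
print(f"o3-pro improvement over o3: {gain:.1f}%")
```

The result lands slightly above 9%, consistent with the rounded figure in the text.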

Real‑World Coding Evaluations

Developers running live code generation and debugging tasks have observed that o3‑pro produces more syntactically correct and semantically accurate outputs in single‑shot and few‑shot settings. Benchmarks on coding repositories like CodeSearchNet show a 5–7% boost in functional correctness over o3, particularly on long‑context problems exceeding 4,000 tokens.

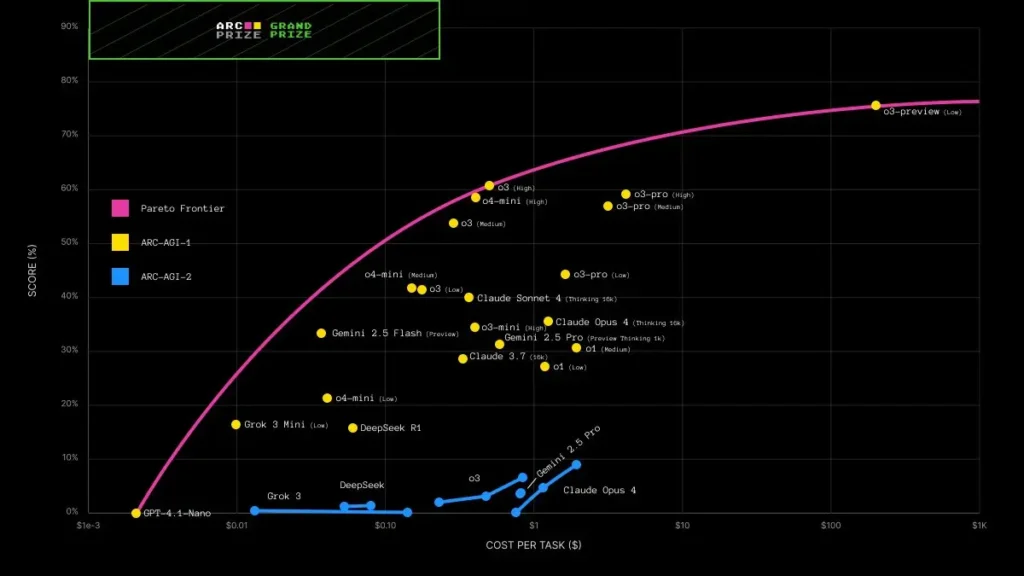

Comparative Performance Against Competitors

In head‑to‑head tests, o3‑pro not only beats Gemini 2.5 Pro and Claude 4 Opus on raw scores but also delivers more consistent outputs under adversarial stress tests. By combining multimodal input handling and dynamic tool use, it matches or exceeds competing models from Google and Anthropic on complex reasoning benchmarks in early comparisons, although comprehensive third‑party reports are still forthcoming.

What pricing structure should developers expect?

Token‑Based Billing Model

OpenAI continues its token‑based billing: o3‑pro costs $20 per million input tokens and $80 per million output tokens, exactly ten times the cost of the standard o3 model after its recent price cut. By contrast, o3 now runs at $2 per million input tokens and $8 per million output tokens following an 80% price reduction earlier in June 2025.

| Model | Input Token Price | Output Token Price |
|---|---|---|
| o3 | $2 / 1M tokens | $8 / 1M tokens |
| o3‑pro | $20 / 1M tokens | $80 / 1M tokens |
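
To make the table concrete, the sketch below estimates the cost of a single request under each model. The prices come from the table above; the function name `request_cost` and the example token counts (10,000 input, 1,500 output) are assumptions chosen for illustration. Note that reasoning models bill their hidden reasoning tokens as output tokens, so real output counts can be higher than the visible response suggests.

```python
# Prices in USD per 1M tokens, taken from the table above.
PRICES = {
    "o3": {"input": 2, "output": 8},
    "o3-pro": {"input": 20, "output": 80},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from its token counts."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical request size, for illustration only.
for model in PRICES:
    cost = request_cost(model, input_tokens=10_000, output_tokens=1_500)
    print(f"{model}: ${cost:.3f} per request")
```

For this request size the estimate is about $0.03 on o3 and $0.32 on o3‑pro, which matches the tenfold difference in list prices.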

Rationale Behind the Premium

This tenfold price increase reflects the additional compute resources, high‑throughput infrastructure, and specialized tooling integrations o3‑pro demands. OpenAI positions o3‑pro as a “mission‑critical” model for applications where accuracy and advanced reasoning justify the cost premium.

Volume Discounts and Batch API

Enterprises processing large volumes of tokens can still leverage the Batch API to save up to 50% on inputs and outputs for asynchronous workloads. While this mechanism primarily benefits high‑volume users of GPT‑4.1 variants, similar batching options are expected to roll out for o‑series models later in 2025.

How can developers and teams access o3‑pro?

API Availability

OpenAI made o3‑pro accessible via its public API on June 10, 2025. The model is served through the Responses API only; it is not available on OpenAI’s Chat Completions endpoint. Developers can specify the "o3-pro" model in their API calls, subject to rate limits and quota constraints tied to their usage tier.

POST https://api.openai.com/v1/responses

{
  "model": "o3-pro",
  "input": "Analyze this dataset...",
  "max_output_tokens": 1500
}

ChatGPT Pro & Team Plans

ChatGPT Pro and Team subscribers gain direct access to o3‑pro within the ChatGPT interface and can switch between o3 and o3‑pro in the model selector. Enterprise and Edu customers were slated to receive access in the week following launch.

Via CometAPI API

Developers can also access the o3‑pro API through CometAPI (model names: "o3-Pro" and "o3-pro-2025-06-10"); the models listed are current as of this article’s publication date. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before making requests, make sure you have logged in to CometAPI and obtained an API key. CometAPI advertises pricing below the official rate.

from openai import OpenAI

# Point the OpenAI SDK at CometAPI's OpenAI-compatible endpoint.
client = OpenAI(
    base_url="https://api.cometapi.com/v1",
    api_key="<YOUR_API_KEY>",
)

# Send a single chat request to the o3-Pro model.
response = client.chat.completions.create(
    model="o3-Pro",
    messages=[
        {
            "role": "system",
            "content": "You are an AI assistant who knows everything.",
        },
        {
            "role": "user",
            "content": "Tell me, why is the sky blue?",
        },
    ],
)

# Extract and print the assistant's reply.
message = response.choices[0].message.content
print(f"Assistant: {message}")

What practical use cases benefit most from o3‑pro?

Scientific Research & Data Analysis

Researchers working with large datasets, ranging from genomics to climate simulations, can leverage o3‑pro’s file analysis and Python execution capabilities to automate hypothesis testing and generate insights over long inputs that fit within the model’s context window.

Enterprise Knowledge Workflows

In sectors like finance and legal services, where precision and auditability are paramount, o3‑pro’s improved instruction adherence and multimodal reasoning reduce error rates in contract reviews, financial modeling, and regulatory compliance tasks.

Software Development & DevOps

By combining long‑context code comprehension with live testing through Python execution, o3‑pro streamlines debugging and automates complex refactoring workflows, accelerating delivery cycles for large‑scale software projects.

What should organizations consider before upgrading?

Cost‑Benefit Analysis

Teams must weigh the 10× price increase against projected efficiency gains. For high‑value, low‑volume tasks, such as drafting strategic reports or building critical safety systems, the accuracy and tooling support may justify o3‑pro’s premium. For bulk content generation, sticking with standard o3 or o4‑mini models could be more economical.
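
One way to frame this trade‑off is a break‑even calculation: the premium model pays off when the rework it avoids is worth more than the extra API spend. The sketch below is only an illustration under stated assumptions. The API costs assume a 10,000‑input / 1,500‑output‑token request at the list prices quoted earlier, and the failure rates are hypothetical placeholders, not measured figures. It also assumes the premium model fails less often than the cheaper one; otherwise there is no break‑even point.

```python
def expected_cost_per_task(api_cost: float, failure_rate: float,
                           rework_cost: float) -> float:
    """API spend plus the expected cost of fixing a failed result."""
    return api_cost + failure_rate * rework_cost


def break_even_rework_cost(cheap_api: float, cheap_fail: float,
                           premium_api: float, premium_fail: float) -> float:
    """Rework cost above which the premium model is cheaper overall.

    Assumes cheap_fail > premium_fail.
    """
    return (premium_api - cheap_api) / (cheap_fail - premium_fail)


# API cost per request at list prices (10,000 input / 1,500 output tokens).
O3_API, O3_PRO_API = 0.032, 0.32
# HYPOTHETICAL failure rates, for illustration only; measure your own.
O3_FAIL, O3_PRO_FAIL = 0.10, 0.06

threshold = break_even_rework_cost(O3_API, O3_FAIL, O3_PRO_API, O3_PRO_FAIL)
print(f"o3-pro pays off once a failure costs more than ${threshold:.2f} to fix")
```

With these placeholder numbers the threshold is a few dollars per failure, which is why high‑value tasks tend to clear it while bulk generation does not.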

Infrastructure Readiness

Because o3‑pro imposes higher latency and throughput demands, organizations should audit their API rate limits, network capacity, and error‑retry strategies to avoid bottlenecks during peak usage.
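
As an example of an error‑retry strategy, the sketch below wraps any request function in exponential backoff with full jitter. It is a generic pattern rather than anything specific to OpenAI’s SDK: the name `call_with_backoff` and its parameters are assumptions made for illustration, and production code should catch only retryable errors (rate limits, timeouts) instead of every exception.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    request: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `request`, retrying failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return request()
        except Exception:  # Assumption: narrow this to retryable errors.
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error.
            # Delay ceiling doubles each attempt: 1s, 2s, 4s, ... up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: sleep a random time up to the ceiling.
            sleep(random.uniform(0, ceiling))
    raise ValueError("max_attempts must be at least 1")
```

A caller would pass a zero‑argument function that performs the API request, for example `call_with_backoff(lambda: send_request(payload))`, where `send_request` is a hypothetical helper. Because o3‑pro responses take longer, client timeouts should also be set generously so that slow but successful requests are not retried.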

In conclusion

OpenAI’s o3‑pro model sets a new bar for advanced reasoning, multimodal understanding, and integrated tool use in AI. Its benchmark gains and reliability enhancements make it an attractive option for mission‑critical applications, provided budgets and infrastructure can support the elevated costs. As the AI landscape evolves, o3‑pro’s role will solidify in areas demanding the highest levels of accuracy and contextual depth, while more cost‑sensitive workloads may continue leveraging base o‑series models or emerging mini variants.