OpenAI’s recent gpt-oss family (the gpt-oss-20b and gpt-oss-120b releases) explicitly targets two classes of deployment: lightweight local inference on consumer or edge hardware, and large-scale data-center inference. That release, together with the flurry of community tooling around quantization, low-rank adapters, and sparse Mixture-of-Experts (MoE) design patterns, makes it worth asking: how much compute do you actually need to run, fine-tune, and serve these models in production?

OpenAI GPT-OSS: How to Run It Locally or Self-Host on Cloud, Hardware Requirements

GPT-OSS is unusually well engineered for accessibility: the gpt-oss-20b variant is designed to run on a single consumer GPU (~16 GB VRAM) or on recent high-end laptops using quantized GGUF builds, while gpt-oss-120b, despite its 117B total parameters, ships with a sparse MoE design (only a fraction of the parameters is active per token) and MXFP4 quantization that let it run on a single H100-class GPU (≈80 GB) or on […]
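As a rough check on the memory figures quoted above, the sketch below estimates the footprint of the weights alone. It rests on several assumptions that are not from the article: every weight is counted at the MXFP4 rate of 4.25 bits per parameter (4-bit elements plus one 8-bit scale shared by each 32-element block), the 20B figure is the nominal size taken from the model name, and KV cache, activations, and runtime overhead are ignored. Real checkpoints may keep some tensors at higher precision, so treat the output as a lower-bound estimate rather than a measured requirement.

```python
# Back-of-envelope weight-memory estimate (a sketch, not a measurement).

def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9

# MXFP4: 4-bit elements plus one 8-bit shared scale per 32-element block.
MXFP4_BITS = 4 + 8 / 32   # 4.25 bits per parameter
BF16_BITS = 16            # unquantized baseline for comparison

# Parameter counts: 117B is the total quoted in the text; 20B is the
# nominal size implied by the model name (an assumption).
MODELS = {
    "gpt-oss-20b": 20e9,
    "gpt-oss-120b": 117e9,
}

estimates = {
    name: {
        "mxfp4": weight_memory_gb(n, MXFP4_BITS),
        "bf16": weight_memory_gb(n, BF16_BITS),
    }
    for name, n in MODELS.items()
}

for name, est in estimates.items():
    print(f"{name}: ~{est['mxfp4']:.1f} GB at MXFP4, ~{est['bf16']:.1f} GB at BF16")
```

Under these assumptions the 4-bit estimates land below the 16 GB and 80 GB device sizes mentioned above, while the BF16 estimate for the larger model does not fit on one 80 GB card. The headroom that remains has to cover the KV cache and activations, which grow with context length and batch size.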

Could GPT-OSS Be the Future of Local AI Deployment?

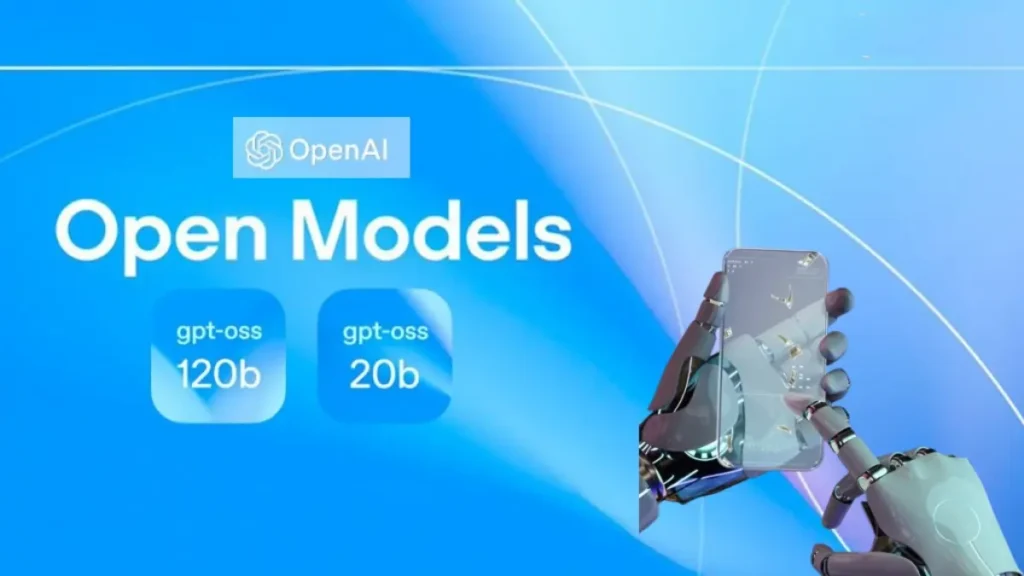

OpenAI has announced the release of GPT-OSS, a family of two open-weight language models—gpt-oss-120b and gpt-oss-20b—under the permissive Apache 2.0 license, marking its first major open-weight offering since GPT-2. The announcement, published on August 5, 2025, emphasizes that these models deliver state-of-the-art reasoning performance at a fraction of the cost associated with proprietary alternatives, and […]

GPT-OSS-20B API

gpt-oss-20b is a portable, open-weight reasoning model offering o3-mini-level performance, agent-friendly tool use, and full chain-of-thought support under a permissive license. While it is not as powerful as its 120B counterpart, it is well suited to on-device, low-latency, and privacy-sensitive deployments. Developers should weigh its known compositional limitations, especially on knowledge-heavy tasks, and tailor safety precautions accordingly.

Model Type: Chat