Veo 3.1 is Coming

Veo is Google’s family of AI video-generation models; Veo 3 and Veo 3 Fast are the current versions. Google has recently shipped major Veo 3 improvements (vertical 9:16 output, 1080p, Veo 3 Fast, and lower pricing), and rumors and social posts suggest that Veo 3.1 is imminent. However, Google has not yet published an official Veo 3.1 release bulletin. Below I list the confirmed facts, the likely changes, and a direct comparison with OpenAI’s Sora 2.

What Veo is

Veo is Google’s line of generative video models (from Google DeepMind, offered through Google Cloud and Gemini) that turn text or images into short videos. Veo 3 also generates audio natively: sound effects, ambient audio, and dialogue. It is offered on Google Cloud (Vertex AI and the Gemini API) for developers and enterprises, and its outputs carry built-in provenance through SynthID watermarks.

What Veo 3 already brought

- Text → video and image → video generation (image → video launched as a preview).

- Native audio generation (music, ambient sounds, dialogue): Veo 3 is the first Veo model with first-class audio.

- Two variants: high-quality Veo 3 and Veo 3 Fast (optimized for speed/iteration).

- Platform availability: Vertex AI and the Gemini API (paid preview, followed by general-availability updates in mid-2025).

- Safety and provenance: SynthID watermarking, plus usage controls (and, in some cases, approval requirements) for generating people, including children.

So, what is Veo 3.1 expected to bring?

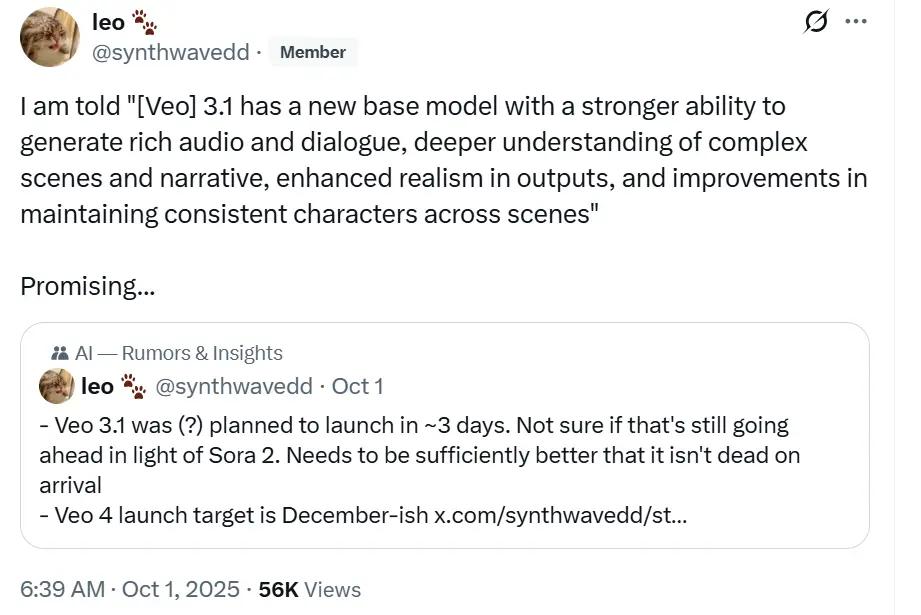

Status: At the time of writing, Google has not published an official Veo 3.1 product page or full release notes. However, several Google developer posts, community posts, and tweets point to a near-term incremental update (labelled “Veo 3.1”) that is expected to focus on iterative improvements to audio, quality, and format support rather than a new-generation rewrite.

Here are some inferences based on posts on X and the characteristics of Veo 3:

- Improved native audio: cleaner dialogue, multi-voice lip sync, and better SFX mixing and spatialization. Veo 3 already generates audio natively; Veo 3.1 could improve dialogue realism and language support to match the improvements competitors have recently shipped.

- Faster and cheaper generation for common outputs (closer feature parity for Veo 3 Fast, plus further optimizations).

- Better image → video fidelity and extended image-to-video support: more realism, smoother motion continuity, and better character/pose consistency across multi-frame clips, building on the image → video preview in Veo 3.

- Expanded aspect-ratio and resolution controls (9:16, 16:9, and 1080p available across more configurations). Google already added vertical output and 1080p; Veo 3.1 could extend those controls.

- Longer clips or a relaxed 8-second cap: community demand and Google’s previous roadmap suggest that longer duration is a likely target (Veo 3 is currently optimized for 8-second clips).

Comparing Veo 3 (and the expected Veo 3.1) with OpenAI’s Sora 2

Primary focus

- Veo 3 (Google): short, high-fidelity 8-second videos from text or image prompts; native audio; integrated into the Gemini app, the Gemini API, and Vertex AI; optimized for production use and developer API integration.

- Sora 2 (OpenAI): OpenAI’s flagship video-and-audio model, emphasizing physical realism, coherent motion, and synchronized dialogue and sound. It ships with a companion social app (Sora) that uses a cameo/consent system for inserting users’ likenesses, and it places heavy emphasis on realism and safety controls.

Strengths

- Veo (now): strong developer and enterprise integration (Vertex AI, Gemini API), production pricing options, a clear path for cloud customers, vertical and 1080p output, and a fast variant. A good fit for businesses building video generation into their pipelines.

- Sora 2: remarkable physical accuracy and multi-modal sync (dialogue + visuals), and a consumer-facing app integrated with social workflows (cameo feature, moderation). Great for creators wanting realistic narrative scenes and an app ecosystem.

How to access Veo now, and how to prepare for Veo 3.1

- Try it in Gemini (consumer / web / mobile): Veo generation is available in the Gemini apps (tap the “video” option in the prompt bar). Your subscription tier (Pro or Ultra) determines which Veo variants you can use.

- Programmatic / enterprise access: use the API through CometAPI (Veo model IDs are listed in the model docs). CometAPI provides veo3-pro, veo3-fast, and veo3; for details, see the Veo 3 documentation.

Practical tip (developer): to request vertical output, set the aspectRatio parameter (e.g., "9:16"), then check the model configuration (Veo 3 vs. Veo 3 Fast) and your plan’s resolution limits (720p vs. 1080p).
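That pre-flight check can be sketched in Python as below. This is a minimal illustration under stated assumptions: only the aspectRatio parameter and the model IDs veo3, veo3-fast, and veo3-pro come from the text above; the other field names (`model`, `prompt`, `resolution`) and the `plan_allows_1080p` flag, which stands in for your plan’s limits, are hypothetical. Check the Veo 3 documentation for the real request schema.

```python
# Hypothetical helper: validates settings and builds a request body for a Veo
# video-generation call. Only "aspectRatio" and the model IDs come from the
# article; every other field name is an assumption.
SUPPORTED_MODELS = {"veo3", "veo3-fast", "veo3-pro"}  # model IDs listed by CometAPI
SUPPORTED_ASPECT_RATIOS = {"16:9", "9:16"}
SUPPORTED_RESOLUTIONS = {"720p", "1080p"}


def build_veo_request(prompt: str, model: str = "veo3-fast",
                      aspect_ratio: str = "9:16", resolution: str = "720p",
                      plan_allows_1080p: bool = False) -> dict:
    """Return a request body dict, raising ValueError for unsupported settings."""
    if model not in SUPPORTED_MODELS:
        raise ValueError(f"unknown model: {model!r}")
    if aspect_ratio not in SUPPORTED_ASPECT_RATIOS:
        raise ValueError(f"unsupported aspect ratio: {aspect_ratio!r}")
    if resolution not in SUPPORTED_RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution!r}")
    # Resolution limits depend on your plan (720p vs. 1080p), so gate 1080p on it.
    if resolution == "1080p" and not plan_allows_1080p:
        raise ValueError("1080p is not available on this plan; use 720p")
    return {
        "model": model,               # hypothetical field name
        "prompt": prompt,             # hypothetical field name
        "aspectRatio": aspect_ratio,  # e.g. "9:16" for vertical output
        "resolution": resolution,     # hypothetical field name
    }


if __name__ == "__main__":
    body = build_veo_request("A barista pours latte art, close-up")
    print(body)
```

Failing fast on the client keeps you from spending quota on requests the backend would reject; if Veo 3.1 adds new aspect ratios or resolutions, only the allowed sets would need updating.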

How to access Sora 2 (today)

Sora app: Sora 2 launched alongside the Sora app, with an invite-only rollout in the US and Canada at launch. OpenAI has indicated that broader access and API availability will follow. If you want to try Sora 2 now, check CometAPI’s Sora 2 page: CometAPI already supports the Sora 2 API, which generates ~10-second social clips with an emphasis on realistic human motion.

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

Developers can access the Veo 3.1 API through CometAPI; the latest model version is always kept in sync with the official release. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing the API, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers prices far lower than the official pricing to help you integrate.
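Once you have a key, the client-side handling might look like the following standard-library Python sketch. The environment variable name `COMETAPI_KEY`, the Bearer-token `Authorization` header, and the placeholder URL are all assumptions for illustration, not CometAPI’s documented API; take the real endpoint and auth scheme from the API guide. The sketch only builds the request and does not send it.

```python
import json
import os
import urllib.request
from typing import Optional

# Placeholder only: replace with the endpoint given in CometAPI's API guide.
PLACEHOLDER_URL = "https://api.example.com/v1/video/generations"


def make_request(body: dict, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST request."""
    # Prefer an explicit key, else read it from a hypothetical environment variable,
    # so the key never has to be hard-coded in source files.
    key = api_key or os.environ.get("COMETAPI_KEY")
    if not key:
        raise RuntimeError("Set COMETAPI_KEY or pass api_key explicitly")
    data = json.dumps(body).encode("utf-8")
    req = urllib.request.Request(PLACEHOLDER_URL, data=data, method="POST")
    req.add_header("Authorization", f"Bearer {key}")  # assumed auth scheme
    req.add_header("Content-Type", "application/json")
    return req
```

For example, `make_request({"model": "veo3", "prompt": "..."})` returns a request object that `urllib.request.urlopen` could send once the placeholder URL is replaced with the real endpoint.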

Ready to go? Sign up for CometAPI today!