Developers and businesses face mounting complexity when integrating and managing diverse AI models. CometAPI addresses this as a unifying platform, offering a single gateway to over 500 AI models worldwide. By aggregating leading services, from large language models to cutting-edge multimodal systems, CometAPI streamlines development workflows, reduces costs, and accelerates time-to-market. This article covers the fundamentals of CometAPI, explores its inner workings, walks through the usage steps, and highlights the latest model updates in its ecosystem.

What is CometAPI?

A Unified AI Model Aggregation Platform

CometAPI is a developer-centric API aggregation platform that unifies access to more than 500 artificial intelligence models under a single, consistent interface. Instead of integrating separately with OpenAI’s GPT series, Anthropic’s Claude, Midjourney, Suno, Google’s Gemini, and others, developers send requests to CometAPI’s endpoint and specify the desired model by name. This consolidation dramatically simplifies integration efforts, reducing the overhead of managing multiple API keys, endpoints, and billing systems.
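As a rough illustration of "one endpoint, model chosen by name", the sketch below builds the same OpenAI-style payload for several models. The endpoint is the one used in the curl example later in this article, the model identifiers are the ones quoted in the release notes below, and `build_chat_payload` is a hypothetical helper, not part of any CometAPI SDK. Nothing is sent over the network.

```python
import json

# Endpoint as used in this article's curl example.
COMET_CHAT_URL = "https://api.cometapi.com/v1/chat/completions"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Return an OpenAI-style chat payload; only `model` changes per provider."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


# Model identifiers as they appear elsewhere in this article.
for model in ("gpt-4.1", "claude-opus-4-20250514", "deepseek-r1-0528"):
    payload = build_chat_payload(model, "Summarize this paragraph.")
    print(COMET_CHAT_URL, json.dumps(payload))
```

The request shape stays identical across providers, so switching vendors amounts to changing one string.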

Key Advantages for Developers and Enterprises

By centralizing models on a single platform, CometAPI provides:

- Simplified Billing: One unified invoice covering all model usage, avoiding fragmented vendor bills.

- Vendor Independence: Effortless switching between models without lock-in, ensuring long-term flexibility.

- High Concurrency & Low Latency: A serverless backbone delivers unlimited transaction-per-minute capacity and sub-200 ms response times for most text-based calls.

- Cost Optimization: Volume discounts of up to 20% on popular models help teams control their AI expenditures.
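One practical consequence of vendor independence is that a client can fall back to another model when the first choice fails. The following is a minimal sketch of that pattern, assuming a caller-supplied `send` function that performs the actual request; `complete_with_fallback` and `fake_send` are hypothetical names used only for illustration, and the failure is simulated.

```python
def complete_with_fallback(models, send):
    """Try each model name in order; return (model, reply) from the first success."""
    errors = {}
    for model in models:
        try:
            return model, send(model)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors[model] = str(exc)
    raise RuntimeError(f"all models failed: {errors}")


def fake_send(model):
    """Stand-in for a real API call; pretends the first model is unavailable."""
    if model == "gpt-4.1":
        raise TimeoutError("simulated timeout")
    return f"reply from {model}"


print(complete_with_fallback(["gpt-4.1", "claude-sonnet-4-20250514"], fake_send))
```

Because every model sits behind the same request format, the fallback list is just a list of names.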

How does CometAPI work?

Architecture and Key Components

CometAPI’s core is a high-performance, serverless infrastructure that auto-scales to meet demand. Incoming requests pass through a global load balancer and are routed to the most appropriate model provider endpoint—OpenAI, Anthropic, Google Cloud, and others—based on factors like latency and cost. Behind the scenes, CometAPI maintains:

- API-Key Management: Secure vaulting of user keys and automatic rotation support.

- Rate Multiplier Engine: Transparent pricing multipliers aligned with official model price sheets, ensuring cost consistency.

- Usage Tracking & Billing: Real-time metering dashboards that display token consumption, model usage, and spend alerts.
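The article does not describe how routing decisions are actually made, so the following is only a toy sketch of what "routing based on latency and cost" could look like. The `Upstream` type, the weights, and the numbers are all hypothetical; this is not CometAPI's algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Upstream:
    """A hypothetical upstream endpoint with observed latency and price."""
    name: str
    latency_ms: float
    cost_per_1k_tokens: float


def pick_upstream(candidates, latency_weight=0.5, cost_weight=0.5):
    """Pick the candidate with the lowest weighted, normalized score."""
    if not candidates:
        raise ValueError("no upstream candidates")
    # Normalize by the worst observed value so both terms fall in [0, 1].
    max_latency = max(c.latency_ms for c in candidates) or 1.0
    max_cost = max(c.cost_per_1k_tokens for c in candidates) or 1.0

    def score(c):
        return (latency_weight * c.latency_ms / max_latency
                + cost_weight * c.cost_per_1k_tokens / max_cost)

    return min(candidates, key=score)


candidates = [
    Upstream("region-a", latency_ms=120.0, cost_per_1k_tokens=0.010),
    Upstream("region-b", latency_ms=180.0, cost_per_1k_tokens=0.008),
]
print(pick_upstream(candidates).name)
```

Shifting the weights toward cost makes the cheaper but slower candidate win, which is the kind of trade-off a router of this type has to encode.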

How to use CometAPI?

1. Get an API Key

Sign up for a free CometAPI account to receive a token (formatted as sk-XXXXX) in your dashboard. This token grants access to all integrated models and is used in the Authorization header of API requests.
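A small sketch of how the token ends up in the Authorization header. It assumes the key is stored in an environment variable named `COMETAPI_KEY` (the name used in this article's Python example) and falls back to the `sk-XXXXX` placeholder; `auth_headers` is a hypothetical helper.

```python
import os


def auth_headers(api_key: str) -> dict:
    """Build the headers used by OpenAI-format requests."""
    if not api_key:
        raise ValueError("API key is empty")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


# Read the key from the environment; fall back to the article's placeholder.
api_key = os.getenv("COMETAPI_KEY") or "sk-XXXXX"
headers = auth_headers(api_key)
print(sorted(headers))  # header names only, so the key itself is never printed
```

Keeping the key in the environment rather than in source code avoids committing it to version control by accident.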

2. Configure the Base URL

In your codebase, set the base URL to:

https://www.cometapi.com/console/

All subsequent calls—whether text completion, image generation, or file search—are routed through this single endpoint.

Clients differ in how much of the request path they append themselves, so depending on the tool you may need one of the following addresses:

- https://www.cometapi.com/console/

- https://api.cometapi.com/v1

- https://api.cometapi.com/v1/chat/completions
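The last two addresses differ only in whether the `/chat/completions` path is included. A minimal sketch of converting between the two forms is shown below; `chat_completions_url` and `sdk_base_url` are hypothetical helpers, and the console address is deliberately not handled here.

```python
CHAT_PATH = "/chat/completions"


def chat_completions_url(base_url: str) -> str:
    """Return the full chat endpoint for a base URL, with or without the path."""
    trimmed = base_url.rstrip("/")
    if trimmed.endswith(CHAT_PATH):
        return trimmed
    return trimmed + CHAT_PATH


def sdk_base_url(url: str) -> str:
    """Strip the chat path for clients that append it themselves."""
    trimmed = url.rstrip("/")
    if trimmed.endswith(CHAT_PATH):
        return trimmed[: -len(CHAT_PATH)]
    return trimmed


print(chat_completions_url("https://api.cometapi.com/v1"))
print(sdk_base_url("https://api.cometapi.com/v1/chat/completions"))
```

Raw HTTP tools generally want the full endpoint, while SDK-style clients such as the OpenAI Python library add the path on their own and expect the shorter form.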

3. Make API Calls in OpenAI Format

CometAPI accepts requests following the OpenAI API schema. For example, to generate text with GPT-4.1:

curl https://api.cometapi.com/v1/chat/completions \
  -H "Authorization: Bearer sk-XXXXX" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-4.1",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'

Responses and error codes mirror those of the original model providers, enabling seamless migration of existing OpenAI-based integrations.

Replace sk-XXXXX with the API key you obtained during registration.
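Since responses follow the OpenAI schema, the reply text sits in `choices[0].message.content` and failures arrive in an `error` object. The sketch below parses both cases from hand-written sample bodies (not real CometAPI output); `extract_reply` is a hypothetical helper.

```python
import json


def extract_reply(body: str) -> str:
    """Return the assistant text, or raise with the provider's error message."""
    data = json.loads(body)
    if "error" in data:
        raise RuntimeError(data["error"].get("message", "unknown error"))
    return data["choices"][0]["message"]["content"]


# Hand-written sample bodies in the OpenAI response shape, not real output.
ok_body = json.dumps({
    "choices": [{"index": 0, "message": {"role": "assistant", "content": "Hello!"}}],
    "usage": {"prompt_tokens": 5, "completion_tokens": 2, "total_tokens": 7},
})
error_body = json.dumps({"error": {"message": "Invalid API key", "type": "invalid_request_error"}})

print(extract_reply(ok_body))
```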

Code Example:

import os

from openai import OpenAI

# The OpenAI SDK appends /chat/completions itself, so the base URL stops at /v1.
client = OpenAI(
    base_url="https://api.cometapi.com/v1",
    api_key=os.getenv("COMETAPI_KEY"),
)

response = client.chat.completions.create(
    model="claude-opus-4-20250514",
    messages=[
        {"role": "system", "content": "You are a coding assistant."},
        {"role": "user", "content": "Refactor this function to improve performance."},
    ],
)

# choices is a list; the reply text is on the first choice.
print(response.choices[0].message.content)

This snippet initializes the OpenAI client with CometAPI as its base URL, sends a chat completion request to Claude Opus 4 in instant mode, and prints the assistant’s reply.

4. Software Integration Guide

Integrating CometAPI with third-party platforms (such as n8n, Cherry Studio, Zapier, and Cursor) can significantly improve development efficiency, reduce costs, and strengthen governance. Its advantages:

- Third-party tools usually support calling OpenAI-compatible interfaces through HTTP/REST or dedicated nodes. With CometAPI as the “behind-the-scenes” provider, you can reuse existing workflows or assistant settings unchanged; you only need to point the Base URL to https://www.cometapi.com/console/.

- After connecting to CometAPI, there is no need to switch between multiple vendors. You can try different models (such as GPT-4o, Claude 3.7, Midjourney, etc.) in the same environment with one click, greatly accelerating the progress from concept to verifiable prototype.

- Compared with settling with each model provider separately, CometAPI aggregates bills from all providers and offers tiered discounts based on usage, saving 10–20% on average.

Tutorials for popular applications:

- A Guide to Setting Up Cursor With CometAPI

- How to Use Cherrystudio with CometAPI

- How to Use n8n with CometAPI

Typical application scenarios:

- Automated processes in n8n: Use the HTTP Request node to generate images (Midjourney) first, and then call GPT-4.1 to write captions for them, to achieve one-click multimodal output.

- Desktop assistant in Cherry Studio: Register CometAPI as a custom OpenAI provider, and administrators can precisely enable or disable the required models in the GUI, allowing end users to switch freely in the same dialogue window. Cherry Studio automatically pulls the latest model list from /v1/models, and any newly connected CometAPI models are displayed in real time, eliminating manual updates.
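The model-list refresh described for Cherry Studio can be approximated as "poll /v1/models and diff against the previous result". The sketch below assumes the endpoint returns the OpenAI-style list shape (`{"object": "list", "data": [{"id": ...}]}`), which the article does not confirm; the sample body is hand-written and no request is made.

```python
import json

# Base URL from this article plus the /models path it mentions.
MODELS_URL = "https://api.cometapi.com/v1/models"


def model_ids(body: str) -> list:
    """Extract sorted model identifiers from an OpenAI-style list response."""
    data = json.loads(body)
    return sorted(item["id"] for item in data.get("data", []))


def newly_added(previous, current):
    """Return identifiers present now but absent from the previous poll."""
    return sorted(set(current) - set(previous))


# Hand-written sample response, assumed to match the OpenAI list format.
sample = json.dumps({
    "object": "list",
    "data": [{"id": "gpt-4.1"}, {"id": "deepseek-r1-0528"}],
})
print(newly_added(["gpt-4.1"], model_ids(sample)))
```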

Which models does CometAPI support?

By bridging providers, CometAPI offers an expansive, ever-growing library of AI capabilities.

Model Categories

- Large Language Models: GPT (o4-mini, o3, GPT-4o, GPT-4.1, etc.), Claude (Claude Sonnet 4, Claude Opus 4, etc.), Google Gemini (Gemini 2.5), Qwen (Qwen 3, QwQ), Mistral and LLaMA-based models (mistral-large, Llama 4 series), DeepSeek models, etc.

- Vision & Multimodal: Midjourney (Fast Imagine, Custom Zoom), Luma, GPT-image-1, Stable Diffusion models (SDXL, etc.).

- Audio & Music: Suno (Music, Lyrics), Udio.

- Specialized & Reverse Engineering: Grok, FLUX, Kling, text-embedding-ada-002, etc.

Recent Model Releases

Gemini 2.5 Pro Preview (2025-06-06): Introduces a 1 million-token context window and native multimodal capabilities for advanced reasoning tasks. The latest official version is available in CometAPI as the Gemini 2.5 Pro Preview API (model: gemini-2.5-pro-preview-06-05).

DeepSeek R1 (2025-05-28): Offers large-scale reasoning performance for complex query tasks, now available via the standard chat endpoint. The latest official version is available in CometAPI as the DeepSeek R1 API (model: deepseek-r1-0528).

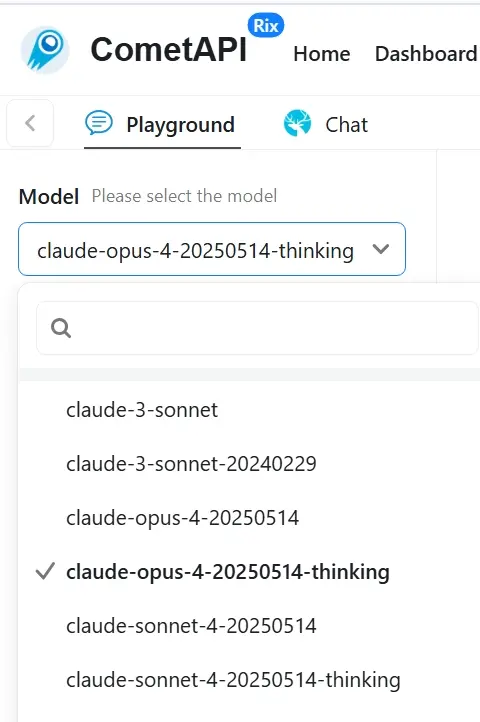

Claude 4 (2025-05-23): Enhances coding and logical reasoning within Anthropic’s Claude series, optimized for cost-effective enterprise use. The latest official versions are available in CometAPI as the Claude Opus 4 API (model: claud; claude-opus-4-20250514-thinking) and the Claude Sonnet 4 API (model: claude-sonnet-4-20250514; claude-sonnet-4-20250514-thinking). We’ve also added cometapi-sonnet-4-20250514-thinking specifically for use in Cursor.

GPT-Image-1 (2025-04-27): Brings GPT-4o’s image-generation prowess directly to developers, supporting high-fidelity outputs and diverse stylistic renderings. The GPT-image-1 API is also among the top three models by website visits.

GPT-4.1 Series (2025-04-15): Includes mini and nano variants that balance performance with reduced latency and costs, setting new benchmarks on long-context tasks. The latest official version is available in CometAPI as the GPT-4.1 API (model: gpt-4.1; gpt-4.1-nano; gpt-4.1-mini).
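For reference, the identifiers quoted in the release notes above can be kept in a small lookup table. The sketch below is only a convenience pattern: `RECENT_MODELS` and `pick_model` are hypothetical names, the table contains only identifiers that appear in full in this article, and it treats the `-thinking` suffix as the marker for thinking variants, as the Claude entries suggest.

```python
# Identifiers copied from the release notes in this article.
RECENT_MODELS = {
    "Gemini 2.5 Pro Preview": ["gemini-2.5-pro-preview-06-05"],
    "DeepSeek R1": ["deepseek-r1-0528"],
    "Claude Sonnet 4": [
        "claude-sonnet-4-20250514",
        "claude-sonnet-4-20250514-thinking",
    ],
    "GPT-4.1": ["gpt-4.1", "gpt-4.1-mini", "gpt-4.1-nano"],
}


def pick_model(family: str, thinking: bool = False) -> str:
    """Return the first identifier in a family, optionally the -thinking variant."""
    ids = RECENT_MODELS[family]
    matches = [m for m in ids if m.endswith("-thinking") == thinking]
    if not matches:
        raise LookupError(f"no matching variant for {family!r}")
    return matches[0]


print(pick_model("Claude Sonnet 4", thinking=True))
```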

Unified Access to Emerging Models

CometAPI announced support for advanced reverse-engineering models and expanded access to Midjourney and Suno for generative art and music applications. Users can now invoke these models directly via CometAPI’s unified API, with discounts of up to 20% on popular offerings.

At present, CometAPI is keeping pace with official releases. The Suno Music API has been updated to version v4.5 and the Midjourney API to version v7. For specific API details and calls, please refer to the API docs written specifically for Midjourney and Suno. If you want to call other visual models to generate images or videos, such as Sora or Runway, please refer to the dedicated reference documents on the website.

Note: This list is illustrative, not exhaustive. CometAPI continuously adds new providers and model releases as soon as they become publicly available—often within days of official launch—so you’ll always have instant access to the latest breakthroughs without changing your code.

To see the full, up-to-date roster of all 500+ models, visit the Model Page.

FAQs

1. What pricing model does CometAPI use?

CometAPI applies transparent multipliers to official model prices, and the multipliers are consistent across providers. Billing is pay-as-you-go with detailed token-based metering and optional volume discounts for high-usage accounts.
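To make the pricing model concrete, the sketch below computes a charge as official per-token price times a multiplier, minus a volume discount. The formula is an assumed reading of the description above, and the prices, multiplier, and discount in the example are made-up numbers, not CometAPI's actual rates.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_m, output_price_per_m,
                  multiplier=1.0, discount=0.0):
    """Estimate spend in the price sheet's currency.

    Prices are per million tokens; `multiplier` and `discount` are
    hypothetical parameters standing in for the platform's rate
    multiplier and volume discount.
    """
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    base = (prompt_tokens * input_price_per_m
            + completion_tokens * output_price_per_m) / 1_000_000
    return base * multiplier * (1.0 - discount)


# Made-up prices: 2.00 per million input tokens, 8.00 per million output tokens.
cost = estimate_cost(1_000_000, 500_000, 2.0, 8.0, multiplier=1.0, discount=0.2)
print(round(cost, 2))
```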

2. Can I monitor usage in real time?

Yes. The CometAPI dashboard displays live metrics on API calls, token usage, and spend. You can set custom alerts to notify your team when thresholds are reached.
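The alerting itself is configured in the dashboard, but the underlying idea is simple threshold crossing. A minimal client-side sketch, assuming you already have two consecutive spend readings from somewhere; `crossed_thresholds` is a hypothetical helper and the figures are illustrative.

```python
def crossed_thresholds(previous_spend, current_spend, thresholds):
    """Return thresholds crossed between two readings, in ascending order."""
    return [t for t in sorted(thresholds) if previous_spend < t <= current_spend]


# Illustrative readings and alert levels, all in the same currency.
for level in crossed_thresholds(40.0, 85.0, [100.0, 50.0, 80.0]):
    print(f"spend alert: passed {level}")
```

Comparing against the previous reading means each threshold fires once, rather than on every poll after it has been exceeded.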

3. How do I handle large context windows?

CometAPI supports models with context windows of up to 1 million tokens (e.g., Gemini 2.5 Pro, GPT-4.1-nano), enabling complex, long-form tasks. Simply specify the model name in your API call, and CometAPI routes the request accordingly.
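Before sending a very long document, it can help to check roughly whether it fits. The sketch below uses the 1 million-token figure quoted above and a crude four-characters-per-token heuristic, which is an assumption and not a real tokenizer; actual counts vary by model and language.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # figure quoted in this article
CHARS_PER_TOKEN = 4  # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count by rounding up len(text) / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_context(text: str, reserved_for_output: int = 8_192,
                 window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Check whether the prompt plus reserved output tokens fits the window."""
    return estimate_tokens(text) + reserved_for_output <= window


print(fits_context("a" * 400))
```

For anything close to the limit, a proper tokenizer for the target model gives a far more reliable answer than this estimate.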

4. What security measures are in place?

All API traffic is encrypted via TLS 1.3. API keys are securely stored in hardware-backed key vaults. For enterprise customers, encrypted reasoning items and data retention controls meet zero-retention compliance standards.

5. How can I get support or report issues?

CometAPI provides detailed documentation, an active Discord community, and email support. Enterprise customers also receive dedicated account management and SLAs for uptime and performance.

Conclusion

By consolidating hundreds of AI models under one API, CometAPI empowers developers to iterate faster, control costs, and remain vendor-agnostic, all while benefiting from the latest breakthroughs. Whether you’re building the next generation of chatbots, multimedia content generators, or data-driven analytics pipelines, CometAPI provides the unified foundation you need to accelerate your AI ambitions.