In the rapidly advancing world of artificial intelligence, the release of each new large language model (LLM) represents more than a numerical version bump — it signals progress in reasoning, coding ability, and human–machine collaboration. In late September 2025, Zhipu AI (Z.ai) unveiled GLM-4.6, the newest member of its General Language Model family. Building on the robust architecture and strong reasoning foundation of GLM-4.5, this update refines the model’s capabilities in agentic reasoning, coding intelligence, and long-context understanding, while remaining open and accessible to developers and enterprises alike.

What is GLM-4.6?

GLM-4.6 is a major release in the GLM (General Language Model) series designed to balance high-capacity reasoning with practical developer workflows. At a high level, the release targets three tightly related use cases: (1) advanced code generation and reasoning about code, (2) extended-context tasks that require the model to understand very long inputs, and (3) agentic workflows where the model must plan, call tools, and orchestrate multi-step processes. The model is distributed both through cloud APIs and on community model hubs, enabling hosted as well as self-hosted deployment.

Practically, GLM-4.6 is positioned as a “developer-first” flagship: its improvements are not only about raw benchmark numbers but about capabilities that materially change how developers build assistants, code copilots, and document- or knowledge-driven agents. The release emphasizes instruction tuning for tool use, targeted improvements in code quality and debugging, and support for very long contexts without a steep drop in output quality as inputs grow.

What does GLM-4.6 aim to solve?

- Reduce the friction of working with long codebases and large documents by supporting longer effective context windows.

- Improve the reliability of code generation and debugging, producing more idiomatic, testable outputs.

- Increase the robustness of agentic behaviors — planning, tool use, and multi-step task execution — through targeted instruction and reinforcement-style tuning.

From GLM-4.5 to GLM-4.6, what changed in practice?

- Context scaling: the jump from 128K to 200K tokens is the single biggest UX/architecture change for users. Long documents, entire codebases, or extended agent transcripts can now be processed in a single context window, which reduces the need for ad hoc chunking or expensive retrieval loops in many workflows.

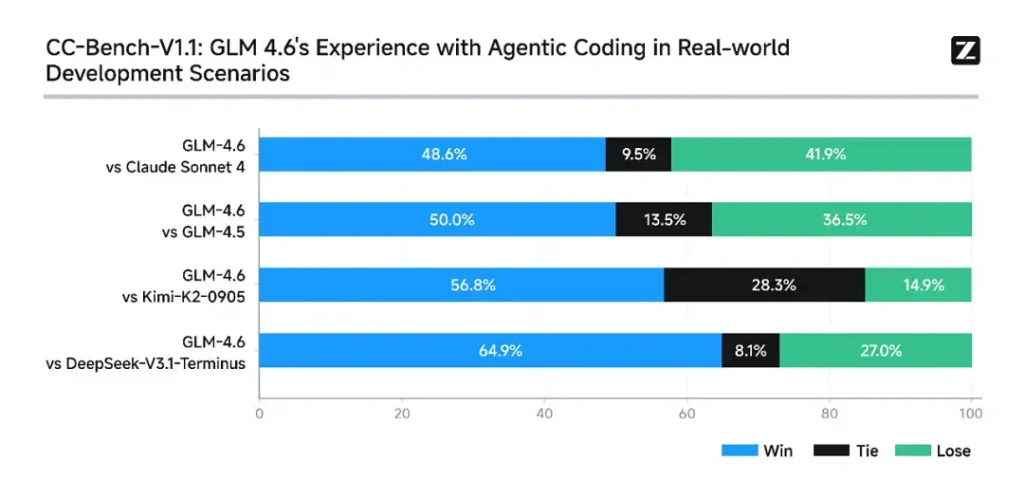

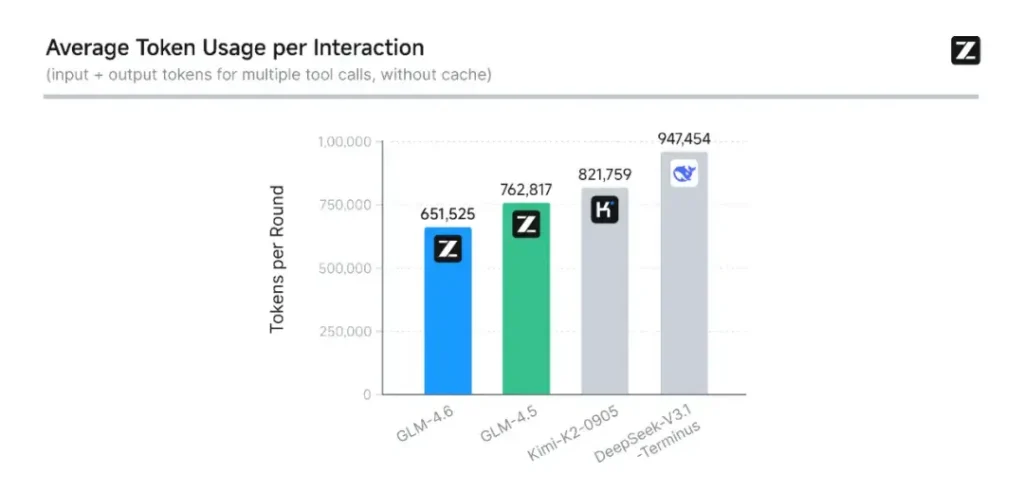

- Coding and real-world evaluation: Z.ai extended CC-Bench (its coding & completion benchmark) with harder, real-task trajectories and reports that GLM-4.6 finishes tasks with ~15% fewer tokens than GLM-4.5 while improving success rates in complex multi-turn engineering tasks. This points to better token efficiency as well as raw capability gains in applied coding scenarios (source: Z.ai).

- Agent and tool integration: GLM-4.6 includes better support patterns for tool calling and search agents—important for products that rely on the model to orchestrate web search, code execution, or other microservices.

What are the key features of GLM-4.6?

1. Extended Context Window to 200K Tokens

One of the most headline-grabbing features of GLM-4.6 is its massively extended context window. Expanding from 128K in the previous generation to 200K tokens, GLM-4.6 can process entire books, complex multi-document datasets, or hours of dialogue in a single session. This expansion not only enhances comprehension but also enables consistent reasoning over long inputs — a major leap for document summarization, legal analysis, and software engineering workflows.
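A quick way to decide whether a job fits in one 200K-token window, or still needs chunking, is to budget tokens up front. The sketch below assumes a crude 4-characters-per-token heuristic and an 8K-token output reserve; both are placeholders, and real counts should come from the model's own tokenizer.

```python
# Rough context-budget check for a single 200K-token window.
# ASSUMPTION: ~4 characters per token is a crude heuristic for English text;
# use GLM-4.6's actual tokenizer for real counts.
CONTEXT_WINDOW = 200_000
CHARS_PER_TOKEN = 4  # hypothetical heuristic


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(docs: list[str], reserved_for_output: int = 8_000) -> bool:
    """True if all docs plus an output reserve fit in one window."""
    total = sum(estimate_tokens(d) for d in docs)
    return total + reserved_for_output <= CONTEXT_WINDOW


def chunk_text(text: str, max_tokens: int) -> list[str]:
    """Fallback: split text into pieces of at most max_tokens (estimated)."""
    step = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + step] for i in range(0, len(text), step)]


book = "x" * 600_000  # stands in for a ~150K-token document
print(fits_in_context([book]))                # True
print(fits_in_context([book, book]))          # False
print(len(chunk_text(book + book, 100_000)))  # 3
```

Under these assumptions, a 600,000-character document (about 150K estimated tokens) fits in one GLM-4.6 window but would not have fit in 128K; two such documents would still need chunking or retrieval.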

2. Improved Coding Intelligence

Zhipu AI’s internal CC-Bench benchmark, a suite of real-world programming tasks, shows that GLM-4.6 achieves notable improvements in coding accuracy and efficiency. The model can produce syntactically correct, logically sound code while using approximately 15% fewer tokens than GLM-4.5 for equivalent tasks. This token efficiency means faster, cheaper completions without sacrificing quality — a vital factor for enterprise deployment.
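To see what a ~15% token reduction means in practice, here is the arithmetic on a hypothetical workload. The baseline token count and the per-million-token price are made-up placeholders, not published figures.

```python
# Worked arithmetic for a ~15% token reduction.
# ASSUMPTION: both constants below are hypothetical placeholders,
# not published GLM-4.5/GLM-4.6 usage or pricing figures.
BASELINE_TOKENS = 2_000_000  # hypothetical tokens GLM-4.5 spends on a batch of tasks
PRICE_PER_MILLION = 2.00     # hypothetical USD per 1M tokens
REDUCTION = 0.15             # ~15% fewer tokens, as reported on CC-Bench


def cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD for a token count at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


new_tokens = round(BASELINE_TOKENS * (1 - REDUCTION))
old_cost = cost(BASELINE_TOKENS, PRICE_PER_MILLION)
new_cost = cost(new_tokens, PRICE_PER_MILLION)
print(new_tokens, f"${old_cost:.2f} -> ${new_cost:.2f}")  # 1700000 $4.00 -> $3.40
```

Because the saving is proportional, the same ~15% applies to the bill at any price and, roughly, to generation time on token-bound workloads.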

3. Advanced Reasoning and Tool Integration

Beyond raw text generation, GLM-4.6 shines in tool-augmented reasoning. It has been trained and aligned for multi-step planning and for orchestrating external systems — from databases to search tools to execution environments. In practice, this means GLM-4.6 can act as the “brain” of an autonomous AI agent, deciding when to call external APIs, how to interpret results, and how to maintain task continuity across sessions.
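In an agent loop, the model only requests tool calls; the application executes them and feeds the results back as new messages. A minimal dispatcher might look like this, assuming an OpenAI-style `tool_calls` response shape (check Z.ai's API reference for the exact schema). `search_docs` and `run_tests` are hypothetical tools.

```python
import json

# ASSUMPTION: tool calls arrive in an OpenAI-style schema (a "tool_calls"
# list with a function name and JSON-string arguments).
# The two tools are hypothetical stand-ins for real services.


def search_docs(query: str) -> str:
    return f"3 results for '{query}'"  # placeholder for a real search backend


def run_tests(path: str) -> str:
    return f"all tests passed in {path}"  # placeholder for a sandboxed runner


TOOLS = {"search_docs": search_docs, "run_tests": run_tests}


def dispatch_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each requested tool and build the 'tool' messages to send back."""
    results = []
    for call in assistant_message.get("tool_calls", []):
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])
        fn = TOOLS.get(name)
        # Unknown tools produce an error message instead of crashing the loop.
        content = fn(**args) if fn else f"error: unknown tool {name}"
        results.append({"role": "tool", "tool_call_id": call["id"], "content": content})
    return results


# Example assistant turn shaped like a tool-calling response.
message = {
    "role": "assistant",
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "search_docs",
                      "arguments": json.dumps({"query": "GLM-4.6 context"})}},
        {"id": "call_2", "type": "function",
         "function": {"name": "run_tests",
                      "arguments": json.dumps({"path": "tests/"})}},
    ],
}
for m in dispatch_tool_calls(message):
    print(m["tool_call_id"], m["content"])
```

The returned messages are appended to the conversation and sent back to the model, which then decides whether to call more tools or answer.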

4. Enhanced Natural Language Alignment

Through continued reinforcement learning and preference optimization, GLM-4.6 delivers smoother conversational flow, better style matching, and stronger safety alignment. The model adapts its tone and structure to fit context — whether it’s formal documentation, educational tutoring, or creative writing — improving user trust and readability.

What architecture powers GLM-4.6?

Is GLM-4.6 a Mixture-of-Experts model?

Yes. GLM-4.6 continues the Mixture-of-Experts (MoE) design of GLM-4.5, with scaling parameters and model design choices that emphasize specialization for agentic reasoning, coding, and efficient inference. The GLM-4.5 report gives the clearest public description of the family’s MoE strategy and training regimen (multi-stage pretraining, expert model iteration, reinforcement learning for alignment); GLM-4.6 applies those lessons while tuning context length and task-specific capabilities.

Inference method continuity: the GLM team indicates that GLM-4.5 and GLM-4.6 share the same fundamental inference pipeline, so existing deployment setups can be upgraded with minimal friction. This reduces operational risk for teams already using GLM-4.x.
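The core MoE idea, that each token runs through only a few experts, can be illustrated with generic top-k routing. This is a toy sketch with softmax gating over the top-k router scores and scalar functions standing in for expert networks; GLM-4.6's actual router, expert count, and k are not reproduced here.

```python
import math

# Generic top-k Mixture-of-Experts routing for ONE token.
# ASSUMPTION: softmax gating over the top-k router logits and toy scalar
# "experts"; GLM-4.6's real router, expert count, and k differ.


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_forward(x: float, router_logits: list[float], experts, k: int = 2):
    """Run only the k highest-scoring experts and mix their outputs."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


# Four toy experts; only two of them execute for this token.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
out, used = moe_forward(3.0, [0.1, 2.0, 2.0, -1.0], experts, k=2)
print(used, out)  # [1, 2] 7.5
```

Here only experts 1 and 2 execute for the token, so per-token compute tracks the number of active experts rather than the total parameter count.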

Practical architecture notes for engineers

- Parameter footprint vs. activated compute: Large parameter totals (hundreds of billions) do not translate directly into equivalent compute on every request. In an MoE model only a subset of experts activates for each token, giving a more favorable cost/throughput tradeoff for many workloads.

- Weight precision and formats: The public weights are distributed in BF16 and F32 formats, and community quantizations (GGUF, 4-bit and 8-bit) are appearing quickly; these allow teams to run GLM-4.6 on varied hardware profiles.

- Inference stack compatibility: Z.ai documents vLLM and other modern LLM runtimes as compatible inference backends, which makes GLM-4.6 feasible for both cloud and on-prem deployments.
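The total-vs-active distinction and the choice of precision both show up in simple memory arithmetic (bytes = parameters × bits / 8). The sketch uses 355B total and 32B active parameters, the figures Z.ai published for GLM-4.5, purely as illustrative inputs; it ignores KV cache, activations, and runtime overhead, which can add substantially at long context lengths.

```python
# Back-of-envelope weight memory: bytes = parameters * bits / 8.
# ASSUMPTION: 355B total / 32B active are the figures published for
# GLM-4.5, used here only as illustrative inputs. KV cache, activations,
# and runtime overhead are ignored.
TOTAL_PARAMS = 355e9
ACTIVE_PARAMS = 32e9
BITS = {"F32": 32, "BF16": 16, "8-bit": 8, "4-bit": 4}


def weight_gib(params: float, bits: int) -> float:
    """Memory needed to store `params` weights at `bits` each, in GiB."""
    return params * bits / 8 / 2**30


for fmt, bits in BITS.items():
    print(f"{fmt:>5}: {weight_gib(TOTAL_PARAMS, bits):7.1f} GiB to hold every expert")

# Per-token compute scales with the active parameters, not the total.
print(f"active share per token: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")
```

Storing all experts is what dominates memory (roughly 660 GiB in BF16, a quarter of that at 4-bit), while per-token compute follows the much smaller active set.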

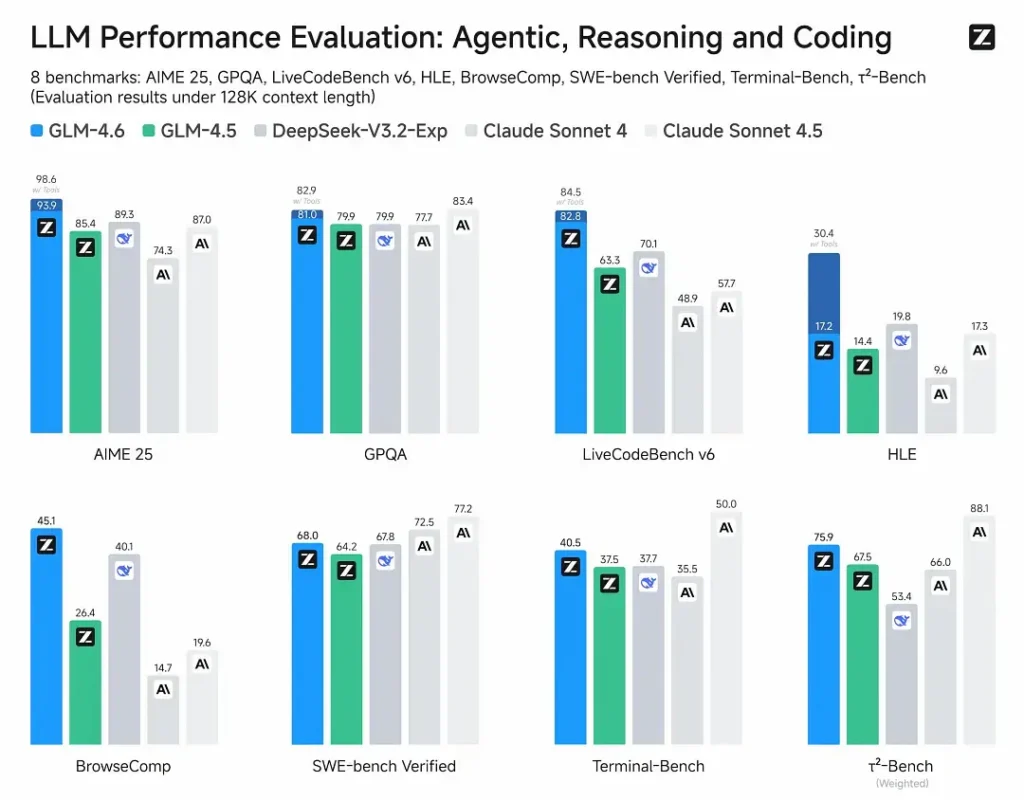

Benchmark performance: how does GLM-4.6 perform?

What benchmarks were reported?

Z.ai evaluated GLM-4.6 across a suite of eight public benchmarks spanning agentic tasks, reasoning, and coding. They also extended CC-Bench (a human-evaluated, real-task coding benchmark run in Docker-isolated environments) to better simulate production engineering tasks (front-end development, testing, algorithmic problem solving). On these tasks GLM-4.6 showed consistent improvements over GLM-4.5.

Coding performance

- Real-task wins: In CC-Bench human evaluations, GLM-4.6 reached near parity with Anthropic’s Claude Sonnet 4 in head-to-head, multi-turn tasks—Z.ai reports a 48.6% win rate in their Docker-isolated, human-judged evaluations (interpretation: near 50/50 with Claude Sonnet 4 on their curated set). At the same time, GLM-4.6 outperformed a number of domestic open models (e.g., DeepSeek variants) on their tasks.

- Token efficiency: Z.ai reports ~15% fewer tokens used to finish tasks compared with GLM-4.5 in CC-Bench trajectories—this matters for both latency and cost.
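Whether a 48.6% win rate counts as “near parity” depends on how many comparisons sit behind it, and a Wilson score interval makes that concrete. The comparison counts below are hypothetical, and ties, if the evaluation recorded them, would need separate handling.

```python
import math

# How close is a 48.6% win rate to a coin flip? A Wilson score interval.
# ASSUMPTION: the comparison counts are hypothetical; the real count and
# tie handling should come from Z.ai's published CC-Bench data.


def wilson_interval(p_hat: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% confidence interval for a binomial proportion."""
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


for n in (50, 100, 500):
    lo, hi = wilson_interval(0.486, n)
    print(f"n={n:4d}: [{lo:.3f}, {hi:.3f}]  contains 0.5: {lo <= 0.5 <= hi}")
```

For tens to a few hundred comparisons, the interval comfortably includes 50%, which is consistent with reading the result as roughly even rather than as a clear win or loss.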

Reasoning and math

Z.ai reports improved reasoning ability and stronger tool-using performance for GLM-4.6 compared with GLM-4.5. Where GLM-4.5 emphasized hybrid “thinking” and direct-reply modes, GLM-4.6 increases robustness for multi-step reasoning, especially when integrated with search or execution tools.

Z.ai’s public messaging positions GLM-4.6 as competitive with leading international and domestic models on its chosen benchmarks: specifically, competitive with Claude Sonnet 4 and ahead of certain domestic alternatives such as DeepSeek variants in code/agent tasks. However, on some coding-specific sub-benchmarks GLM-4.6 still trails Claude Sonnet 4.5 (a more recent Anthropic release), so the landscape is one of close competition rather than outright dominance.

How to Access GLM-4.6

1. Through the Z.ai Platform: Developers can access GLM-4.6 directly via Z.ai’s API or chat interface (chat.z.ai). These hosted services allow for prompt experimentation and integration without local deployment. The API supports both standard text completion and structured tool-calling modes, which are essential for agentic workflows.

2. Open Weights on Hugging Face and ModelScope: For those who prefer local control, Zhipu AI has released GLM-4.6 model files on Hugging Face and ModelScope, including safetensors weights in BF16 and F32 precision. Community developers have already produced quantized GGUF versions, which lower the hardware requirements for local inference.

3. Integration Frameworks: GLM-4.6 integrates with major inference engines such as vLLM, SGLang, and LMDeploy, making it adaptable to modern serving stacks. This versatility lets enterprises choose between cloud, edge, and on-prem deployment depending on compliance or latency requirements.
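For hosted access, a request with a tool definition can be assembled with the Python standard library alone. The endpoint URL, the `glm-4.6` model id, and the OpenAI-style payload are assumptions to confirm against Z.ai’s API documentation; `search_docs` is a hypothetical tool and the key is a placeholder.

```python
import json
import urllib.request

# Build (but do not send) a chat-completions request with one tool definition.
# ASSUMPTIONS: endpoint URL, model id "glm-4.6", and the OpenAI-style payload
# shape must be confirmed against Z.ai's current API reference.
API_URL = "https://api.z.ai/api/paas/v4/chat/completions"  # assumed endpoint
API_KEY = "YOUR_API_KEY"  # placeholder


def build_request(prompt: str) -> urllib.request.Request:
    payload = {
        "model": "glm-4.6",
        "messages": [{"role": "user", "content": prompt}],
        "tools": [{
            "type": "function",
            "function": {
                "name": "search_docs",  # hypothetical tool
                "description": "Search internal documentation.",
                "parameters": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            },
        }],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {API_KEY}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_request("Summarize the release notes.")
print(req.get_method(), req.full_url)
# To send it for real: urllib.request.urlopen(req) with a valid key.
```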

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

GLM-4.6 will soon be available on CometAPI, so stay tuned! While we finalize the GLM-4.6 model upload, explore our other models on the Models page or try them in the AI Playground.

Developers can already access the GLM‑4.5 API through CometAPI, and the latest model version is kept in sync with the official release. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing the API, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a price far lower than the official price to help you integrate.

Ready to go? Sign up for CometAPI today!

Conclusion — Why GLM-4.6 matters now

GLM-4.6 is an important milestone in the GLM line because it bundles practical developer improvements — longer context windows, targeted coding and agentic optimizations, and tangible benchmark gains — with the openness and ecosystem flexibility that many organizations want. For teams building code assistants, long-form document agents, or tool-enabled automations, GLM-4.6 is worth evaluating as a top candidate.