Kimi K2 represents a significant leap in open‑source large language models, combining state‑of‑the‑art mixture‑of‑experts architecture with specialized training for agentic tasks. Below, we explore its origins, design, performance, and practical considerations for access and use.

What is Kimi K2?

Kimi K2 is a trillion‑parameter mixture‑of‑experts (MoE) language model developed by Moonshot AI. It has 1 trillion total parameters, of which 32 billion are “activated” (engaged per token), so capacity grows without a matching linear increase in inference cost. Trained with the Muon optimizer on over 15.5 trillion tokens, Kimi K2 remained stable at a scale previously considered impractical. The model is offered in two main variants:

Kimi‑K2‑Instruct: Pre‑tuned for conversational and agentic applications, ready for immediate deployment in dialogue systems and tool‑enabled workflows.

Kimi‑K2‑Base: A foundation model suitable for research, custom fine‑tuning, and low‑level experimentation.

How does its architecture work?

- Mixture‑of‑Experts (MoE): At each layer, a gating mechanism selects a small subset of experts (8 out of 384) to process each token, dramatically reducing compute for inference while maintaining a massive knowledge base.

- Specialized Layers: The network has 61 layers in total, one of which is dense, with 64 attention heads and hidden dimensions tailored for MoE efficiency.

- Context and Vocabulary: Supports up to 128 K tokens in context length and a 160 K‑token vocabulary, enabling long‑form understanding and generation.
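To make the routing step concrete, here is a minimal sketch of generic top‑k gating using the expert counts quoted above. It is an illustration only: the softmax over the selected logits and the random router scores are assumptions, and Kimi K2's actual gating function may differ in its details.

```python
import math
import random

NUM_EXPERTS = 384   # routed experts per MoE layer (from the text above)
TOP_K = 8           # experts selected per token (from the text above)

def route_token(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    This is generic top-k gating, not necessarily Kimi K2's exact scheme.
    """
    # Indices of the top_k largest router scores.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Numerically stable softmax restricted to the chosen experts.
    max_logit = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - max_logit) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Random scores stand in for the output of a learned router.
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_token(logits)

print(len(selected))          # 8 experts chosen for this token
print(TOP_K / NUM_EXPERTS)    # roughly 0.021 of routed experts run per token
```

Only the selected experts run their feed‑forward computation for the token, which is why compute per token scales with the 8 chosen experts rather than all 384.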

Why does Kimi K2 matter?

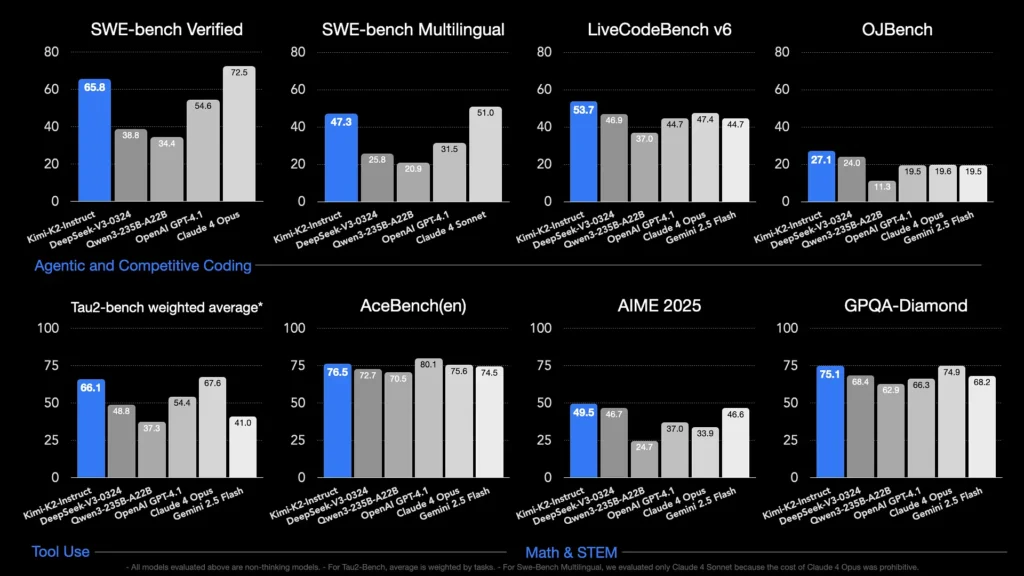

Kimi K2 pushes the frontier of open‑source AI by delivering performance on par with leading proprietary models, particularly in coding and reasoning benchmarks.

What benchmarks showcase its capabilities?

- LiveCodeBench v6: Achieves a pass@1 rate of 53.7%, leading open‑source models and ahead of closed systems such as GPT‑4.1 (44.7%).

- SWE‑bench Verified: Scores 65.8%, outperforming GPT‑4.1’s 54.6% and second only to Claude Sonnet 4 in publicly available comparison tests.

- MultiPL‑E & OJBench: Demonstrates robust multilingual coding ability (85.7% on MultiPL‑E) and reliable performance across real‑world programming challenges.

- Math-500: Attains 97.4%, surpassing GPT-4.1’s 92.4%, showcasing its prowess in formal mathematical reasoning.
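For readers unfamiliar with the pass@1 metric cited above, the sketch below shows a commonly used unbiased pass@k estimator for code benchmarks. The text does not state how many samples each benchmark draws per problem, so the sample counts here are hypothetical and serve only to show the arithmetic.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k estimate from n samples, of which c passed the tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results, k=1):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical data: three problems, 10 samples each, with 10, 5, and 0 passes.
example = [(10, 10), (10, 5), (10, 0)]
print(benchmark_pass_at_k(example, k=1))  # 0.5
```

For k = 1 the estimator reduces to the fraction of samples that pass, averaged over problems.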

How is it optimized for agentic tasks?

Beyond raw generation, Kimi K2 was trained on synthetic tool‑use scenarios, including Model Context Protocol (MCP) data, to call external tools, reason through multi‑step processes, and solve problems autonomously. This makes it particularly adept in environments like Cline, where it can orchestrate code execution, API interaction, and workflow automation.
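As a rough picture of what tool use looks like from the application side, the sketch below defines one tool and dispatches a single tool call. It assumes the JSON‑schema tool format used by OpenAI‑compatible chat APIs; the get_weather tool, the registry, and the hand‑written tool call are all hypothetical stand‑ins, not output from K2.

```python
import json

# Hypothetical tool: a real deployment would call an actual service here.
def get_weather(city):
    return {"city": city, "forecast": "sunny"}

# Tool description in the JSON-schema style of OpenAI-compatible APIs (assumed format).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return a short forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
REGISTRY = {"get_weather": get_weather}

def dispatch_tool_call(tool_call):
    """Run one model-requested tool call and return a 'tool' role message."""
    name = tool_call["function"]["name"]
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    # In this message format, arguments arrive as a JSON-encoded string.
    args = json.loads(tool_call["function"]["arguments"])
    result = REGISTRY[name](**args)
    return {"role": "tool", "tool_call_id": tool_call["id"],
            "content": json.dumps(result)}

# A hand-written stand-in for what the model might emit.
fake_call = {"id": "call_0", "type": "function",
             "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}}
reply = dispatch_tool_call(fake_call)
print(reply["content"])
```

The returned message would be appended to the conversation so the model can read the tool result and decide on its next step.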

How can I access Kimi K2?

Access options span official platforms, open‑source distributions, and third‑party integrations, catering to research, development, and enterprise needs.

Official Moonshot AI platform

Moonshot AI offers hosted inference via its platform, providing low‑latency API access to both Kimi‑K2‑Base and Kimi‑K2‑Instruct variants. Pricing is tiered based on compute consumption, with enterprise plans including priority support and on‑prem deployments. Users can sign up at the Moonshot AI website and retrieve API keys for immediate integration.

CometAPI

CometAPI has already integrated K2 into its offerings. It bundles K2 inference with managed GPU infrastructure, SLA guarantees, and scalable pricing tiers, so organizations can choose between pay‑as‑you‑go API usage and reserved capacity with volume discounts.

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications.

Developers can access the Kimi K2 API (kimi-k2-0711-preview) through CometAPI. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing, please make sure you have logged in to CometAPI and obtained an API key.

Third‑party tool integrations

- Cline: A popular code‑centric IDE that natively supports Kimi K2 via the cline:moonshotai/kimi-k2 provider, giving developers one‑click access to chat, code generation, and agentic workflows within their editor.

- Hugging Face Spaces: Community‑hosted demos and minimal UIs let users interact with K2‑Instruct models directly in the browser. A Hugging Face account is required, and performance may vary based on shared backend resources.

How do I use Kimi K2?

Once you’ve chosen an access method, you can employ K2 for a variety of tasks—from chat to code execution to autonomous agents.

Via API or platform SDK

- Authenticate: Retrieve your API key from Moonshot AI or CometAPI.

- Initialize Client: Use the official SDK (Python/JavaScript) or standard HTTP requests.

- Choose Model Variant:

  - Kimi‑K2‑Base for fine‑tuning and research.

  - Kimi‑K2‑Instruct for plug‑and‑play chat and agents.

  - On CometAPI, the model ID is kimi-k2-0711-preview.

- Send Prompts: Format inputs per the chat template (system, user, assistant roles) to leverage optimized instruction‑following behavior.
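The steps above can be sketched with plain HTTP from the Python standard library. This example assumes the provider exposes an OpenAI‑compatible chat‑completions endpoint; the base URL is a placeholder, and the KIMI_API_KEY environment variable name is hypothetical. The request is built but not sent, so the sketch runs without a key.

```python
import json
import os
import urllib.request

# Placeholder: substitute the base URL from your provider's documentation.
BASE_URL = "https://api.example.com/v1"
MODEL_ID = "kimi-k2-0711-preview"   # model ID quoted in the text above

def build_chat_request(user_prompt, api_key,
                       system_prompt="You are a helpful assistant."):
    """Build (but do not send) a chat-completions HTTP request."""
    payload = {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

# KIMI_API_KEY is a hypothetical variable name; a dummy value is used if unset.
request = build_chat_request(
    "Summarize MoE routing in one sentence.",
    api_key=os.environ.get("KIMI_API_KEY", "sk-placeholder"),
)
print(request.full_url)

# To send it (requires a real base URL, a valid key, and network access):
# with urllib.request.urlopen(request) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

An official SDK, where available, wraps the same request and response shapes; check the provider's documentation for the exact endpoint and response fields.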

Running locally with llama.cpp

For offline or self‑hosted setups, use the Unsloth‑quantized GGUF weights (245 GB for 1.8‑bit dynamic quant).

- Download Weights: From the Moonshot AI GitHub or Hugging Face repository.

- Install llama.cpp: Ensure you have sufficient disk (≥ 250 GB) and combined RAM+VRAM (≥ 250 GB) for ~5 tokens/s throughput.

- Launch Model:

./main --model kimi-k2-gguf.q8_0 --prompt "Your prompt here"

- Adjust Settings: Use recommended parameters (rope_freq_base, context_len) documented in the Unsloth guide for stable performance.
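A quick back‑of‑the‑envelope check helps explain the hardware figures above. The sketch below estimates weight size from parameter count and bits per weight, then tests a machine against the 250 GB thresholds from the text. The uniform 1.8 bits per weight is a simplification, and the example machine specs are hypothetical.

```python
def quantized_size_gb(num_params, bits_per_weight):
    """Approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

def fits_locally(disk_gb, ram_gb, vram_gb, required_gb=250):
    """Check the disk and combined RAM+VRAM thresholds quoted above."""
    return disk_gb >= required_gb and (ram_gb + vram_gb) >= required_gb

TOTAL_PARAMS = 1e12  # 1 trillion parameters (from the text)

# Uniform 1.8 bits per weight is an idealization of the dynamic quant.
print(quantized_size_gb(TOTAL_PARAMS, 1.8))                # 225.0

# Hypothetical machines.
print(fits_locally(disk_gb=500, ram_gb=192, vram_gb=48))   # False: 240 GB combined
print(fits_locally(disk_gb=500, ram_gb=256, vram_gb=24))   # True: 280 GB combined
```

The quoted 245 GB file is larger than the 225 GB uniform estimate, plausibly because a dynamic quant keeps some tensors at higher precision; treat that explanation as an assumption rather than a documented breakdown.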

Integrations with development tools

- IDE Plugins: Several community plugins enable K2 in VS Code, Neovim, and JetBrains IDEs. Configuration typically involves specifying the API endpoint and model ID in settings.

- Automation Frameworks: Leverage K2’s agentic core with frameworks like LangChain or Haystack to chain prompts, API calls, and code execution steps into complex automations.
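Under the hood, such frameworks run a loop that alternates between model turns and tool executions. The sketch below shows that loop in plain Python with a scripted stand‑in for the model; it does not use the LangChain or Haystack APIs, and the message format (the "tool" and "args" keys) is a simplified, hypothetical one.

```python
def run_agent(call_model, tools, user_prompt, max_steps=5):
    """Alternate between model turns and tool runs until the model answers."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = call_model(messages)
        messages.append(reply)
        if "tool" not in reply:
            # No tool requested: treat this turn as the final answer.
            return reply["content"], messages
        # Execute the requested tool and feed the result back to the model.
        result = tools[reply["tool"]](**reply["args"])
        messages.append({"role": "tool", "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")

def scripted_model(messages):
    """Stand-in for a K2 API call: requests one tool call, then answers."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"role": "assistant", "content": "",
                "tool": "add", "args": {"a": 2, "b": 3}}
    return {"role": "assistant",
            "content": f"The sum is {tool_results[-1]['content']}."}

answer, transcript = run_agent(
    scripted_model, {"add": lambda a, b: a + b}, "What is 2 + 3?"
)
print(answer)  # The sum is 5.
```

Replacing scripted_model with a function that calls a hosted K2 endpoint would turn this into a working agent; the max_steps cap guards against a model that never stops requesting tools.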

What are the typical use cases for Kimi K2?

K2’s combination of scale, agentic training, and open access makes it versatile across domains.

Coding assistance

From boilerplate generation and refactoring to bug fixing and performance profiling, K2’s SOTA coding benchmarks translate to real‑world productivity gains—often outperforming alternatives in readability and simplicity.

Knowledge and reasoning

With a 128 K‑token context window, K2 handles long documents, multi‑document Q&A, and chain‑of‑thought reasoning. Its MoE architecture distributes knowledge across many experts, which helps it retain breadth across domains.

Agentic workflows

K2 excels at orchestrating multi‑step tasks—fetching data, invoking APIs, updating codebases, and summarizing results—making it ideal for autonomous assistants in customer support, data analysis, and DevOps.

How does Kimi K2 compare to other open‑source models?

While DeepSeek’s V3 and Meta’s recent open releases dominated headlines earlier in 2025, Kimi K2 differentiates itself through:

Agentic intelligence

Kimi K2 was explicitly designed for “agentic” workflows—automating tasks via tool calls, shell commands, web automation, and API integrations. Its self-play–augmented training dataset includes diverse tool-call examples, enabling seamless integration with real-world systems.

Cost efficiency

At roughly 80–90% lower per-token inference cost compared to models like Claude Sonnet 4, Kimi K2 offers enterprise-grade performance without blockbuster pricing, catalyzing rapid adoption among price-sensitive developers.
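To see how a per‑token price gap turns into a bill, here is a small worked calculation. The prices and token volume are hypothetical placeholders chosen only to illustrate the arithmetic; they are not published rates for Kimi K2 or any other model.

```python
def token_cost(tokens, price_per_million):
    """Cost in USD for a token count at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million

def relative_saving(baseline_price, candidate_price):
    """Fractional saving of the candidate price relative to the baseline."""
    return 1.0 - candidate_price / baseline_price

# Hypothetical prices in USD per million tokens (not real rates).
BASELINE_PRICE = 10.0
CANDIDATE_PRICE = 1.5
MONTHLY_TOKENS = 50_000_000   # hypothetical monthly volume

print(token_cost(MONTHLY_TOKENS, BASELINE_PRICE))    # 500.0
print(token_cost(MONTHLY_TOKENS, CANDIDATE_PRICE))   # 75.0
print(f"{relative_saving(BASELINE_PRICE, CANDIDATE_PRICE):.0%}")  # 85%
```

Substitute current list prices from each provider to get a real comparison, and price input and output tokens separately where the rates differ.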

Licenses and accessibility

Unlike certain open-source releases encumbered by restrictive licenses, Kimi K2 is available under a permissive license that allows commercial use, derivative works, and local deployments, aligning with Moonshot AI’s open-source ethos.

—

By uniting cutting‑edge MoE design, rigorous agentic training, and open‑source availability, Kimi K2 empowers developers and researchers to build intelligent, autonomous applications without prohibitive costs or closed ecosystems. Whether you’re writing code, crafting complex multi‑step workflows, or experimenting with large‑scale reasoning, K2 offers a versatile, high‑performance foundation.