On July 28, 2025, Beijing-based startup Zhipu AI officially unveiled its GLM-4.5 series of open-source large language models, its most powerful release to date, aimed at advanced intelligent-agent applications. The announcement, made via a live online event following the World Artificial Intelligence Conference (WAIC), showcased two variants: the full-scale GLM-4.5 with 355 billion total parameters (32 billion active) and the more compact GLM-4.5-Air with 106 billion total parameters (12 billion active). Both models use a hybrid reasoning design with “thinking” and “non-thinking” modes, intended to balance deep inference with rapid response, and offer a 128,000-token context window for long conversations and task-oriented use cases.
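To put the "total" versus "active" parameter counts in perspective, the short sketch below computes what share of each model's weights is used per token. It relies only on the figures quoted above; the function and variable names are illustrative, and the calculation says nothing about memory needs, since all weights still have to be loaded.

```python
# Parameter counts in billions, as quoted in the announcement: (total, active).
MODELS = {
    "GLM-4.5": (355, 32),
    "GLM-4.5-Air": (106, 12),
}


def active_fraction(total_billions: float, active_billions: float) -> float:
    """Return the share of parameters that are active for a single token."""
    return active_billions / total_billions


for name, (total, active) in MODELS.items():
    share = active_fraction(total, active)
    print(f"{name}: {active}B of {total}B parameters active ({share:.1%})")
```

Roughly one parameter in ten is active per token in both variants, which is typical of sparse, mixture-of-experts style designs and helps explain how a model of this size can still respond quickly.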

The launch of GLM-4.5 arrives amid an intensifying domestic AI race. According to China’s state-run Xinhua News Agency, Chinese developers had released 1,509 large language models as of July 2025, the largest share of the global total of 3,755 models and a clear indication of the scale and speed of China’s AI ecosystem expansion.

Open‑Source Licensing of GLM-4.5

In a clear departure from closed, proprietary models, Z.ai (the name Zhipu AI uses internationally) is releasing GLM-4.5 under an MIT-style, fully auditable open-source license, giving enterprises full transparency into model weights and training code. Organizations can deploy GLM-4.5 on-premise, fine-tune it on proprietary datasets, or integrate it via self-hosted inference services, thereby avoiding vendor lock-in and opaque API pricing structures.

The availability of both GLM-4.5 for general agentic tasks and GLM-4.5-Air, a lightweight variant optimized for lower-resource environments, covers a broad spectrum of use cases, from large-scale data center deployments to edge-device inference scenarios.

Strategically, Zhipu’s open-source approach positions the company against closed-source Western incumbents like OpenAI. By democratizing access to a GPT-4-comparable model under the MIT license, Zhipu aims to cultivate a robust community of downstream developers and set technical benchmarks for agentic AI capabilities. Industry observers note that this move follows a broader trend among China’s “AI Tigers,” including Moonshot AI and Step AI, which have also open-sourced large models to accelerate innovation cycles.

Performance Benchmarks and Comparative Analysis

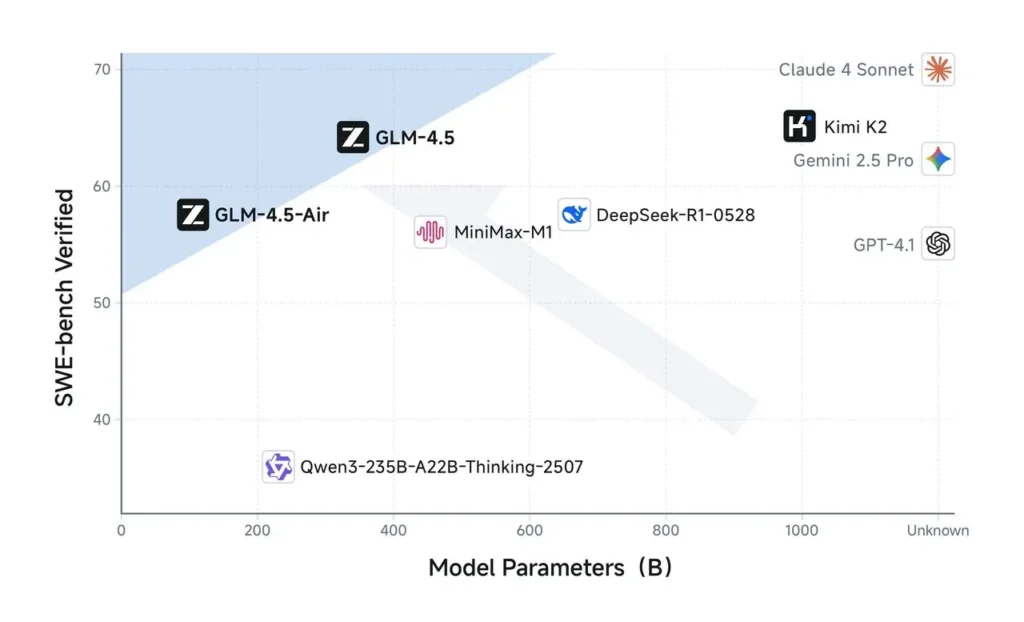

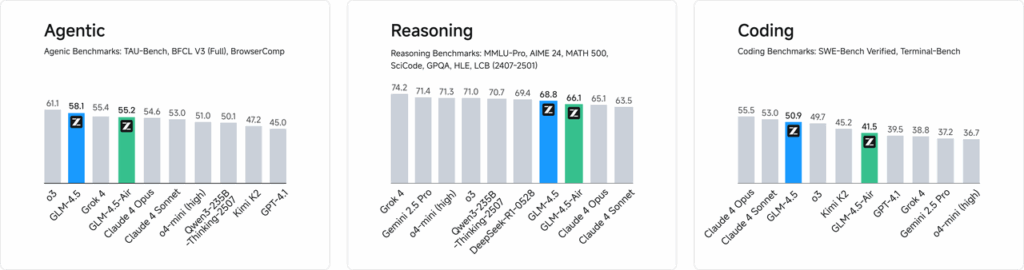

Preliminary benchmarks provided by Zhipu AI indicate that GLM-4.5 achieves an aggregate score of 63.2 across 12 industry-standard evaluation suites, placing it third among both open-source and proprietary models, while the streamlined GLM-4.5-Air scores 59.8, balancing efficiency with high accuracy. The company’s internal coding assessments, run across 52 diverse programming tasks, report that GLM-4.5 outperforms major rivals, with a 53.9 percent win rate against Kimi K2 and an 80.8 percent win rate against Qwen3-Coder.

Demonstrations during the live event underscored GLM-4.5’s agentic capabilities: the model autonomously performed web research, retrieving and synthesizing information from multiple sources, and interfaced with simulated social-media and development environments to generate posts, execute code snippets, and manipulate user-interface elements in real time. Interested users can immediately trial the full-scale model free of charge via Zhipu’s Qingyan portal and the CometAPI platform, while developers may access API endpoints through Zhipu’s BigModel service or CometAPI, or download the complete model weights from Hugging Face and ModelScope under an MIT license.

Cost efficiency is a cornerstone of Z.ai’s strategy. Trained on a 15-trillion-token corpus, GLM-4.5 leverages optimized inference paths to deliver throughput of 100–200 tokens per second, which the company says is up to eight times faster than comparable domestic rivals, at an advertised price of just $0.11 per million tokens, undercutting models like DeepSeek-R1 and Alibaba’s latest releases. Under the permissive MIT license, all model weights, code, and documentation are freely available via Hugging Face, with the aim of fostering a vibrant developer and research community worldwide.
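As a rough illustration of what these figures mean in practice, the sketch below turns the advertised price and throughput range into back-of-the-envelope cost and generation-time estimates. It assumes a single flat per-token rate (the article does not say whether input and output tokens are priced differently) and ignores network latency and prompt processing; the function names are illustrative.

```python
# Figures quoted in the article; treat them as advertised values, not guarantees.
PRICE_USD_PER_MILLION_TOKENS = 0.11
THROUGHPUT_TOKENS_PER_SECOND = (100, 200)  # (low end, high end)


def estimate_cost_usd(tokens: int,
                      price_per_million: float = PRICE_USD_PER_MILLION_TOKENS) -> float:
    """Estimate the cost of processing `tokens` tokens at a flat per-token rate."""
    return tokens / 1_000_000 * price_per_million


def estimate_generation_seconds(tokens: int,
                                throughput: tuple = THROUGHPUT_TOKENS_PER_SECOND) -> tuple:
    """Return (best_case, worst_case) seconds to generate `tokens` tokens."""
    slow, fast = throughput
    return tokens / fast, tokens / slow


if __name__ == "__main__":
    monthly_tokens = 50_000_000  # hypothetical workload
    print(f"Estimated cost: ${estimate_cost_usd(monthly_tokens):.2f}")
    best, worst = estimate_generation_seconds(2_000)
    print(f"A 2,000-token reply takes roughly {best:.0f} to {worst:.0f} seconds")
```

At the quoted rate, a hypothetical workload of 50 million tokens per month would cost about $5.50, and a 2,000-token reply would take roughly 10 to 20 seconds to generate.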

“GLM‑4.5 embodies our commitment to democratizing access to premier AI technology,” said Zhang Peng, CEO of Z.ai, in a CNBC interview. “By open‑sourcing a model that excels in reasoning, coding, and agentic functions, we empower organizations of any size to innovate without the constraints of proprietary APIs or prohibitive costs.”

Getting Started

CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.

Developers can access the [GLM-4.5 Air API](https://www.cometapi.com/claude-opus-4-api/) and the GLM-4.5 API through CometAPI; the model versions listed are current as of the article’s publication date. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before making requests, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers pricing well below the official rates to help you integrate.
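For orientation, here is a minimal sketch of what a request might look like once you have an API key. It assumes an OpenAI-style chat-completions endpoint; the base URL, endpoint path, and model identifier are placeholders rather than values confirmed by this article, so check the API guide for the actual ones. The example uses only the Python standard library.

```python
import json
import urllib.request

# Assumptions (not confirmed by the article): replace with values from the API guide.
BASE_URL = "https://api.cometapi.com/v1"  # assumed base URL
MODEL_ID = "glm-4.5"                      # assumed model identifier


def build_chat_request(prompt: str, api_key: str,
                       model: str = MODEL_ID,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

To try it, build a request with your own key, for example `build_chat_request("Summarize GLM-4.5 in one sentence.", api_key)`, and pass the result to `send`. Keeping request construction separate from sending makes it easy to inspect the payload before spending any tokens.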