What is Gemini 3 Deep Think? All You Need to Know

Gemini 3 Deep Think is a new, specialized reasoning mode in Gemini 3, the latest foundation model family from Google DeepMind. It is designed to spend more time on internal “deliberation” when working through hard, multi-step, multimodal problems, and Google promotes it as the version of Gemini that pushes state-of-the-art reasoning and agentic problem solving further than previous releases.

What exactly is Gemini 3 Deep Think?

Definition and positioning

Gemini 3 Deep Think is not a separate model family in the sense of an independently released neural network architecture. It is an enhanced reasoning mode within the Gemini 3 series that Google describes as “pushing the boundaries of intelligence even further.” Deep Think is explicitly presented as the mode you choose when you need the model to pursue deeper chains of thought, consider multiple hypotheses, and evaluate alternatives before responding, effectively trading latency for higher-quality, more deliberative outputs. Google positions Deep Think as the edition tuned for the most complex, novel, and multi-step reasoning tasks, and access is initially limited to safety testers and Google AI Ultra subscribers.

How Deep Think differs from the standard Gemini 3 Pro

Conceptually, Gemini 3 Pro aims for a balanced, low-latency experience suitable for general agentic use and developer integration (e.g., the new Antigravity IDE and Vertex AI integrations). Deep Think belongs to the same family but differs in three ways:

- Reasoning-first decoding and chain-of-thought internalization. Google describes Gemini 3 as using an improved internal “thinking” process that allows the model to execute multi-step planning and internal deliberation more reliably. Deep Think appears to intensify that process — allocating more internal compute, longer internal deliberation chains, and stricter verification heuristics during generation. These changes aim to reduce brittle single-step responses and boost problem solving for novel tasks.

- Larger inference envelope (tools + simulation). Deep Think is optimized to call on tool simulations (simulated browsers, calculators, code runners, or external APIs) in a way that treats the agentic workflow as part of the model’s reasoning loop. That means the model can plan, hypothesize, test (via simulated tools), and revise — a form of internal experimentation that benefits complex coding, math, or research queries.

- Higher compute/latency trade-off. To get this deeper reasoning, Deep Think operates in a higher-cost, higher-latency inference regime than Pro. Google has offered this kind of trade-off before (Gemini 2.5 Deep Think was also a premium, slower mode), and Gemini 3 Deep Think follows the same pattern by prioritizing quality and reliability over speed.
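Google has not published how Deep Think's internal loop works. As a rough illustration of the plan, hypothesize, test, and revise cycle described above, this sketch uses a hypothetical code-runner tool as a verifier: each candidate solution is tested, and the loop stops as soon as one passes or the round budget runs out. The names `run_tests` and `deliberate`, and the fixed list of candidate hypotheses, are illustrative assumptions, not Gemini internals.

```python
from typing import Callable, Optional

Candidate = Callable[[int], int]

# Hypothetical "code runner" tool: executes a candidate on test cases and
# returns the inputs it got wrong (an empty list means every test passed).
def run_tests(candidate: Candidate, tests: list[tuple[int, int]]) -> list[int]:
    return [x for x, expected in tests if candidate(x) != expected]

def deliberate(candidates: list[Candidate],
               tests: list[tuple[int, int]],
               max_rounds: int) -> Optional[Candidate]:
    """Hypothesize -> test -> revise, within a fixed budget of rounds."""
    for candidate in candidates[:max_rounds]:
        failures = run_tests(candidate, tests)  # test the hypothesis with the tool
        if not failures:
            return candidate                    # verified, so stop deliberating
        # Revise: a real system would condition its next proposal on
        # `failures`; here the next hypothesis simply comes from a fixed list.
    return None

# Task: "square the input". The first hypothesis (doubling) is wrong.
candidates = [lambda x: x * 2, lambda x: x ** 2]
tests = [(2, 4), (3, 9)]
print(deliberate(candidates, tests, max_rounds=1))     # None: budget too small
print(deliberate(candidates, tests, max_rounds=2)(5))  # 25
```

Raising `max_rounds` is a crude stand-in for the compute/latency trade-off: with one round the loop gives up after the wrong first guess, and with two it finds and verifies the correct function.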

How does Gemini 3 Deep Think work?

Understanding “how” requires separating product mode (Deep Think) from the underlying model family (Gemini 3). Deep Think is not a separate, standalone model file you download; rather it’s a configuration — a trained capability tier and inference stack — that unlocks more compute, internal reasoning routines, and specialized decoding behaviors to prioritize depth and correctness over latency or cost.

Tighter tool integration

Deep Think leverages the same agentic tool-calling and sandboxing primitives as Gemini 3 Pro, but with more conservative policies and additional verification steps for each tool call (important for safety in research workflows).
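The source does not specify what these extra verification steps are. One plausible shape, sketched here purely as an assumption, is a wrapper that applies a conservative allowlist before a tool runs and a sanity check on the result before the model is allowed to reason over it. `ALLOWED_TOOLS`, `verified_call`, and `ToolCallRejected` are hypothetical names.

```python
from typing import Any, Callable

class ToolCallRejected(Exception):
    """Raised when a tool call fails a pre-call or post-call check."""

# Hypothetical conservative policy: the only tools this agent may invoke.
ALLOWED_TOOLS = {"calculator", "code_runner"}

def verified_call(name: str,
                  tool: Callable[..., Any],
                  args: dict[str, Any],
                  validate: Callable[[Any], bool]) -> Any:
    # Pre-call check: refuse anything outside the allowlist.
    if name not in ALLOWED_TOOLS:
        raise ToolCallRejected(f"tool {name!r} is not on the allowlist")
    result = tool(**args)
    # Post-call check: reject implausible output before it reaches the model.
    if not validate(result):
        raise ToolCallRejected(f"tool {name!r} returned an implausible result: {result!r}")
    return result

def add(a: int, b: int) -> int:
    return a + b

print(verified_call("calculator", add, {"a": 2, "b": 3}, lambda r: isinstance(r, int)))  # 5
```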

Deep Think trade-offs and inference strategy

Deep Think is explicitly described as trading latency for depth: it runs more compute per query (longer internal deliberation or more thorough search of candidate reasoning paths) and leans on auxiliary mechanisms such as selective code execution or multi-step verification to solve novel problems. That makes it stronger on “frontier” benchmarks (novel, creative or multi-stage problems) but potentially slower and costlier in production.
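Google has not disclosed how Deep Think searches candidate reasoning paths. A well-known public technique with the same compute-for-accuracy shape is self-consistency: sample several independent reasoning paths and keep the majority answer. This sketch substitutes a hypothetical noisy solver for the model, so it illustrates that technique, not Deep Think's actual mechanism.

```python
import random
from collections import Counter
from typing import Callable

def self_consistency(sample_answer: Callable[[random.Random], str],
                     n: int, seed: int = 0) -> tuple[str, float]:
    """Sample n independent reasoning paths; return the majority answer and
    the fraction of paths that agree with it."""
    rng = random.Random(seed)
    answers = [sample_answer(rng) for _ in range(n)]  # n solver calls = n x cost
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n

# Hypothetical noisy solver: right ("42") 60% of the time, otherwise it
# returns one of 42 different wrong answers ("0" through "41").
def noisy_solver(rng: random.Random) -> str:
    return "42" if rng.random() < 0.6 else str(rng.randint(0, 41))

answer, agreement = self_consistency(noisy_solver, n=101)
print(answer, round(agreement, 2))
```

Cost grows linearly with `n` (one solver call per path), which mirrors the latency and cost penalty described above; the agreement fraction doubles as a rough confidence signal.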

Chain-of-thought, scratchpads, and iterative refinement

The Deep Think approach relies on mechanisms the research community and companies have used successfully: chain-of-thought style reasoning, internal scratchpads, and staged reasoning where subproblems are solved and validated before integration. The model uses methods to decompose problems, check intermediate steps, and recompose solutions into robust final outputs.
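A minimal sketch of staged reasoning, assuming each subproblem comes with its own check: intermediate results enter a scratchpad only after they pass validation, and later stages build on them. The `Stage` class and `solve_staged` function are hypothetical. The example is a round trip of 120 km each way, at 60 km/h out and 40 km/h back, where the staged answer (48 km/h) differs from the tempting single-step guess of 50 km/h.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Stage:
    name: str
    solve: Callable[[dict], Any]        # compute this subproblem from earlier results
    check: Callable[[dict, Any], bool]  # validate the intermediate result

def solve_staged(stages: list[Stage]) -> dict:
    """Solve subproblems in order; only validated results enter the scratchpad."""
    scratchpad: dict = {}
    for stage in stages:
        value = stage.solve(scratchpad)
        if not stage.check(scratchpad, value):
            raise ValueError(f"stage {stage.name!r} failed validation: {value!r}")
        scratchpad[stage.name] = value
    return scratchpad

stages = [
    Stage("t_out", lambda s: 120 / 60, lambda s, v: abs(v * 60 - 120) < 1e-9),
    Stage("t_back", lambda s: 120 / 40, lambda s, v: abs(v * 40 - 120) < 1e-9),
    # Recompose: average speed = total distance / total time, and it must lie
    # between the two leg speeds.
    Stage("avg_speed", lambda s: 240 / (s["t_out"] + s["t_back"]),
          lambda s, v: 40 <= v <= 60),
]
print(solve_staged(stages)["avg_speed"])  # 48.0, not the naive (60 + 40) / 2 = 50
```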

What performance benchmarks does Gemini 3 Deep Think achieve?

Google has published a trove of benchmark numbers illustrating the gains claimed for Gemini 3, and specifically for the Deep Think configuration. The strongest public claims for Gemini 3 Deep Think are:

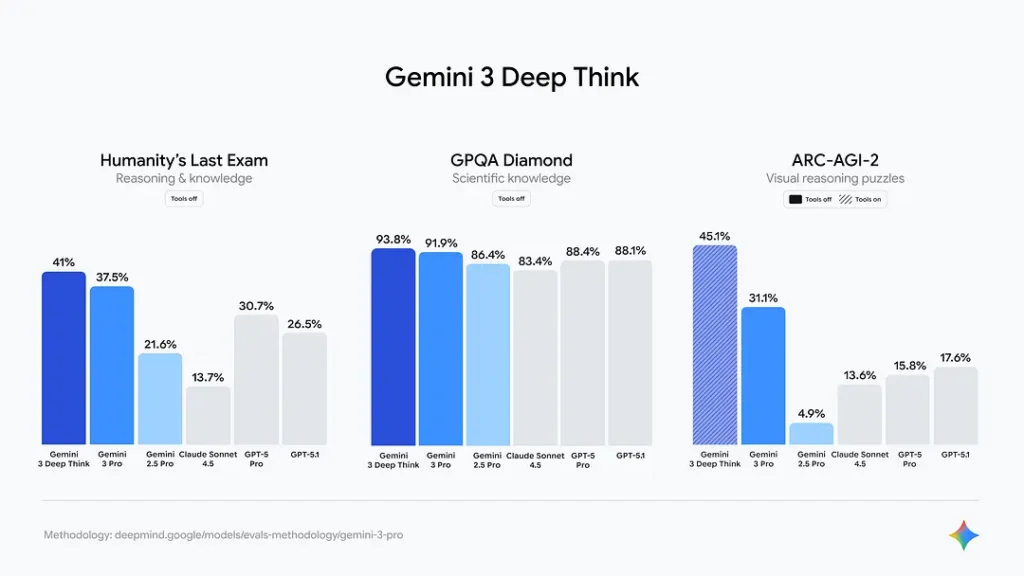

- ARC-AGI-2 (abstract visual reasoning; the Deep Think result uses code execution): Gemini 3 Pro reportedly scores ~31.1%, while Gemini 3 Deep Think reaches ~45.1%, a dramatic leap on a benchmark where high scores had previously been out of reach.

- GPQA Diamond (advanced question answering): Gemini 3 Pro was reported at ~91.9% and Deep Think at ~93.8% in publicized runs. These scores place Gemini 3 at or near the top of multiple leaderboards at launch.

- Humanity’s Last Exam (no tools): Google reports Gemini 3 Deep Think at ~41.0% in the tool-free setting, outperforming Gemini 3 Pro on these demanding, exam-style prompts.

Why these figures matter. These benchmark gains aren’t uniform across all tasks: they are most pronounced on problems requiring multi-step reasoning, abstract visual problem solving, and situations where the model must hold and manipulate large amounts of context. That matches the functional intent behind Deep Think: to demonstrate robust, higher-order reasoning rather than just better surface text prediction.
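The quoted scores can be compared in more than one way. This short calculation, using only the figures listed above, reports the absolute gain in percentage points, the relative gain over Gemini 3 Pro, and the share of Pro's misses (100 minus its score) that Deep Think eliminates. Humanity's Last Exam is left out because no Pro figure is quoted for it here.

```python
# Launch scores (%) quoted above: (Gemini 3 Pro, Gemini 3 Deep Think).
scores = {
    "ARC-AGI-2": (31.1, 45.1),
    "GPQA Diamond": (91.9, 93.8),
}

def compare(pro: float, deep_think: float) -> dict[str, float]:
    gain = deep_think - pro
    return {
        "gain_pts": round(gain, 1),               # absolute gain, percentage points
        "rel_gain": round(gain / pro, 3),         # gain relative to Pro's score
        "err_cut": round(gain / (100 - pro), 3),  # share of Pro's misses eliminated
    }

for name, (pro, deep) in scores.items():
    print(name, compare(pro, deep))
```

The two views tell different stories: ARC-AGI-2's 14-point gain is roughly a 45% relative improvement, while GPQA Diamond's 1.9-point gain looks small but removes roughly 23% of Pro's remaining misses, which is typical for a benchmark close to its ceiling.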

Gemini 3 Deep Think vs Gemini 2.5 Pro

Where Deep Think improves on Gemini 2.x

Reasoning and problem solving: The clearest uplift is in reasoning benchmarks and tasks that require extended internal chains of logic. Google reports substantially higher scores for Gemini 3 Deep Think than for Gemini 2.5 Pro on ARC-AGI, Humanity’s Last Exam, and other reasoning suites. The jump appears to be both algorithmic (different training and fine-tuning) and operational (Deep Think’s inference-time deliberation).

Multimodal understanding: Gemini 3 extends support for richer multimodal inputs — video analysis, handwriting + voice fusion, and more nuanced image-and-text reasoning — and Deep Think amplifies that capability for tasks that mix media types. Where Gemini 2.x handled multimodal tasks well, Gemini 3 Deep Think is presented as both more accurate and more contextually sensitive.

Agentic and tool use: Gemini 3’s emphasis on agentic workflows (creating agents that operate across editors, terminals, browsers, and API calls) marks a qualitative change. Deep Think, by augmenting internal simulation and tool orchestration, provides better planning and verification when interacting with external tools — a capability that was nascent in earlier Gemini generations. Google’s Antigravity IDE is a concrete early integration that demonstrates this.

Coding and developer ergonomics: Gemini 3 Pro already improved one-shot coding and “vibe coding” (high-level spec → scaffolded app generation). Deep Think augments the model’s ability to plan larger projects, generate more coherent multi-file code, and debug across contexts. Early benchmark and partner feedback report clear developer productivity gains compared with 2.x.

Architectural and behavioral differences

The practical reasons for the gains over Gemini 2.x are multiple and mutually reinforcing:

- MoE backbone improvements and expert routing tuning, enabling more efficient specialization and scale.

- Unified multimodal stack which better fuses cross-modal reasoning (important for ARC-AGI’s visual subproblems).

- Operational modes like Deep Think that intentionally extend internal deliberation and hypothesis testing, trading compute/latency for accuracy.
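Google has not published Gemini 3's routing details. For readers unfamiliar with the MoE idea in the first bullet, this is a generic top-k mixture-of-experts router: a gating layer scores every expert, only the k best-scoring experts run, and their outputs are blended with softmax weights. The dimensions, gate weights, and toy experts are arbitrary assumptions chosen for illustration.

```python
import math
from typing import Callable

Vector = list[float]

def top_k_route(x: Vector,
                gate_weights: list[Vector],
                experts: list[Callable[[Vector], Vector]],
                k: int = 2) -> tuple[Vector, list[tuple[int, float]]]:
    """Generic top-k MoE layer for one token vector x."""
    # One gating logit per expert: dot product of x with that expert's gate row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Sparse activation: keep only the k highest-scoring experts.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen logits (max subtracted for numerical stability).
    m = max(logits[i] for i in chosen)
    exps = {i: math.exp(logits[i] - m) for i in chosen}
    total = sum(exps.values())
    gates = [(i, exps[i] / total) for i in chosen]
    # Blend the chosen experts' outputs; unchosen experts are never evaluated.
    out = [0.0] * len(x)
    for i, g in gates:
        out = [o + g * yi for o, yi in zip(out, experts[i](x))]
    return out, gates

# Four toy experts over 2-d vectors; only two will run for this input.
experts = [
    lambda v: [2 * t for t in v],
    lambda v: [t + 1 for t in v],
    lambda v: [-t for t in v],
    lambda v: v,
]
gate_weights = [[2.0, 0.0], [0.0, 1.0], [1.0, 0.0], [-1.0, 0.0]]
out, gates = top_k_route([1.0, 0.0], gate_weights, experts, k=2)
print([i for i, _ in gates])  # [0, 2]: the two highest logits (2.0 and 1.0)
```

Because only k experts execute per token, total parameter count can grow with the number of experts while per-token compute stays roughly constant, which is the efficiency argument usually made for MoE backbones.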

Practical outcomes for users

For developers and researchers, that translates into:

- Improved capability to automate higher-value workflows (e.g., scientific literature synthesis, architecture design, advanced debugging) that earlier Gemini generations had limited success with.

- Fewer hallucinations and more defensible step-by-step chains of reasoning on complex prompts.

- Better performance when tasks require reasoning across long documents, codebases or mixed media.

How to Access Gemini 3 Deep Think

Option A — Consumer / power-user route: Gemini app + Google AI

According to the official announcement by Google, Gemini 3 Deep Think is not yet broadly available in the general-release tier. It’s being rolled out to safety testers first, then to the “Ultra” subscription level.

Google AI Ultra: US$249.99/month in the US. Google lists the tier as including “Deep Think, Gemini Agent (US only, English only) and highest limits.”

Where to subscribe: subscribe via the Gemini app / Google One / Google AI plans page for your region. The subscription console shows whether Deep Think is already enabled for your account.

Option B — Developer / enterprise route: API

For developers wanting API access: Gemini 3 Pro is already live in the API as a preview. To use the Deep Think version, call its separate variant rather than the standard Pro model. API access is pay-as-you-go and billed per million input and output tokens.

Good news: CometAPI has now integrated the Gemini 3 Pro Preview API, and you can also access the latest GPT-5.1 through it. CometAPI's prices are lower than the official ones:

| Price (USD per 1M tokens) | gemini-3-pro-preview | gemini-3-pro-preview-thinking |
|---|---|---|
| Input tokens | $1.60 | $1.60 |
| Output tokens | $9.60 | $9.60 |
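For budgeting, per-million-token pricing reduces to a one-line formula. This sketch hard-codes the prices from the table above (which may change) and assumes that internal reasoning tokens are billed as output tokens, as Google's Gemini API pricing describes for its thinking models; whether the CometAPI thinking variant bills the same way is an assumption to verify. `estimate_cost` is a hypothetical helper.

```python
# Prices in USD per 1M tokens, copied from the table above; verify current
# rates with the provider before relying on them.
PRICES_PER_M = {
    "gemini-3-pro-preview": {"input": 1.60, "output": 9.60},
    "gemini-3-pro-preview-thinking": {"input": 1.60, "output": 9.60},
}

def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request.

    Assumption: reasoning ("thinking") tokens are counted in output_tokens.
    """
    price = PRICES_PER_M[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000

print(f"${estimate_cost('gemini-3-pro-preview-thinking', 2_000, 8_000):.4f}")  # $0.0800
```

Because output tokens cost six times as much as input tokens here, long internal deliberation, not prompt length, tends to dominate the bill for a reasoning-heavy mode.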

Conclusion

Gemini 3 Deep Think represents a deliberate and pragmatic attempt to productize deeper machine reasoning: the idea that some tasks benefit from internal, staged deliberation and integrated tool use rather than single-shot responses.