DeepSeek: How Does It Work?

In the rapidly evolving field of artificial intelligence, DeepSeek has emerged as a formidable contender, challenging established giants like OpenAI and Google. Founded in July 2023 by Liang Wenfeng, DeepSeek is a Chinese AI company that has garnered attention for its innovative approaches to large language models (LLMs) and its commitment to open-source development. This article delves into the architecture, innovations, and implications of DeepSeek’s models, particularly focusing on its Mixture-of-Experts (MoE) framework and the advancements in its DeepSeek-V2 and DeepSeek-R1 models.

What Is DeepSeek and Why Is It Important?

DeepSeek stands out as one of the most ambitious open-model efforts to date. Its models represent a new generation of open-source language models that aim to bridge the gap between large proprietary models (like GPT-4) and the open research community.

DeepSeek-V3, released in December 2024, and DeepSeek-R1, released in January 2025, introduced several novel ideas about training efficiency and scaling, pushing the limits of what open models can achieve.

How Does DeepSeek’s Architecture Differ from Traditional Models?

What Is MoE?

In conventional dense neural networks, every input passes through the entire network, activating all parameters regardless of the input’s nature. This approach, while straightforward, leads to inefficiencies, especially as models scale up.

The Mixture-of-Experts (MoE) architecture addresses this by dividing parts of the network into multiple sub-networks, or “experts,” each of which can specialize in different tasks or data patterns. A gating mechanism dynamically selects a small subset of these experts for each input, so only the most relevant parameters are activated. This selective activation reduces the compute needed per input, improves scalability, and allows for greater model specialization.

DeepSeek’s MoE Implementation

DeepSeek’s models, such as DeepSeek-R1 and DeepSeek-V2, use an advanced MoE framework. For instance, DeepSeek-R1 comprises 671 billion parameters, but only about 37 billion are activated for each token; DeepSeek-V2 has 236 billion parameters, of which about 21 billion are activated per token. A gating mechanism routes each token to the most relevant experts, which keeps the compute cost per token far below that of a dense model of the same size.
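To put those figures in perspective, the short calculation below works out what share of DeepSeek-R1's parameters is active per token. The "2 FLOPs per active parameter" rule of thumb is an assumption used only for a rough comparison, not a number published by DeepSeek.

```python
# Figures quoted in the text for DeepSeek-R1.
total_params = 671e9   # total parameters
active_params = 37e9   # parameters activated per token

active_fraction = active_params / total_params
print(f"Active fraction: {active_fraction:.1%}")  # Active fraction: 5.5%

# Rough rule of thumb (an assumption): a forward pass costs about
# 2 FLOPs per active parameter per token.
flops_sparse = 2 * active_params
flops_dense = 2 * total_params
print(f"Dense / sparse compute ratio: {flops_dense / flops_sparse:.1f}x")  # 18.1x
```

The ratio ignores attention, routing overhead, and communication between devices, so it should be read as an upper bound on the savings rather than a measured speedup.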

What Does a Simplified DeepSeek Transformer Look Like?

Here’s a simplified code example of how DeepSeek might implement a sparse mixture of experts mechanism:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Expert(nn.Module):
    """A single feed-forward expert (one linear layer, for brevity)."""

    def __init__(self, hidden_dim):
        super().__init__()
        self.fc = nn.Linear(hidden_dim, hidden_dim)

    def forward(self, x):
        return F.relu(self.fc(x))


class SparseMoE(nn.Module):
    """Routes each input row to its top-k experts and mixes their outputs."""

    def __init__(self, hidden_dim, num_experts=8, k=2):
        super().__init__()
        self.experts = nn.ModuleList([Expert(hidden_dim) for _ in range(num_experts)])
        self.gate = nn.Linear(hidden_dim, num_experts)
        self.k = k

    def forward(self, x):
        # x: (batch, hidden_dim); scores: (batch, num_experts)
        scores = self.gate(x)
        topk_scores, topk_idx = torch.topk(scores, self.k, dim=-1)
        # Normalize over the k selected experts only.
        weights = F.softmax(topk_scores, dim=-1)

        output = torch.zeros_like(x)
        for slot in range(self.k):
            for e, expert in enumerate(self.experts):
                mask = topk_idx[:, slot] == e  # rows routed to expert e in this slot
                if mask.any():
                    output[mask] += weights[mask, slot].unsqueeze(-1) * expert(x[mask])
        return output


# Example usage
batch_size, hidden_dim = 16, 512
x = torch.randn(batch_size, hidden_dim)
model = SparseMoE(hidden_dim)
out = model(x)
print(out.shape)  # torch.Size([16, 512])
```

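This basic example selects 2 experts per input based on the gate scores and combines their outputs as a weighted sum. It is an illustration only; production MoE layers add load balancing and batched dispatch of tokens to experts.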
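A worked example with concrete numbers can make the routing step easier to follow. The sketch below uses only the standard library and routes a single token across four experts; the gate scores are made up for illustration.

```python
import math


def top_k_gate(scores, k=2):
    """Return {expert_index: weight} for the k highest-scoring experts.

    Weights are a softmax over the selected scores only, so they sum to 1.
    """
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    max_score = max(scores[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(scores[i] - max_score) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Hypothetical gate scores for one token over four experts.
gate_scores = [0.1, 2.0, -1.0, 1.0]
routing = top_k_gate(gate_scores, k=2)
print(routing)  # experts 1 and 3 are selected, with weights of about 0.73 and 0.27
```

Experts 0 and 2 receive no weight and are never evaluated for this token, which is where the compute savings come from.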

What Training Strategies Did DeepSeek Use?

How Was Data Collection and Curation Handled?

DeepSeek’s creators emphasized data quality over sheer quantity. Rather than relying only on broad scrapes of the public internet, the training mix reportedly combined:

- Curated open datasets (Pile, Common Crawl segments)

- Academic corpora

- Code repositories (like GitHub)

- Special synthetic datasets generated using smaller supervised models

Their training involved a multi-stage curriculum learning approach:

- Early stages trained on easier, factual datasets

- Later stages emphasized reasoning-heavy and coding tasks
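The staged curriculum described above could be sketched as a step-dependent sampling schedule. Everything in this example is hypothetical: the stage boundaries, the dataset names (`factual`, `reasoning`, `code`), and the mixing weights are placeholders for illustration, not DeepSeek's published recipe.

```python
import random

# Hypothetical stages: each one applies until `until_step` (None = forever).
CURRICULUM = [
    {"until_step": 1_000, "mix": {"factual": 0.8, "reasoning": 0.1, "code": 0.1}},
    {"until_step": 3_000, "mix": {"factual": 0.4, "reasoning": 0.3, "code": 0.3}},
    {"until_step": None, "mix": {"factual": 0.2, "reasoning": 0.4, "code": 0.4}},
]


def mix_for_step(step):
    """Return the sampling weights of the stage that contains `step`."""
    for stage in CURRICULUM:
        if stage["until_step"] is None or step < stage["until_step"]:
            return stage["mix"]
    return CURRICULUM[-1]["mix"]


def sample_source(step, rng=random):
    """Pick which dataset the next training example is drawn from."""
    mix = mix_for_step(step)
    names = list(mix)
    return rng.choices(names, weights=[mix[n] for n in names], k=1)[0]


print(mix_for_step(0))      # early stage: mostly factual data
print(mix_for_step(5_000))  # late stage: mostly reasoning and code
```

A data loader would call `sample_source(step)` before fetching each example, so the mixture shifts gradually toward harder material as training progresses.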

What Optimization Techniques Were Employed?

Training large language models efficiently remains a major challenge. DeepSeek employed:

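- ZeRO-style sharded data parallelism: Splitting optimizer states (and, at higher ZeRO stages, gradients and parameters) across GPUs. The DeepSeek-V3 technical report describes ZeRO-1 combined with pipeline and expert parallelism.

- Low-precision training: To reduce memory usage and speed up computation with minimal loss in model quality. The DeepSeek-V3 report describes FP8 mixed-precision training rather than Int8.

- Adaptive learning rates: Using techniques like warmup followed by cosine decay.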
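To make the sharding idea concrete, the sketch below estimates model-state memory per GPU at each ZeRO stage. It follows the accounting in the ZeRO paper for mixed-precision Adam (16 bytes per parameter in total) and ignores activations and buffers; the 7B-parameter, 64-GPU setup is a hypothetical example, not a DeepSeek configuration.

```python
def zero_bytes_per_gpu(num_params, num_gpus, stage):
    """Approximate model-state memory per GPU for mixed-precision Adam.

    Per parameter: 2 bytes (fp16 weights) + 2 bytes (fp16 gradients)
    + 12 bytes (fp32 weights, momentum, variance). Activations are ignored.
    """
    weights, grads, optim = 2 * num_params, 2 * num_params, 12 * num_params
    if stage == 0:  # plain data parallelism: everything replicated
        return weights + grads + optim
    if stage == 1:  # shard optimizer states
        return weights + grads + optim / num_gpus
    if stage == 2:  # also shard gradients
        return weights + (grads + optim) / num_gpus
    if stage == 3:  # also shard parameters
        return (weights + grads + optim) / num_gpus
    raise ValueError("stage must be 0, 1, 2, or 3")


# Hypothetical setup: a 7B-parameter model on 64 GPUs.
for stage in range(4):
    gib = zero_bytes_per_gpu(7e9, 64, stage) / 2**30
    print(f"ZeRO stage {stage}: {gib:.1f} GiB per GPU")
```

Higher stages save more memory but add communication, which is one reason a training setup may prefer a lower stage combined with other forms of parallelism.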

Here’s a simple snippet showcasing adaptive learning rate scheduling:

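```python
import torch
from torch.optim.lr_scheduler import CosineAnnealingLR

model = torch.nn.Linear(512, 512)  # stand-in for the real network
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-4)
scheduler = CosineAnnealingLR(optimizer, T_max=100)  # one cosine half-period over 100 epochs

for epoch in range(100):
    # train(model) and validate(model) are user-defined and omitted here;
    # train() is expected to call optimizer.step() for each batch.
    optimizer.step()
    scheduler.step()  # update the learning rate once per epoch
```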

This code decays the learning rate smoothly from 1e-4 toward zero along a cosine curve over 100 epochs. It does not include a warmup phase.
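The snippet above applies cosine decay only. A warmup phase, as mentioned in the list of techniques, can be added with a small schedule function. The sketch below is written in plain Python so the shape of the curve is easy to inspect; the step counts and learning rates are illustrative defaults, not DeepSeek's settings.

```python
import math


def lr_at(step, base_lr=1e-4, warmup_steps=10, total_steps=100, min_lr=0.0):
    """Linear warmup to base_lr, then cosine decay to min_lr."""
    if step < warmup_steps:
        # Ramp up linearly so the first step is not at full learning rate.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold at min_lr after total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


for step in (0, 9, 10, 55, 100):
    print(step, f"{lr_at(step):.2e}")
```

In PyTorch, a function like this could be wrapped with `torch.optim.lr_scheduler.LambdaLR`, which expects a multiplier on the base rate, so the returned value would need to be divided by `base_lr`.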

How Does DeepSeek Achieve Superior Performance?

What Role Does Retrieval Play?

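DeepSeek can be paired with a retrieval system, akin to plugging a search engine into a neural network. The released model weights do not include a retriever, so this is an application-level pattern rather than part of the architecture. When given a prompt, such a system can: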

- Encode the query

- Retrieve relevant documents from an external memory

- Fuse the documents with its own internal knowledge

This helps the model stay factual and current beyond its training cutoff, which a model without retrieval cannot do on its own.

Conceptually, it looks something like this:

```python
import torch.nn as nn


class Retriever:
    def __init__(self, index):
        self.index = index  # Assume some pre-built search index

    def retrieve(self, query_embedding):
        # Search the index for documents similar to the query
        return self.index.search(query_embedding)


class DeepSeekWithRetriever(nn.Module):
    def __init__(self, model, retriever):
        super().__init__()
        self.model = model  # assumed to expose encode() and generate()
        self.retriever = retriever

    def forward(self, query):
        embedding = self.model.encode(query)
        docs = self.retriever.retrieve(embedding)  # assumed to be a list of strings
        augmented_input = query + " " + " ".join(docs)
        output = self.model.generate(augmented_input)
        return output
```

This kind of Retrieval-Augmented Generation (RAG) improves factual grounding by giving the model access to information that is not stored in its weights.
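The search index in the conceptual code is left abstract. As a minimal sketch of what it might do, the example below implements a brute-force cosine-similarity search in plain Python. The `ToyIndex` class, the three-dimensional embeddings, and the documents are all hypothetical; a real system would use a learned embedding model and an approximate nearest-neighbor index.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyIndex:
    """In-memory index of (embedding, text) pairs with brute-force search."""

    def __init__(self, entries):
        self.entries = entries

    def search(self, query_embedding, top_k=2):
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine(query_embedding, entry[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]


index = ToyIndex([
    ([1.0, 0.0, 0.0], "MoE routes tokens to experts."),
    ([0.0, 1.0, 0.0], "Cosine schedules decay the learning rate."),
    ([0.9, 0.1, 0.0], "A gate picks the top-k experts."),
])
docs = index.search([1.0, 0.05, 0.0], top_k=2)
prompt = "How does MoE routing work?" + "\n\nContext:\n" + "\n".join(docs)
print(prompt)
```

The two MoE-related documents are returned and the unrelated one is dropped, so the prompt passed to the generator contains only the relevant context.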

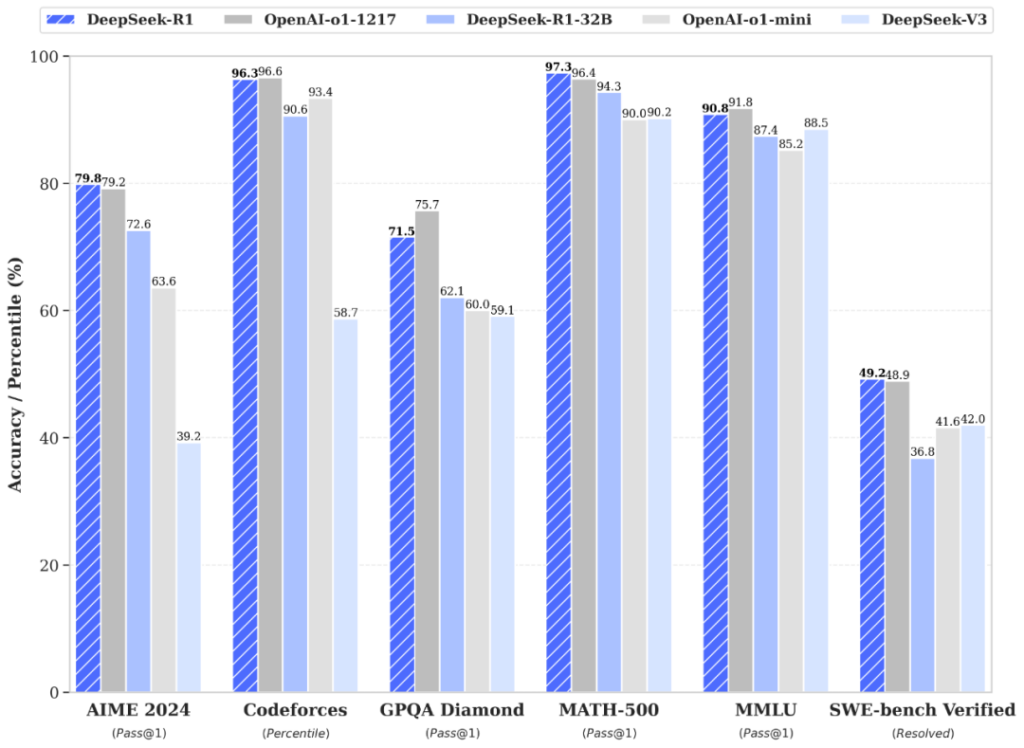

How Is DeepSeek Evaluated?

The model was benchmarked using:

- MMLU: Multi-task language understanding

- HumanEval: Code generation accuracy

- TruthfulQA: Ability to answer truthfully

- BIG-bench: General broad AI evaluation

In most cases, DeepSeek’s largest models (DeepSeek-V3 and DeepSeek-R1, at 671B total parameters) were reported to be competitive with leading proprietary models on reasoning tasks while remaining significantly cheaper to run.
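HumanEval results are usually reported as pass@k: the probability that at least one of k sampled solutions passes the unit tests. The sketch below implements the standard unbiased estimator from the paper that introduced HumanEval; the sample counts in the usage lines are made-up numbers, not DeepSeek results.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate: n samples drawn, c of them pass the tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical problem: 10 samples generated, 3 of them correct.
print(f"pass@1 = {pass_at_k(n=10, c=3, k=1):.3f}")
print(f"pass@5 = {pass_at_k(n=10, c=3, k=5):.3f}")
```

The benchmark score is the average of this value over all problems, which is why pass@1 and pass@10 figures for the same model can differ widely.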

What Challenges Remain for DeepSeek?

While impressive, DeepSeek is not without flaws:

- Bias and Toxicity: Even models trained on curated datasets can produce biased or toxic outputs.

- Retrieval Latency: RAG systems can be slower than pure generation models.

- Compute Costs: Training and serving these models is still expensive, even with MoE.

The DeepSeek team is actively working on pruning models, smarter retrieval algorithms, and bias mitigation.

Conclusion

DeepSeek represents one of the most important shifts in open AI development since the rise of Transformer-based models. Through architectural innovations like sparse experts, retrieval integration, and smarter training objectives, it has set a new standard for what open models can achieve.

As the AI landscape evolves, expect DeepSeek (and its derivatives) to play a major role in shaping the next wave of intelligent applications.

Getting Started

Developers can access the DeepSeek R1 and DeepSeek V3 APIs through CometAPI. To begin, explore the models’ capabilities in the Playground and consult the API guide for detailed instructions. Note that some developers may need to verify their organization before using the models.