Kling 2.0 represents a major leap in generative video technology, heralding a new era in which text and image prompts can be transformed into cinematic-quality motion pictures with unprecedented realism and flexibility. Drawing on the latest breakthroughs in neural architectures, multimodal processing, and user customization, Kling 2.0 redefines what’s possible in AI-driven video creation. Below, we explore the platform’s core innovations, performance enhancements, feature set, competitive positioning, and real-world use cases.

What is Kling 2.0?

Kling 2.0 is the latest generation of an AI-powered video creation platform developed by the Chinese tech firm Kuaishou. Unlike a simple incremental update, it represents a ground-up redesign of the video-generation engine, integrating cutting-edge neural mechanisms to deliver films that look and feel as if they were shot by a professional crew.

Background and Evolution

- Origins in Kuaishou’s AI Lab

Kling first debuted as an in-app tool for short-form video enhancement in 2023. Over successive versions, the focus shifted from basic style transfer and background substitution to fully synthesized video scenes.

- From 1.6 to 2.0

Version 1.6 introduced rudimentary dynamic motion and template-based styling. By contrast, Kling 2.0 features a comprehensive re-architecting of the core engine, yielding dramatic improvements in fidelity, coherence, and narrative control.

Core Technological Breakthroughs

- 3D Spatiotemporal Joint Attention

A proprietary mechanism that jointly attends to spatial and temporal cues across frames, enabling the system to maintain consistency in lighting, perspective, and object trajectories over time.

- Multimodal Fusion Engine

Beyond text → video, Kling 2.0 natively consumes images, sketches, and even rough video clips as auxiliary prompts, blending them seamlessly into the generated output. This fusion allows for rich, customized scenes that adhere tightly to user intent.
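Kling's attention mechanism is proprietary and its details are not published here, so the following is only a toy sketch of the general idea of joint spatiotemporal attention: every patch token attends to every other token across all frames and positions in a single softmax, rather than attending over space and time in separate passes. The function names, the nested-list video layout, the single attention head, and the identity query/key/value projections are all assumptions made for illustration.

```python
import math


def flatten_video(video):
    """Flatten a [T][H][W][D] nested list into (t, y, x) positions and D-dim tokens."""
    positions, tokens = [], []
    for t, frame in enumerate(video):
        for y, row in enumerate(frame):
            for x, feat in enumerate(row):
                positions.append((t, y, x))
                tokens.append(list(feat))
    return positions, tokens


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def joint_attention(tokens):
    """Single-head self-attention over ALL space-time tokens at once.

    Assumption: queries, keys, and values are the tokens themselves
    (identity projections), which keeps the sketch short.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs, weights = [], []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        row = softmax(scores)
        weights.append(row)
        outputs.append(
            [sum(row[j] * tokens[j][c] for j in range(len(tokens))) for c in range(dim)]
        )
    return outputs, weights


# Toy clip: 2 frames, 1x2 patches per frame, 2 features per patch.
video = [
    [[[1.0, 0.0], [0.0, 1.0]]],
    [[[1.0, 0.0], [0.0, 1.0]]],
]
positions, tokens = flatten_video(video)
outputs, weights = joint_attention(tokens)
```

In this toy clip, the first patch of frame 0 gives as much weight to the matching patch in frame 1 as to itself, which is the kind of cross-frame coupling that would help keep an object's appearance consistent over time. A production model would add learned projections, multiple heads, and positional encodings.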

How does Kling 2.0 improve video generation?

Kling 2.0 elevates every aspect of AI-driven filmmaking, addressing longstanding challenges such as jittery motion, “plastic” textures, and slow response times.

Dramatic Enhancement in Realism

- Natural Motion Dynamics

Through refined temporal modeling, character movements now flow smoothly, with no abrupt jumps or mechanical stutters, even in complex interactions like object handling and multi-person choreography.

- Cinematic Light and Camera Effects

The updated rendering pipeline replicates lens focus shifts, depth-of-field bokeh, and dynamic lighting transitions, imparting a true cinematic feel to every scene.

Millisecond-Scale Responsiveness

- From Days to Minutes

Traditional on-set shoots for dynamic scenarios (e.g., a silhouetted figure sprinting across a rain-soaked rooftop) can take upwards of 24 hours to film and edit. Kling 2.0 delivers comparable sequences in under five minutes, with frame-accurate fidelity and directional camera moves specified in a single text prompt.

- Interactive Preview and Refinement

Users receive near-instant previews, enabling rapid iteration on storyboards and shot composition without waiting for full renders.

Advanced Prompt Adherence

- High Fidelity to User Instructions

Compared to prior versions, Kling 2.0 exhibits tighter adherence to even nuanced prompts, such as “low-angle close-up of a dancer leaping through neon rain”, ensuring the final video aligns closely with the creative vision.

- Template and Style Control

With over 60 built-in style templates (film noir, anime, documentary, etc.), creators can switch genres seamlessly, enforcing consistent color grading, motion pacing, and grain structure.

What are the key features of Kling 2.0?

The 2.0 release introduces a suite of powerful tools that extend beyond mere video generation.

KLING 2.0 Master for Video Generation

- Reconstructed Neural Backbone

Core architecture rebuilt for enhanced spatiotemporal coherence, delivering sharper textures and more nuanced character expressions.

- Dynamic Scene Complexity

Generates both simple and complex multi-actor sequences—like urban chase scenes or wildlife documentaries—without sacrificing frame quality.

KOLORS 2.0 for Image Creation

- Standalone Image Module

Alongside video, KOLORS 2.0 offers advanced image synthesis capabilities, enabling users to extract stills from generated footage or create standalone illustrations with matched style.

Swap-Role Custom Avatar Training

- User-Defined Virtual IPs

By uploading as few as 10 short video clips, users can train bespoke “virtual IPs” or avatars that mimic a specific actor’s movements and facial expressions, facilitating branded content or personalized storytelling.

- Cross-Model Compatibility

These avatars transfer seamlessly between the video and image modules, allowing consistency across media types.

Multimodal Narrative Composer

- Integrated Scene Remixing

Mix text prompts, audio cues, and reference images to craft multi-scene narratives. Kling 2.0 intelligently stitches these inputs into coherent story arcs with logical transitions and pacing.

- Audio-Visual Synchronization

Basic soundtracks and voiceover synchronization are built in, with algorithms that adjust scene cuts to musical beats or speech rhythms.
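How Kling aligns cuts with audio is not documented in this article. As a rough illustration of what "adjusting scene cuts to musical beats" can mean, the sketch below moves each planned cut to the nearest beat of a fixed-tempo track, but only if the shift is small. The function names, the constant-BPM assumption, and the 0.25-second tolerance are hypothetical choices, not Kling's algorithm.

```python
from bisect import bisect_left


def beat_times(bpm, duration_s, offset_s=0.0):
    """Return beat timestamps (seconds) for a constant-tempo track."""
    interval = 60.0 / bpm
    times = []
    k = 0
    while offset_s + k * interval <= duration_s:
        times.append(offset_s + k * interval)
        k += 1
    return times


def snap_cuts_to_beats(cuts, beats, max_shift_s=0.25):
    """Move each cut to its nearest beat when the shift is within max_shift_s.

    `beats` must be sorted ascending. Cuts that are too far from any beat
    are left where they are so the edit is never distorted by a large jump.
    """
    if not beats:
        return list(cuts)
    snapped = []
    for cut in cuts:
        i = bisect_left(beats, cut)
        # Only the beats immediately before and after the cut can be nearest.
        candidates = beats[max(i - 1, 0): i + 1]
        nearest = min(candidates, key=lambda b: abs(b - cut))
        snapped.append(nearest if abs(nearest - cut) <= max_shift_s else cut)
    return snapped


beats = beat_times(bpm=120, duration_s=4.0)
aligned = snap_cuts_to_beats([1.1, 2.3, 3.7], beats)
# aligned == [1.0, 2.5, 3.5]
```

Real music rarely holds a perfectly constant tempo, so a practical system would take beat timestamps from an audio beat tracker instead of computing them from a single BPM value.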

How does Kling 2.0 compare to competing platforms?

Facing rivals such as Google’s Veo 3 and Anthropic’s AI video experiments, Kling 2.0 holds its ground through a balanced emphasis on realism, flexibility, and speed.

Kling 2.0 vs. Google Veo 3

- Fidelity and Coherence

Decrypt’s head-to-head tests found Kling 2.1 (the immediate successor) matching or exceeding Veo 3’s output quality, with smoother motion and richer textures.

- Template Diversity

Kling’s library of over 60 style templates surpasses Veo’s focused but narrower set, granting creators more genre options without extensive prompt engineering.

Kling 2.0 vs. Other AI Video Tools

- Anthropic Claude Research Integration

While Anthropic has integrated video into its Claude workspace, Kling’s specialized engine delivers faster renders and higher visual fidelity for purely cinematic outputs.

- Open-Source Models

Community-driven tools like StableVideo and RunwayML offer broad accessibility but at the cost of slower performance and less polished visuals, positioning Kling 2.0 as the go-to for professional-grade content.

How can users access and utilize Kling 2.0?

Free Tier and Trial Options

Pollo AI hosts a free trial that grants new users access to Kling 2.0’s core features, albeit with limitations on video length (up to 10 seconds) and daily credit allowances. This allows creatives to explore the model’s capabilities without upfront cost.

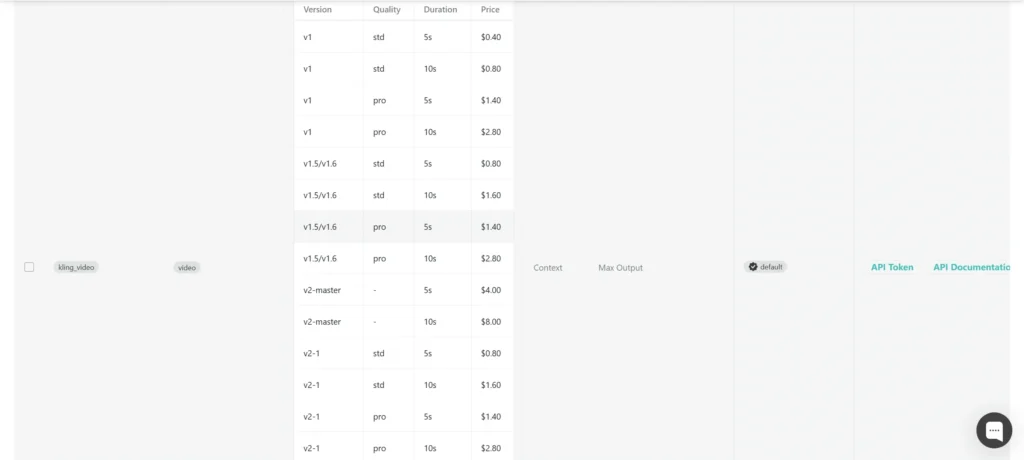

Subscription Plans and Pricing

For heavier use, Kling 2.0 offers tiered subscription plans ranging from $10 to $92 per month. Lower tiers include HD exports and watermark removal, while top-tier plans provide extended clip lengths, priority rendering queues, and API access for integration into custom pipelines.

What Does the Future Hold for Kling AI?

With version 2.0 solidly in the market, Kling AI is already testing its next frontier: real-time collaborative generation and higher-resolution outputs.

Roadmap to Kling 2.1 and Beyond

Beta access to Kling 2.1 debuted on May 29, 2025, introducing tiered quality modes (Standard at 720p, High Quality at 1080p, and Master at 1080p with advanced effects) at differentiated price points. Early benchmarks indicate faster render times and improved detail retention, especially in texture-rich scenes.

Real-Time Collaboration and Cloud Editing

Kling AI is piloting a cloud-based editing environment where multiple users can co-create in real time, annotate frames and vote on style presets. This initiative aims to replicate the dynamic of a live studio session, further blurring the line between human creativity and AI assistance.

Conclusion

Kling AI 2.0 stands at the forefront of AI-driven video creation, marrying advanced neural architectures with a flexible, multimodal design framework. By delivering cinematic realism, lightning-fast responsiveness, and comprehensive customization tools, it transforms the landscape of content production. As AI platforms continue to evolve, Kling 2.0’s blend of technical innovation and user-centric features positions it as a defining milestone—and a glimpse of the future of digital storytelling.

Getting Started

CometAPI provides a unified REST interface that aggregates hundreds of AI models, including the ChatGPT family, under a consistent endpoint, with built-in API-key management, usage quotas, and billing dashboards, so you do not have to juggle multiple vendor URLs and credentials.

Developers can access the Kling 2.0 Master API through CometAPI; the models listed are the latest as of this article’s publication date. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before sending requests, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a price far lower than the official price to help you integrate.
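This article does not give the actual endpoint path, model identifier, or request fields, so the sketch below only shows the general shape of an authenticated JSON request over a REST interface. The URL, the model ID, the payload field names, the `COMETAPI_KEY` environment variable, and the Bearer-token header are all placeholders or assumptions; take the real values from the CometAPI API guide.

```python
import json
import os
import urllib.request

# PLACEHOLDERS (assumptions): replace with values from the CometAPI API guide.
API_URL = "https://example.invalid/v1/video/generations"
MODEL_ID = "kling-2.0-master-placeholder"


def build_request(prompt, api_key, url=API_URL, model=MODEL_ID):
    """Build (but do not send) a JSON POST request for a text-to-video job.

    The payload keys "model" and "prompt" are hypothetical field names.
    """
    payload = {"model": model, "prompt": prompt}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Bearer authentication is assumed, not confirmed by the article.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Read the key from the environment so it never lands in source control.
api_key = os.environ.get("COMETAPI_KEY", "sk-placeholder")
req = build_request("low-angle close-up of a dancer leaping through neon rain", api_key)

# Sending is intentionally left out because the URL above is a placeholder:
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)
```

Video generation is typically asynchronous, so a real integration would likely submit the job and then poll for the finished clip; check the API guide for the exact flow.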