Anthropic this week unveiled Claude Haiku 4.5, a latency-optimized “small” member of its Claude 4 family that the company says delivers near-frontier reasoning and coding performance while running dramatically faster and cheaper than its mid- and top-tier siblings. According to Anthropic, Haiku 4.5 matches much of the practical developer performance of the company’s Sonnet model family — particularly in real-world software engineering tasks — while costing roughly one-third as much per token and producing outputs at more than twice the speed of Sonnet 4. The company positions the release as an answer for teams that want frontier-class outcomes for high-volume, low-latency use cases such as chat assistants, pair programming, and real-time agents.

What is Claude Haiku 4.5 and what are its core features?

What is Haiku 4.5?

Claude Haiku 4.5 is the latest “small”-class release in Anthropic’s Claude 4.5 generation. It is engineered for much lower latency and cost while preserving near-frontier capabilities for coding, computer use, and agent tasks. Anthropic positions Haiku 4.5 as a drop-in option where you need fast, high-throughput responses and reasonable reasoning power, e.g., user-facing chat, inline coding assistants, and sub-agents in multi-agent systems.

What are the notable capabilities and limits?

- Low latency, high throughput: Haiku 4.5 is designed to be significantly faster than larger Sonnet/Opus variants, making it suitable for interactive apps and high-volume workloads.

- Near-frontier coding & “computer use”: On many coding and tool-use tasks it matches or comes close to Sonnet’s performance while running at a fraction of the cost.

- Large context window: Haiku 4.5 supports Anthropic’s standard long context (commonly 200k tokens for Claude 4.5 class models).

- Multimodal/tool support: Haiku 4.5 participates in Claude’s tool, code execution, and agent frameworks (e.g., Agent Skills, Claude Code). That makes it practical to embed Haiku agents that can call tools, run sandboxed code, read files, or use web fetch features when the Agent SDK is enabled.

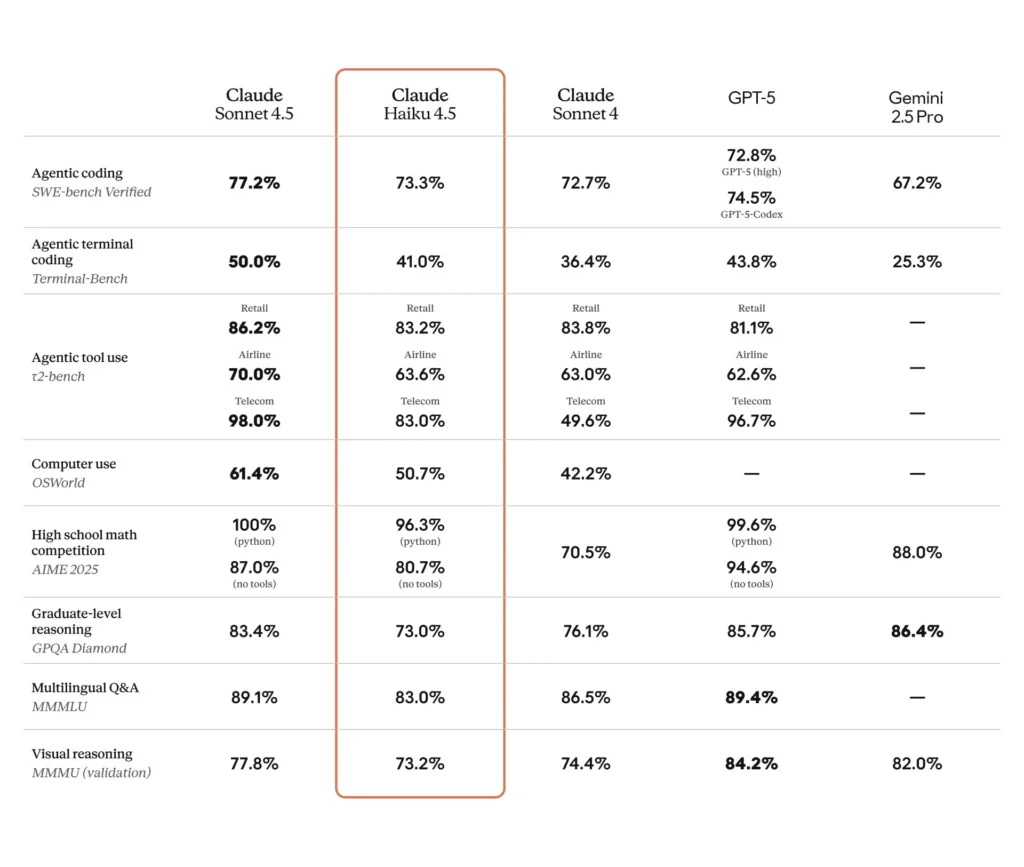

Benchmarks — how Haiku 4.5 stacks up

Anthropic published benchmark results intended to demonstrate Haiku 4.5’s competitiveness on coding and tool-use evaluations. Two headline results:

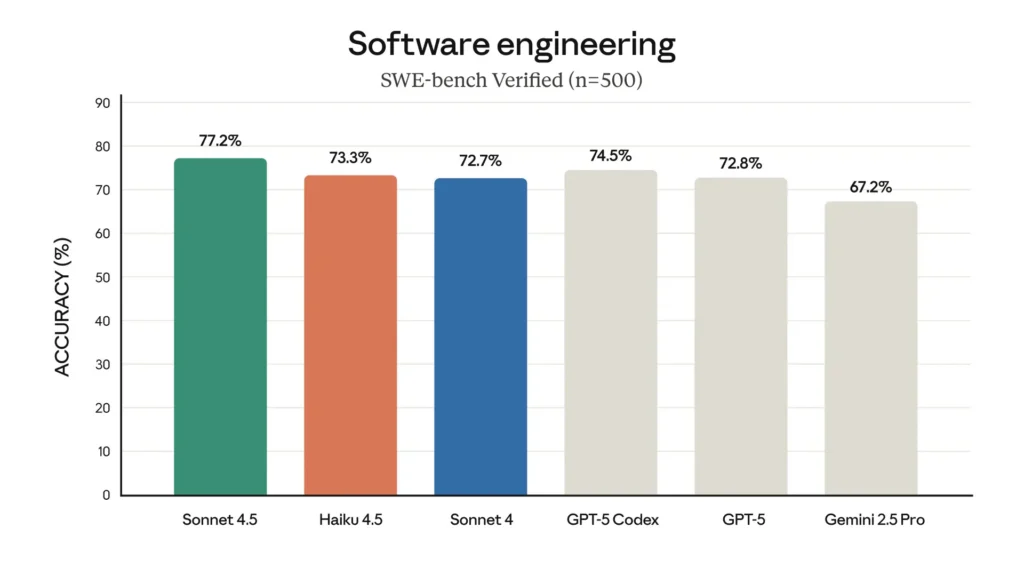

- SWE-bench Verified: Haiku 4.5 scores ~73.3% on SWE-bench Verified, a human-filtered coding benchmark that measures the ability to solve real software engineering problems. Anthropic places that result in the same performance band as Sonnet 4 and close to other leading coding models on that test. Independent tech outlets and analysts reported the same figure in their coverage.

- Terminal/command-line tasks: On a terminal/command-line-centric benchmark (Terminal-Bench), Anthropic’s tests show Haiku 4.5 trailing the frontier Sonnet 4.5 on some command-line tasks.

Comparative reporting shows Haiku 4.5 frequently matching or coming close to Sonnet 4, while trailing the highest-end frontier models (Sonnet 4.5, Opus) on the hardest measures and substantially outpacing Haiku 3.5 and earlier small models. That places Haiku 4.5 in the “sweet spot” for workloads where speed and cost matter more than the last few percentage points of accuracy on the hardest benchmarks.

In practice, Haiku’s high SWE-bench score suggests:

- For common coding assistant jobs (autocompletion, scaffolding, code reviews), Haiku 4.5 often delivers code that’s syntactically correct and functionally useful.

- For very complex algorithmic reasoning or deep architectural design, Sonnet/Opus (larger models) may still produce superior end-to-end reasoning, but at higher cost and latency.

How to use the Claude Haiku 4.5 API

**Anthropic Claude API / Claude.ai:** The model is available directly through the Claude developer API (model name claude-haiku-4-5) and via Anthropic’s hosted Claude apps, including Claude Code and the consumer chat surfaces where the company chooses to expose it. Anthropic states that developers can use Haiku 4.5 as a drop-in replacement for prior Haiku models or as a complementary tier alongside Sonnet for mixed-model pipelines.

**Third-party platforms:** CometAPI provides access to the Claude Haiku 4.5 API at a 20% discount from the official price. To use it through CometAPI, you only need to switch the URL; other parameters are consistent with the official API. Developers can call the model using the model name claude-haiku-4-5-20251001 (CometAPI’s model list explicitly includes that name), and a separate version, cometapi-haiku-4-5-20251001, is specially prepared for Cursor users.
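Because the documented difference between the two backends is the endpoint URL (plus the dated model name on CometAPI), a single helper can target either one. The sketch below is a minimal illustration under assumptions: `build_request` and the `PROVIDERS` table are hypothetical names, the official endpoint is Anthropic’s standard `https://api.anthropic.com/v1/messages`, and the CometAPI URL is the one used in the quick-start.

```python
from typing import Any

# Hypothetical provider table: endpoint URL and model name per backend.
# Assumption: both backends accept the same Messages API headers and body.
PROVIDERS: dict[str, dict[str, str]] = {
    "anthropic": {
        "url": "https://api.anthropic.com/v1/messages",
        "model": "claude-haiku-4-5",
    },
    "cometapi": {
        "url": "https://api.cometapi.com/v1/messages",
        "model": "claude-haiku-4-5-20251001",
    },
}


def build_request(
    provider: str, api_key: str, prompt: str, max_tokens: int = 800
) -> tuple[str, dict[str, str], dict[str, Any]]:
    """Return (url, headers, payload) for a single-turn Messages API call."""
    cfg = PROVIDERS[provider]
    headers = {
        "Content-Type": "application/json",
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
    }
    payload = {
        "model": cfg["model"],
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return cfg["url"], headers, payload
```

Switching backends then becomes a one-argument change, and the returned triple can be passed straight to `requests.post(url, headers=headers, json=payload)`.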

How do I call the Claude Haiku 4.5 API (quick start and best practices)?

Below is a compact, practical API quick-start using CometAPI’s documented developer API patterns. Use it as a copy-paste starting point, then tune parameters (temperature, max_tokens, tools) and apply Anthropic’s best practices (prompt caching, streaming, and tool use). Before you start, make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a 20% discount on the official price to help you integrate.

Quick cURL example

This is the minimal cURL pattern (based on Anthropic’s Messages API) adapted for Haiku 4.5:

```bash
# Your CometAPI key (the variable name is only a convention)
export ANTHROPIC_API_KEY="sk-xxxx"

curl https://api.cometapi.com/v1/messages \
  -H "Content-Type: application/json" \
  -H "x-api-key: $ANTHROPIC_API_KEY" \
  -H "anthropic-version: 2023-06-01" \
  -d '{
    "model": "claude-haiku-4-5-20251001",
    "max_tokens": 800,
    "messages": [
      {"role": "user", "content": "Write a short Python function to convert a CSV into JSON and explain the steps."}
    ]
  }'
```

This will return a JSON message object with an assistant response and usage counts (input/output tokens). Use max_tokens to bound output length and monitor usage in the response.
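The Messages API response carries the generated text in a `content` list of typed blocks and the token counts in a `usage` object. Here is a minimal sketch for pulling both out of the parsed JSON, assuming CometAPI returns the same response shape as Anthropic’s API; `summarize_response` is a hypothetical helper name and the sample body is illustrative, not real output.

```python
from typing import Any


def summarize_response(resp: dict[str, Any]) -> tuple[str, int, int]:
    """Concatenate the text blocks and return (text, input_tokens, output_tokens)."""
    # Only "text" blocks carry prose; tool_use and other block types are skipped here.
    text = "".join(
        block.get("text", "")
        for block in resp.get("content", [])
        if block.get("type") == "text"
    )
    usage = resp.get("usage", {})
    return text, usage.get("input_tokens", 0), usage.get("output_tokens", 0)


# Trimmed-down response body (values are illustrative)
sample = {
    "type": "message",
    "role": "assistant",
    "content": [{"type": "text", "text": "def csv_to_json(path): ..."}],
    "stop_reason": "end_turn",
    "usage": {"input_tokens": 25, "output_tokens": 180},
}
print(summarize_response(sample))
```

It is also worth checking `stop_reason`: a value of `"max_tokens"` means the reply was cut off by your max_tokens limit rather than finishing naturally.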

Example using Python (requests)

If you prefer Python without a specific client library, the simplest pattern is:

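```python
import os
import json

import requests

# CometAPI key, read from the environment
API_KEY = os.environ.get("CometAPI_API_KEY")

url = "https://api.cometapi.com/v1/messages"

headers = {
    "Content-Type": "application/json",
    "x-api-key": API_KEY,
    "anthropic-version": "2023-06-01",
}

payload = {
    "model": "claude-haiku-4-5-20251001",
    "max_tokens": 1200,
    "messages": [
        # Append the actual log lines to this prompt; the model cannot read your files.
        {"role": "user", "content": "Summarize the last 50 lines of this log file and highlight errors."}
    ],
}

resp = requests.post(url, headers=headers, json=payload, timeout=60)
resp.raise_for_status()  # raise on HTTP errors (401, 429, ...) instead of printing an error body
print(json.dumps(resp.json(), indent=2))
```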
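The quick-start intro lists streaming among the best practices. With `"stream": true` in the payload, the Messages API responds with server-sent events, and text arrives in `content_block_delta` events whose `delta` has type `text_delta`. Below is a minimal parser sketch, assuming CometAPI relays Anthropic’s event format unchanged; `iter_text_deltas` is a hypothetical helper and the event list is an abbreviated illustration.

```python
import json
from typing import Iterable, Iterator


def iter_text_deltas(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from Anthropic-style server-sent event lines."""
    for line in lines:
        # Payloads arrive on "data: {...}" lines; "event:" and blank lines are skipped.
        if not line or not line.startswith("data:"):
            continue
        event = json.loads(line[len("data:"):].strip())
        if event.get("type") == "content_block_delta":
            delta = event.get("delta", {})
            if delta.get("type") == "text_delta":
                yield delta.get("text", "")


# Abbreviated event stream (real streams carry more event types, e.g. ping)
demo = [
    "event: message_start",
    'data: {"type": "message_start", "message": {"id": "msg_x"}}',
    "",
    "event: content_block_delta",
    'data: {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": "Hello"}}',
    'data: {"type": "content_block_delta", "index": 0, "delta": {"type": "text_delta", "text": ", world"}}',
    'data: {"type": "message_stop"}',
]
print("".join(iter_text_deltas(demo)))
```

In a live call you would add `"stream": True` to the payload, pass `stream=True` to `requests.post`, and feed `resp.iter_lines(decode_unicode=True)` into the parser, printing each fragment as it arrives to reduce perceived latency.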

Prompting & parameter tips

- Use low temperature (≤0.2) for deterministic technical tasks; raise for creative output.

- Maximize prompt caching: If you repeatedly send the same system prompt or shared context, use Anthropic’s prompt caching and message batching mechanics to reduce cost. Anthropic documents prompt caching with TTLs and pricing benefits.

- Tools & Computer Use: For environments where the model must run code, call system tools (Code Execution Tool, Computer Use Tool) through the API rather than embedding heavy state in the prompt. This reduces tokens and improves safety.

- Prompt engineering: Use clear system instructions, role framing, and examples (few-shot) to get concise, reliable outputs. When you rely on many previous turns, be explicit about the desired format (JSON, code fences, step lists) and prefill the assistant’s expected structure.
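Several of these tips map directly onto Messages API fields: `system` for instructions, `temperature` for determinism, a `cache_control` marker on a reused prefix for prompt caching, and a trailing assistant message to prefill the output structure. A minimal sketch, assuming the endpoint honours all of these fields (caching support on third-party gateways may differ); `make_payload` and `SYSTEM_PROMPT` are hypothetical names.

```python
from typing import Any

# Hypothetical shared instructions reused across many requests (the part worth caching).
SYSTEM_PROMPT = (
    "You are a code-review assistant. Reply only with a JSON object "
    'of the form {"issues": [...], "summary": "..."}.'
)


def make_payload(user_text: str, temperature: float = 0.2) -> dict[str, Any]:
    """Build a Messages API body with a cacheable system prompt and a JSON prefill."""
    return {
        "model": "claude-haiku-4-5-20251001",
        "max_tokens": 1024,
        "temperature": temperature,  # low value for deterministic technical output
        # System prompt as a content block so it can carry a cache_control marker.
        "system": [
            {
                "type": "text",
                "text": SYSTEM_PROMPT,
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": [
            {"role": "user", "content": user_text},
            # Prefill: the model continues from "{", steering it toward a JSON object.
            {"role": "assistant", "content": "{"},
        ],
    }
```

Because the reply continues after the prefilled `{`, prepend that character before calling `json.loads` on the returned text. Caching only takes effect above a model-specific minimum prompt length, so a system prompt this short is illustrative only.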

How much does the Claude Haiku 4.5 API cost?

One of Claude Haiku 4.5’s headline claims is cost: Anthropic lists $1 per million input tokens and $5 per million output tokens for Claude Haiku 4.5 in its announcement — roughly one-third the per-token input/output price of Sonnet 4 (Sonnet’s listed cost is about $3 input / $15 output per million tokens). The company frames that delta as enabling “the kinds of high-volume use cases that were previously cost-prohibitive with mid- and top-tier models.”

CometAPI provides access to the Claude Haiku 4.5 API at a 20% discount from the official price:

| Token type | CometAPI price (USD per 1M tokens) |
|---|---|
| Input tokens | $0.80 |
| Output tokens | $4.00 |
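To see how these rates turn into a bill: the cost of one call is input tokens times the input rate plus output tokens times the output rate, with rates quoted per million tokens. The sketch below uses the prices quoted in this article ($1/$5 official, $0.80/$4.00 on CometAPI, $3/$15 for Sonnet 4 as a reference); the workload numbers are hypothetical.

```python
# Per-million-token prices (USD) as quoted in this article: (input, output)
PRICES = {
    "haiku-4.5 (Anthropic)": (1.00, 5.00),
    "haiku-4.5 (CometAPI)": (0.80, 4.00),
    "sonnet-4 (Anthropic)": (3.00, 15.00),
}


def call_cost(input_tokens: int, output_tokens: int, price: tuple[float, float]) -> float:
    """Cost in USD of one request: tokens / 1e6 * per-million rate, for input and output."""
    in_rate, out_rate = price
    return input_tokens / 1_000_000 * in_rate + output_tokens / 1_000_000 * out_rate


# Hypothetical workload: 1M requests per month, each 2,000 input and 300 output tokens
requests_per_month = 1_000_000
for name, price in PRICES.items():
    monthly = call_cost(2_000, 300, price) * requests_per_month
    print(f"{name:24s} ${monthly:,.2f}")
```

In this hypothetical workload, output is only about 13% of the tokens but about 43% of the Haiku bill ($0.0015 of $0.0035 per call), which is why capping max_tokens matters.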

Pricing mechanics & optimizations

- Prefer Haiku for user-facing, high QPS endpoints (chatbots, autocomplete), where per-request response size is modest and latency matters. The lower per-token cost compounds when you have millions of requests.

- Use prompt caching where you serve repeated prompts (or prompts that share an identical prefix) to reduce cost. Anthropic offers prompt caching and other cost controls; combine them with request batching and shorter max_tokens limits to control spend.

- Monitor input vs output token proportions: Haiku’s pricing bills input and output separately, and input tokens ($1 per million) are five times cheaper than output tokens ($5 per million). Large context uploads therefore cost less than equally large outputs, so decide deliberately when to send context and when to ask for concise outputs.

- Architecture: micro-calls vs macro-calls: a common pattern is “planner (Sonnet/Opus) → many executors (Haiku) → verifier (Sonnet/Opus)”. This lets you do high-value reasoning on larger models and cheap execution on Haiku. That pattern increases cost-efficiency at scale.
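The planner → executors → verifier pattern can be sketched as a small fan-out function. This is a minimal illustration, not a production orchestrator: `run_pipeline`, the `send(model, prompt) -> str` transport, and the model aliases are hypothetical, and in practice `send` would wrap one of the HTTP calls from the quick-start.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical transport: (model_name, prompt) -> reply text. Swap in a real API call.
Send = Callable[[str, str], str]

PLANNER = "claude-sonnet-4-5"  # assumption: larger model for planning and verification
WORKER = "claude-haiku-4-5"    # cheap, fast executor


def run_pipeline(task: str, send: Send, max_workers: int = 8) -> str:
    """Plan with a large model, run subtasks in parallel on Haiku, verify with the large model."""
    # 1. Planner: ask for one subtask per line.
    plan = send(PLANNER, f"Split this task into independent subtasks, one per line:\n{task}")
    subtasks = [line.strip() for line in plan.splitlines() if line.strip()]

    # 2. Executors: fan subtasks out to Haiku concurrently (I/O-bound, so threads suffice).
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda sub: send(WORKER, sub), subtasks))

    # 3. Verifier: the larger model reviews and merges the worker outputs.
    joined = "\n\n".join(f"[{s}]\n{r}" for s, r in zip(subtasks, results))
    return send(PLANNER, f"Check and merge these results for the task '{task}':\n{joined}")


# Offline demo with a fake transport that reports which model handled each prompt
def fake_send(model: str, prompt: str) -> str:
    if prompt.startswith("Split"):
        return "write tests\nwrite docs"
    return f"{model}: done"


print(run_pipeline("ship feature X", fake_send))
```

Injecting `send` keeps the orchestration logic testable offline and lets you swap providers or add retries without touching the pipeline.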

Note that CometAPI may not provide the batch API or prompt-caching features.

When should I choose Haiku 4.5 instead of Sonnet/Opus or other vendors?

Use Haiku 4.5 when your application needs a tightly balanced mix of cost, speed and respectable reasoning/coding ability — especially where you will call the model many times (high QPS), want low latency, or plan to run Haiku as the worker in a multi-agent system. Real examples: IDE assistants, CI test generators, bulk content transforms, ticket classification at high throughput, and agentic execution for micro-tasks. Anthropic explicitly markets Haiku for these production, cost-sensitive patterns.

Choose Haiku 4.5 if:

- You expect many short calls (worker/executor pattern) and per-call latency matters.

- You need low cost per execution and are prepared to offload top-level planning or verification to a stronger model.

- Your workload is tool-centric (programmatic agents invoking code editors, linters, or APIs) and benefits from Haiku’s speed for repeated interactions.

Prefer Sonnet/Opus or other models if:

- Your workload produces enormous outputs per call where Haiku’s per-output token cost would dominate and a different pricing profile wins. Independent comparisons show these tradeoffs matter for very output-heavy tasks.

- Your use case requires long-horizon reasoning, very large context lengths, or the highest possible single-call accuracy (use Sonnet/Opus).

- You need multimodal fusion or specialty vision capabilities that a larger model provides better.
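The checklists above can be encoded as a simple routing rule. The sketch below is a hypothetical heuristic: the `Task` fields, the role names, and the model aliases are illustrative assumptions to tune against your own evaluations, not Anthropic guidance.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical per-request descriptors an application might track."""
    role: str  # "planner", "worker", or "verifier"
    needs_long_horizon_reasoning: bool = False
    needs_max_accuracy: bool = False


# Assumed model aliases; adjust to whatever your provider exposes.
HAIKU = "claude-haiku-4-5"
SONNET = "claude-sonnet-4-5"


def choose_model(task: Task) -> str:
    """Default to Haiku; escalate to a larger model for the cases listed above."""
    if task.needs_long_horizon_reasoning or task.needs_max_accuracy:
        return SONNET
    if task.role in ("planner", "verifier"):
        # Top-level planning and verification go to the stronger model.
        return SONNET
    # High-QPS worker/executor calls stay on the fast, cheap tier.
    return HAIKU
```

For example, `choose_model(Task(role="worker"))` returns `"claude-haiku-4-5"`, while any task flagged for long-horizon reasoning is escalated regardless of role.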

Final thoughts — why Haiku 4.5 matters now

Claude Haiku 4.5 is significant because it reduces the operational and financial barrier to running agentic, parallelized AI at scale. By delivering strong coding and tool-use performance while emphasizing speed and affordability, Haiku enables architectures that are both performant and cost-effective—particularly the multi-agent patterns where many inexpensive workers outperform a single expensive brain in throughput and resilience.

Developers can access the Claude Haiku 4.5 API through CometAPI, where the model version is kept up to date with the official release. To begin, explore the model’s capabilities in the Playground and consult the API guide for detailed instructions. Before accessing, please make sure you have logged in to CometAPI and obtained an API key. CometAPI offers a 20% discount on the official price to help you integrate.


CometAPI is a unified API platform that aggregates over 500 AI models from leading providers—such as OpenAI’s GPT series, Google’s Gemini, Anthropic’s Claude, Midjourney, Suno, and more—into a single, developer-friendly interface. By offering consistent authentication, request formatting, and response handling, CometAPI dramatically simplifies the integration of AI capabilities into your applications. Whether you’re building chatbots, image generators, music composers, or data‐driven analytics pipelines, CometAPI lets you iterate faster, control costs, and remain vendor-agnostic—all while tapping into the latest breakthroughs across the AI ecosystem.